Cert-manager: ca.crt is empty after generating tls secret


Describe the bug:

Hi there,

I created an issuer using Let's Encrypt staging with the Cloudflare ACME DNS01 provider, and later created a certificate for it. When it finished generating the certificate, I got the following errors in the cert-manager deployment logs:

I0926 02:04:50.409208 1 logger.go:43] Calling GetOrder

I0926 02:04:50.562787 1 sync.go:76] cert-manager/controller/orders "level"=0 "msg"="updating Order resource status" "resource_kind"="Order" "resource_name"="test-cert-vlcntest-com-1823914796" "resource_namespace"="expr"

E0926 02:04:50.576234 1 sync.go:79] cert-manager/controller/orders "msg"="failed to update status" "error"="error finalizing order: acme: urn:ietf:params:acme:error:orderNotReady: Order's status (\"valid\") is not acceptable for finalization" "resource_kind"="Order" "resource_name"="test-cert-vlcntest-com-1823914796" "resource_namespace"="expr"

E0926 02:04:50.576266 1 controller.go:131] cert-manager/controller/orders "msg"="re-queuing item due to error processing" "error"="[error finalizing order: acme: urn:ietf:params:acme:error:orderNotReady: Order's status (\"valid\") is not acceptable for finalization, Operation cannot be fulfilled on orders.certmanager.k8s.io \"test-cert-vlcntest-com-1823914796\": the object has been modified; please apply your changes to the latest version and try again]" "key"="expr/test-cert-vlcntest-com-1823914796"

Expected behaviour:

I expect that the ca.crt is present and not empty in the corresponding certificate secret.

Steps to reproduce the bug:

I used the following configuration:

apiVersion: certmanager.k8s.io/v1alpha1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    email: [email protected]
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: mycompany-issuer-account-key
    dns01:
      providers:
      - name: cf-dns
        cloudflare:
          email: [email protected]
          apiKeySecretRef:
            name: cloudflare-api-key
            key: apiKey
---
apiVersion: certmanager.k8s.io/v1alpha1
kind: Certificate
metadata:
  name: test-cert-mycompany-com
  namespace: expr
spec:
  secretName: test-cert-mycompany-com-tls
  renewBefore: 360h # 15d
  commonName: test-cert.mycompany.com
  dnsNames:
  - test-cert.mycompany.com
  issuerRef:
    name: letsencrypt-staging
    kind: ClusterIssuer
  acme:
    config:
    - dns01:
        provider: cf-dns
      domains:
      - test-cert.mycompany.com

Environment details:

Kubernetes:

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.3", GitCommit:"2d3c76f9091b6bec110a5e63777c332469e0cba2", GitTreeState:"clean", BuildDate:"2019-08-19T12:36:28Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.6-eks-5047ed", GitCommit:"5047edce664593832e9b889e447ac75ab104f527", GitTreeState:"clean", BuildDate:"2019-08-21T22:32:40Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

- Cloud-provider/provisioner: AWS EKS

- cert-manager version (e.g. v0.4.0): v0.10

- Install method (e.g. helm or static manifests): via helm with default configuration

/kind bug

All 25 comments

Seems related to this: https://github.com/jetstack/cert-manager/issues/1571

(tls.crt is already the full chain in the case for letsencrypt issuer I believe)

And in one of the threads I found this snippet, which splits it out properly ... not ideal but :/

kubectl patch secret \
  -n <namespace> <secret name> \
  -p="{\"data\":{\"ca.crt\": \"$(kubectl get secret \
  -n <namespace> <secret name> \
  -o=jsonpath="{.data.tls\.crt}" \
  | base64 -d | awk 'f;/-----END CERTIFICATE-----/{f=1}' - | base64 -w 0)\"}}"
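The awk filter in the snippet above can be tried on its own before patching anything. The following is a minimal sketch, assuming tls.crt holds the leaf certificate first and the issuing chain after it, as suggested above for the Let's Encrypt issuer. The function names `leaf_cert` and `issuer_chain` are hypothetical and are not part of kubectl or cert-manager.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting a PEM chain read from stdin.

# Print only the first certificate in the chain (the leaf).
leaf_cert() {
  awk '{print} /-----END CERTIFICATE-----/{exit}'
}

# Print everything after the first certificate (the issuing chain).
# This is the same awk program used in the patch command above.
issuer_chain() {
  awk 'f; /-----END CERTIFICATE-----/{f=1}'
}

# Example usage (needs cluster access, so it is left commented out):
#   kubectl get secret -n <namespace> <secret name> \
#     -o=jsonpath="{.data.tls\.crt}" | base64 -d | issuer_chain
```

If `issuer_chain` prints nothing, tls.crt contains a single certificate and the patch would write an empty ca.crt again.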

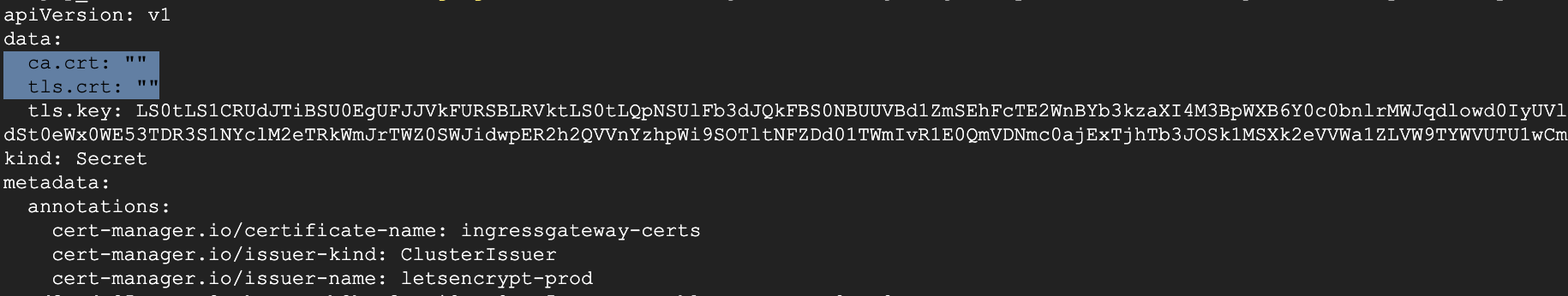

Having same issue:

# kubectl get secret -n myapp my-domain -o yaml
apiVersion: v1
data:
  ca.crt: ""
  tls.crt: ""
  tls.key: LS0tLS1CRUdJTiBSU0E...ZAo4VnJBS1NXL0lxM29qQk1sbjczSTNzeVpXNlBKbXBtVHBJa1hDQnhiTU1mNElreVpJc009Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==
kind: Secret
metadata:
  annotations:
    cert-manager.io/certificate-name: my-domain
    cert-manager.io/issuer-kind: ClusterIssuer
    cert-manager.io/issuer-name: letsencrypt
  creationTimestamp: 2019-12-07T16:19:28Z
  name: my-domain
  namespace: myapp
  resourceVersion: "17355"
type: kubernetes.io/tls

And nginx can't use it:

W1207 16:19:31.891802 7 controller.go:1125] Error getting SSL certificate "ga-monitor/gamonitor2-midtrans-work": local SSL certificate myapp/mydomain was not found. Using default certificate

So my app is served with Kubernetes Ingress Controller Fake Certificate

Same issue:

Name:         wildcard-ost-ai-tls
Namespace:    default
Labels:       <none>
Annotations:  cert-manager.io/certificate-name: wildcard-ost-ai
              cert-manager.io/issuer-kind: ClusterIssuer
              cert-manager.io/issuer-name: letsencrypt-prod

Type:  kubernetes.io/tls

Data
====
ca.crt:   0 bytes
tls.crt:  0 bytes
tls.key:  1675 bytes

Same thing =(

$> kubectl get secret echo-tls -o yaml
apiVersion: v1
data:
  ca.crt: ""
  tls.crt: ""
  tls.key: "LS0tLS1CRUdJTiBSU...S0tLS0tCg=="
kind: Secret
metadata:
  annotations:
    cert-manager.io/certificate-name: echo-tls
    cert-manager.io/issuer-kind: ClusterIssuer
    cert-manager.io/issuer-name: letsencrypt-staging
  creationTimestamp: "2020-01-02T20:46:10Z"
  name: echo-tls
  namespace: default
  resourceVersion: "7206"
  selfLink: /api/v1/namespaces/default/secrets/echo-tls
  uid: 4c55a2f4-3e14-4bc1-8cae-9ff299772b76
type: kubernetes.io/tls

Any updates?

Same issue.

➜ xooa-charts git:(nk-1.4.1) ✗ kubectl get secret -n testorg5 hlf-testorg5-tls -o yaml
apiVersion: v1
data:
  ca.crt: ""
  tls.crt: ""
  tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNS (deleted for security reasons)
kind: Secret
metadata:

Do we have a fix for it yet?

This same set of helm charts was working for me in my old cluster. On my new cluster, which has a newer cert-manager, I am getting this issue.

@ashishxooa I'm having a similar issue. From which version did you do the upgrade, or what is the last version which works?

For anyone who needs a temporary/immediate solution to this issue: since the discussion in https://github.com/jetstack/cert-manager/issues/1571 seems to hint that the empty ca.crt will not be fixed in the near future, I wrote a small operator which fills in ca.crt in TLS secrets by evaluating the cert chain, as mentioned by @aranair.

@dkarnutsch I didn't upgrade. There were two different clusters. One was built about 6 months ago and ran the latest cert-manager available at that time (I always use the latest stable helm chart). It didn't have any issue, but unfortunately I have nuked that cluster. I created a new cluster 10 days ago with the current latest helm chart, and this one has the issue.

Hi,

I had to add both a ClusterIssuer and an Issuer, so I added:

apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # You must replace this email address with your own.
    # Let's Encrypt will use this to contact you about expiring
    # certificates, and issues related to your account.
    email: letsencrypt-prod-sejn@xxx
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      # Secret resource used to store the account's private key.
      name: letsencrypt-prod
    # Add a single challenge solver, HTTP01 using nginx
    solvers:
    - http01:
        ingress:
          class: nginx

and

apiVersion: cert-manager.io/v1alpha2
kind: Issuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # You must replace this email address with your own.
    # Let's Encrypt will use this to contact you about expiring
    # certificates, and issues related to your account.
    email: letsencrypt-prod-sejn@xxx
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      # Secret resource used to store the account's private key.
      name: letsencrypt-prod
    # Add a single challenge solver, HTTP01 using nginx
    solvers:
    - http01:
        ingress:
          class: nginx

Then check the secret generation 👍

kubectl describe CertificateRequest -A

In the Events section at the end of the output you can see any errors.

I ran into this issue as well. In my case, I changed DNS records AFTER publishing the ingress. I deleted the namespace and re-applied after DNS was changed and it all worked the second time around.

This bug, where the secret is first created with only tls.key and later updated with tls.crt, affects the behaviour of pods.

Without this bug, the secret is created only once both tls.crt and tls.key are available.

That way you can mount the secret in the pod, and the pod's startup waits for the certificate secret to become available. A scheduled pod can therefore always be sure that the certificate is really available.

With this bug, the pod is scheduled but the certificate may not be available yet. You also need to add waits for the availability of the crt, which may or may not ever arrive.
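The extra wait described above could look roughly like the sketch below. It assumes `kubectl` is on the PATH and has read access to the secret; the function name `wait_for_tls_crt` and its default timeout and interval are hypothetical choices, not something cert-manager provides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until the named secret has a non-empty tls.crt.
# Usage: wait_for_tls_crt <namespace> <secret> [timeout_seconds] [interval_seconds]
# The interval must be a positive number of seconds.
# Returns 0 once tls.crt is populated, 1 if the timeout is reached first.
wait_for_tls_crt() {
  local ns="$1" name="$2" timeout="${3:-300}" interval="${4:-5}"
  local waited=0 crt
  while [ "$waited" -lt "$timeout" ]; do
    # An empty result means the secret is missing or tls.crt is still "".
    crt="$(kubectl get secret -n "$ns" "$name" \
      -o=jsonpath='{.data.tls\.crt}' 2>/dev/null)"
    if [ -n "$crt" ]; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 1
}

# Example usage (commented out because it needs a cluster):
#   wait_for_tls_crt myapp my-domain 600 10 || echo "certificate never arrived"
```

A timeout matters here because, as noted above, the certificate may never arrive if issuance itself is failing.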

I ran into this issue as well. In my case, I changed DNS records AFTER publishing the ingress. I deleted the namespace and re-applied after DNS was changed and it all worked the second time around.

@jpiepkow which namespace did you delete? Can you please mention the steps again?

@harshmaur After changing the DNS settings at my provider (in my case DigitalOcean) to route traffic from the domain to the cluster, I deleted the namespace that had the ingress file and re-applied everything.

I realised that it does not matter to cert-manager whether ca.crt is empty or not. I found that the real problem was that my ACME solver wasn't configured properly.

@fsniper

This bug, where the secret is first created with only tls.key and later updated with tls.crt, affects the behaviour of pods.

We've just introduced a new certificate controller implementation that no longer behaves like this, and will instead atomically issue the certificate and private key once both are ready.

You can test this out by using the recent v0.15.0-alpha.1 release and enabling the ExperimentalCertificateControllers feature gate with --feature-gates=ExperimentalCertificateControllers=true as a flag passed to the controller.

@javiertoledos when using the ACME issuer type, we are unable to retrieve the certificate of the CA that signed your certificate, hence why this field is empty. As this is working as intended, I think this issue can be closed :) feel free to re-open if you think there's anything else actionable here however.
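Two different symptoms are mixed in this thread: an empty tls.crt (the certificate has not been issued) and an empty ca.crt with a populated tls.crt (described above as intended for ACME issuers). A small sketch for telling them apart follows. The function name `secret_state` and the three state labels are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify a TLS secret from the raw (base64) values
# of its tls.crt and ca.crt fields.
# Usage: secret_state <tls_crt_value> <ca_crt_value>
secret_state() {
  local tls_crt="$1" ca_crt="$2"
  if [ -z "$tls_crt" ]; then
    echo "pending"         # no certificate yet: check Order/CertificateRequest events
  elif [ -z "$ca_crt" ]; then
    echo "issued-no-ca"    # certificate issued, CA not stored (the ACME case above)
  else
    echo "issued-with-ca"  # both the certificate and the CA are present
  fi
}

# Example usage (commented out because it needs a cluster):
#   secret_state \
#     "$(kubectl get secret -n <namespace> <secret name> -o=jsonpath='{.data.tls\.crt}')" \
#     "$(kubectl get secret -n <namespace> <secret name> -o=jsonpath='{.data.ca\.crt}')"
```

Only the "pending" state points at a failing issuance; "issued-no-ca" matches the behaviour explained in the comment above.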

I had the same problem and found this issue. Tried v0.15.0-alpha.1 as suggested and it fixed the problem!

After upgrading to the alpha version, I removed the old Secret and Certificate and created the Certificate again. The Secret was created correctly by cert-manager a few seconds later.

For anyone using helm, enable that feature-gate by adding --set featureGates="ExperimentalCertificateControllers=true" to your install or upgrade command.

Thanks @munnerz !
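For installs that do not go through helm, the same flag has to reach the controller's arguments some other way. The sketch below builds a JSON patch that appends the flag. It rests on several assumptions that should be checked against the actual manifest: that the controller Deployment is named `cert-manager` in the `cert-manager` namespace, that the controller is the first container, and that it already has an `args` list. The function name `feature_gate_patch` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a JSON patch that appends
# --feature-gates=<gate>=true to the args of the first container.
# Assumes containers[0] is the controller and already has an args list.
feature_gate_patch() {
  local gate="$1"
  printf '[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--feature-gates=%s=true"}]' "$gate"
}

# Example usage (commented out because it modifies a cluster; the
# Deployment name and namespace are assumptions):
#   kubectl -n cert-manager patch deployment cert-manager --type=json \
#     -p "$(feature_gate_patch ExperimentalCertificateControllers)"
```

Editing the `args` list in the static manifest before applying it would achieve the same result and survives re-applies, which a live patch does not.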

I have this issue, using jetstack Helm chart. Tried to add feature gate proposed here, no luck.

cert-manager version: v0.15.0, deployed with standard values and a separate CRD manifest (the built-in installCRDs option doesn't work either)

featureGates and installCRDs worked for me.

I confirm, v0.15.0-alpha.1 installed with the Helm option --set featureGates="ExperimentalCertificateControllers=true" did work for me, thank you.

Hi, does someone have an example of a cluster-issuer that's being used to generate ca.crt in the secret? I'm currently running version 0.15.2 with --set featureGates="ExperimentalCertificateControllers=true" and my secret still has an empty ca.crt value.

apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: #@ data.values.name
  annotations:
    kubernetes.io/psp: privileged
spec:
  acme:
    server: "https://acme-v02.api.letsencrypt.org/directory"
    email: #@ data.values.email
    privateKeySecretRef:
      name: #@ data.values.private_key
    solvers:
    - dns01:
        route53:
          region: #@ data.values.region
          hostedZoneID: #@ data.values.hosted_zone_id
          name: #@ data.values.aws_access_key_secret
          key: AWS_SECRET_ACCESS_KEY
      selector:
        dnsZones:
        - #@ data.values.base_domain

Hi, does someone have an example of a cluster-issuer that's being used to generate ca.crt in the secret? I'm currently running version 0.15.2 with --set featureGates="ExperimentalCertificateControllers=true" and my secret still has an empty ca.crt value.

Hi, I've had these symptoms as well, and I was surprised that this was not working for me. After a few hours of tearing my hair out, the solution appears to be very simple, but also not quite straightforward.

install v0.15.0 first

helm install cert-manager jetstack/cert-manager --namespace cert-manager --set installCRDs=true --version v0.15.0

wait until it has all pods running then uninstall it

helm uninstall cert-manager -n cert-manager

now you can install v0.15.1

helm install cert-manager jetstack/cert-manager --namespace cert-manager --set installCRDs=true --version v0.15.0-alpha.1 --set featureGates="ExperimentalCertificateControllers=true"

and it will work. I cannot comment re v0.15.2 as I never tried this particular version. I also barely understand why it works this way and not out of the box if I try to install v0.15.1 first without prior installation of v0.15.0

hope it will help, thank you

Hi @m0rg0th thanks for the reply. Your proposed solution doesn't seem to work for me. Are you seeing this working on a consistent basis?

Yep, the behavior is pretty much consistent on my side; it's currently part of my Ansible deployment script for K8s clusters. However, I'm using bare-metal installations on Debian Buster, not AWS, hence your experience could be different.

@munnerz

I am struggling with the same issue while trying to obtain a Let's Encrypt certificate using the default istio_dex_1.0.2.yaml configuration file, and it persists even after uninstalling that and installing v0.15.2 using helm. What I need is the whole setup with Istio and Kubeflow served over HTTPS with an SSL certificate, but the resulting secret has an empty ca.crt and an empty tls.crt.

Can you please help here?

I used the stable Kubernetes version 1.14 on GKE and faced the same issue even after updating to cert-manager v0.15, which is the latest release for legacy Kubernetes clusters.

@munnerz

I did the installation without helm. How can I pass the parameter --set featureGates="ExperimentalCertificateControllers=true"?
