There should be an option to only build dependencies.

All 241 comments

@nagisa,

Why do you want it?

I do not remember exactly why, but I do remember that I ended up just running rustc manually.

@posborne, @mcarton, @Devyn,

You reacted with thumbs up.

Why do you want it?

Sometimes you add a bunch of dependencies to your project, know it will take a while to compile next time you cargo build, but want your computer to do that as you start coding so the next cargo build is actually fast.

But I guess I got here searching for a cargo doc --dependencies-only, which allows you to get the doc of your dependencies while your project does not compile because you'd need the doc to know how exactly to fix that compilation error you've had for a half hour :smile:

As described in #3615 this is useful with build to setup a cache of all dependencies.

@gregwebs out of curiosity do you want to cache compiled dependencies or just downloaded dependencies? Caching compiled dependencies isn't implemented today (but would be with a command such as this) but downloading dependencies is available via cargo fetch.

Generally, as with my caching use case, the dependencies change infrequently and it makes sense to cache the compilation of them.

The Haskell tool stack went through all this, and they seem to have generally decided to merge things into a single command where possible. For fetch they did end up with something kinda confusing though: build --dry-run --prefetch. For the build --dependencies-only mentioned here they have an equivalent: build --only-dependencies

@gregwebs ok thanks for the info!

@alexcrichton,

It looks like I should continue my work on the PR.

Will Cargo's team accept it?

@KalitaAlexey I personally wouldn't be convinced just yet, but it'd be good to canvass opinions from others on @rust-lang/tools as well

@alexcrichton,

Anyway I have no time right now)

I don't see much of a use case - you can just do cargo build and ignore the output for the last crate. If you really need to do this (for efficiency) then there is an API you can use.

What's the API?

Implement an Executor. That lets you intercept every call to rustc and you can do nothing if it is the last crate.

I wasn't able to find any information about an Executor for cargo. Do you have any links to documentation?

Docs are a little thin, but start here: https://github.com/rust-lang/cargo/blob/609371f0b4d862a94e2e3b8e4e8c2a4a2fc7e2e7/src/cargo/ops/cargo_rustc/mod.rs#L62-L64

You can look at the RLS for an example of how to use them: https://github.com/rust-lang-nursery/rls/blob/master/src/build.rs#L288

A question on Stack Overflow wanted this feature. In that case, the OP wanted to build the dependencies for a Docker layer.

A similar situation exists for the playground, where I compile all the crates once. In my case, I just put in a dummy lib.rs / main.rs. All the dependencies are built, and the real code is added in the future.

@shepmaster unfortunately the proposed solution wouldn't satisfy that question because a Cargo.toml won't parse without associated files in src (e.g. src/lib.rs, etc). So that question would still require "dummy files", in which case it wouldn't specifically be serviced by this change.

I ended up here because I also am thinking about the Docker case. To do a good docker build I want to:

COPY Cargo.toml Cargo.lock /mything/

RUN cargo build-deps --release # creates a layer that is cached

COPY src /mything/src

RUN cargo build --release # only rebuild this when src files changes

This means the dependencies would be cached between docker builds as long as Cargo.toml and Cargo.lock don't change.

I understand src/lib.rs src/main.rs are needed to do a good build, but maybe build-deps simply builds _all_ the deps.

The dockerfile template in shepmaster's linked stackoverflow post above SOLVES this problem

I came to this thread because I also wanted the docker image to be cached after building the dependencies. After later resolving this issue, I posted something explaining docker caching, and was informed that the answer was already linked in the stackoverflow post. I made this mistake, someone else made this mistake, it's time to clarify.

RUN cd / && \

cargo new playground

# a new project has a src/main.rs file

WORKDIR /playground

ADD Cargo.toml /playground/Cargo.toml

RUN cargo build # DEPENDENCIES ARE BUILT and CACHED

RUN cargo build --release

RUN rm src/*.rs # delete dummy src files

# here you add your project src to the docker image

After building, changing only the source and rebuilding starts from the cached image with dependencies already built.

someone needs to relax...

Also @KarlFish, what you're proposing does not actually work when using FROM rust:1.20.0:

- cargo new playground fails because it wants the USER env variable to be set.

- RUN cargo build does not build dependencies for release, but for debug. Why do you need that?

Here's a better version.

FROM rust:1.20.0

WORKDIR /usr/src

# Create blank project

RUN USER=root cargo new umar

# We want dependencies cached, so copy those first.

COPY Cargo.toml Cargo.lock /usr/src/umar/

WORKDIR /usr/src/umar

# This is a dummy build to get the dependencies cached.

RUN cargo build --release

# Now copy in the rest of the sources

COPY src /usr/src/umar/src/

# This is the actual build.

RUN cargo build --release \

&& mv target/release/umar /bin \

&& rm -rf /usr/src/umar

WORKDIR /

EXPOSE 3000

CMD ["/bin/umar"]

You can always review the complete Dockerfile for the playground.

Hi!

What is the current state of the --deps-only idea? (mainly for dockerization)

I agree that it would be really cool to have a --deps-only option so that we could cache our filesystem layers better in Docker.

I haven't tried replicating this yet, but it looks very promising. This is in glibc and not musl, by the way. My main priority is to get to a build that doesn't take 3-5 minutes every time, not a 5 MB alpine-based image.

I ran into wanting this today.

@steveklabnik can you share more details about your case? Why would building only the dependencies be useful? Is starting with an empty lib.rs/main.rs not applicable to your situation?

As an aside, a cargo extension (cargo-prebuild?) could probably move aside the main.rs / lib.rs, replace it with an empty version, call cargo build, then move it back. That would "solve" the problem.
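A rough sketch of what such an extension might do, written as a shell function. The name with_stub_main is made up for illustration, and the sketch assumes a single binary crate whose entry point is src/main.rs. The final touch rests on the assumption that Cargo's freshness check looks at file modification times.

```shell
# Hypothetical helper: run a command while src/main.rs is replaced by a stub.
# Usage: with_stub_main cargo build --release
with_stub_main() {
    main=src/main.rs
    backup=$(mktemp) || return 1
    # Set the real entry point aside.
    mv "$main" "$backup" || return 1
    # An empty program still pulls in every dependency listed in Cargo.toml.
    printf 'fn main() {}\n' > "$main"
    "$@"
    status=$?
    # Put the real source back and bump its mtime, so a later build does not
    # treat the artifact produced from the stub as up to date (assumption).
    mv "$backup" "$main"
    touch "$main"
    return "$status"
}
```

Called as with_stub_main cargo build --release, this would compile the dependencies and a throwaway binary, after which a plain cargo build --release only has to compile the crate itself.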

I wanted to time a clean build, but of my crate only, and not its dependencies. The easiest way to do that is to cargo clean followed by a hypothetical cargo build --deps-only, then time a cargo build.

Yeah, I mean, I could replace everything, and then pull it back, but that feels like working around things rather than doing what I actually want to do.

@shepmaster the replacing stuff gets very hairy when you have a workspace project with custom build.rs in multiple subprojects. My Dockerfile is now _very_ fragile and complicated.

Also on the workspace note, i don't even try to cache/shorten build times for the subprojects, only the main, so it's suboptimal as well. Any source change in a subproject means doing a full build without caching anything.

> with custom build.rs

What impact does a build.rs have on your case? What do you expect a hypothetical cargo build --deps-only to do in the presence of a build.rs? Do you believe that this behavior applies to all cases using a build.rs?

> when you have a workspace

What do you expect a hypothetical cargo build --deps-only to do in a workspace with two crates (A and B) where A depends on B? Is B built? Is A built?

> What impact does a build.rs have on your case?

My (several) build.rs are for invoking bindgen around c-libs. Some are simply: bindgen + include!(concat!(env!("OUT_DIR"), "/bindings.rs")); in the lib.rs, and some lib.rs add a bit more to the bindings

I guess my expectation of a cargo build --deps-only in a workspace scenario would be that, as a minimum, it would build any non-project-local deps of those submodules together with the non-project-local deps of my main lib (like libc, which is often in my submodules).

I only mention build.rs because the solution of doing empty projects, build, and then copying in the source becomes more messy when you also have a bespoke build with git submodules of various c sources built via a build.rs.

Maybe a cargo build --deps-only would build _only dependencies that are not local to the project_, i.e. any dep in a Cargo.toml (root level or submodule) that references the central cargo repo, own repos, or git sources.

I think it would be useful to decide whether to accept or reject this as a desirable feature (even though there is no implementation yet) and clearly state that at the top of the issue (or a new issue).

I'd also like to have this feature. I'm also doing a Docker cache thing.

@shepmaster 's Dockerfile isn't working in my case (cross compiling stuff).

I am very sad this got closed, this would be great for docker builds, and way less hacky than current solutions. I feel docker builds in their own right are also a good enough reason alone

@cmac4603 this has not been closed

Oh my goodness! I'm so glad! Yup, totally misread, thanks @mathroc

I would love to see this for docker builds then, particularly local dev builds involving docker-compose. Generally for final test/prod builds, I use a musl builder from scratch, but for development, would be a very nice-to-have in the toolkit

> downloading dependencies is available via cargo fetch

cargo fetch downloads too much in general without having something like #5216 to allow the fetch to be constrained to a target.

I had a need for this feature today for pre-building a docker image cache. The trick to cargo init a fresh project and reusing only one's Cargo.toml _almost_ worked, but I have a build.rs script, so I had to make a stub build.rs file too. It would be easier to have a "standard" way of downloading and building the deps that doesn't depend on the particulars of your build and handles your corner cases correctly.

> but I have a build.rs script, so I had to make a stub build.rs file too

@golddranks Why did you need it though? If the reason is that you have build = "build.rs" line in Cargo.toml, then just remove that line as it's not required nowadays and build.rs is automatically assumed to be a build script if found.

Ah, didn't know that. Thanks.

_Yet another_ reason to have this is that some CIs (AppVeyor in particular) have a limit on how much information is logged from the build. With cargo build --dependencies-only one can build dependencies normally, then switch to cargo build -vv for the source code that is the primary target of the CI build.

I think we should probably consider the docker use-case in more detail. I have a hunch that there's more to the use-case than meets the eye.

I think that the CI use-case is a more interesting rationale for this feature, but I wonder if it argues instead for more control over the verbosity options (--crate-verbosity "my-crate=vv" or something along those lines), which I could imagine being useful for other purposes ("I really want to look more closely at what's going on when I build clap")

I came here looking for the same use-case of wanting to properly layer my Docker images to decrease build times.

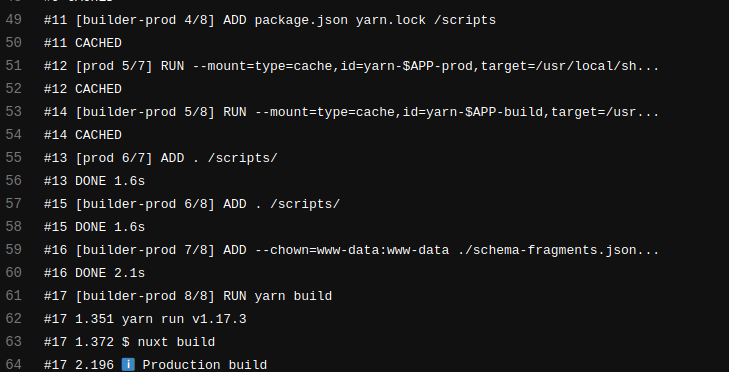

I ended up with the following solution, in case this helps anyone else:

FROM ekidd/rust-musl-builder AS builder

RUN sudo chown -R rust:rust /home/rust

RUN mkdir src && touch src/lib.rs

COPY Cargo.lock .

COPY Cargo.toml .

RUN cargo build --release

ADD . .

RUN cargo build --release

FROM scratch

COPY --from=builder \

/home/rust/src/target/x86_64-unknown-linux-musl/release/my-app \

/my-app

ENTRYPOINT ["/my-app"]

Here's the result of the first run, vs a second run with only source code changes, no dependency changes:

collapsed wall of text

❯ time docker build -t hello .; and docker run hello

Sending build context to Docker daemon 225.3kB

Step 1/10 : FROM ekidd/rust-musl-builder AS builder

---> 792e654be291

Step 2/10 : RUN mkdir src/ && touch src/lib.rs

---> Running in 2208ab6053f4

Removing intermediate container 2208ab6053f4

---> 572926d2738a

Step 3/10 : COPY Cargo.lock .

---> e8eb753e4881

Step 4/10 : COPY Cargo.toml .

---> 43f3d04fe0e8

Step 5/10 : RUN cargo build --release

---> Running in 374ba4a8a864

Updating registry `https://github.com/rust-lang/crates.io-index`

<SNIP>

Finished release [optimized] target(s) in 43.65s

Removing intermediate container 374ba4a8a864

---> 843e36ec3ce7

Step 6/10 : ADD . .

---> c66cc9b7f4dc

Step 7/10 : RUN cargo build --release

---> Running in bcd95a11ff47

Compiling hello-world v0.1.0 (file:///home/rust/src)

Finished release [optimized] target(s) in 0.40s

Removing intermediate container bcd95a11ff47

---> 1789bdcc6ed3

Step 8/10 : FROM scratch

--->

Step 9/10 : COPY --from=builder /home/rust/src/target/x86_64-unknown-linux-musl/release/hello-world /hello-world

---> Using cache

---> e8501d0c8738

Step 10/10 : ENTRYPOINT ["/hello-world"]

---> Using cache

---> fc179245d85b

Successfully built fc179245d85b

Successfully tagged hello:latest

53.53 real 2.17 user 0.80 sys

hello world

❯ time docker build -t hello .; and docker run hello

Sending build context to Docker daemon 225.3kB

Step 1/10 : FROM ekidd/rust-musl-builder AS builder

---> 792e654be291

Step 2/10 : RUN mkdir src/ && touch src/lib.rs

---> Using cache

---> 572926d2738a

Step 3/10 : COPY Cargo.lock .

---> Using cache

---> e8eb753e4881

Step 4/10 : COPY Cargo.toml .

---> Using cache

---> 43f3d04fe0e8

Step 5/10 : RUN cargo build --release

---> Using cache

---> 843e36ec3ce7

Step 6/10 : ADD . .

---> a9698b8f6a88

Step 7/10 : RUN cargo build --release

---> Running in 980a6d70a441

Compiling hello-world v0.1.0 (file:///home/rust/src)

Finished release [optimized] target(s) in 0.35s

Removing intermediate container 980a6d70a441

---> 1661e048b501

Step 8/10 : FROM scratch

--->

Step 9/10 : COPY --from=builder /home/rust/src/target/x86_64-unknown-linux-musl/release/hello-world /hello-world

---> d8b93e6a4ff9

Step 10/10 : ENTRYPOINT ["/hello-world"]

---> Running in e26f084ed361

Removing intermediate container e26f084ed361

---> 6684afc6b641

Successfully built 6684afc6b641

Successfully tagged hello:latest

7.43 real 2.26 user 1.00 sys

hello universe!

Same docker use case here. We can do the above, but anyone reading it would wonder what's going on. It would be much clearer to have a --dependencies-only flag.

Is the question whether there are sufficient use cases (Docker, performance timing, any others)? (I'm assuming the cargo implementation would be fairly simple.)

To be fair docker is a pretty big use case...

Same docker use case here. I would _love_ this feature.

Same docker use case here. I don't want to have to pull and compile all the dependencies in my image every time I change the source code.

I tried @JeanMertz approach but when you've got a workspace with a few crates in it, it's not so pretty. docker's COPY doesn't seem to like recursive globs, COPY **/Cargo.toml . for example doesn't seem to work for me.

That said, I'm not sure there's a nice way of doing this, because even if we said build deps only, if we copied in all our source to the docker image and the source had changed then it would be a cache miss anyway. I think the best we can ever do with docker is to generalise @JeanMertz 's approach.

Note that there is also https://crates.io/crates/cargo-build-deps.

@nrc i tried the 'Executor' path to no avail (https://github.com/azban/cargo-build-deps/blob/executor/src/main.rs). i had to hack around fingerprint updates because there is no way to be lenient here. but i finally got stuck on ignoring custom build scripts, because they don't use the executor and rather call commands directly here.

i ended up hacking around build_plan, which is messy because seemingly the only way to get it, is from stdout, and i was making assumptions about how to tell if something is an external dependency or not. (https://github.com/azban/cargo-build-deps/blob/build-plan/src/main.rs)

@JeanMertz @gilescope @emilk @anuraags , i ended up 'generalizing' the approach above in order to support more complex crates (workspaces mostly). mostly involves a separate build image which you only pass what's required to build (Cargo.toml, Cargo.lock, etc) into the docker context.. and then inherit from that image in a later build. you can check out an example here: https://github.com/paritytech/substrate/pull/1085/commits/a12e3040bca581e3e3a5b18c4de218499d3de937

Unfortunately, in many use cases an approach based on Cargo.toml and Cargo.lock can't really work, because you need to update at least the current crate's version. Docker will therefore invalidate the cache immediately, even if the dependency versions are still the same.

I was recently experimenting with a BuildKit approach: every rustc or build script invocation is done in its own build stage (something like this). The image builder can then manage "cache invalidation" and build concurrency on its own.

I can call it a successful experiment, but I decided to go even deeper - I'm currently trying to build a BuildKit frontend for Cargo crates. This will help to achieve a mind-blowing

docker build -t my-tag -f Cargo.toml .

Apart from these experiments, you can use an "almost" production-ready caching approach today! You need to have Docker 18.06+, DOCKER_BUILDKIT=1 environment variable, and a special Dockerfile syntax:

# syntax=docker/dockerfile-upstream:experimental

FROM rustlang/rust:nightly as builder

# Copy crate sources and workspace lockfile

WORKDIR /rust-src

COPY Cargo.lock /rust-src/Cargo.lock

COPY cargo-container-tools /rust-src

# Build with mounted cache

RUN --mount=type=cache,target=/rust-src/target \

--mount=type=cache,target=/usr/local/cargo/git \

--mount=type=cache,target=/usr/local/cargo/registry \

["cargo", "build", "--release"]

# Copy binaries into normal layers

RUN --mount=type=cache,target=/rust-src/target \

["cp", "/rust-src/target/release/cargo-buildscript", "/usr/local/bin/cargo-buildscript"]

RUN --mount=type=cache,target=/rust-src/target \

["cp", "/rust-src/target/release/cargo-test-runner", "/usr/local/bin/cargo-test-runner"]

RUN --mount=type=cache,target=/rust-src/target \

["cp", "/rust-src/target/release/cargo-ldd", "/usr/local/bin/cargo-ldd"]

# Copy the binaries into final stage

FROM debian:stable-slim

COPY --from=builder /usr/local/bin/cargo-buildscript /usr/local/bin/cargo-buildscript

COPY --from=builder /usr/local/bin/cargo-test-runner /usr/local/bin/cargo-test-runner

COPY --from=builder /usr/local/bin/cargo-ldd /usr/local/bin/cargo-ldd

# syntax=... defines a Dockerfile builder frontend image and caching is done with --mount flags of RUN instruction.

Update: corrected Dockerfile stage names.

Update 2: changed nightly Cargo registry cache path.

After trying out cargo-build-deps, I noticed that it runs cargo update and seems slower than latest cargo build in Rust nightly. I would say it probably makes sense to just bake this functionality into cargo main for Docker and caching purposes.

awesome @denzp .. i hadn't checked out buildkit, and it looks like good timing as it's no longer experimental on the docker-ce released today :). i'm going to favor this over cargo-build-deps.

@denzp That looks promising. Do you think it would be possible to support both cargo build and cargo check with this? Currently I'm doing something like this mess (based on the suggestions above + rust-aws-lambda):

ADD $SRC/Cargo.toml $SRC/Cargo.lock ./

RUN mkdir src && touch src/lib.rs

ARG checkonly

RUN if [ "x$checkonly" = "x" ] ; then cargo build --target $BUILD_TARGET --release ; else cargo check ; fi

ADD $SRC/src src

RUN if [ "x$checkonly" = "x" ] ; then cargo build --target $BUILD_TARGET --release ; else cargo check && echo "return early after check, this is not an error" && false ; fi

which seems to cache both branches (with/without checkonly supplied as build-arg) separately

@J-Zeitler in your example, change of checkonly build argument alters the whole command. And therefore Docker decides to drop the cached result layer (containing target dir with build artifacts).

With RUN --mount it shouldn't be a problem anymore, because regardless of the cached layer presence, target dir with Cargo cache will be preserved.

Looks like you can simplify the Dockerfile and use only the second command. But is there a reason to run cargo check during image building stage?

@denzp Cool, will try to find some time to test it.

The reason is that I'm on a windows machine and building for an "amazonlinux" host. I guess I could run cargo check locally. Will cargo check always produce the same output regardless of --target?

(also, I noticed that I actually don't even use --target in the check command so it might not do what I want anyways)

edit: also, yes, with a proper cache I could probably remove the second command. What I do now (as the others above) is use docker layers as a cache, and ADDing $SRC/src src will evict the cached layers below. That's the reason people poke in the fake src/lib.rs etc

Any new information on this issue? I think a lot of people would love this feature. It would make developing a lot easier in cases. In my project whenever the Rust guys change their code the build takes forever. Having a dependency layer would decrease the build time of our project from 15 minutes to about 45-60 ish seconds

Everyone is on-board with solving the problem, though exactly how is a bit up for debate. We do plan to address it soon though.

@nrc Great to hear!

And here is my version of a workaround, in case anyone else is interested.

FROM rust:slim AS builder

# Copy dependency information only.

WORKDIR /home/

COPY ./Cargo.toml ./Cargo.lock ./

# Fetch dependencies to create docker cache layer.

# Workaround with empty main to pass the build, which must be purged after.

RUN mkdir -p ./src \

&& echo 'fn main() { println!("Dummy") }' > ./src/main.rs \

&& cargo build --release --bin helloworld \

&& rm -r ./target/release/.fingerprint/helloworld-*

# Cache layer with only my code

COPY ./ ./

# The real build.

RUN cargo build --frozen --release --bin helloworld

# The output image

FROM debian:stable-slim

COPY --from=builder /home/target/release/helloworld /opt/app

ENTRYPOINT [ "/opt/app" ]

Running into the same issue, and using Docker. Are we planning on tackling this?

Based on this Stack Overflow question, there are several workarounds, but a Cargo flag seems more convenient than what is currently possible. Workarounds:

- Add Cargo files, create fake main.rs/lib.rs, then compile. Afterwards remove the fake source and add the real ones. [Caches dependencies, but several fake files with workspaces]

- Add Cargo files, create fake main.rs/lib.rs, then compile. Afterwards create a new layer with the compiled dependencies and continue from there. [Similar to above]

- Externally mount a volume for the cache dir. [Caches everything, relies on caller to pass --mount]

- Use RUN --mount=type=cache,target=/the/path cargo build in the Dockerfile in new Docker versions. [Caches everything, seems like a good way, but currently too new to work for me. Executable not part of image]

- Use cargo-build-deps. [Might work for some, but does not support Cargo workspaces (yet)].

Special note for workspaces: even with a flag like --dependencies-only it'll be a bit inconvenient to individually add all the Cargo files to Docker for this to work, but I don't think there's anything that can be done about that on the Cargo side.

It feels like, having --dependencies-only won't help for the Docker workflow: eventually you will need to bump a package version, or add dependency which will invalidate cache (due to either Cargo.lock or Cargo.toml change).

On the other hand, even after a while working with Rust microservices deployed as Docker images I can confirm that RUN --mount=type=cache works like a charm! But indeed, it requires a modern infrastructure - docker-ce v18.09.

I think that is the desired behavior: if the dependencies change, the

"dependency-only" layer changes, if they don't, they don't. Slow full

builds are not the end of the world, it's just them being the common case

that is the problem.


@ohAitch Is on point. Of course full builds will take a while. But there's a big difference between me changing a line in my source code and going through the full cargo dependency tree or just my source code. If dependencies change obviously there is no way to not have to rebuild them but that doesn't happen nearly as often as source code or resource changes

It is possible to not have to rebuild all of them, which I think is the

caching solution; but this issue's titular suggestion is a lot more

explicit, and thus straightforward to integrate with other Docker-like

systems such as Nix


So, the dummy file doesn't really work on workspaces, which is the issue I currently have. And I think --deps-only would be handy specially for when you have workspaces as it could automate that entire process for you.

@felipellrocha - the dummy file _does_ work with workspaces... sort of. What you end up having to do is recreate the structure of your entire workspace in the Docker image, which is super brittle and not ideal.

A --dependencies-only flag is the right solution here, but know that you can still use workspaces while you wait for a native solution and not a hacky one.

So, if you have a Cargo.toml for your workspace similar to this one:

[workspace]

members = [

"shared_lib",

"bin_one",

"bin_two",

"bin_three",

]

You're going to end up with a directory structure more or less similar to this:

.
├── Cargo.lock
├── Cargo.toml
├── bin_one
│   ├── Cargo.toml
│   └── src
│       ├── lib
│       │   └── ...
│       └── main.rs
├── bin_two
│   ├── Cargo.toml
│   └── src
│       ├── lib
│       │   └── ...
│       └── main.rs
├── bin_three
│   ├── Cargo.toml
│   └── src
│       ├── lib
│       │   └── ...
│       └── main.rs
├── Dockerfile
├── docker
│   └── dummy
│       ├── bin_one
│       │   ├── Cargo.toml
│       │   └── src
│       │       └── main.rs
│       ├── bin_two
│       │   ├── Cargo.toml
│       │   └── src
│       │       └── main.rs
│       ├── bin_three
│       │   ├── Cargo.toml
│       │   └── src
│       │       └── main.rs
│       └── shared_lib
│           ├── Cargo.toml
│           └── src
│               └── lib.rs
└── shared_lib
    ├── Cargo.toml
    └── src
        ├── lib.rs
        └── stuff

Where your root Cargo.toml, Cargo.lock and your docker/dummy/* all get copied into the Docker image so you can build your release dependencies. Then you can come back later and COPY your _actual_ source into the image and build _that_.

I haven't found a better solution for this, if you have one - I'd absolutely love to know, because right now it's a very manual process and I'd feel really bad for anyone who has to manage more complexity than this or has to figure this out on their own.
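One way to take some of the manual work out of this might be to generate the dummy tree from the real workspace instead of maintaining it by hand. The sketch below is only an illustration: make_dummy_tree is a hypothetical name, it assumes the default src/main.rs and src/lib.rs layout with no custom target paths in the manifests, and it treats any directory named target or .git as something to skip.

```shell
# Hypothetical helper: mirror a workspace into <dummy-dir>, keeping every
# manifest but replacing each crate's entry points with empty stubs.
# Usage: make_dummy_tree <workspace-root> <dummy-dir>
make_dummy_tree() {
    root=$(cd "$1" && pwd) || return 1
    mkdir -p "$2" || return 1
    dummy=$(cd "$2" && pwd) || return 1
    # Skip the dummy tree itself, build output and VCS metadata.
    find "$root" \( -path "$dummy" -o -name target -o -name .git \) -prune -o \
         -type f -name Cargo.toml -print |
    while IFS= read -r manifest; do
        crate=$(dirname "$manifest")
        out=$dummy${crate#"$root"}
        mkdir -p "$out"
        cp "$manifest" "$out/Cargo.toml"
        # Stub only the files the real crate has, so the targets Cargo
        # infers from the file layout stay the same (assumption).
        if [ -f "$crate/src/main.rs" ]; then
            mkdir -p "$out/src"
            printf 'fn main() {}\n' > "$out/src/main.rs"
        fi
        if [ -f "$crate/src/lib.rs" ]; then
            mkdir -p "$out/src"
            : > "$out/src/lib.rs"
        fi
        if [ -f "$crate/build.rs" ]; then
            printf 'fn main() {}\n' > "$out/build.rs"
        fi
    done
    # The lockfile pins the dependency versions, so it travels with the stubs.
    if [ -f "$root/Cargo.lock" ]; then
        cp "$root/Cargo.lock" "$dummy/Cargo.lock"
    fi
}
```

Running make_dummy_tree . docker/dummy before each docker build would regenerate the stubs, so a new workspace member only has to be added in one place. The Dockerfile side stays as described: copy the dummy tree, build, then copy the real sources and build again.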

I've used the same strategy as @davidarmstronglewis for building my workspaces.

It's been finicky to set up, fragile to maintain, and difficult to document/explain to new team members.

> is recreate the structure of your entire workspace in the Docker image, which is super brittle and not ideal.
>
> A --dependencies-only flag is the right solution here

For Docker, wouldn't you have to copy in all of the same Cargo.toml files in the same directory structure in order to specify the dependencies? At the same time, you'd also have to avoid copying in any source files at that point, otherwise changes to your source code would invalidate the dependencies layer.

While I'd like to have a --dependencies-only flag, I don't follow how simply having it will actually make the Docker situation appreciably better. Is there a Dockerfile command to add all files matching a name, preserving their directory structure but not copying any other files?

Could someone sketch out exactly how this would work in a world where:

- Cargo knows --dependencies-only

- You are trying to create a Docker image with:

  - one layer for the compiled dependencies

  - one layer for the compiled workspace

- Changing a workspace's source file does not cause the dependencies to rebuild

I think the main difference of a situation like this would be not having to create dummy projects anymore. For my project I found a decent workaround by

1. creating an empty project

2. copying over Cargo.toml and Cargo.lock

3. executing cargo build --release && rm ./src/*.rs ./target/release/deps/<project>*

4. copying over the rest of the (re-)source files

5. executing another cargo build

From what I can tell this situation would not greatly improve by having the flag, but it would reduce the initial barrier and cranky-ness of setting this up (especially when building multiple modules relying on each other). The flag would (in my example) allow skipping 1 and the && part of 3. I'm assuming it would also speed up the dependency build by a bit.

Of course this is only one example for how it would have affected me. At the time of commenting on this issue previously (a couple months ago) I did not have a working solution and it was really annoying. This approach does work well enough for my usage but of course having the --dependencies-only flag would be a nice to have for sure and clean up my Dockerfile/make it a lot easier to explain what's happening.

Furthermore it would prevent me from having to fiddle around in the /target directory. Maybe that's just me but I thought it was a really dirty solution

@shepmaster Yeah for workspaces, it doesn't help all that much, because we still have to add all Cargo files one by one.

What it saves is not having to generate dummy main/lib files and delete them afterwards. So only 1/3 of the code is needed, but workspace members still have to be named individually.

> even with a flag like --dependencies-only it'll be a bit inconvenient to individually add all the Cargo files to Docker for this to work

(And, if Docker ever decided to introduce a convenient way to add files by pattern but keep their structure, then it'd be really easy: COPY **/Cargo.toml. But it can't be solved by Cargo alone).

Concretely the Dockerfile would look like this:

COPY Cargo.toml ./Cargo.toml

COPY Cargo.lock ./Cargo.lock

COPY sub_one/Cargo.toml sub_one/Cargo.toml

COPY sub_two/Cargo.toml sub_two/Cargo.toml

COPY sub_three/Cargo.toml sub_three/Cargo.toml

COPY sub_four/Cargo.toml sub_four/Cargo.toml

RUN cargo build --dependencies-only

COPY . .

RUN cargo build

Which is better than

COPY Cargo.toml ./Cargo.toml

COPY Cargo.lock ./Cargo.lock

COPY sub_one/Cargo.toml sub_one/Cargo.toml

COPY sub_two/Cargo.toml sub_two/Cargo.toml

COPY sub_three/Cargo.toml sub_three/Cargo.toml

COPY sub_four/Cargo.toml sub_four/Cargo.toml

RUN printf "fn main() {}" > sub_one/main.rs && \

printf "" > sub_two/lib.rs && \

printf "" > sub_three/lib.rs && \

printf "fn main() {}" > sub_four/main.rs && \

cargo build && \

rm sub_one/main.rs sub_two/lib.rs sub_three/lib.rs sub_four/main.rs

COPY . .

RUN cargo build

but not ideal.

EDIT: build.rs must also be 'mocked' in the same way, if present. Build files need to contain fn main() {}(and maybe println!("cargo:rerun-if-changed=build.rs");?)
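The stub-file step can be scripted instead of being written out per crate. This is only a minimal sketch: the function name stub_sources is made up for illustration, and it assumes every workspace member uses the default src/lib.rs or src/main.rs layout (no custom [[bin]] or [lib] paths) and that creating both stubs in each crate is acceptable.

```sh
#!/bin/sh
# Hypothetical helper: create placeholder sources next to every Cargo.toml
# under a root directory, so that `cargo build` can compile the dependencies
# before the real sources are copied in.
# Assumption: default src/ layout, no custom target paths in the manifests.
stub_sources() {
    root="${1:-.}"
    find "$root" -name Cargo.toml ! -path '*/target/*' | while IFS= read -r manifest; do
        dir=$(dirname "$manifest")
        mkdir -p "$dir/src"
        # Never overwrite real sources that are already present.
        [ -e "$dir/src/lib.rs" ] || : > "$dir/src/lib.rs"
        [ -e "$dir/src/main.rs" ] || echo 'fn main() {}' > "$dir/src/main.rs"
        # Stub the build script only when the manifest names one explicitly.
        if grep -q '^build *= *"build.rs"' "$manifest" && [ ! -e "$dir/build.rs" ]; then
            echo 'fn main() {}' > "$dir/build.rs"
        fi
    done
}
```

In a Dockerfile this would run after the COPY lines for the manifests (for example RUN stub_sources . && cargo build), and the stubs would then be overwritten by the later COPY . . step.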

This is going a bit farther in the woods, but I wonder if it would help if cargo would be able to export a build plan and execute it later, independently of the project files:

cargo build --dependencies-only --export plan1234.toml

And then in Dockerfile:

COPY plan1234.toml .

RUN cargo build --import plan1234.toml

COPY . .

RUN cargo build

Hm, I think that still has the problem of the context in which you run the first command: when the dependencies do change, you still want the dockerfile to do the right thing. Though I guess if --export is a significantly cheaper "dry-run" that doesn't try to actually compile anything, the resulting lockfile might be useful as a cache key?

to export a build plan and execute it later

I was looking into something in a similar vein. Since Docker can import a tarball and automatically extract it, one could create a Cargo subcommand that creates the skeleton workspace and tars it up. Something like

cargo docker-plan -o plan.tar

ADD plan.tar

RUN cargo build

ADD ...source-files...

RUN cargo build

However, this feels unsatisfying as there's no automatic way to cause Docker to regenerate that plan.tar.

I'm not really excited about a solution that has to be done outside Dockerfile. It's a confusing workflow for other people or my future self. I don't think Docker will add a way to create a tar file as part of the build, as builds should not affect the host OS.

It is my belief that some change on the Docker side is needed for Cargo workspaces to work well with it - it can't be solved entirely on the Cargo side. My personal favourite is a structure-preserving copy command for Docker (15858, 29211): CP **/*.toml. Don't hold your breath though...

That said, --dependencies-only would

- Solve the problem for non-workspace projects

- Make the current 'workaround' with manually added Cargo.toml files as easy as I think it can currently be

- Work well if Docker ever introduces a way to add all the Cargo.toml files at once

Is it possible to synthesize CP **.toml with something like

FROM debian:stable-slim as toml

COPY . .

RUN find . -type f -not -name '*.toml' -delete

FROM rust:slim AS builder

COPY --from=toml . .

COPY ./Cargo.lock .

{...etc}

We discussed this in today's Cargo meeting. We would like to have some better support for Docker in particular, and there are a few other use cases for building just the dependencies of a project (e.g., the RLS did this for a while, or preparing a lab exercise). However, it seems that for all these use cases, just building deps is necessary but not sufficient and therefore just adding a flag is likely not to be the best solution.

Focusing on the Docker use case, I think that an ideal solution would be a third-party custom sub-command, cargo docker or something similar. However, we are missing a lot of detail to know if this is possible and what it would look like.

So we would like to know more specifics about the use case - what are the common tasks and workflows? How would we need to interact with Docker? How would users want to customise the tool? Given these requirements, is there anything that prevents cargo docker being built today? (There is API exposed by Cargo today to do a deps-only build, but it would mean linking against its own version of Cargo, which is sub-optimal).

@nrc Maybe it's good to try and put into words the goal, rather than the method of getting there.

Goal:

For me this is all about making efficient use of docker layers. I want to use the layers to speed up my rust builds so that a small source code change doesn't require a full rebuild. I have no problems with incurring the full rebuild if I change my Cargo.toml deps. I want this to work for both workspace and standalone.

Method in abstract:

Conceptually you achieve this in docker by:

ADD <dependency spec files>

RUN <build dependencies>

ADD <source files>

RUN <build final output>

As long as the files in row 1 are unchanged, Docker uses the cached layer of row 2. If I introduce a change in row 3, then docker will run row 4.
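The same idea can be mimicked outside Docker by hashing only the dependency spec files and rebuilding dependencies when that hash changes. A minimal sketch, assuming sha256sum from GNU coreutils is available and that file names contain no whitespace; deps_cache_key is a made-up name, and this is an illustration of the principle rather than how Docker computes its layer keys.

```sh
#!/bin/sh
# Hypothetical helper: print a key that changes only when a Cargo.toml or
# Cargo.lock below the given directory changes (path or content).
# Assumption: GNU coreutils sha256sum; paths without whitespace.
deps_cache_key() {
    root="${1:-.}"
    (
        cd "$root" || exit 1
        find . \( -name Cargo.toml -o -name Cargo.lock \) ! -path './target/*' -type f \
            | LC_ALL=C sort \
            | xargs sha256sum \
            | sha256sum \
            | cut -d' ' -f1
    )
}
```

Editing a source file leaves the key untouched, while editing any manifest or the lock file changes it, which matches the behaviour wanted from rows 1 and 2.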

Echoing what @lolgesten said, this is the exact workflow I use. The only thing I'd like to add to that is that this seems to have become a very standard workflow in Dockerfiles for projects in any language with dependency management: I use that same pattern in Dockerized projects for Ruby, NodeJS, Go, Elixir... some variation of those exact same 4 steps:

| Ecosystem | Dep files for first COPY | Install and/or build deps only |
|-----------|--------------------------|--------------------------------|
| Ruby | Gemfile, Gemfile.lock | bundle install --deployment |
| Node | package.json, package-lock.json | npm ci |
| Go | go.mod, go.sum | go mod download |
| Elixir | mix.exs, mix.lock | mix deps.get, mix deps.compile |
| Rust | Cargo.toml, Cargo.lock | N/A |

For me personally, cargo build --deps-only et al. does seem it could support that general-use use case without being _too_ Docker specific. _However, if Cargo was to adopt something different that is more clever/bespoke/unique, I'd desire for that solution to be sufficiently advantageous to overcome the additional cognitive overhead / discoverability of having to treat Rust projects differently than what I do most everywhere else._

@nrc With regards to a third-party cargo sub-command, the concern for me would be that this is now something you have to take steps to pre-install in your Dockerfile, rather than just having things work out of the box with a default official Rust docker image. This would then likely lead to a number of unofficial preconfigured rust-with-docker-cargo type images that community folks maintain and keep up to date, that an end-user would either have to discover, and/or copypasta some steps they found elsewhere to get things going. Not necessarily a problem, but certainly less "batteries included" than being able to do things with the official image.

@nrc Can you elaborate more on this?

However, it seems that for all these use cases, just building deps is necessary but not sufficient and therefore just adding a flag is likely not to be the best solution.

I am not sure where this idea came from, given the comments so far on this thread. What problems have been brought up that wouldn't be solved with --dependencies-only, and are they not orthogonal enough to be solved separately?

[edit] a little more:

Because honestly, this seems kind of dubious:

Focusing on the Docker use case, I think that an ideal solution would be a third-party custom sub-command, cargo docker or something similar. However, we are missing a lot of detail to know if this is possible and what it would look like.

We have a proposal for a simple solution that would solve a common use case. I'm worried about this. "We want to make a cargo docker subcommand instead of just implementing the simple solution, but we don't know what it should do yet." At least without hearing the use cases that you're already thinking of, it sounds like over-engineering at this stage.

At least without hearing the use cases that you're already thinking of, it sounds like over-engineering at this stage.

Adding on to @radix - not only that, but the solution would only be applicable for Docker. Any other piece of software that would need to build a project in two stages as well would need a re-implementation. I would argue instead that having the ability to build dependencies separately from the application(s) themselves would enable more composability for future needs as well as satisfy the current one.

What problems have been brought up that wouldn't be solved with --dependencies-only, and are they not orthogonal enough to be solved separately?

There is still the "CP **.toml" workspaces problem, which could hypothetically have a

cargo extract-workspace-configs dest/

command to solve it similar to the build-plan.tar idea; but it could only really replace the "RUN find -delete" line in my proposed staging solution, so having the Dockerfile explicitly know about *.toml files instead seems honestly fine.

Regardless, it's definitely both a separate issue and not docker-specific: I got linked this discussion from Nix land, which has a very similar "build one step, hash the entire filesystem after that step, if the hash matches you can skip ahead" execution model.

(I do get the impression the "but not sufficient" example may have come up verbally in the meeting, which leads us back to "super curious what the additional problems are" if there's anything other than workspaces)

Focusing on the Docker use case, I think that an ideal solution would be a third-party custom sub-command, cargo docker or something similar. However, we are missing a lot of detail to know if this is possible and what it would look like.

@nrc you may want to take a look at Haskell's stack tool integration with docker (or nix!). It is transparent in that you just configure it to use docker and no sub command is needed. As mentioned, previously, they do have a build --only-dependencies command. Although I am not up to date on whether there is a better workflow now for those being discussed here.

Hm, there seems to be an important inversion-of-control difference here of "cargo(/stack) runs docker to do a build" vs "a docker build runs cargo". Like, the former requires you to have rust and cargo installed directly on each machine you're building on, at the correct version etc; which will sometimes be the case, but does seem like a different environment than the "top-level Dockerfile (which may in turn be part of a docker-compose or such)" one originally mentioned.

@ohAitch if you are doing a build in docker driven by cargo, then you still don't need your compiler (rustc) installed: that would be in the docker image used. In stack, you essentially specify the compiler version, and if you enable docker it will pull a Linux image that has that version. If not, I should note that stack doesn't require you to have the Haskell compiler installed on your machine in any case: it will automatically download and use the version you have specified in your stack.yaml.

I agree that there are situations where one might prefer to have Docker drive cargo, for example a CI build environment that assumes that.

To show the scope of the problem for rust docker using the solutions above, this person was able to reduce rebuild time by 90%, and build size by 95%

At PingCAP we are wanting this too, for the docker use case.

If a --deps-only style flag is not accepted into cargo, on a casual glance I think a cargo plugin could accomplish the same thing.

Since individual deps can be built with the -p flag, like cargo build -p failure, it is almost trivial to read the deps and issue all the individual build commands, wrapped up in a nice cargo build-deps command.

Is there anything obviously wrong with the idea?
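A rough sketch of that idea, reading Cargo.lock directly rather than asking cargo: entries with a source line are treated as external dependencies, and one cargo build -p command is printed for each. The function names are invented for illustration. Assumptions worth stating: the name:version form is taken to be an accepted package ID spec, entries that only apply to other platforms or disabled features may not build, and the commands are printed rather than executed.

```sh
#!/bin/sh
# Hypothetical helper: list "name:version" for every [[package]] entry in a
# Cargo.lock that has a `source` key (local workspace members have none).
external_packages() {
    awk '
        function emit() { if (name != "" && external) print name ":" version }
        # Any table header ends the previous entry; only [[package]] starts one.
        /^\[/ { emit(); name = ""; version = ""; external = 0; in_pkg = ($0 == "[[package]]") }
        in_pkg && /^name = /    { name = $3; gsub(/"/, "", name) }
        in_pkg && /^version = / { version = $3; gsub(/"/, "", version) }
        in_pkg && /^source = /  { external = 1 }
        END { emit() }
    ' "${1:-Cargo.lock}"
}

# Print (do not run) one build command per external package.
print_dep_builds() {
    external_packages "$1" | while IFS= read -r spec; do
        echo "cargo build -p $spec"
    done
}
```

Piping the output of print_dep_builds into sh would run the builds; whether that is faster or more reliable than a single cargo build against stub sources is not something this sketch establishes.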

@siddontang also had the idea to use the --exclude flag to exclude all the local packages, like cargo build --all --exclude tikv --exclude fuzzer-afl etc., which seems relatively easy, and again could be done in a plugin.

I admit though I don't understand the issues with docker layers, and why the workarounds all require building a "fake" source tree.

@brson The problem with Docker layers is one of cache invalidation. You want to separate the layer of seldom changing artefacts (dependencies) from oft-changing artefacts (the root crate) so that you can invalidate just the oft-changing ones without having to re-build everything. If you include the project files in the same layer as the dependencies, you'll end up trashing your cache constantly.

Somewhat similar to the docker use case, we at Standard are looking to make cargo play nicely with our build system sandbox which does not allow network access during build.

In order to avoid unnecessary work we decided to approach this problem by implementing a solution which essentially vendors the dependencies. However, if the only thing vendored are sources for dependencies, then all the crates in the dependency tree would have to be rebuilt every build. To avoid the rebuilds, we could "vendor" the already-built dependencies (essentially caching the build output directory), however without cargo build --dependencies-only that is difficult to achieve.

A while back I commented an example of a Docker workflow without a --dependencies-only flag.

I'd like to note that I've found out that I forgot about build.rs in that example. If there is a build.rs, that also needs to be 'mocked' along with lib.rs and main.rs.

@brson I've just found this, looks a lot like what you've proposed: https://github.com/nacardin/cargo-build-deps

EDIT: it has appeared in this discussion before, and there's been some feedback already.

I found this because I had a Dockerfile that always produced a hello world. rm main.rs as in some examples didn't help either. I now added a touch main.rs before the second build to avoid the problem that the first build is run after the original main.rs was modified.

Which interestingly seemed to work for some time because the first build was cached and thus the main.rs modification time was later.

Does anyone know if this is an issue that is currently being discussed or worked on?

Maybe a status update? Maybe this is fixed by a peripheral tool and I just didn't notice?

I'm not aware of any progress.

This can also be useful to optimize docker build.

Is there a decision or a guide on how to solve this in the meantime in order to have smaller images and not do a build on every change?

@mghz

COPY ./Cargo.toml ./Cargo.lock ./

RUN mkdir src/

RUN echo "fn main() { }" > src/main.rs

RUN cargo build --release

RUN rm ./target/release/deps/project_name*

COPY . .

RUN cargo build --release

Don't forget to change project_name accordingly.

@KalitaAlexey wrote:

Why do you want it?

Because when building on continuous integration server I want to split the whole thing into a reasonable number of steps. This improves readability: I know which step is failing at the first glance, and the output from given step contains less clutter.

Sorry for hijacking the conversation.

While I agree this feature is worth implementing for the simplicity that it'll provide, at least for the CI caching purpose that lots of people are talking about here, wouldn't it be better to use sccache now in this case? Although the Rust support is still experimental, it's become much more mature since this issue was opened in 2016. And compared with Docker, which ditches the whole cache layer if Cargo.toml/lock is modified, sccache is capable of incrementally compiling the dependencies, so you don't have to wait for everything to be rebuilt after a cargo add or cargo update.

Another benefit of using sccache is that it's got various storage options. Once the local storage grows too large and your CI cache downloading/uploading gets slowed down, it's basically hassle-less to migrate to S3/Redis/etc. Not to mention that it's got distributed compilation now which might be useful for CI/CD.

I hope there could be a solution for workspace repos too. Use case:

ADD Cargo.* /app/

ADD rust/display-sim-build-tools/Cargo.* rust/display-sim-build-tools/

ADD rust/display-sim-core/Cargo.* rust/display-sim-core/

ADD rust/display-sim-native-sdl2/Cargo.* rust/display-sim-native-sdl2/

ADD rust/display-sim-opengl-render/Cargo.* rust/display-sim-opengl-render/

ADD rust/display-sim-stub-render/Cargo.* rust/display-sim-stub-render/

ADD rust/display-sim-testing/Cargo.* rust/display-sim-testing/

ADD rust/display-sim-web-error/Cargo.* rust/display-sim-web-error/

ADD rust/display-sim-web-exports/Cargo.* rust/display-sim-web-exports/

ADD rust/display-sim-webgl-render/Cargo.* rust/display-sim-webgl-render/

ADD rust/enum-len-derive/Cargo.* rust/enum-len-derive/

ADD rust/enum-len-trait/Cargo.* rust/enum-len-trait/

RUN echo "Faking project structure." \

&& mkdir -p rust/display-sim-build-tools/src && touch rust/display-sim-build-tools/src/lib.rs \

&& mkdir -p rust/display-sim-core/src && touch rust/display-sim-core/src/lib.rs \

&& mkdir -p rust/display-sim-native-sdl2/src && touch rust/display-sim-native-sdl2/src/lib.rs \

&& mkdir -p rust/display-sim-opengl-render/src && touch rust/display-sim-opengl-render/src/lib.rs \

&& mkdir -p rust/display-sim-stub-render/src && touch rust/display-sim-stub-render/src/lib.rs \

&& mkdir -p rust/display-sim-testing/src && touch rust/display-sim-testing/src/lib.rs \

&& mkdir -p rust/display-sim-web-error/src && touch rust/display-sim-web-error/src/lib.rs \

&& mkdir -p rust/display-sim-web-exports/src && touch rust/display-sim-web-exports/src/lib.rs \

&& mkdir -p rust/display-sim-webgl-render/src && touch rust/display-sim-webgl-render/src/lib.rs \

&& mkdir -p rust/enum-len-derive/src && touch rust/enum-len-derive/src/lib.rs \

&& mkdir -p rust/enum-len-trait/src && touch rust/enum-len-trait/src/lib.rs \

&& mkdir -p rust/display-sim-testing/benches && touch rust/display-sim-testing/benches/whole_sim_bench.rs \

&& echo "fn main() { }" > rust/display-sim-wasm.rs && echo "fn main() { }" > rust/display-sim-default-entrypoint.rs \

&& cargo fetch && cargo --offline build --release

ADD rust/ /app/rust/

RUN cargo --offline test --all \

&& cargo --offline build --release \

&& cargo --offline clean

See up there, that I was forced to create the whole folder structure, with its lib files, bin files and bench files.

My personal take on this problem would be to propose a new sort of lock file that contains the data of all the dependencies of all the workspace members, and a new build option that considers only that single file as input, thus precompiling all the workspace dependencies.

After that, you would be good for using cargo --offline, you would be able to add code changes without having to recompile dependencies in docker builds, and you wouldn't need to maintain those lines in the Dockerfile, which is quite error prone.

Just my two cents, the idea of adding this as a sub-command to cargo (similar to how stack does it) is annoying in at least one way: it means that if I want to do builds using different languages/compilers on the same machine I now have to pre-install all those tools (in addition to Docker) to the build machine. Ideally I'd like to have the build machinery (cargo, rustc, etc.) as part of the throw-away container and know that I can get a (more or less) identical build on a different machine without having to check if the build tool versions are the same.

Also it means that if the CI tool you're using already supports Docker you don't have to worry about it also supporting cargo.

If it's a cargo sub-command, it would want to be a subcommand within the throw-away container, I expect.

Replying to @theypsilon 's https://github.com/rust-lang/cargo/issues/2644#issuecomment-537637178 , here is a shorter example that I think does about the same thing. I'm new to rust, so correct me if I've misused cargo. It's a similar pattern I've used before to work around docker's current copy limitations https://github.com/moby/moby/issues/15858#issuecomment-532016362 . Though this approach is more flexible given arbitrary scripts can be used to condition what breaks the docker cache layer:

# Add prior stage to cache/copy from

FROM rust AS cache

WORKDIR /tmp

# Copy from build context

COPY ./ ./ws

# Filter or glob files to cache upon

RUN mkdir ./cache && cd ./ws && \

find ./ -name "Cargo.*" -o \

-name "lib.rs" -o -name "main.rs" | \

xargs cp --parents -t ../cache && \

cd ../cache && \

find ./ -name "lib.rs" | \

xargs -I% sh -c "echo > %" && \

find ./ -name "main.rs" | \

xargs -I% sh -c "echo 'fn main() { }' > %"

# Continue with build stage

FROM rust as build

WORKDIR /ws

# copy workspace dependency metadata

COPY --from=cache /tmp/cache ./

# fetch and build dependencies

RUN cargo fetch && cargo build

# copy rest of the workspace code

COPY --from=cache /tmp/ws ./

# build code with cached dependencies

RUN cargo build

You may also want to explicitly ignore some files and folders using the .dockerignore, e.g. to avoid copying your large local target folders into the rest of the docker daemon's build context.

The lack of this is incredibly painful for docker builds and it seems (on the surface) to be a relatively simple thing to add to cargo. As per https://github.com/rust-lang/cargo/issues/2644#issuecomment-537637178, copying Cargo.toml around doesn't work if using the workspaces feature. To add to that, it also doesn't work if the Cargo.toml has a [[bin]] specified, because then Cargo errors out when it can't find a file at bin.path.

Personally, I think that Docker should do a better job of enabling build cache tools. But I also believe that this can be fixed more quickly from Cargo's side by adding a --dependencies-only flag.

If I added this to cargo, what are the chances that the patch would be accepted? What are the considerations _against_ adding this flag?

wouldn't it better to use sccache now in this case?

How do you imagine that sccache can be integrated into a docker build? You can't mount volumes or access the network during a docker build; the only option for getting data into the build is to copy the files (and there is no option for getting the data out of a build step, so no way to update sccache).

if you're using dockerkit (DOCKERKIT=1) you can try:

WORKDIR APP

RUN --mount=type=cache,id=cargo,target=/root/.cargo --mount=type=cache,id=target,target=/app/target cargo build --out-dir /app/build

I've not tried this yet, but it should keep downloaded and compiled packages from downloading / compiling again

Thanks, mathroc! I assume you mean DOCKER_BUILDKIT=1? I do think this is now the best way to solve the issue, but it took me a loooong time to go from concept to reality on this.

I learned about DOCKER_BUILDKIT soon after posting my previous comment, and I've pretty much been banging my head against my desk trying to get it to work for the last two days, at least 16 hours of continual banging.

But now, it does work!

The caching incantation is:

RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/cargo \

--mount=type=cache,target=target \

cargo build

I encountered multiple issues before it actually worked.

First, the rust image sets CARGO_HOME to /usr/local/cargo, so of course mounting /root/.cargo does nothing in that base image.

Second, --mount=type=cache,target=${CARGO_HOME} produces a fresh volume on every build, so you have to manually expand that to /usr/local/cargo. This seems like just due to immaturity in the buildkit backend.

Third, you can't cache just the parts of $CARGO_HOME suggested here, or else you'll get a fingerprinting issue and the target/ artifacts will rebuild even if you've exposed the previous artifacts through the second cache mount.

Oof, that took a lot of time to figure out. But it's so, so worth it.

I just skimmed this thread and didn't see https://github.com/denzp/cargo-wharf mentioned. That seems to be the correct approach for improved caching with docker.

wouldn't it better to use sccache now in this case?

How do you imagine that sccache can be integrated into a docker build? You can't mount volumes or access the network during a docker build; the only option for getting data into the build is to copy the files (and there is no option for getting the data _out_ of a build step, so no way to update sccache).

Of course you can access the network in a Docker build. That's how packages and stuff are installed.

Here's a full (!), working example using sccache. For multiple binaries/libraries, you'd have to create multiple dummy lib.rs/main.rs files.

Dockerfile:

FROM rust:1.37.0-buster as builder

WORKDIR /usr/src

RUN mkdir server

WORKDIR /usr/src/server

# Build dependencies as separately cached Docker step

# (not built into cargo: https://github.com/rust-lang/cargo/issues/2644)

RUN apt-get update \

&& apt-get install -y --no-install-recommends --no-install-suggests \

cmake=3.13.4-1 $(: "Needed for grpcio-sys build") \

&& apt-get clean \

&& rm -rf /var/lib/apt/lists/*

ARG USE_SCCACHE

ARG SCCACHE_VERSION

ENV SCCACHE_VERSION=${SCCACHE_VERSION:-0.2.12}

RUN \

if [ "${USE_SCCACHE}" = 1 ]; then \

set -x ;curl -fsSLo /tmp/sccache.tgz "https://github.com/mozilla/sccache/releases/download/${SCCACHE_VERSION}/sccache-${SCCACHE_VERSION}-x86_64-unknown-linux-musl.tar.gz" \

&& mkdir /tmp/sccache \

&& tar -xzf /tmp/sccache.tgz -C /tmp/sccache --strip-components 1; \

fi

COPY server/Cargo.lock server/Cargo.toml ./

RUN sed -i'' 's/build = "build.rs"/## no build.rs yet/' Cargo.toml

RUN mkdir src && echo 'fn main(){println!("Dummy");}' >src/main.rs

ARG SCCACHE_REDIS

RUN if [ "${USE_SCCACHE}" = 1 ]; then export RUSTC_WRAPPER=/tmp/sccache/sccache; fi \

&& cargo build --release

# keep dummy main.rs for later

RUN rm Cargo.toml

# Application dependencies

RUN apt-get update \

&& apt-get install -y --no-install-recommends --no-install-suggests \

cmake=3.13.4-1 \

protobuf-compiler \

&& apt-get clean \

&& rm -rf /var/lib/apt/lists/*

# Build build.rs dependencies (such as protoc-grpcio) and generate code from protos

COPY server/build.rs server/Cargo.toml ./

RUN mkdir ../proto

COPY proto/ ../proto/

RUN mkdir src/grpc

RUN if [ "${USE_SCCACHE}" = 1 ]; then export RUSTC_WRAPPER=/tmp/sccache/sccache; fi \

&& cargo build --release

RUN rm src/main.rs

COPY server/ ./

# Enforce re-building the source file

RUN touch src/main.rs

RUN if [ "${USE_SCCACHE}" = 1 ]; then export RUSTC_WRAPPER=/tmp/sccache/sccache; fi \

&& cargo build --release

FROM debian:buster-20190812

ENV DEBIAN_FRONTEND=noninteractive

# hadolint ignore=DL3008,SC2006,SC2046

RUN apt-get update \

&& apt-get install -y --no-install-recommends \

ca-certificates \

libssl-dev \

\

`: "Install debugging tools"` \

curl \

net-tools \

vim-tiny \

&& apt-get clean \

&& rm -rf /var/lib/apt/lists/*

COPY --from=builder /usr/src/server/target/release/supercoolapp /supercoolapp

CMD ["/supercoolapp"]

And in reasonable places of my bashrc:

# Start Redis LRU cache (make this run only once, e.g. through an alias!)

pkill redis-server && sleep 5; redis-server --protected-mode no --dir ~/ --dbfilename .sccache-redis-dump.rdb --save 900 1 --maxmemory 200mb --maxmemory-policy allkeys-lru

# Set sccache Redis host to my laptop's IP which is reachable via local Docker build

SCCACHE_REDIS="redis://$(ifconfig en0 | grep "inet " | awk '{print $2}')"

export SCCACHE_REDIS

This should only differ by the IP/host of the Redis server.

Thanks, mathroc! I assume you mean DOCKER_BUILDKIT=1? I do think this is now the best way to solve the issue, but it took me a loooong time to go from concept to reality on this.

@masonk, yes, that’s the one :+1:

thx for doing the headbanging and for sharing the results !

Second, --mount=type=cache,target=${CARGO_HOME} produces a fresh volume on every build, so you have to manually expand that to /usr/local/cargo. This seems like just due to immaturity in the buildkit backend.

adding id=something to the --mount might solve that. I'm really unsure, again I’ve not tried yet

In case someone is wondering how to make the cache work with DOCKER_BUILDKIT, see this example here: https://stackoverflow.com/a/55153182/2302437

Specifically:

- Make sure you have the latest Docker (19+).

- Enable experimental syntax:

# syntax=docker/dockerfile:experimental

- Use this line in your Dockerfile to cache download/build:

RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/cargo \
    --mount=type=cache,target=target \
    cargo build --release

This is pretty good, thx @masonk

Just a note: I just spent over an hour debugging something that turned out to be a very stupid little thing in the end. I needed to run touch src/main.rs to ensure a fresh build; otherwise the "placeholder main.rs" got built instead, as mentioned earlier in this thread.

I think something should be done for this issue.

I think it's even questionable that Cargo apparently checks only whether the file is newer than the old one. It should just check whether it has a different timestamp, and even do more involved fingerprinting (a quick checksum) for the main file.
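For readers who have not seen the placeholder trick referred to above, here is a minimal sketch of it. It assumes a binary crate whose entry point is src/main.rs and a Cargo.lock checked in next to Cargo.toml; the /app path is only illustrative. It is one way to arrange the layers, not an official recipe.

```dockerfile
FROM rust:latest
WORKDIR /app

# Copy only the manifests, so this layer and the next one are reused
# until Cargo.toml or Cargo.lock change.
COPY Cargo.toml Cargo.lock ./

# Build the dependencies against a placeholder entry point
# (assumption: the crate is a binary with src/main.rs).
RUN mkdir src \
    && echo "fn main() {}" > src/main.rs \
    && cargo build --release

# Bring in the real sources.
COPY src ./src

# The copied main.rs may carry an mtime older than the placeholder build,
# so bump it to make Cargo rebuild the crate itself.
RUN touch src/main.rs && cargo build --release
```

Without the touch in the last step, Cargo can consider the placeholder build still fresh, which is the hour of debugging described above.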

I am trying to get a minimal version of this to work in accordance with @benmarten's comment.

This is my project:

❯ tree project

project

├── Cargo.toml

└── src

    └── lib.rs

This is my Cargo.toml:

[package]

name = "project"

version = "0.1.0"

authors = ["Ethan Brooks <[email protected]>"]

edition = "2018"

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

[dependencies]

rand = "0.7.3"

This is my Dockerfile:

# syntax=docker/dockerfile:experimental

FROM rustlang/rust:nightly

COPY project/ /root/project/

RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/cargo

\ --mount=type=cache,target=target \ cargo build --manifest-path /root/project/Cargo.toml

When I run I get

❯ DOCKER_BUILDKIT=1 docker build -t project .

[+] Building 5.1s (4/4) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 34B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 312B 0.0s

=> resolve image config for docker.io/docker/dockerfile:experimental 4.8s

=> CACHED docker-image://docker.io/docker/dockerfile:experimental@sha256:787107d7f7953cb2d95e 0.0s

failed to solve with frontend dockerfile.v0: failed to solve with frontend gateway.v0: rpc error: code = Unknown desc = Dockerfile parse error line 6: unknown instruction: \

@ethanabrooks your Dockerfile has a syntax error, and it's mentioned at the very end of the last line of output. Your line begins with a backslash \. It looks like that backslash should actually be at the end of the previous line.

Right. Thanks for the correction. The working version of the Dockerfile is

# syntax=docker/dockerfile:experimental

FROM rustlang/rust:nightly

COPY project/ /root/project/

RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/cargo \

--mount=type=cache,target=target \

cargo build --manifest-path /root/project/Cargo.toml

The problem that I am having is that if I make a change to src/lib.rs my Dockerfile still rebuilds the rand dependency. Or at least it appears to:

❯ DOCKER_BUILDKIT=1 docker build -t project .

[+] Building 5.1s (9/10)

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 37B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 34B 0.0s

=> resolve image config for docker.io/docker/dockerfile:experimental 0.7s

=> CACHED docker-image://docker.io/docker/dockerfile:experimental@sha256:787107d7f7953cb2d95e 0.0s

=> [internal] load metadata for docker.io/rustlang/rust:nightly 0.0s

=> CACHED [stage-0 1/3] FROM docker.io/rustlang/rust:nightly 0.0s

=> CACHED FROM docker.io/library/rust:latest 0.0s

=> => resolve docker.io/library/rust:latest 0.3s

=> [internal] load build context 0.0s

=> => transferring context: 321B 0.0s

=> [stage-0 2/3] COPY project/ /root/project/ 0.0s

=> [stage-0 3/3] RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/c 4.1s

=> => # Compiling getrandom v0.1.14

=> => # Compiling cfg-if v0.1.10

=> => # Compiling ppv-lite86 v0.2.6

=> => # Compiling rand_core v0.5.1

=> => # Compiling rand_chacha v0.2.2
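One possible cause, not confirmed anywhere in this thread: the second cache mount uses the relative path target, while cargo build --manifest-path /root/project/Cargo.toml writes its artifacts to /root/project/target, so the compiled dependencies may never land in the cached directory. A sketch that removes the ambiguity by setting the working directory and using an absolute mount path (everything else is kept as in the Dockerfile above):

```dockerfile
# syntax=docker/dockerfile:experimental
FROM rustlang/rust:nightly

# Run cargo from the project root so no --manifest-path is needed.
WORKDIR /root/project
COPY project/ /root/project/

# Assumption: the mount target must be the directory Cargo really writes to,
# so it is spelled out as an absolute path here.
RUN --mount=type=cache,target=/usr/local/cargo,from=rust,source=/usr/local/cargo \
    --mount=type=cache,target=/root/project/target \
    cargo build
```

If the dependencies still recompile after a change to src/lib.rs with this layout, the cause lies elsewhere.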

Hi, is there any progress with this issue? @alexcrichton @ehuss We should focus on a permanent solution and not on the workarounds presented in this thread. This issue is a huge blocker, and as it's almost 4 years old, it sends a negative message to anyone wanting to start with Rust these days. Is there any plan / roadmap? Can I help somehow? Is there anything blocking this issue from being resolved?

@elmariofredo It's not clear to me what you are asking for. It seems like this issue is mostly focused on "how to pre-cache in Docker", and AFAIK there isn't a single clear answer to that. Are you trying to cache with Docker? Does Docker Buildkit not work for you?

You can help by creating a project that solves the problem. It looks like several people have done that, but perhaps they don't fit your needs? It seems unlikely there will be a one-size-fits-all solution, but if there is general guidance, some documentation on how to properly use Docker sounds useful.

AFAICT, the original request of a --dependencies-only flag wouldn't help with Docker. If it would, it would help to write a clear design document of how that would work.

@ehuss I don't agree with this. A --dependencies-only would solve 99% of all docker caching issues.

This would be the minimal possible Dockerfile, if we had some way of expressing it.

FROM rust:latest

WORKDIR /mything

COPY Cargo.toml Cargo.lock /mything/

RUN cargo build-deps --release # creates a layer that is cached

COPY src /mything/src

RUN cargo build --release # only rebuild this when src files changes

CMD ./target/release/mything

And it would work great with docker layer caching since on src changes, only the last RUN would have to run.

AFAICT, the original request of a --dependencies-only flag wouldn't help with Docker. If it would, it would help to write a clear design document of how that would work.

It would help with docker's layer caching

It's also relevant for pre-caching for packaging. For instance, to package a .deb, we need to prepare a complete source tree to package and ship it as "source package", and do a dedicated build step to compose a "binary package". FYI, debian is currently packaging things differently, with custom logic to prepare the source packages, but, in general, it'd be extremely useful to be able to pre-download dependencies. Docker packaging is not the only use case.

To answer the caching question:

Right now this is common:

Step 5/14 : COPY . .

---> b5f0526a3fdd

Step 6/14 : RUN cargo build --release

---> Running in 8a7eef036c01

Updating crates.io index

Downloading crates ...

Downloaded serde_json v1.0.48

Downloaded log v0.4.8

Downloaded rand v0.7.3

Downloaded failure v0.1.7

Downloaded serde v1.0.104

Downloaded reqwest v0.10.4

Downloaded lazy_static v1.4.0

[... a long time later ...]

Finished release [optimized] target(s) in 31m 37s

Removing intermediate container 8a7eef036c01

---> 4d6f1f8935fb

Now if we touch _any_ file and ask docker to build a container, cargo is going to 1) redownload the whole index and 2) recompile all dependencies:

Step 5/14 : COPY . .

---> 6427ed267359

Step 6/14 : RUN cargo build --release

---> Running in 8d9ca24ca7

Updating crates.io index

Downloading crates ...

Downloaded serde_json v1.0.48

Downloaded log v0.4.8

Downloaded rand v0.7.3

Downloaded failure v0.1.7

Downloaded serde v1.0.104

Downloaded reqwest v0.10.4

Downloaded lazy_static v1.4.0

[... a long time later ...]

Finished release [optimized] target(s) in 31m 37s

Removing intermediate container 8d9ca24ca7

---> 227d4df84225

Instead I'd like to do something like this:

Step 5/14 : COPY Cargo.toml Cargo.lock .

---> 6427ed267359

Step 6/14 : RUN cargo build --release --dependencies-only

---> Running in 8d9ca24ca7

Updating crates.io index

Downloading crates ...

Downloaded serde_json v1.0.48

Downloaded log v0.4.8

Downloaded rand v0.7.3

Downloaded failure v0.1.7

Downloaded serde v1.0.104

Downloaded reqwest v0.10.4

Downloaded lazy_static v1.4.0

[... a long time later ...]

Finished release [optimized] target(s) in 31m 37s

Removing intermediate container 8d9ca24ca7

---> 227d4df84225

Step 7/14 : COPY . .

---> 6427ed267359

Step 8/14 : RUN cargo build --release

---> Running in 8d9ca24ca7

Finished release [optimized] target(s) in 3m 37s

Removing intermediate container 8d9ca24ca7

---> 227d4df84225

And after a minor edit to src/lib.rs building the container should look like this:

Step 5/14 : COPY Cargo.toml Cargo.lock .

---> Using cache

---> 6427ed267359

Step 6/14 : RUN cargo build --release --dependencies-only

---> Using cache

---> 227d4df84225

Step 7/14 : COPY . .

---> 6427ed267359

Step 8/14 : RUN cargo build --release

---> Running in 8d9ca24ca7

Finished release [optimized] target(s) in 3m 37s

Removing intermediate container 8d9ca24ca7

---> 227d4df84225

Most of the dockerfiles posted to achieve this don't look very maintainable and pulling 3rd party binaries that implement this is not possible due to security policies in a lot of companies. This should "just work" with a stock rust docker image.
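Until something like --dependencies-only exists, the download half of this can at least be cached with stock Cargo, using the cargo fetch command mentioned near the top of the thread. A minimal sketch, assuming a binary crate and a committed Cargo.lock; the placeholder file is there because Cargo generally refuses to load a manifest that has no targets. The /app path is illustrative. This caches downloads only and does nothing for compile time.

```dockerfile
FROM rust:latest
WORKDIR /app

# Only the manifests: this layer is invalidated only when they change.
COPY Cargo.toml Cargo.lock ./

# Download (but do not compile) every dependency listed in Cargo.lock.
# The placeholder main.rs only exists so the manifest can be loaded.
RUN mkdir src \
    && echo "fn main() {}" > src/main.rs \
    && cargo fetch --locked

# The real sources overwrite the placeholder.
COPY . .

# Dependencies still compile here, but nothing has to be downloaded again.
RUN cargo build --release --locked
```

Since the placeholder is never compiled in this variant, no touch is needed before the final build.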

@ehuss I have tried to create a Pre-RFC here: https://internals.rust-lang.org/t/pre-rfc-allow-cargo-build-dependencies-only/11987 Please let me know if you have any suggestions on how to improve it; it's my first Pre-RFC 😉

Thanks for the answers from @lolgesten, @NilsIrl, @MOZGIII and @kpcyrd. Given the speed and number of responses, I see there is a real need for this to be solved. Please feel free to post on the Pre-RFC thread to support it or add more information. Thanks!

It seems like with that design, any change to Cargo.toml or Cargo.lock would completely invalidate the cache. Is that not a problem for you? In most projects I've been involved with, those files change fairly regularly. How would it work with a workspace? How would it handle path dependencies?

@ehuss personally I would have no problem with Cargo.toml/lock changes causing a cache invalidation. That is perfectly symmetric with how changes to package.json/lock affect nodejs builds.

@ehuss

those files change fairly regularly.