Caffe: Sparse convolutional neural networks

Is anyone interested in utilizing sparsity to accelerate DNNs?

I am working on the fork https://github.com/wenwei202/caffe/tree/scnn and currently achieve, on average, ~5x CPU and ~3x GPU layer-wise speedups for the convolutional layers of AlexNet using off-the-shelf GEMM (after ~2% top-1 accuracy loss).

http://papers.nips.cc/paper/6504-learning-structured-sparsity-in-deep-neural-networks.pdf


@wenwei202 could you explain a bit further how to use your fork? Any example? I have convolution layers where 90% of the weights are zero. If I use your version of Caffe, will the computations automatically take advantage of this sparsity? If I use a dense matrix, will the computations be slower, or will it use the normal way of computing? Thanks for sharing your work :+1:

@jpiabrantes You can use conv_mode in each conv layer to indicate which method is used to do the computation.

e.g.

layer {

name: "conv2"

type: "Convolution"

bottom: "norm1"

top: "conv2"

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

conv_mode: LOWERED_CSRMM # sparse weight matrix in CSR format * lowered feature maps

# conv_mode: LOWERED_GEMM # default original matrix multiplication

}

}
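As a rough illustration of what a CSR-based mode computes, here is a plain-Python sketch. It is not the fork's MKL or GPU code path, and the helper names `dense_to_csr` and `csr_matmul` are hypothetical. The sparse weight matrix is stored as non-zero values, column indices, and row pointers, then multiplied by the dense lowered feature matrix:

```python
def dense_to_csr(matrix):
    """Convert a dense row-major matrix (list of lists) to CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matmul(values, col_idx, row_ptr, dense):
    """Multiply a CSR matrix by a dense matrix, touching only non-zero weights."""
    n_cols = len(dense[0])
    out = []
    for r in range(len(row_ptr) - 1):
        acc = [0.0] * n_cols
        for k in range(row_ptr[r], row_ptr[r + 1]):
            w, src = values[k], dense[col_idx[k]]
            for c in range(n_cols):
                acc[c] += w * src[c]
        out.append(acc)
    return out


# Toy sparse weight matrix (3 filters) and lowered feature matrix (3 x 2).
weights = [[0.0, 2.0, 0.0],
           [0.0, 0.0, 0.0],
           [1.0, 0.0, 3.0]]
features = [[1.0, 2.0],
            [3.0, 4.0],
            [5.0, 6.0]]
csr = dense_to_csr(weights)
result = csr_matmul(*csr, features)
```

The inner loop over `col_idx` reads feature rows in an irregular order, which is the kind of memory access pattern that limits the speedup discussed later in the thread.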

Thanks

I just tested on the Lenet network for the MNIST example. I was able to achieve the following sparse layers:

conv1 is 75.4 percent sparse

conv2 is 94.7 percent sparse

ip1 is 74.5 percent sparse

ip2 is 89.5 percent sparse

I used conv_mode: LOWERED_CSRMM and connectivity_mode: DISCONNECTED_GRPWISE. I used the GPU and the sparse network was not faster; sometimes it was even slower. My batch size is 1.

@jpiabrantes In CPU mode, you need to use MKL. LOWERED_CSRMM is only implemented with MKL's sparse BLAS, since OpenBLAS and ATLAS do not support sparse BLAS.

@wenwei202 I used the GPU mode.

@jpiabrantes It is normal to achieve very limited 'speedup' on GPU even if you have sparsity higher than 90%, because the GPU is highly parallel and an irregular sparsity pattern hurts performance. I am working on structured sparsity to achieve speedup on GPU.

@wenwei202 I am not able to complete compilation. 'make runtest' fails.

@RupeshD @wenwei202

When running make runtest, use ATLAS instead of MKL (MKL seems to have problems passing some test cases), and export the following variable if you have more than one GPU:

export CUDA_VISIBLE_DEVICES=0 # use one GPU

To stabilize the sparsity during training, I zero out weights whose absolute values are smaller than 0.0001 after each weight update. So the precision of RMSPropSolverTest may not be enough to pass the test. You can comment out the following code if you do not want to zero out weights (but zeroing out is recommended during training to stabilize the sparsity).

template <typename Dtype>

void Net<Dtype>::Update() {

for (int i = 0; i < learnable_params_.size(); ++i) {

learnable_params_[i]->Update();

learnable_params_[i]->Zerout(); //comment this if you do not want to zerout.

}

}
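The effect of that extra pass can be sketched in a few lines of Python. This is only an illustration of the thresholding idea, not the fork's Blob code; the function names and the plain SGD update are assumptions made for the example:

```python
ZERO_THRESHOLD = 0.0001  # threshold quoted in the comment above


def zero_out(weights, threshold=ZERO_THRESHOLD):
    """Return a copy of weights with near-zero entries clamped to exactly 0."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def sgd_update_with_zero_out(weights, grads, lr):
    """One plain SGD step followed by the zero-out pass (hypothetical helper)."""
    updated = [w - lr * g for w, g in zip(weights, grads)]
    return zero_out(updated)


# The second weight drops below the threshold after the step and is clamped.
new_weights = sgd_update_with_zero_out([0.5, 0.00015, -0.2], [1.0, 1.0, 0.0], lr=0.0001)
```

Because the clamp changes weights after every update, a test that compares against an exact reference update (such as a solver test) can plausibly fail by a small margin.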

The only failed (crashed) test case is "TYPED_TEST(ConvolutionLayerTest, Test0DConvolution)" of https://github.com/wenwei202/caffe/blob/scnn/src/caffe/test/test_convolution_layer.cpp#L311.

And I don't know why. If you guys can figure it out, that would be great. Temporarily, I commented out the code within that test and passed all other test cases. Test0DConvolution is not used for usual 2D or 3D convolution, so it might not be a concern.

Hope this helps.

-Wei

@wenwei202

Hello, I think you have implemented Liu's CVPR Sparse Convolutional Neural Networks paper. But in your fork caffe_scnn (https://github.com/wenwei202/caffe/tree/scnn), I can't find any procedure that implements it. (I know you implemented group_lasso and so on, but how does your code implement the methods described in Liu's paper? Can you give me a simple tutorial?)

Thank you in advance.

@wenwei202

Besides, I can see you reference 'models/eilab_reference_sparsenet/deploy_scnn.prototxt' and so on in some Python files, but I can't find any of them. How can I generate them, or where can I find them?

@zhaishengfu The implementation was abandoned. It can hardly achieve good speedup unless the sparse weights are hardcoded in the source code, as the paper did. I didn't try hardcoding weights, but you are free to try if you are interested. What the paper did was convert each conv layer to three small layers. You can use this to generate the equivalent net prototxt and this to generate the corresponding decomposed caffemodel. But the code is deprecated.

@wenwei202 Thank you for your reply. But I don't understand what you mean by 'hardcoded'. I didn't see anything describing it in the paper. As I understand it, you can get a speedup as long as your network is sparse and you implement the sparse-dense matrix multiplication described in the paper. Am I wrong?

@zhaishengfu Please refer to section 4 in the paper, e.g. "Therefore, the location of non-zero elements are known and can be encoded directly in the compiled multiplication code." The reproduction of that work was abandoned because of that tricky scheme. Our speedup is achieved by structured sparsity, which overcomes the irregular memory access pattern caused by the random distribution of sparse weights in memory. Hopefully, we can release our related paper soon.

@wenwei202 Thank you very much. Really looking forward to your paper. Can you let me know when you release your paper? (Or can you tell me the name of your paper?)

Hi @zhaishengfu @jpiabrantes @RupeshD @pluskid @sergeyk , our paper related to this caffe fork has just been accepted by NIPS 2016. You are welcome to contribute, in case you still have interest in sparse convolutional neural networks. [[paper](http://arxiv.org/abs/1608.03665)] [[Github code](https://github.com/wenwei202/caffe/tree/scnn) ]

@wenwei202 Thank you very much!! I will read it carefully!! I really enjoy your contribution to this fork

@wenwei202 Hello, I have looked through your paper and code roughly. Is the code the same as your original code? I didn't see any difference (or maybe I should look more carefully).

Besides, I have used your original code to train my model (a regression problem). It is useful, but I lose some accuracy. And if I set the learning rate above 10^-5, the loss goes to "nan", so I can only set it to a small number, and convergence is very slow...

@zhaishengfu Please use the scnn fork; I have updated the tutorial. Hope that will help.

@wenwei202 OK, indeed I have used your code already. I used all of your related parameters to generate my prototxt as follows. I see that you don't use tensor decomposition.

layer {

name: "conv1_1"

type: "Convolution"

bottom: "image"

top: "conv1_1"

param {

lr_mult: 1

decay_mult: 1

breadth_decay_mult: 1.0

kernel_shape_decay_mult: 1.0

block_group_lasso {

xdimen: 9

ydimen: 64

block_decay_mult: 1.0

}

regularization_type: "L1"

}

param {

lr_mult: 1

decay_mult: 1

breadth_decay_mult: 0.0

kernel_shape_decay_mult: 0.0

regularization_type: "L1"

}

connectivity_mode: DISCONNECTED_ELTWISE

convolution_param {

num_output: 64

bias_term: true

pad: 1

kernel_size: 3

group: 1

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

}

}

}

For the setting:

block_group_lasso {

xdimen: 9

ydimen: 64

block_decay_mult: 1.0

}

What's the meaning of 9*64? Does it mean that it will reserve 9*64 groups of weights and zero out the others?

@hiyijian The code states it clearly: xdimen and ydimen represent the column and row dimensions of a block, respectively. For example, if you have A rows and ydimen is B, then you will have A/B groups along the row dimension, and the regularization is applied to each group.
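To make the block layout concrete, the following plain-Python sketch tiles a weight matrix into ydimen-by-xdimen blocks and sums the L2 norm of each block, which is the unscaled group Lasso term. The function names are hypothetical, and the sketch assumes the matrix dimensions divide evenly by the block dimensions:

```python
import math


def block_groups(n_rows, n_cols, xdimen, ydimen):
    """Yield (row_start, col_start) for every ydimen-by-xdimen block."""
    for r in range(0, n_rows, ydimen):
        for c in range(0, n_cols, xdimen):
            yield r, c


def group_lasso_penalty(matrix, xdimen, ydimen):
    """Sum of the L2 norms of all blocks (the group Lasso term, before scaling)."""
    total = 0.0
    for r, c in block_groups(len(matrix), len(matrix[0]), xdimen, ydimen):
        sq = sum(matrix[i][j] ** 2
                 for i in range(r, r + ydimen)
                 for j in range(c, c + xdimen))
        total += math.sqrt(sq)
    return total


# A 2 x 4 toy matrix split into 1 x 2 blocks gives 4 groups; only one is non-zero.
w = [[3.0, 4.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 0.0]]
penalty = group_lasso_penalty(w, xdimen=2, ydimen=1)
n_groups = len(list(block_groups(2, 4, xdimen=2, ydimen=1)))
```

With A rows and ydimen = B there are A/B blocks along the rows, and likewise along the columns with xdimen, so the total number of groups is the product of the two.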

Thanks. Clear now.

Is there any guide to set proper xdimen and ydimen in order to achieve better performance in accuracy and speed?

@hiyijian Indeed, I also want to know the answer. In my training trials (my problem is regression, not classification), when the sparsity gets above about 60%, the accuracy decreases noticeably. I think the configuration of xdimen and ydimen depends on your network and problem. Maybe you can set the configuration as the paper says (for example, xdimen equal to the number of columns of your convolution kernel and ydimen equal to the number of rows of your convolution kernel).

Thank you @zhaishengfu

Maybe the network could be fine-tuned without SSL to regain the accuracy, as the paper reports. I will give it a try.

@zhaishengfu @hiyijian The settings of xdimen and ydimen depend on what kind of structured sparsity you want. For example, if a weight matrix with many all-zero columns is expected, then xdimen = 1 and ydimen = the number of rows. For the trade-off between accuracy and sparsity, please train the network without SSL first to get the baseline, then train it with SSL, and finally fine-tune it without SSL. Make sure your training converges well at every phase.

Thanks @wenwei202. It helps a lot.

You introduce 5 ways for group lasso:

1、filter-wise and channel-wise

2、shape-wise

3、depth-wise

4、2D-filter-wise

5、filter-wise and shape-wise

Could you make it clearer how to put each of them into practice via xdimen/ydimen?

Say we have a typical conv layer with nfilter * nchannel * nHeight * nWidth = 128 * 64 * 3 * 3

1、filter-wise and channel-wise: xdimen = 9 and ydimen >= 1

2、shape-wise: xdimen != 9 and ydimen = 0

3、depth-wise: no idea

4、2D-filter-wise: xdimen = 9 and ydimen = 1

5、filter-wise and shape-wise: xdimen != 9 and ydimen >= 1

Did I do anything obviously stupid?

@hiyijian In your example, as a filter is reshaped as a row in the weight matrix in Caffe, the setups would be:

filter-wise: xdimen=64x3x3, ydimen=1

channel-wise: xdimen=3x3, ydimen=128

shape-wise: (1,128)

depth-wise: (64x3x3, 128)

2d-filter-wise: (3x3,1)

You can set up multiple block_group_lasso as you want.
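The (xdimen, ydimen) pairs listed above can be checked by counting how many groups each one produces for the 128 x 64 x 3 x 3 layer. This is a small sketch with hypothetical names; it only does the arithmetic implied by the reply (one row per filter, 64*3*3 columns):

```python
# Conv layer from the question: 128 filters, 64 channels, 3x3 kernels.
N_FILTERS, N_CHANNELS, K_H, K_W = 128, 64, 3, 3
ROWS = N_FILTERS                      # one row per filter
COLS = N_CHANNELS * K_H * K_W         # 576 weights per filter

# (xdimen, ydimen) pairs as listed in the reply above.
SETUPS = {
    "filter-wise": (COLS, 1),
    "channel-wise": (K_H * K_W, ROWS),
    "shape-wise": (1, ROWS),
    "depth-wise": (COLS, ROWS),
    "2d-filter-wise": (K_H * K_W, 1),
}


def count_groups(xdimen, ydimen, rows=ROWS, cols=COLS):
    """Number of ydimen-by-xdimen blocks tiling the rows-by-cols matrix."""
    return (rows // ydimen) * (cols // xdimen)


group_counts = {name: count_groups(x, y) for name, (x, y) in SETUPS.items()}
for name, n in group_counts.items():
    print(f"{name:>15}: {n} groups")
```

The counts match the intended structures: one group per filter, per input channel, per column of the weight matrix, per layer, and per 2D kernel, respectively.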

@wenwei202 Cool. I got the point. Thanks

@wenwei202 clear

@wenwei202 Hello, I have used your method and trained the model to 90% sparsity. But when I run the model, I don't see any acceleration. I have set the following parameter

conv_mode: LOWERED_CSRMM

and I compiled Caffe with MKL. What do you think the problem is?

@zhaishengfu see https://github.com/wenwei202/caffe/blob/scnn/README.md#notes and try gpu mode in LOWERED_CCNMM

@wenwei202 I tried all three methods, and their speed is almost the same (0.22s). My network is almost all 3x3 convolutions and has 32 such layers, with no groups. But because my MKL can't compile well with your Caffe, I changed the MKL const variables to normal variables (that is, I removed the

const int M and so on to become

int M)

void caffe_cpu_sparse_dense2csr

float* A,

float* A_nonzero_buf, int* A_nonzero_idx_buf, int* A_idx_pointer_buf){

but I think this is not the reason for the lack of speedup. I have no idea. My GPU is an NVIDIA GTX 770.

@zhaishengfu try to profile and locate the bottleneck.

@wenwei202 I found a really strange problem. When I use LOWERED_CSRMM and LOWERED_CCNMM, the speed is slower than LOWERED_GEMM (and LOWERED_CCNMM is far slower!). I ran the same model as described previously under 4 environments; the average times are below (the average time is the total time of Caffe forward()):

- cuda 7.5+ cudnn-v4-----------------------average time: 0.22s

- cuda 7.5 + LOWERED_GEMM(gpu mode)-----------------------average time: 0.18s

- cuda 7.5 + LOWERED_CCNMM(gpu mode)---------------------average time: 2.3s

- cuda 7.5 + LOWERED_CSRMM(gpu mode)--------------------------average time: 0.24s

But when I look at the convolution time of each layer, it is strange! The per-layer times are totally inconsistent with the overall time of the network: CCNMM is the fastest and CSRMM is the slowest, as depicted by the following picture. I tested the network multiple times, so you can see the regular time pattern. The x axis is my different convolution layers, and the y axis is the time of each layer in microseconds (us).

I will dig deeper into why this happens. If you have any ideas, please tell me!

@wenwei202 I read another paper of yours titled "Holistic SparseCNN: Forging the Trident of Accuracy, Speed, and Size". It seems that the direct sparse convolution technique introduced in this paper has already been integrated into scnn (by using DIRECT_SCONV).

The following is unclear to me:

1、“When sparsity is too high, direct sparse convolution becomes bandwidth bound, which leads to diminishing returns on the performance acceleration”. Why's that?

2、When should we use DIRECT_SCONV?

@hiyijian Please use our intel branch for the paper "Holistic SparseCNN", which is mainly contributed by @jspark1105. And I guess the sparsity is defined as the ratio of nonzeros in that paper.

@zhaishengfu Some comments:

- To profile, use deploy.prototxt in Python and use train_val.prototxt in caffe time ...; otherwise, there might be some bugs in original Caffe.

- cuDNN is only for training; testing is profiled in the Caffe default engine (cuBLAS).

- https://github.com/wenwei202/caffe/blob/scnn/README.md#notes

@wenwei202 I used cuBLAS in GPU mode and disabled cuDNN, but the timing is still strange. I used caffe time and only measured the forward() time: GEMM is the fastest, at only 100ms, while CSRMM and CCNMM are both about 1200ms. So what do you think the problem is? (By the way, I tested the sparse model in normal Caffe (not yours) and found that in GEMM GPU mode your Caffe takes about 100ms and original Caffe about 130ms.)

@wenwei202

Does the scnn branch support DIRECT_SCONV mode? I noticed a DIRECT_SCONV mode in caffe.proto.

I failed to compile the intel branch. My GNU g++ could not recognize the "-xhost" flag. I think it is hardware related. So what are the requirements for the CPU?

@hiyijian it is not fully supported by scnn. You need intel compiler to use intel branch. Please ask Jongsoo Park for more details

@hiyijian If you're interested in DIRECT_SCONV, please try https://github.com/IntelLabs/SkimCaffe . We've created a new git repository for direct sparse convolution (also described in https://arxiv.org/abs/1608.01409)

@wenwei202 I want to know whether net_pruner.py in your Caffe is used to extract the non-zero weights for further use. The code you wrote is hard for me to understand because it lacks comments.

@Paseam No, that's not the one. You can use connectivity_mode to disconnect zero-weighted connections.

@wenwei202 When I'm testing ConvolutionLayerTest/1.TestGradient3D on CPU, there is always such an error: /test/test.testbin': malloc(): memory corruption: 0x000000000e48a3a0 *** . Do you have any idea about the possible problem? (I'm using Openblas) Thanks in advance!

@knsong Please see the previous comment about make runtest. I did not have the TestGradient3D issue. If you figure it out, please let us know. Thanks.

@wenwei202 In section 4.2, "Group Lasso regularization is only enforced on the convolutional layers between each pair of shortcut endpoints, excluding the first convolutional layer and all convolutional shortcuts." What do you mean "the convolutional layers between each pair of shortcut endpoints"? Could you give me an example? Thanks a lot!

For example, pool1->res1a->bn->res1b->bn->res1 (eltwise: res1b+pool1)-> res2a->bn->... In this example, which convolutional layer/layers we should enforce the group lasso regularization?

@wenwei202 In the ResNet-20 experiment, after group Lasso regularization is enforced and SSL converges, does the error drop or not compared to the original 8.82% (I know the final is 7.40%)? When you fine-tuned, did you freeze the connectivity? Thank you very much!

In this experiment, I was wondering which step, ssl step or fine-tune step, contributes more for the improvement. Of course, I think it is ssl, but how?

@xiyuyu We have an example of resnet. We use 1x1 conv layers as the shortcuts when the dimensions of feature maps do not match, those conv shortcuts are not enforced by group lasso. Moreover, the first conv layer is not between any shortcut, so it is not reasonable to add group lasso on this layer to remove it. The SSL step is essential to learn structured sparsity, and fine-tuning process is the step to recover accuracy a bit by disconnecting zero weights (DISCONNECTED_GRPWISE in the case of resnet).

@wenwei202 Got it! Thank you very much~~

@wenwei202 when you finetune the caffemodel after group-lasso regularisation on resnet_n3, did you zero out the parameters in batchnorm and scale layer? If not, after finetuning, these parameters will not be zero, and will produce output which makes the removal of the whole network unworkable.

@xiyuyu An all-zero conv layer produces all-zero feature maps, and batchnorm on all-zero feature maps is trivial. At least, the outputs of those batchnorm layers are constant regardless of the input images. You can fairly add those constant values to the bias values in the next layer.

@wenwei202 Makes sense~~
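A small sketch of that argument, in plain Python with hypothetical helper names: at inference time, batchnorm plus scale maps an all-zero input to one constant per channel, and that constant can be folded into the biases of whatever consumes the channel. The folding helper assumes a simplified layout and ignores border effects from zero padding, so treat it as an illustration rather than a drop-in procedure:

```python
import math


def batchnorm_inference(x, mean, var, gamma, beta, eps=1e-5):
    """Per-channel batch norm followed by scale/shift, at inference time."""
    return gamma * (x - mean) / math.sqrt(var + eps) + beta


def constant_output_for_zero_input(mean, var, gamma, beta, eps=1e-5):
    """Value produced for every pixel when the preceding conv outputs all zeros."""
    return batchnorm_inference(0.0, mean, var, gamma, beta, eps)


def fold_into_next_bias(next_bias, next_weights_for_channel, constant):
    """Add the constant channel's contribution to each output bias of the next layer.

    next_weights_for_channel[o] is the sum of the next layer's weights that read
    this channel for output o (hypothetical layout, ignoring padding effects).
    """
    return [b + w * constant for b, w in zip(next_bias, next_weights_for_channel)]


c = constant_output_for_zero_input(mean=0.0, var=1.0, gamma=2.0, beta=0.5, eps=0.0)
new_bias = fold_into_next_bias([0.1, -0.1], [1.0, 2.0], c)
```

The constant depends only on the stored batchnorm statistics and scale parameters, never on the input image, which is why the zeroed layer can still be removed.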

@wenwei202 Does structured sparsity support innerproduct layer, too?

@irwenqiang Yes, it does support.

make runtest failed , has anyone solved similar runtest problem?

ConvolutionLayerTest/1.TestGradient3D

*** Aborted at 1480994031 (unix time) try "date -d @1480994031" if you are using GNU date ***

PC: @ 0x7f4be7a7cc4e (unknown)

*** SIGSEGV (@0x7f4bfe0856c0) received by PID 7887 (TID 0x7f4bef483a80) from PID 18446744073676543680; stack trace: ***

@ 0x7f4be7dd53e0 (unknown)

@ 0x7f4be7a7cc4e (unknown)

@ 0x7f4be7a7e5d4 __libc_malloc

@ 0x7f4be8882f37 caffe::SyncedMemory::mutable_cpu_data()

@ 0x7f4be8819b2c caffe::Blob<>::InitializeConnectivity()

@ 0x7f4be8825be2 caffe::Blob<>::Reshape()

@ 0x488e4b caffe::GradientChecker<>::CheckGradientSingle()

@ 0x4899f3 caffe::GradientChecker<>::CheckGradientExhaustive()

@ 0x6d8fd7 caffe::ConvolutionLayerTest_TestGradient3D_Test<>::TestBody()

@ 0x91b043 testing::internal::HandleExceptionsInMethodIfSupported<>()

@ 0x91465a testing::Test::Run()

@ 0x9147a8 testing::TestInfo::Run()

@ 0x914885 testing::TestCase::Run()

@ 0x915b5f testing::internal::UnitTestImpl::RunAllTests()

@ 0x915e83 testing::UnitTest::Run()

@ 0x46e21d main

@ 0x7f4be7a1b830 __libc_start_main

@ 0x475c89 _start

@ 0x0 (unknown)

^CMakefile:541: recipe for target 'runtest' failed

Hello !@wenwei202

Would you like to tell me which files that you changed compared to the original caffe?I want to use your code on my Caffe. It's very kind of you! Thank you very much!

@mumaal A bunch of files were modified, pls use git diff between master branch and scnn branch to check the modifications. Thanks!

Hello @wenwei202

Thanks for the awesome concept. I was eager to know whether this would work in larger nets like VGG and Resnet-101?

@legolas123 We did not try VggNet and ResNet-101 in the NIPS paper, but applying it to VggNet is under way. I will update you when it's done. Please also update here if you get the results. Thanks!

@wenwei202 Sure, will let you know when I get the results.

Thanks

@legolas123 Here is the result of training vggnet-16 with SSL. We get small column sparsity, even with ~2.7% improved top-1 accuracy, after SSL without further fine-tuning. We are increasing the decay to explore how structurally sparse VggNet can be with small accuracy loss. Stay tuned.

@wenwei202 nice to hear that. I am also trying your sparsity code on detection with faster-rcnn framework, It is on VGG16 net. I used the weight decay you used in CIFAR data with the value 0.003. I guess this is a large weight decay factor. Lets see how the results look. Will let you know when it converges.

@wenwei202 I have the results on VGG for detection. While the sparsity is around 65% on all convolutional layers from conv3_1 onwards, accuracy (MAP) dropped from 69 to 64. But the interesting thing is that inference has become considerably slower, which means I am doing something wrong in inference. I don't know what.

@legolas123 To speed up, you need to remove zero groups and concatenate the non-zeros into a smaller matrix. For accuracy, make sure you fine-tuned after sparsifying. Thanks!

Yeah, I did that. conv_mode: LOWERED_CCNMM in test.prototxt, right? That is exactly the setting in which it has become considerably slower. And I am using GPU mode, so there is no MKL problem either. Could it be that convolutions are getting faster but some other layers, like spatial pooling, are getting slower? I don't see a reason. But I am still trying to profile with the faster-rcnn Python wrapper to see if that is the case.
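The idea of removing zero groups and concatenating what remains can be sketched as follows. This is plain Python with hypothetical helper names, not the fork's LOWERED_CCNMM code: all-zero rows (removed filters) and all-zero columns of the weight matrix are dropped, the matching rows of the lowered feature matrix are dropped too, and the smaller dense product reproduces the surviving outputs.

```python
def matmul(a, b):
    """Dense matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def compact(weights, features):
    """Drop all-zero weight rows and columns; drop the matching feature rows.

    Returns (small_weights, small_features, kept_rows) so that outputs of the
    removed filters (all zeros) can be restored at the kept_rows positions.
    """
    kept_rows = [i for i, row in enumerate(weights) if any(v != 0 for v in row)]
    kept_cols = [j for j in range(len(weights[0]))
                 if any(row[j] != 0 for row in weights)]
    small_w = [[weights[i][j] for j in kept_cols] for i in kept_rows]
    small_f = [features[j] for j in kept_cols]
    return small_w, small_f, kept_rows


weights = [[1.0, 0.0, 2.0],
           [0.0, 0.0, 0.0],   # a filter zeroed by structured sparsity
           [3.0, 0.0, 4.0]]   # the middle column is also all zero
features = [[1.0, 1.0],
            [9.0, 9.0],
            [2.0, 3.0]]

small_w, small_f, kept_rows = compact(weights, features)
full = matmul(weights, features)
small = matmul(small_w, small_f)
```

The smaller product is still an ordinary dense GEMM, which is why structured (row or column) sparsity can translate into speedup where irregular sparsity does not.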

@wenwei202 I used your profile display flag in Makefile.config. And yes convolutions on which sparsity has been done are more than 2 times faster than its dense counterpart. But since profiling does not show FC layers, I cannot find the layer which is slowing down the sparse network. Can you think of anything that might be causing this issue? Thanks!

@legolas123 The tricky part is, in the GPU mode with conv_mode: LOWERED_CCNMM, we temporarily use CPU code to do the lowering, which slows down the computation. If we can modify conv_im2col_gpu to concatenate feature matrix, then it wouldn't be an issue.

@wenwei202 So it does this lowering operation in every iteration? Couldn't it be made to do the lowering operation in just the first iteration? Thanks!

@wenwei202 Will try to hack into that. My current concern is accuracy because with 90% sparsity the MAP has gone down by 10 points which further fine-tuning is improving just by 1 point. This is weird because you are improving the accuracy of imagenet classification by sparsifying(although you mentioned sparse intensity you had kept low right now) and I am seeing such a drop of accuracy in detection. Thanks!

@legolas123 Do you mind sending me your solver for both SSL and fine-tuning? weiwen.[email protected]

hi @wenwei202

i'm interested to try your scnn.

but i'm bit confused with the step of your tutorial.

from my understanding, the step are (based on your cifar-10 tutorial):

- train cifar-10

- finetune

but in your readme.md, you give an example using block_group_lasso which i didnt find inside the prototxt (cifar10_full_train_test, cifar10_full_train_test_ft or cifar10_full_train_test_kernel_shape) of your cifar-10.

Any suggestion for me? Or is block_group_lasso automatically calculated during training?

thanks

@marifnst You can add block_group_lasso based on what kinds of structured sparsity you want to learn. See an example for reducing layers in resnet, where all weights in the layer form a group.

@wenwei202 I have the results for the sparsification of the faster-rcnn detection network. The sparse training was carried out for 70k iterations at a 0.001 learning rate, and fine-tuning was performed for another 60k iterations at a 0.001 learning rate. So I increased the learning rate for fine-tuning, as you suggested. Now the final MAP is 65.5 compared to the original MAP of 68. Also, the sparsity is more than 80% in all the convolutional layers above conv_3.

@legolas123 Great job, Koustubh! Looking forward to seeing you share it with the community.

hi @wenwei202

thank you for your sharing.

Can you tell me which source file or library provides the vsDivCheckZero used in math_functions.cpp?

regards

@marifnst Here it is.

./include/caffe/util/mkl_alternate.hpp:20:DEFINE_VSL_BINARY_FUNC(DivCheckZero, y[i] = (b[i]==0 ? 0: a[i] / b[i]));

Hi @wenwei202

Thank you very much.

I hope I can finish the integration and share the results with you.

Best regards

hi @wenwei202

Sorry for always interrupting you.

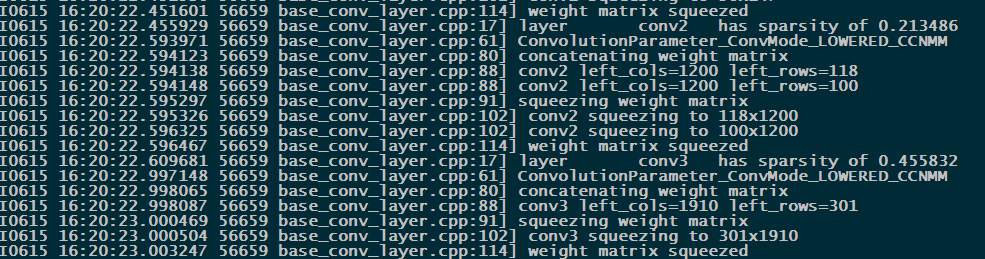

I have successfully integrated my algorithm with your layer.

Can you help me understand the meaning of the output below:

I0207 16:43:25.079854 8699 base_conv_layer.cpp:17] layer conv1 has sparsity of 0.00085034

I0207 16:43:25.082247 8699 base_conv_layer.cpp:171] ConvolutionParameter ConvMode: DEFAULT

I0207 16:43:25.083804 8699 base_conv_layer.cpp:17] layer conv2 has sparsity of 0.015612

I0207 16:43:25.132417 8699 base_conv_layer.cpp:171] ConvolutionParameter ConvMode: DEFAULT

I0207 16:43:25.134937 8699 base_conv_layer.cpp:17] layer conv3 has sparsity of 0.0147163

I0207 16:43:25.205013 8699 base_conv_layer.cpp:171] ConvolutionParameter ConvMode: DEFAULT

I0207 16:43:25.208621 8699 base_conv_layer.cpp:17] layer conv4 has sparsity of 0.00619092

I0207 16:43:25.311568 8699 base_conv_layer.cpp:171] ConvolutionParameter ConvMode: DEFAULT

I0207 16:43:25.313935 8699 base_conv_layer.cpp:17] layer conv5 has sparsity of 0.00529876

I0207 16:43:25.481933 8699 inner_product_layer.cpp:12] layer fc6 has sparsity of 0.0213456

I0207 16:43:29.525123 8699 inner_product_layer.cpp:12] layer fc7 has sparsity of 0.0181708

?

best regards

@marifnst

I0207 16:43:25.079854 8699 base_conv_layer.cpp:17] layer conv1 has sparsity of 0.00085034 means 0.00085034*100% weights of conv1 are zeros (element-wise sparsity).

I0207 16:43:25.082247 8699 base_conv_layer.cpp:171] ConvolutionParameter ConvMode: DEFAULT means, in layer conv1, convolution computation mode conv_mode is the default GEMM. See more modes here.
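The element-wise sparsity reported in those log lines is just the fraction of weights that are exactly zero. A minimal sketch (hypothetical function name, toy weights):

```python
def elementwise_sparsity(weights):
    """Fraction of entries that are exactly zero."""
    flat = [v for row in weights for v in row]
    return sum(1 for v in flat if v == 0) / len(flat)


layer = [[0.0, 0.5, 0.0, 0.0],
         [1.5, 0.0, -2.0, 0.0]]
s = elementwise_sparsity(layer)
print(f"layer has sparsity of {s}")
```

A value such as 0.00085034 therefore means that well under 1% of the weights are zero, that is, the layer is still essentially dense.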

hi @wenwei202

I have prototxt like below:

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

#connectivity_mode: DISCONNECTED_GRPWISE

param {

lr_mult: 1

decay_mult: 1

block_group_lasso {

xdimen: 7

ydimen: 1

block_decay_mult: 1.0

}

}

param {

lr_mult: 2

decay_mult: 1

}

convolution_param {

num_output: 48

kernel_size: 7

pad: 3

stride: 2

}

}

and I see no loss of accuracy, even without fine-tuning.

Or maybe I have a mistake in my configuration, so your SCNN doesn't take effect? Any comment?

And I found from your code that:

- Your conv modes (CSR, CNM, etc.) are only for the testing phase, not the training phase

- CNM is slower than CSR in the testing phase (as explained earlier in the thread, part of it is only implemented on CPU, and I use GPU mode)

So, any suggestion on how to verify that your layer is faster than the original layer?

From my understanding of your paper, you compare execution time per conv layer.

Sorry, I didn't notice that you have updated https://github.com/wenwei202/caffe/blob/scnn/README.md. I will check it too, based on your explanation there.

Best Regards

@marifnst You need to configure block_group_decay in your solver to enable group Lasso, since block_decay_mult * block_group_decay is the hyper-parameter lambda. The default block_group_decay is 0.

Enable USE_PROFILE_DISPLAY := 1 in Makefile.config to display timing results, which essentially include only the time of matrix-matrix multiplication.

Hi @wenwei202

Thank you very much for your responses.

Your suggestion successfully decreased my average accuracy from 0.60 to 0.15 (without fine-tuning), with block_group_decay set to 0.005 in the solver and connectivity_mode set to DISCONNECTED_GRPWISE.

No problem with that; I am still working to understand your SSL better, and the model will be fine-tuned soon.

Best Regards

Hi @wenwei202

In section 4.3 (AlexNet on ImageNet) of your paper, you described the SSL method in 3 steps:

1) trained with structure regularization;

2) remove zero groups;

3) fine-tune without SSL.

Now, I can only find your caffemodels of step 1. Would you please share the fine-tuned model of step 3?

Thanks! :)

@Roll920 The caffemodels in the zoo are the fine-tuned ones.

@wenwei202 Thanks for your responses, but I find the size of your caffemodel is exactly the same as original AlexNet (232.6MB). Since zero groups are removed in step 2, it should be much smaller with a new structure. Is there anything wrong with my understanding?

@Roll920 I updated the tutorial regarding your concern here.

Hi @wenwei202, I read your NIPS paper; it looks great! Just wondering what the performance would be if I start training from scratch using SSL. Would that model's accuracy be close enough to the original model trained from scratch without SSL? I cannot use a pretrained model for my project.

Hi @madnavs , training from scratch is fine but fine-tuning can get better trade-off between accuracy and sparsity. Thanks!

Thanks for the reply @wenwei202 . Just want to know your insights on how bad would training from scratch be compared to fine tuning.

@madnavs We didn't observe significant differences using a small dataset, but we didn't try ImageNet. You should use a smaller lambda_g if you train it from scratch.

hi @wenwei202

What if I set my prototxt as below:

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

connectivity_mode: DISCONNECTED_GRPWISE

param {

lr_mult: 1

decay_mult: 1

block_group_lasso {

xdimen: 7

ydimen: 1

block_decay_mult: 1.0

}

}

param {

lr_mult: 2

decay_mult: 1

}

convolution_param {

num_output: 48

kernel_size: 7

pad: 3

stride: 2

}

}

but don't set a block_group_decay value in my solver? Will the training then proceed without SSL? You commented before that the parameter defaults to 0.

Thank you very much

@marifnst Yes, the default block_group_decay is 0.0, as set in caffe.proto. Note that the number of columns (rows) must be divisible by xdimen (ydimen).
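Both points from this reply can be written down as a quick check. The sketch below uses hypothetical helper names; the lambda formula is the one quoted earlier in the thread (block_decay_mult * block_group_decay), and the column count assumes 3 input channels for conv1, which the thread does not state:

```python
def effective_lambda(block_decay_mult, block_group_decay=0.0):
    """Group Lasso strength for one block_group_lasso entry."""
    return block_decay_mult * block_group_decay


def block_shape_is_valid(n_rows, n_cols, xdimen, ydimen):
    """True when the weight matrix splits evenly into ydimen-by-xdimen blocks."""
    return n_cols % xdimen == 0 and n_rows % ydimen == 0


# conv1 from the prototxt above: 48 filters, 7x7 kernels.
# ASSUMPTION: 3 input channels (e.g. RGB data), giving 3 * 7 * 7 = 147 columns.
rows, cols = 48, 3 * 7 * 7

no_ssl = effective_lambda(block_decay_mult=1.0)            # solver default of 0.0
with_ssl = effective_lambda(1.0, block_group_decay=0.005)  # value tried earlier in the thread
ok = block_shape_is_valid(rows, cols, xdimen=7, ydimen=1)
bad = block_shape_is_valid(rows, cols, xdimen=9, ydimen=1)
```

With the solver default, the effective lambda is 0, so the block_group_lasso entry in the prototxt has no effect on training.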

hi there @wenwei202 , here i have some detailed question about the configuration of your experiments on ResNet20 on cifar-10(related to depth-wise sparsity).

In your experiment , does a single group consist of 2 conv layers in a residual block , or you add 2 shortcuts in every block(so that single conv layer is a group)...

I tried the second scheme and find out that when a conv layer is removed , it is likely that another conv layer in this residual group is removed as well.I train the model from scratch with SSL on CIFAR-10 with the standard configuration of resnet20 but the accuracy can hardly be recovered by fine-tuning. I wonder if there is any mistake or trick that I missed in this experiments. Thanks!

@siberiamark Neither! In the paper, the residual block has the original structure, where there is only one shortcut across two layers. Group Lasso is separately enforced on each layer. Theoretically, if one layer in the residual block is regularized to all-zero, the other layer must also be all-zero so as to minimize the target function (because removing the remaining layer will not affect the data loss but will reduce the regularization term). This is also the phenomenon I observed. If you train from scratch, please use a smaller hyper-parameter lambda_g, but the trade-off may be better if you fine-tune with SSL.

Hi @wenwei202, I have been reading your paper and code for some time. I don't know the difference between breadth_decay and kernel_shape_decay in caffe.proto. What are their respective relations with block_group_decay? Could you explain further, please? Thanks a lot!

@ZouKaiwei Group Lasso regularization on each row or column can be specified by block_group_lasso with ydimen: 1 or xdimen: 1. However, we also implemented (breadth_decay_mult & kernel_shape_decay_mult in ParamSpec param) and (breadth_decay & kernel_shape_decay in SolverParameter) to simplify the configuration of group Lasso regularization on each row or column, respectively. For example, in conv1 of LeNet, kernel_shape_decay_mult: 1.5 is equivalent to

param { # weights

lr_mult: 1

block_group_lasso { # specify the group lasso regularization each column

xdimen: 1

ydimen: 20 # The size of each column is the number of filters

block_decay_mult: 1.5 # the same with kernel_shape_decay_mult

}

}

and breadth_decay_mult: 1.5 is equivalent to

param { # weights

lr_mult: 1

block_group_lasso { # specify the group lasso regularization each row

xdimen: 75 # The size of each row is the size of filter 5*5*3

ydimen: 1

block_decay_mult: 1.5 # the same with breadth_decay_mult

}

}

Got it! @wenwei202 Your reply really help me. Thank you very much!

Hi , @wenwei202 I'm trying to get the same result as yours using MLP. I have some doubts about your files. Suppose I need to run the MLP 2

(1) Which net file should I use?

(2) What values should I set for kernel_shape_decay and breadth_decay?

Besides, are the Neuron number per layer in MLP2: 469-294-166-10 been setted in mlp.prototxt ?or these values are just the results of sparse?

I'm looking forward to your reply.Thank you very much.

@ZouKaiwei The net file would be this, but I suggest you create your own since those files are not maintained. You need to cross-validate kernel_shape_decay and breadth_decay, but you can start from kernel_shape_decay: 0.0001 and breadth_decay: 0.0001. 469-294-166-10 is the learned result.

OK, I see. @wenwei202 Thank you very much!

Hi @wenwei202, sorry to trouble you again. I don't really understand the optimization process of group Lasso. How can one row of the weight matrix be zeroed? Does this happen naturally, with every element in that row driven to zero by learning, or do we need to set it to zero by hand? Actually, I don't understand this function. Could you explain further, please? Thanks a lot!

@ZouKaiwei Group Lasso can naturally push some groups to zeros, by adding extra gradients to the weights. The regularization gradient of group w is w/||w||_2, which basically pushes the vector of w to the origin step by step. The kernel implements this.

@wenwei202 I don't quite understand your answer. After reading Section 3.1 of your paper, I thought group Lasso applies the L2 norm to every group. Am I right? Does ||w||_2 in your answer mean the L2 norm? Why is w divided by it? Also, I don't know what "pushes w to the origin" means. Could you explain a bit further?

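@ZouKaiwei ||w||_2 = sqrt(w_1*w_1 + w_2*w_2 + ...) is the L2 norm itself, not the squared L2 norm used in ordinary weight decay. Without the sqrt, it would be the usual L2 regularization. Differentiating sqrt(w_1*w_1 + w_2*w_2 + ...) with respect to w gives w/||w||_2; you will get this after a short derivation.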
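A small NumPy check may help here. It is only a sketch: `group_lasso_grad`, the `eps` guard, the step size and the example vector are my own choices, not taken from the fork. It compares w/||w||_2 against a finite-difference gradient of the norm and then applies a few gradient steps on the penalty alone.

```python
import numpy as np

def group_lasso_grad(w, eps=1e-12):
    """Gradient of ||w||_2 with respect to w; eps guards against division by zero."""
    return w / (np.linalg.norm(w) + eps)

w = np.array([3.0, -4.0])
g = group_lasso_grad(w)  # unit vector pointing along w

# Central finite differences of the norm, one coordinate at a time.
h = 1e-6
numeric = np.array([
    (np.linalg.norm(w + h * e) - np.linalg.norm(w - h * e)) / (2 * h)
    for e in np.eye(len(w))
])

# Gradient descent on the penalty alone: every step moves the group
# a fixed distance lr * lam towards the origin.
lam, lr = 1.0, 0.5
v = w.copy()
norms = []
for _ in range(5):
    v = v - lr * lam * group_lasso_grad(v)
    norms.append(np.linalg.norm(v))
```

The gradient is a unit vector, so the group shrinks by the same amount at every step however small it already is. That is the sense of "pushes w to the origin": unlike squared-L2 weight decay, which shrinks weights proportionally, a whole group can actually reach zero.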

@wenwei202 ,Totally understand :). Thanks for your reply!

@wenwei202 Hi Wen! May I ask when SSL should be used? Does it work during training (meaning the network is trained and becomes sparse at the same time), or is SSL applied after training?

By "SSL works after training" I mean that we apply SSL to an already-trained dense neural network. Sorry, I just wanted to explain more clearly :3

@s0606757

The whole process is:

train from scratch to obtain the baseline (most baselines can be downloaded directly from the Caffe model zoo)

-> retrain the originally dense DNN with SSL to learn structured sparsity

-> freeze zero groups and fine-tune nonzero weights to recover some accuracy.

@siberiamark @wenwei202

I set

export CUDA_VISIBLE_DEVICES=0

and in the Makefile.config have the default ATLAS option chosen:

# BLAS choice:

# atlas for ATLAS (default)

# mkl for MKL

# open for OpenBlas

BLAS := atlas

# Custom (MKL/ATLAS/OpenBLAS) include and lib directories.

# Leave commented to accept the defaults for your choice of BLAS

# (which should work)!

# BLAS_INCLUDE := /path/to/your/blas

# BLAS_LIB := /path/to/your/blas

Still, the make runtest fails. What else could be done?

[ RUN ] DeconvolutionLayerTest/0.TestNDAgainst2D

*** Aborted at 1489736474 (unix time) try "date -d @1489736474" if you are using GNU date ***

PC: @ 0x2aec4c844086 caffe::im2col_nd_cpu<>()

*** SIGSEGV (@0x2aec8e880000) received by PID 25597 (TID 0x2aec461d0f40) from PID 18446744071805861888; stack trace: ***

@ 0x2aec4d536330 (unknown)

@ 0x2aec4c844086 caffe::im2col_nd_cpu<>()

@ 0x2aec4c7d679f caffe::BaseConvolutionLayer<>::conv_im2col_cpu()

@ 0x2aec4c7e0d51 caffe::BaseConvolutionLayer<>::forward_cpu_gemm()

@ 0x2aec4c7ffd9a caffe::DeconvolutionLayer<>::Backward_cpu()

@ 0x49f865 caffe::Layer<>::Backward()

@ 0x4b25bf caffe::DeconvolutionLayerTest_TestNDAgainst2D_Test<>::TestBody()

@ 0x8e7603 testing::internal::HandleExceptionsInMethodIfSupported<>()

@ 0x8de2e7 testing::Test::Run()

@ 0x8de38e testing::TestInfo::Run()

@ 0x8de495 testing::TestCase::Run()

@ 0x8e17d8 testing::internal::UnitTestImpl::RunAllTests()

@ 0x8e1a67 testing::UnitTest::Run()

@ 0x46d2ef main

@ 0x2aec4d765f45 (unknown)

@ 0x474f49 (unknown)

@ 0x0 (unknown)

make: *** [runtest] Segmentation fault (core dumped)

@wenwei202 thanks a lot. I got it!! ^_^

Hi, @wenwei202 In MLP 2, 40.18% of input neurons have zero connections, so the number of effective neurons is 469. Should the column sparsity of weights matrix(500,784) be 40.18% in experiment? I had run the code several times(changing ZEROUT_THRESHOLD), but the sparsity is usually 0, only the relu layers have row sparsity. What should I do to make all the connections of a neuron be zero?

@ZouKaiwei ZEROUT_THRESHOLD is a fixed, very small value that stabilizes sparsity; please don't change it. ZEROUT_THRESHOLD will not affect accuracy. To enable column sparsity, configure kernel_shape_decay_mult for each layer and use a nonzero kernel_shape_decay in the solver. Details are covered in the tutorial.

Hi everyone! I have a problem building caffe-scnn. When I run the following steps:

cd CAFFE_ROOT

mkdir build

cmake ..

make all

.....

I get the following errors:

[ 1%] Built target proto

[ 90%] Built target caffe

Linking CXX executable caffe

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseSdense2csc'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseSetMatType'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseScsrmm'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDestroyMatDescr'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDcsrmm2'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDdense2csc'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDestroy'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseSetMatIndexBase'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDnnz'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseCreateMatDescr'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseCreate'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseDcsrmm'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseSnnz'
../lib/libcaffe.so.1.0.0-rc3: undefined reference to `cusparseScsrmm2'
collect2: error: ld returned 1 exit status
make[2]: *** [tools/caffe] Error 1
make[1]: *** [tools/CMakeFiles/caffe.bin.dir/all] Error 2
make: *** [all] Error 2

What can I do to solve this problem?

(This is my result of cmake :

-- Boost version: 1.54.0

-- Found the following Boost libraries:

-- system

-- thread

-- filesystem

-- Found gflags (include: /usr/include, library: /usr/lib/x86_64-linux-gnu/libgflags.so)

-- Found glog (include: /usr/include, library: /usr/lib/x86_64-linux-gnu/libglog.so)

-- Found PROTOBUF Compiler: /usr/bin/protoc

-- Found lmdb (include: /usr/include, library: /usr/lib/x86_64-linux-gnu/liblmdb.so)

-- Found LevelDB (include: /usr/include, library: /usr/lib/x86_64-linux-gnu/libleveldb.so)

-- Found Snappy (include: /usr/include, library: /usr/lib/libsnappy.so)

-- CUDA detected: 7.5

-- Found cuDNN: ver. 5.0.5 found (include: /usr/local/cuda/include, library: /usr/local/cuda/lib64/libcudnn.so)

-- Added CUDA NVCC flags for: sm_52

-- OpenCV found (/usr/share/OpenCV)

-- Found Atlas (include: /usr/include, library: /usr/lib/libatlas.so)

Traceback (most recent call last):

File "

ImportError: No module named numpy

-- Could NOT find NumPy (missing: NUMPY_INCLUDE_DIR NUMPY_VERSION) (Required is at least version "1.7.1")

-- Boost version: 1.54.0

-- Found the following Boost libraries:

-- python

-- Could NOT find Doxygen (missing: DOXYGEN_EXECUTABLE)

-- Python interface is disabled or not all required dependencies found. Building without it...

-- ***** Caffe Configuration Summary *****

-- General:

-- Version : 1.0.0-rc3

-- Git : unknown

-- System : Linux

-- C++ compiler : /usr/bin/c++

-- Release CXX flags : -O3 -DNDEBUG -fPIC -Wall -Wno-sign-compare -Wno-uninitialized

-- Debug CXX flags : -g -fPIC -Wall -Wno-sign-compare -Wno-uninitialized

-- Build type : Release

-- BUILD_SHARED_LIBS : ON

-- BUILD_python : ON

-- BUILD_matlab : OFF

-- BUILD_docs : ON

-- CPU_ONLY : OFF

-- USE_OPENCV : ON

-- USE_LEVELDB : ON

-- USE_LMDB : ON

-- ALLOW_LMDB_NOLOCK : OFF

-- Dependencies:

-- BLAS : Yes (Atlas)

-- Boost : Yes (ver. 1.54)

-- glog : Yes

-- gflags : Yes

-- protobuf : Yes (ver. 2.5.0)

-- lmdb : Yes (ver. 0.9.10)

-- LevelDB : Yes (ver. 1.15)

-- Snappy : Yes (ver. 1.1.0)

-- OpenCV : Yes (ver. 2.4.8)

-- CUDA : Yes (ver. 7.5)

-- NVIDIA CUDA:

-- Target GPU(s) : Auto

-- GPU arch(s) : sm_52

-- cuDNN : Yes (ver. 5.0.5)

-- Documentaion:

-- Doxygen : No

-- config_file :

-- Install:

-- Install path : /home/s0606757/caffe-scnn/build/install

-- Configuring done

-- Generating done

-- Build files have been written to: /home/s0606757/caffe-scnn/build)

@s0606757 I added the cusparse library to the Makefile to support sparse BLAS in GPU mode. The fastest way to solve this issue is to build with the Makefile instead of cmake:

make all

make pycaffe

If you want to use cmake, you should also configure cusparse for cmake.

Could anybody please provide an exemplary prototxt for training and for testing (separately, please, and preferably for a larger network, e.g. AlexNet or VGG) that actually shows the speedup under caffe time, compared with testing without structured sparsity? I do see some examples in the /models/bvlc_reference_caffenet/ directory, but I'm not sure how to use them. I would be very grateful for help.

Note:

I added conv_mode: LOWERED_CSRMM to the convolution layer parameters in train_val.prototxt, but when timing with caffe time, the time is the same as with the default setting. What could cause this lack of speedup? Is it because I only fine-tuned the original model for a few thousand iterations?

@kamadforge A vggnet example of ssl solver.

hi @wenwei202

May I know which of the combinations below was the fastest in your research?

- ELT connectivity

1.1 GEMM

1.2 CSR

1.3 CCNMM

- GRP connectivity

2.1 GEMM

2.2 CSR

2.3 CCNMM

I have trained with block group decay 0.0001 and ELT connectivity mode, and got the following timings in the test phase:

GEMM result:

"I0328 12:03:39.923871 4843 base_conv_layer.cpp:855] conv1 group 0: 669.088 us (Dense Scheme Timing)"

CSR result:

"I0328 12:05:28.713330 4938 base_conv_layer.cpp:813] conv1 group 0: 5835.17 us (Compressed Row Storage Timing)"

CCNMM result:

"I0328 12:02:15.266597 4739 base_conv_layer.cpp:833] conv1 group 0: 748.096 us (Concatenation Timing)"

Why is DENSE faster than the other modes? Any suggestions for me?

FYI, I'm using the GPU.

Thank you very much.

Thank you, @wenwei202. Along these lines, which prototxt file do you use for testing the acceleration (I assume that the prototxt file should have conv_mode specified, is that right?) with the Caffenet caffemodel provided to achieve the speed-ups?

@kamadforge Training only supports LOWERED_GEMM, which is the default. After training, you can specify conv_mode in deploy.prototxt to measure the speed.

@marifnst connectivity_mode is only used for the fine-tuning that recovers accuracy after SSL. We usually use the default CONNECTED during SSL. In the subsequent fine-tuning, we use DISCONNECTED_GRPWISE to freeze all-zero rows/columns and maintain structured sparsity, or DISCONNECTED_ELTWISE to freeze zero weights and maintain non-structured sparsity. If you obtained structured sparsity properly, DISCONNECTED_GRPWISE + LOWERED_CCNMM should give you the fastest DNNs, if that is what you were asking.

@marifnst May I ask how did you obtain the (Compressed Row Storage Timing) and (Concatenation Timing) information in the output in the GPU mode? For me, the GPU output contains only this sort of output:

I0329 17:07:27.924870 4353 caffe.cpp:405] Iteration: 47 forward-backward time: 2.48218 ms.

I0329 17:07:27.927325 4353 caffe.cpp:405] Iteration: 48 forward-backward time: 2.42358 ms.

I0329 17:07:27.929778 4353 caffe.cpp:405] Iteration: 49 forward-backward time: 2.41357 ms.

I0329 17:07:27.932199 4353 caffe.cpp:405] Iteration: 50 forward-backward time: 2.39309 ms.

I0329 17:07:27.932217 4353 caffe.cpp:408] Average time per layer:

I0329 17:07:27.932222 4353 caffe.cpp:411] data forward: 0.0118374 ms.

I0329 17:07:27.932235 4353 caffe.cpp:414] data backward: 0.0018432 ms.

I0329 17:07:27.932241 4353 caffe.cpp:411] conv1 forward: 0.0643283 ms.

I0329 17:07:27.932248 4353 caffe.cpp:414] conv1 backward: 0.0427885 ms.

When testing the provided caffemodel (caffenet_L1_0.4251.caffemodel) I also obtained similar outcomes as @marifnst where the timing of the dense scheme is about 10x faster. @wenwei202 , do you also obtain such results?

I0331 11:33:20.955344 11007 base_conv_layer.cpp:813] conv1 group 0: 595.968 us (Compressed Row Storage Timing)

I0331 11:17:40.856134 6980 base_conv_layer.cpp:855] conv1 group 0: 61.44 us (Dense Scheme Timing)

hi @wenwei202

this is my result following your suggestion (using GRP mode):

CCNMM Result

I0401 12:49:56.667223 16752 base_conv_layer.cpp:833] conv1 group 0: 680.544 us (Concatenation Timing)

I0401 12:49:56.774369 16752 base_conv_layer.cpp:833] conv2 group 0: 2402.05 us (Concatenation Timing) --> still slower

I0401 12:49:56.806509 16752 base_conv_layer.cpp:833] conv3 group 0: 457.632 us (Concatenation Timing)

I0401 12:49:56.826230 16752 base_conv_layer.cpp:833] conv4 group 0: 701.184 us (Concatenation Timing) --> still slower

I0401 12:49:56.844141 16752 base_conv_layer.cpp:833] conv5 group 0: 503.168 us (Concatenation Timing)

GEMM Result

I0401 13:18:40.327471 17525 base_conv_layer.cpp:855] conv1 group 0: 684.768 us (Dense Scheme Timing)

I0401 13:18:40.337474 17525 base_conv_layer.cpp:855] conv2 group 0: 1663.71 us (Dense Scheme Timing)

I0401 13:18:40.340207 17525 base_conv_layer.cpp:855] conv3 group 0: 474.336 us (Dense Scheme Timing)

I0401 13:18:40.341575 17525 base_conv_layer.cpp:855] conv4 group 0: 699.104 us (Dense Scheme Timing)

I0401 13:18:40.342840 17525 base_conv_layer.cpp:855] conv5 group 0: 506.208 us (Dense Scheme Timing)

Thank you for your suggestion; on average, almost all layers are now faster with CCNMM than with GEMM.

But is it possible to make it faster? Any suggestions on parameter values or configuration (currently my block_group_decay is 0.0001)?

thanks

hi @wenwei202

I can finally use your SSL; my convolution computation is up to 2x faster with the scenario below, following your suggestion:

- training in CONNECTED mode with block group decay 0.00001

- retraining in several modes [CONNECTED, GRP, ELT] with convolution modes [GEMM, CSRMM, CCNMM] and block group decay 0.001

my result:

GEMM

I0409 19:34:32.197564 16500 base_conv_layer.cpp:855] conv1 group 0: 679.968 us (Dense Scheme Timing)

I0409 19:34:32.203863 16500 base_conv_layer.cpp:855] conv2 group 0: 1232.96 us (Dense Scheme Timing)

I0409 19:34:32.205538 16500 base_conv_layer.cpp:855] conv3 group 0: 469.888 us (Dense Scheme Timing)

I0409 19:34:32.206542 16500 base_conv_layer.cpp:855] conv4 group 0: 691.36 us (Dense Scheme Timing)

I0409 19:34:32.207332 16500 base_conv_layer.cpp:855] conv5 group 0: 506.848 us (Dense Scheme Timing)

CSRMM

I0409 19:36:28.327288 16681 base_conv_layer.cpp:813] conv1 group 0: 5011.23 us (Compressed Row Storage Timing) --> problem with pooling layer i thought

I0409 19:36:28.333906 16681 base_conv_layer.cpp:813] conv2 group 0: 1407.3 us (Compressed Row Storage Timing) --> problem with pooling layer i thought

I0409 19:36:28.335819 16681 base_conv_layer.cpp:813] conv3 group 0: 336.256 us (Compressed Row Storage Timing)

I0409 19:36:28.336664 16681 base_conv_layer.cpp:813] conv4 group 0: 245.472 us (Compressed Row Storage Timing)

I0409 19:36:28.337345 16681 base_conv_layer.cpp:813] conv5 group 0: 206.592 us (Compressed Row Storage Timing)

CCNMM

I0409 19:39:06.890368 16883 base_conv_layer.cpp:833] conv1 group 0: 809.824 us (Concatenation Timing)

I0409 19:39:07.066095 16883 base_conv_layer.cpp:833] conv2 group 0: 1310.82 us (Concatenation Timing)

I0409 19:39:07.103081 16883 base_conv_layer.cpp:833] conv3 group 0: 436.128 us (Concatenation Timing)

I0409 19:39:07.130189 16883 base_conv_layer.cpp:833] conv4 group 0: 276.64 us (Concatenation Timing)

I0409 19:39:07.159718 16883 base_conv_layer.cpp:833] conv5 group 0: 366.528 us (Concatenation Timing)

But I have one note:

- if a conv layer has a pooling layer, the acceleration is reduced

Thank you very much.

Hope my paper can be published ASAP.

Best Regards

I wanted to ask: what is the best way to compare the results of two different runs? Is it the way @marifnst compared group 0? What about group 1, say? And what about the average time per layer given at the end of the run? For example, if a conv layer has two groups, group 0 and group 1, should the average time for that conv layer be the sum of the two?

Hi, I am working on SSL on Lenet. According to your paper, the accuracy doesn't drop, however, my results on kernel shape ssl can only reach 98.6%,which drops 0.5% compared to the original level. I did finetune the net without ssl, and my column sparsity is 16-89.2% (conv1-conv2) . I don't know what I could do to increase the accuracy, could you please give me some suggestions?

Best regards.

@lemonrr The sparsity looks reasonable. You can finetune it with connectivity_mode: DISCONNECTED_GRPWISE and should get some accuracy back.

@wenwei202 I did fine-tune it with connectivity_mode: DISCONNECTED_GRPWISE in my experiment, but the accuracy was 98.6%, as I said. I noticed your tips:

1. Set the base learning rates of both SSL and fine-tuning to 0.1x the base learning rate used to train the original DNN from scratch.

2. Set the maximum iteration K of SSL to about half of the max iteration M used to train the original DNN from scratch (K=M/2); set the max iteration N of fine-tuning to around M/3.

But when I tried them, my results got even worse. So my 98.6% was obtained with M iterations and with the fine-tuning learning rate equal to that of the original DNN.

Any suggestions?

Best regards

@lemonrr During SSL, did you start from the weights of baseline using caffe train --solver yourssl.solver --weights yourbaseline.caffemodel? Training from scratch during SSL may not give the best tradeoff.

@wenwei202 I did start from the weights of baseline during ssl, and got the accuracy of 98.6%. I don't know what's wrong.

By the way, I am using GPU mode. If I want to measure the speedup with DISCONNECTED_GRPWISE + LOWERED_CCNMM, should I use OpenBLAS or the default ATLAS?

Hello, @wenwei202

I am a bit confused about the parameter block_group_decay, kernel_shape_decay and breadth_decay.

Currently i am setting them like this in the solver:

block_group_decay: 0.0001

kernel_shape_decay: 1.5

breadth_decay: 1.5

And i did not set the block_decay_mult parameter.

But now, during my training the Block sparsity is 0 all along.

Thanks in advance!

@PureDiors You need to set, e.g., block_decay_mult: 1.0 to enable block-wise group Lasso on the layer. kernel_shape_decay and breadth_decay are special cases of block_group_decay. Please see here for more.

Hello, @wenwei202

We are trying to profile the sparse DNNs on CPU and GPU. We used the Alexnet-SSL trained model from model zoo and observed the following behavior in CPU for classification/inference.

Execution time of CSR > Execution time of Default > Execution time of LOWERED_CCNMM

Here execution time refers to average time across convolutional layers and not the total execution time of inference.

We expected CSR to be at least better than Default, right? We used the MKL library.

Please let me know if these results are fine.

Thanks.

Hello, @sab0991

mkl_?csrmm (sparse-matrix times dense-matrix multiplication) is optimized for large sparse matrices with the banded sparsity patterns often found in scientific computing, and hence doesn't perform optimally for the sparse matrices found in sparse CNNs. Also, the lowering approach used in Caffe (which translates convolution into dense matrix multiplication by replicating input activation elements) incurs a relatively much larger overhead once the number of floating-point operations is significantly reduced by sparse models.

You may want to try an alternative approach (https://github.com/IntelLabs/SkimCaffe) that uses a routine specifically optimized for sparse CNN that directly applies sparse convolutions without lowering. Also, compared to SSL, we're not trying to impose group-wise sparsity and allow more general patterns of element-wise sparsity, so the sparsity is in general higher (~90% in AlexNet and GoogLeNet). Our github page has pre-pruned models of AlexNet and GoogLeNet (we also have ResNet pruned models that we haven't uploaded yet in case interested). You can also find our ICLR'17 paper from our github page if you're interested in more details.

@sab0991 The result is normal. mkl_?csrmm is NOT optimized for the regular pattern of a weight matrix with structured sparsity. The issue is more serious on platforms with high parallelism (like GPUs). That is why we worked on structured sparsity.

As noted by @jspark1105, the lowering in Caffe's CPU mode is very inefficient, implemented as nested loops (for { for { for { for{ ... }}}} ). You can parallelize the lowering to reduce the overhead; for instance, the lowering overhead is much smaller on GPU because of its high parallelism. To go further, you can also skip lowering and perform direct sparse convolution, which is an alternative way to harness non-structured sparsity.
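For anyone unfamiliar with lowering, here is a simplified NumPy sketch of the idea. Assumptions: stride 1, no padding, a single image; the function is named `im2col` after Caffe's routine but is my own reduced version, not Caffe's code.

```python
import numpy as np

def im2col(x, k):
    """Lower a (C, H, W) input to a (C*k*k, out_h*out_w) matrix (stride 1, no padding)."""
    C, H, W = x.shape
    out_h, out_w = H - k + 1, W - k + 1
    cols = np.empty((C * k * k, out_h * out_w), dtype=x.dtype)
    row = 0
    for c in range(C):          # input channel
        for i in range(k):      # kernel row offset
            for j in range(k):  # kernel column offset
                # All input pixels that this kernel position touches.
                cols[row] = x[c, i:i + out_h, j:j + out_w].reshape(-1)
                row += 1
    return cols

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))           # 3-channel 8x8 input
filters = rng.standard_normal((4, 3, 5, 5))  # 4 filters of size 3x5x5

lowered = im2col(x, 5)                   # shape (75, 16)
out = filters.reshape(4, -1) @ lowered   # convolution as one matrix product, shape (4, 16)
```

Here the 192 input values are replicated into a 75 x 16 = 1200-element matrix. That copying cost does not shrink when the weight matrix becomes sparse, which is why lowering starts to dominate once the multiplication itself gets cheap.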

Hello @jspark1105,

Thanks for the response. We will profile the direct sparse convolution mode in SkimCaffe with the pre-pruned models.

Hello @wenwei202,

Thanks for the response. We will try to parallelize the lowering routine for CPU and GPU.

@wenwei202 Hello, I am confused about how to measure the forward time. In GPU mode I use cuBLAS; should I use CSRMM or CCNMM for conv_mode? I noticed CCNMM is only implemented in CPU mode. When I apply these three modes to LeNet, I get GEMM > CSRMM > CCNMM. Why does this happen?

In CPU mode, do I need to use MKL no matter which conv_mode I use?

Best regards

@lemonrr CSRMM and CCNMM were implemented in both CPU and GPU mode.

In CPU mode, mkl is required only when CSRMM is used.

In CCNMM, we first need to concatenate the nonzero columns and nonzero rows of the feature map matrix into a dense matrix. The concatenation temporarily uses a CPU routine. I have not had time to replace it with a GPU routine, but this does not affect the timing of CCNMM itself. Please send a pull request (see https://github.com/wenwei202/caffe/issues/3) if you implement a GPU routine.
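The concatenation idea can be sketched in NumPy as follows. This is my own illustration under stated assumptions, not the fork's routine: `ccnmm` is a hypothetical name, W stands for the weight matrix (filters x lowered kernel size) and X for the lowered feature matrix.

```python
import numpy as np

def ccnmm(W, X):
    """Compute W @ X after dropping the all-zero rows and columns of W."""
    keep_rows = np.any(W != 0, axis=1)
    keep_cols = np.any(W != 0, axis=0)
    W_small = W[np.ix_(keep_rows, keep_cols)]  # concatenated dense weights
    X_small = X[keep_cols]                     # only the feature rows still needed
    out = np.zeros((W.shape[0], X.shape[1]), dtype=W.dtype)
    out[keep_rows] = W_small @ X_small         # pruned filters keep zero output
    return out, W_small.shape

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 12))
W[[1, 5], :] = 0.0        # two pruned filters (rows)
W[:, [0, 3, 4, 9]] = 0.0  # four pruned columns
X = rng.standard_normal((12, 6))

out, small_shape = ccnmm(W, X)  # the GEMM runs on a 6 x 8 matrix instead of 8 x 12
```

The result equals the full product W @ X, but the multiplication is an ordinary dense GEMM on a smaller matrix, which is why structured sparsity can use off-the-shelf BLAS.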

@wenwei202 Hello,

I came across your paper and I really liked it. Thank you for sharing your work.

I am trying to use your code but I am not able to build your scnn branch repository.

mkdir build

cmake ..

make all -j8

and then I am getting below error.

Do you know what I am doing wrong in my Makefile.config?

Thank you

@ananddb90 See issue here https://github.com/wenwei202/caffe/issues/6

@wenwei202 Thank you for your reply. I am able to do make all but my make runtest fails

I am using ATLAS BLAS in the Makefile.config file. Do you know what the reason could be? :)

I am using your branch for my semantic segmentation problem. Please correct me if my understanding is right.

1. Initially I must train my model (train.prototxt) without any sparsity feature enabled in train.prototxt and solver.txt.

2. After training, I should modify my model (train.prototxt) with the group Lasso options:

    layer {
      name: "fire2/expand1x1"
      type: "Convolution"
      bottom: "concat_initial"
      top: "fire2/expand1x1"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
        breadth_decay_mult: 1.0
        kernel_shape_decay_mult: 1.0
      }
      param {
        lr_mult: 2
        decay_mult: 1
        breadth_decay_mult: 0
        kernel_shape_decay_mult: 0
      }
      convolution_param {
        num_output: 64
        kernel_size: 1
        weight_filler {
          type: "msra"
        }
      }
    }

   and accordingly in solver.txt:

    lr_policy: "poly"
    power: 1
    momentum: 0.9
    weight_decay: 0.0001 #0.0002
    kernel_shape_decay: 0.0004
    breadth_decay: 0.0003

3. After completing the second training step, I must fine-tune my model to accelerate inference. To do so, I set connectivity_mode: DISCONNECTED_GRPWISE in my model (train.prototxt) and remove group Lasso from both the solver and train.prototxt.

Please correct me if my understanding is wrong.

Waiting for your reply.

Thank you

@ananddb90 Correct. Step 3 basically is to recover some accuracy without decreasing the sparsity. You can simply set kernel_shape_decay: 0.0 and breadth_decay: 0.0 to reuse your prototxts in step 2.

@wenwei202 I have a similar question to this one. In step 2, which is training with group lasso, I saw in your CIFAR10 sample code that you commented out connectivity mode, while in step 3, which is finetuning, you uncommented connectivity mode in order to freeze the zero elements. I wonder if this is intentional? If I use connectivity mode to freeze zero elements during the group lasso training phase (step 2), will it make a lot difference?

Thank you!

@Jarvistonychen It will NOT make much difference, since there are likely no all-zero rows and columns before SSL, so enabling DISCONNECTED_GRPWISE likely will not freeze any weight. But we cannot guarantee that, so we comment out DISCONNECTED_GRPWISE and use the default CONNECTED in step 2 to let SSL learn structured sparsity dynamically.

@wenwei202 Thanks a lot for the quick reply! This really helps! When I am training my network, I see 100 in the "Row Sparsity %". I guess it means that all rows in that layer are pruned. Does it mean that my deep network is disconnected since the weights of one layer are all zeros?

Thanks

@Jarvistonychen The displayed sparsity also includes the values for biases. Very likely that one is for a bias blob. During SSL, connections are NOT permanently removed; zeros can become nonzero again if they receive a large update from the gradients of the cross entropy.

@wenwei202 Thank you very much for the prompt reply! I see. It's probably a bias blob... I wonder if you have posted anything explaining how to read the printout, e.g., what those numbers mean, which is weight blob, which is bias blob and for which layer?

Thanks

@Jarvistonychen During training, you will see some sparsity statistics. The sparsity is shown in the order of layers, and within each layer, in the order of weights and then biases. Basically, it prints the sparsity of all parameter blobs in Caffe, including, for example, the parameters of a batch normalization layer. We usually care only about the sparsity of weights.
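As a rough illustration of what such statistics measure, here is a NumPy sketch. It is not the fork's printing code: `sparsity_stats`, the toy blobs, and the choice to treat a 1-D bias as a single row are assumptions of mine.

```python
import numpy as np

def sparsity_stats(W):
    """Percent of zero elements, all-zero columns and all-zero rows of a blob."""
    W = np.atleast_2d(W)  # a 1-D bias is treated as a single row here
    return {
        "element": 100.0 * np.mean(W == 0),
        "column": 100.0 * np.mean(~np.any(W != 0, axis=0)),
        "row": 100.0 * np.mean(~np.any(W != 0, axis=1)),
    }

weights = np.array([[0.3, 0.0, 0.0, -0.1],
                    [0.0, 0.0, 0.0,  0.0],
                    [0.2, 0.0, 0.5,  0.0]])
bias = np.zeros(3)  # e.g. a bias blob whose entries are all zero

weight_stats = sparsity_stats(weights)
bias_stats = sparsity_stats(bias)
```

Under these assumptions an all-zero bias blob reports 100% row sparsity even though the layer's weights are mostly intact, which matches the situation discussed above.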

@wenwei202 Got it! This is very helpful! Really appreciated!

@wenwei202 I am working with SSL on my object detection DNN. Unfortunately, I have trouble in the first step, in which the high-sparsity net is trained: the training error is always higher than that of the original DNN. You suggest that a low error should be reached in each step. How can I get the same training error as the original DNN?

@yangzhibo450 You should tune the hyper-parameter (lambda) to trade off accuracy against sparsity; more specifically, tune breadth_decay, kernel_shape_decay or block_group_decay. The higher those numbers, the higher the sparsity but the lower the accuracy. The right hyper-parameters differ between nets. For a first try, you can pick the value that makes the regularization term about the same size as the loss value at the very beginning.
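One way to read that first-try heuristic is sketched below in NumPy. Everything here is hypothetical: `initial_lambda`, the random layer shapes and the loss value 2.3 are placeholders, and the result is only a starting point for cross-validation, not a recommended setting.

```python
import numpy as np

def initial_lambda(data_loss, weight_matrices, axis=1):
    """Return lambda such that lambda * sum(group L2 norms) equals data_loss.

    axis=1 treats each row as a group, axis=0 each column.
    """
    total_norm = sum(np.linalg.norm(W, axis=axis).sum() for W in weight_matrices)
    return data_loss / total_norm

rng = np.random.default_rng(0)
layers = [rng.standard_normal((20, 25)), rng.standard_normal((50, 500))]
loss_at_start = 2.3  # hypothetical data loss at iteration 0

lam = initial_lambda(loss_at_start, layers, axis=1)
reg_at_start = lam * sum(np.linalg.norm(W, axis=1).sum() for W in layers)
```

With your own weights and initial loss plugged in, `lam` would be the first value to try for the decay parameter, to be raised or lowered depending on how much accuracy you can give up.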

@wenwei202 Thanks. I had better run more tests to find a balance between sparsity and accuracy. Another question, if I may: have you ever measured the GPU memory usage in the testing phase? I can see logs like these:

Although I squeezed the weight matrices, the GPU memory usage is still higher than that of my original network. Do you have any good suggestions?

In my work:

- conv_mode is set to LOWERED_CCNMM in deploy.prototxt.

- conv layers are so lightly squeezed that the sparsity varies from 0.2 to 0.5.

- there are no layers in my networks.

@yangzhibo450 In my code, after squeezing/concatenating nonzero rows/columns, I do not release the original blob. You can release it if you want to save memory. The squeezed weights are stored in squeezed_weight_buffer_; once they have been concatenated into it, you can release the original blob.

hi @wenwei202

Can you share how to measure FLOPs to check the effect of SSL?

Thank you very much

@RupeshD Cool!

Thanks for your great work!

I came across an issue while profiling trained net.

I have added conv_mode : LOWERED_CSRMM to convolutional layer as you wrote.

In addition, I have used train_val.prototxt as I used caffe time

However, I have run into this kind of error

`lee@jbmpark-server:~/caffe_workspace/caffe$ ./build/tools/caffe time -model

examples/cifar10/cifar10_full_train_test_ft.prototxt -weights

examples/cifar10/SSL_RESULT2/cifar10_SSL_iter_150000.caffemodel -gpu 1 -iterations 10

I0721 10:31:16.504832 31889 caffe.cpp:349] Use GPU with device ID 1

[libprotobuf ERROR google/protobuf/text_format.cc:245] Error parsing text-format caffe.NetParameter: 206:15: Message type "caffe.LayerParameter" has no field named "conv_mode".

F0721 10:31:17.383092 31889 upgrade_proto.cpp:88] Check failed: ReadProtoFromTextFile(param_file, param) Failed to parse NetParameter file: examples/cifar10/cifar10_full_train_test_ft.prototxt

*** Check failure stack trace: ***

@ 0x7ffeee14cdaa (unknown)

@ 0x7ffeee14cce4 (unknown)

@ 0x7ffeee14c6e6 (unknown)

@ 0x7ffeee14f687 (unknown)

@ 0x7ffeee855d1e caffe::ReadNetParamsFromTextFileOrDie()

@ 0x7ffeee8bb831 caffe::Net<>::Net()

@ 0x408d8f time()

@ 0x405e0c main

@ 0x7ffeed149ec5 (unknown)

@ 0x40667b (unknown)

@ (nil) (unknown)

Aborted (core dumped)`

I have checked that conv_mode is defined in caffe.proto.(It works fine without conv_mode by the way)

What could be causing this kind of error?

Also, I would like to ask your opinion of applying your work to object detection algorithm such as SSD.

Would it be good idea to apply it?

Thanks for your help in advance.

@HyunJaeLee2 Did you put conv_mode outside of ConvolutionParameter? It should be inside of it. One example:

convolution_param {
  num_output: 256
  pad: 2
  kernel_size: 5
  group: 2
  conv_mode: LOWERED_CSRMM # sparse weight matrix in CSR format * lowered feature maps
  engine: CAFFE # Those features are available in CAFFE cuBLAS mode instead of CUDNN
}

I think you could apply it to detection. You may want to contact @legolas123, who got very good results on faster-rcnn for detection. Please 'Ctrl + F' legolas123 in this thread for previous discussions.

We also put effort into training DNNs toward lower-rank DNNs, which can be combined with sparsity methods. The paper is to appear in ICCV 2017 and the code is in the master branch. One challenge we are facing is that we can very aggressively push filters into a very low-rank space, but after aggressive low-rank decomposition it becomes more challenging to restore the accuracy. We would appreciate it if anyone could help solve this issue.

I looked at your NIPS paper and it looks really interesting. I am trying to implement group Lasso for my project in Theano, but I could not figure out how you form the groups. If you apply channel sparsity, how do you form the groups among channels? E.g., if there are 64 channels, should it be 8 groups or 4 groups? Looking at your code, I could not find where this is implemented. I am new to Caffe, so it is taking time to figure out. I would appreciate it if you could guide me on this or point me to the part of the code that implements it.

Thanks for your help.

I have followed all the instructions to build scnn branch. However, when I do make test, I get below errors:

CXX src/caffe/test/test_convolution_layer.cpp

In file included from src/caffe/test/test_convolution_layer.cpp:3:0:

./src/gtest/gtest.h:17003:24: error: expected template-name before ‘<’ token

: public CaseName

^

src/caffe/test/test_convolution_layer.cpp:352:1: note: in expansion of macro ‘TYPED_TEST’

TYPED_TEST(ConvolutionLayerTest, TestSimple3DConvolution) {

^

./src/gtest/gtest.h:17003:24: error: expected ‘{’ before ‘<’ token

: public CaseName

^

src/caffe/test/test_convolution_layer.cpp:352:1: note: in expansion of macro ‘TYPED_TEST’

TYPED_TEST(ConvolutionLayerTest, TestSimple3DConvolution) {

If you can guide where I am going wrong

@srivastavag89 The implementation of group Lasso is here; it adds additional regularization gradients to push groups to zero. In Caffe, we form groups by configuring caffe.proto, as covered in the tutorial. In Caffe, weights are stored as matrices: the weights of one channel sit in adjacent columns of the weight matrix, and one filter is one row of the weight matrix.

For Theano, does it support automatic differentiation? If it does, you only need to add the group Lasso regularization to the total cost function, then any SGD optimizer will optimize it for you automatically.

An example of adding group Lasso regularization on rows/columns in TensorFlow is:

    import tensorflow as tf

    def add_dimen_grouplasso(var, axis=0):
        # Sum of L2 norms of the groups obtained by reducing along `axis`.
        with tf.name_scope("DimenGroupLasso"):
            t = tf.square(var)
            # The small constant keeps the gradient of sqrt finite at zero.
            t = tf.reduce_sum(t, axis=axis) + tf.constant(1.0e-8)
            t = tf.sqrt(t)
            reg = tf.reduce_sum(t)
            return reg

    glasso_reg = add_dimen_grouplasso(weights, axis=0) # column group lasso

    ...

    tf.gradients(cross_entropy + glasso_reg * wd_coef, all_parameters)

    ...

We do have some issues with make runtest, but we have not seen this error before. Some details are in https://github.com/wenwei202/caffe/issues/2 ; please let us know if you fix it.

Thanks for the explanation. I get the intuition now and can relate it to the theory in the paper. However, I am not sure about this line in the code above:

t = tf.reduce_sum(t, axis=axis)

Shouldn't this be t = tf.reduce_sum(t, axis=(1,2,3))

since for row regularization we should be taking the squared sum of all the weights in a filter? Please let me know where I am going wrong in my interpretation.

Regarding the build issue, it occurs during make test. If I resolve it, I will post my fix here.

@srivastavag89 you are right, you should reduce along three axes if it is a 3D filter. My error.
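
For reference, the point above can be sketched in NumPy. This assumes a 4D weight tensor laid out as (filters, channels, height, width); the function name `group_lasso` and the example shape are illustrative and are not taken from the fork. Filter-wise groups reduce over axes (1, 2, 3), and channel-wise groups reduce over axes (0, 2, 3):

```python
import numpy as np

def group_lasso(weights, reduce_axes, eps=1.0e-8):
    """Sum of L2 norms of the groups; each group spans `reduce_axes`."""
    squared_sum = np.sum(np.square(weights), axis=reduce_axes)
    return float(np.sum(np.sqrt(squared_sum + eps)))

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 128, 3, 3))   # hypothetical conv layer

filter_reg = group_lasso(w, (1, 2, 3))     # one group per filter (64 groups)
channel_reg = group_lasso(w, (0, 2, 3))    # one group per input channel (128 groups)

# The same filter-wise penalty, seen through the 2D weight matrix
# (one filter per row), is a plain row-wise group lasso.
w2d = w.reshape(64, -1)
row_reg = group_lasso(w2d, 1)
```

Under this layout, `filter_reg` and `row_reg` agree up to floating-point rounding, which matches the description of one filter being one row of the weight matrix.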

@wenwei202

Thanks for your kind answer!

As a matter of fact, there was a slight problem with my configuration.

I would like to ask a few more questions if you don't mind.

I've read your ICCV 2017 paper (nice work, by the way), and would like to apply it with SSL.

However, SSD, which is also implemented in Caffe, has many newly implemented layers.

Do you think the best way to apply your work to SSD is to analyse your code and then merge it with the SSD code, or do you have any other idea?

SSD uses a feature extractor net (VGG by default) pre-trained on ImageNet, then fine-tunes on VOC with newly added layers at the back. Do you think the network will converge well with only the fine-tuning procedure on VOC (with a pre-trained feature extractor to which SSL has been applied), or do you think the pre-trained network should be re-trained with SSL and then applied to SSD?

I've read your comment about implementing your work in TensorFlow. However, I have found that it doesn't support cuSPARSE at the moment, only simple sparse matrix acceleration (https://www.tensorflow.org/api_docs/python/tf/sparse_tensor_dense_matmul).

Do you think the performance will improve in TensorFlow without cuSPARSE support?

I have run tests on a ConvNet with CIFAR-10 and came up with the results below.

Baseline (GEMM) : 1657.76 ms

Baseline (CSRMM) : 1594.99 ms

After SSL (GEMM) : 1638.37 ms

After SSL (CSRMM) : 1361.54 ms

After Fine-tuning(GEMM) : 1678.58 ms

After Fine-tuning(CSRMM) : 1183.33 ms

The sparsities of each case (in conv1, conv2, conv3 order) are:

Baseline : (0.430833, 0.655312, 0.659883)

After SSL : (0.603333, 0.845117, 0.659688)

After Fine-tuning : (0.624167, 0.860391, 0.708887)

What interests me is that the inference speed changed a lot after fine-tuning even though there wasn't a dramatic difference in sparsity. From what I understand, fine-tuning is about regaining accuracy. Is it natural to get such a gain?

Sorry for so many questions. I would like to get your intuition before applying this to detection.

I hope I can share nice results from applying your work to SSD.

Thanks a lot!
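
For readers comparing the numbers above, the GEMM and CSRMM timings can be turned into speedup ratios with a few lines of Python. This is only arithmetic on the figures reported in this comment; the dictionary layout and the `speedup` helper are illustrative:

```python
# Timings in ms, copied from the comment above.
timings_ms = {
    "Baseline": {"GEMM": 1657.76, "CSRMM": 1594.99},
    "After SSL": {"GEMM": 1638.37, "CSRMM": 1361.54},
    "After fine-tuning": {"GEMM": 1678.58, "CSRMM": 1183.33},
}

def speedup(gemm_ms, csrmm_ms):
    """Ratio of dense (GEMM) time to sparse (CSRMM) time."""
    return gemm_ms / csrmm_ms

speedups = {stage: speedup(t["GEMM"], t["CSRMM"]) for stage, t in timings_ms.items()}

for stage, ratio in speedups.items():
    print(f"{stage}: {ratio:.2f}x")
```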

@HyunJaeLee2

- merging is a good way since everything is trackable;

- It is a safe way to first use SSL to get a sparsified VGGNet and then fine-tune the back layers on VOC, but it is also doable, or even better, to train the whole net using SSL and VOC. SSL is a kind of regularization, and if it does not overfit, you may get higher sparsity, because VOC is a smaller dataset and the model can probably be compressed more. This is just my thought; the best way is to try both.

- cuSPARSE is still slow, if you check the figure here. I recommend LOWERED_CCNMM. TensorFlow is good for training; for inference, you may need to optimize your inference code based on the sparsity you obtained.

- Fine-tuning is for regaining accuracy while maintaining the sparsity trained by SSL. It can get ~1.0-2.0% accuracy back. I do not know how you measured the speed, but the code is not the best one for final deployment because some code hacking is required.

@wenwei202 Thanks for your work.

I am trying to implement structured sparsity in Theano/Lasagne. I have a network architecture very similar to yours, but when I run the test, the sparsity for filters and channels changes from 0% to 100% within an epoch. Could you give some pointers, based on your experience, on where I could be going wrong in my implementation? Also, I wanted to confirm the hyperparameters: did you use .003 for the filter and channel sparsity hyperparameters, as given on your GitHub?

@srivastavag89 Maybe the hyper-parameter of the structured sparsity regularization is too large, so that training only optimizes the sparsity?

The hyper-parameter depends on the network architecture and dataset. It is easier to tune the hyper-parameter by retraining a trained DNN instead of training from scratch.

One good hyper-parameter to start from is the one for which the value of the cross-entropy and the value of the regularization are close. Another good starting value is the one used for L2 weight decay.
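
As a rough illustration of the first heuristic, the coefficient can be initialized so that the weighted regularization term matches the current cross-entropy. This sketch assumes that "close" refers to the weighted term; `initial_coefficient` and the numbers are hypothetical:

```python
def initial_coefficient(cross_entropy, regularization):
    """Coefficient for which coefficient * regularization equals cross_entropy."""
    if regularization <= 0.0:
        raise ValueError("regularization must be positive")
    return cross_entropy / regularization

# Hypothetical values measured on a trained network before adding SSL.
lam = initial_coefficient(cross_entropy=0.5, regularization=2000.0)
print(lam)
```

The resulting value is only a starting point and would still need tuning for the architecture and dataset at hand.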

Thanks for the reply. I will try taking a trained network and then retrain it adding structured sparsity to it this time. Also, I will try hyperparameters as per your suggestions.

One more question: would it be fine to start with another network in Theano that gives similar baseline accuracy without SSL applied to it? This network is not the same as your baseline network, but similar, with convolution, max pooling and batch normalization layers. Would you suggest trying this network or constructing a network exactly the same as yours? I am just trying to get your view on whether there is anything specific to the network you took, or whether you would expect SSL to work on other networks as well.

@srivastavag89 SSL is a universal method; it should work for a variety of networks, not just those in the paper.

@wenwei202 I tried to train SSL in CPU mode

but got this error: "Deprecated in CPU mode: breadth and kernel shape decay (use block group decay instead)"

I would appreciate it if you could answer me!

@zlheos Please use block_group_lasso in net protobuf and block_group_decay in solver protobuf instead. You may check the tutorial here.

FYI, the Structured Sparsity Learning (SSL) approach is now also implemented in TensorFlow (code). We have also extended and advanced SSL to Recurrent Neural Networks to reduce the hidden dimension of LSTMs, i.e., learning the number of hidden/cell states/neurons in RNNs. Missing details (e.g. the training method) are included in Section 3.2 here.

Theory

begin Comments

If I use FFT (Fast Fourier Transform) in exponential convolutions for video filters, the system implementation crashes. While I wait for the solutions to these video filters and their stream applications by finding non-identity type third-order differential derivatives in some Banach Lp singular integral operators with non-convolution type manifolds, I have found it is easier with gauge systems and solitons, yet neural network systems of a gauge type might only be theory at this point.

end Comment

When going beyond the theories and applications we can simplify all kinds of identities into one type or another.

@wenwei202 Great job on this paper! It is very impressive! I wonder where I can find your prototxt for training AlexNet on ImageNet (the one shown in the paper)? For my experiment, I would like to make sure that I am compressing the network in the correct way (which should reproduce your results).

Much appreciated!

@Jarvistonychen It took a while to look through the logs, but I found a hyperparameter of 0.0005 for entry 5 in table 4.

@wenwei202 Thanks a lot for spending time answering my question! Since there is only column sparsity in entry 5 of table 4, I think 0.0005 is for kernel_shape_decay? If I also wanna do breadth_decay, is 0.0005 also the right value to use?

Thanks again!

@Jarvistonychen Yes, you may start from there.

@wenwei202

For a conv layer with dimension = nfilter x nchannel x nheight x nwidth (64x128x3x3),

how can I combine filter-wise and channel-wise sparsity in one prototxt of ResNet?

Do I have to write

    block_group_lasso{ #filterwise
      xdim: 128x3x3
      ydim: 1
    }

    block_group_lasso{ #channelwise
      xdim: 3x3
      ydim: 64
    }

Correct. More precisely:

    block_group_lasso{
      xdim: 1152
      ydim: 1
    }

    block_group_lasso{
      xdim: 9
      ydim: 64
    }
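
To make the two block shapes concrete, here is a small NumPy sketch of a block-wise group lasso on the 64 x 1152 weight matrix. It assumes that `xdim` is the block width in columns and `ydim` the block height in rows, and that blocks tile the matrix without overlap; the function `block_group_lasso` below is an illustration, not the fork's implementation:

```python
import numpy as np

def block_group_lasso(weight_matrix, ydim, xdim, eps=1.0e-8):
    """Sum of L2 norms over non-overlapping (ydim x xdim) blocks."""
    rows, cols = weight_matrix.shape
    if rows % ydim or cols % xdim:
        raise ValueError("block shape must tile the weight matrix")
    # Split rows into (row_block, ydim) and columns into (col_block, xdim).
    blocks = weight_matrix.reshape(rows // ydim, ydim, cols // xdim, xdim)
    squared_sum = np.sum(np.square(blocks), axis=(1, 3))
    return float(np.sum(np.sqrt(squared_sum + eps)))

w2d = np.ones((64, 128 * 3 * 3))   # 64 filters x 1152 columns

filter_reg = block_group_lasso(w2d, ydim=1, xdim=1152)   # 64 groups, one per row
channel_reg = block_group_lasso(w2d, ydim=64, xdim=9)    # 128 groups, 9 columns each
```

With these shapes, the first block definition groups every row (filter) and the second groups every run of 9 adjacent columns (one 3x3 input channel across all 64 filters).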

@wenwei202

In the log file, what is the meaning of the following lines?

- I1024 15:28:53.767140 10494 sgd_solver.cpp:120] Element Sparsity %:

- I1024 15:28:53.776428 10494 sgd_solver.cpp:130] Column Sparsity %:

- I1024 15:28:53.777498 10494 sgd_solver.cpp:139] Row Sparsity %:

- I1024 15:28:53.777539 10494 sgd_solver.cpp:153] Block Sparsity %:

I1024 15:29:05.849658 10494 solver.cpp:231] Iteration 4420, loss = 0.494514

I1024 15:29:05.849678 10494 solver.cpp:247] Train net output #0: accuarcy = 0.920354

I1024 15:29:05.849684 10494 solver.cpp:247] Train net output #1: loss_bbox = 0.142928 (* 1 = 0.142928 loss)

I1024 15:29:05.849689 10494 solver.cpp:247] Train net output #2: loss_cls = 0.347726 (* 1 = 0.347726 loss)

I1024 15:29:05.849691 10494 solver.cpp:247] Train net output #3: rpn_cls_loss = 0.0584648 (* 1 = 0.0584648 loss)

I1024 15:29:05.849695 10494 solver.cpp:247] Train net output #4: rpn_loss_bbox = 0.0126774 (* 1 = 0.0126774 loss)

I1024 15:29:05.849699 10494 sgd_solver.cpp:106] Iteration 4420, lr = 0.0001

I1024 15:29:06.022259 10494 sgd_solver.cpp:120] Element Sparsity %:

0.28699 100 0 0 0 0 0 1.58691 0 1.17188 0 0 0 0.366211 0 0 0 0 0 0.496419 0 0 0 0 0 1.55029 0 1.17188 0 0 0 1.82495 0 0 0 0 0 0.311957 0 0 0 0 0 0.57373 0 0 0 0 0 7.91092 0 0 0 0 0 7.32727 0 0 0 0 0.78125 6.30493 0 0 0 0 0 16.4474 0 0 0 0 0 11.7126 0 0.78125 0 0 0 6.78982 0 0 0 0 0 21.0297 0 0 0 0.195312 0 7.89948 0 0 0 0 0 7.76062 0 0 0 0 0 6.10775 0 0 0 0 0 8.77342 0 0 0 0 0 11.3316 0 0 0 0 0 6.27865 0 0 0 0 0 8.37975 0 0 0 0 0 8.17313 0 0 0 0 0 7.22427 0 0 0 0 0 5.82258 0 0 0 0 0 7.35607 0.146484 0 0 0 0 7.47519 0 0 0 0 0 5.42183 0 0 0 0 0 7.55234 0.0488281 0 0 0 0 3.44215 21.0938 2.3112 0 10.9375 19.4444 3.55883 14.9414 3.04452 28.5714 77.5074 87.5

I1024 15:29:06.031704 10494 sgd_solver.cpp:130] Column Sparsity %:

0 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1.17188 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1.5625 0 0 0 0 0 0.78125 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0.976562 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0.0976562 0

I1024 15:29:06.032747 10494 sgd_solver.cpp:139] Row Sparsity %: