Caddy: compress/flate.NewWriter causes large heap growth when compression is unnecessary

Recently I ran into an issue: when I ran a benchmark against my service in a production environment, compress/flate.NewWriter caused large heap memory growth, even though every response body was smaller than the min_length configured in the http.gzip directive.

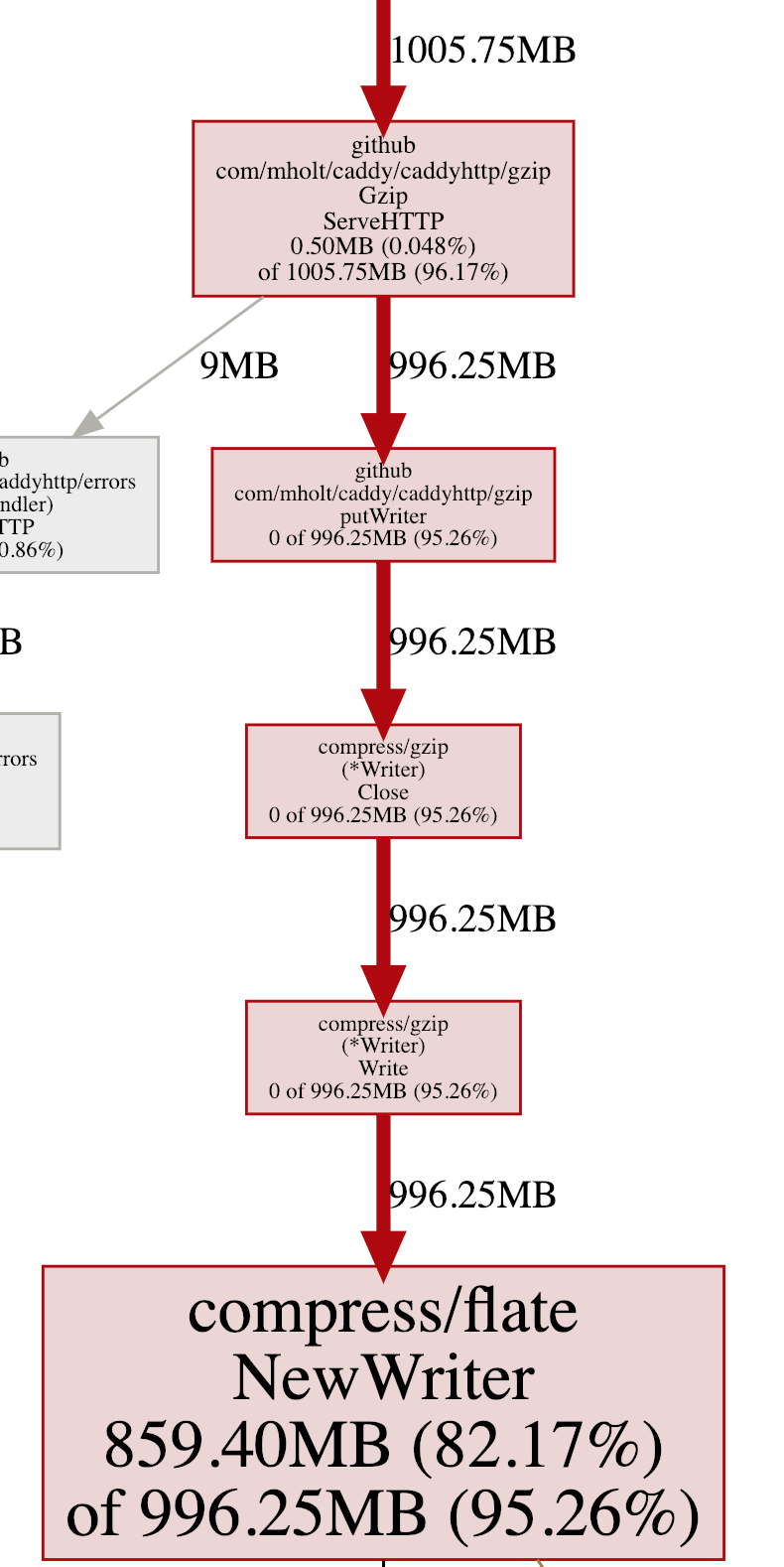

Then pprof helped me find the key information:

```
(venv37) gairui@gairui: go tool pprof -inuse_space http://localhost:2015/debug/pprof/heap\?gc
Fetching profile over HTTP from http://localhost:2015/debug/pprof/heap?gc=
Saved profile in /Users/gairui/pprof/pprof.alloc_objects.alloc_space.inuse_objects.inuse_space.062.pb.gz
Type: inuse_space
Time: Dec 18, 2018 at 11:34pm (CST)
Entering interactive mode (type "help" for commands, "o" for options)
(pprof) top
Showing nodes accounting for 1011.30MB, 96.70% of 1045.81MB total
Dropped 105 nodes (cum <= 5.23MB)
Showing top 10 nodes out of 26
      flat  flat%   sum%        cum   cum%
  859.40MB 82.17% 82.17%   996.25MB 95.26%  compress/flate.NewWriter
  131.84MB 12.61% 94.78%   131.84MB 12.61%  compress/flate.(*compressor).initDeflate (inline)
   11.04MB  1.06% 95.84%    11.04MB  1.06%  bufio.NewWriterSize (inline)
    5.52MB  0.53% 96.37%     5.52MB  0.53%  bufio.NewReaderSize (inline)
    1.50MB  0.14% 96.51%  1014.75MB 97.03%  github.com/mholt/caddy/caddyhttp/httpserver.(*Server).ServeHTTP
       1MB 0.096% 96.60%     7.01MB  0.67%  net/http.(*conn).readRequest
    0.50MB 0.048% 96.65%     5.50MB  0.53%  runtime.systemstack
    0.50MB 0.048% 96.70%  1005.75MB 96.17%  github.com/mholt/caddy/caddyhttp/gzip.Gzip.ServeHTTP
         0     0% 96.70%     5.52MB  0.53%  bufio.NewReader
         0     0% 96.70%   136.85MB 13.09%  compress/flate.(*compressor).init
```

0 0% 96.70% 136.85MB 13.09% compress/flate.(*compressor).init

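The heap.svg graph shows that compress/gzip.(*Writer).Close causes compress/flate.NewWriter to allocate memory, and that github.com/mholt/caddy/caddyhttp/gzip.putWriter is called whether or not gzip compression actually happened. Closing a *gzip.Writer that never received any data still writes the gzip header and footer, and writing the header lazily creates the flate compressor, so Close on a writer that has never compressed anything allocates a full compressor.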
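To see why an empty response still costs memory, here is a small standalone Go program. It is a sketch, not Caddy's code: the helper names measureCloseAllocs and allocatedBy are hypothetical, and it assumes Go 1.16+ for io.Discard. It uses compression level 4 as in the Caddyfile from the reproduction steps, measures the bytes allocated by Close on a gzip.Writer that never received data, and then measures again after Reset. The figures come from runtime.MemStats.TotalAlloc, so treat them as approximate.

```go
package main

import (
	"compress/gzip"
	"fmt"
	"io"
	"runtime"
)

// allocatedBy reports how many bytes were allocated while f ran.
// TotalAlloc is a cumulative counter, so the result is approximate if other
// goroutines allocate concurrently.
func allocatedBy(f func()) uint64 {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)
	f()
	runtime.ReadMemStats(&after)
	return after.TotalAlloc - before.TotalAlloc
}

// measureCloseAllocs returns the bytes allocated by Close on a fresh level-4
// gzip.Writer that never received data, and by Close after Reset.
func measureCloseAllocs() (first, second uint64) {
	zw, err := gzip.NewWriterLevel(io.Discard, 4)
	if err != nil {
		panic(err)
	}

	// No data was written (the body is below min_length), but Close still
	// writes the gzip header, which lazily creates the flate compressor.
	first = allocatedBy(func() { _ = zw.Close() })

	// Reset keeps the existing compressor, so a pooled writer that has been
	// closed once keeps holding that memory and is cheap to reuse.
	zw.Reset(io.Discard)
	second = allocatedBy(func() { _ = zw.Close() })
	return first, second
}

func main() {
	first, second := measureCloseAllocs()
	fmt.Printf("Close on an unused writer: %d bytes\n", first)
	fmt.Printf("Close after Reset:         %d bytes\n", second)
}
```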

https://github.com/mholt/caddy/blob/1570bc5d03bd4423625505ca4fa45c27b691d19e/caddyhttp/gzip/gzip.go#L68-L77

*gzip.Writer instances are managed by a pool, so the number of live writers, each holding its own compressor once it has been closed, grows with the number of concurrent requests.
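The link between heap usage and concurrency can be sketched the same way. The helper simulateConcurrent below is hypothetical and only models the situation; it is not Caddy's pool code, and it assumes Go 1.18+ for `any`. It checks out n writers from a fresh sync.Pool at the same time, as n in-flight requests would, and closes each one without writing data. Under these assumptions the allocated bytes should grow roughly linearly with n, because each distinct writer that gets closed creates its own compressor.

```go
package main

import (
	"compress/gzip"
	"fmt"
	"io"
	"runtime"
	"sync"
)

// simulateConcurrent models n in-flight requests that each borrow a level-4
// gzip.Writer from a fresh pool at the same time, write nothing (every body is
// below min_length), and still Close the writer before returning it.
// It reports how many bytes were allocated while doing so.
func simulateConcurrent(n int) uint64 {
	pool := &sync.Pool{
		New: func() any {
			zw, _ := gzip.NewWriterLevel(io.Discard, 4) // level 4 is valid, so err is nil
			return zw
		},
	}

	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)

	// All n writers are checked out together, so the pool cannot hand the
	// same writer to two requests: each one is a distinct object.
	inFlight := make([]*gzip.Writer, n)
	for i := range inFlight {
		inFlight[i] = pool.Get().(*gzip.Writer)
		inFlight[i].Reset(io.Discard)
	}
	for _, zw := range inFlight {
		_ = zw.Close() // creates a flate compressor for each fresh writer
		pool.Put(zw)   // the pooled writer keeps that compressor
	}

	runtime.ReadMemStats(&after)
	return after.TotalAlloc - before.TotalAlloc
}

func main() {
	for _, n := range []int{1, 8, 32} {
		fmt.Printf("%2d concurrent empty responses: %d bytes allocated\n", n, simulateConcurrent(n))
	}
}
```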

So I think a more reasonable approach is to make these calls, including gzip.putWriter, only when gzip compression actually happened.

Here is a simple way to reproduce the issue:

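- Start the service with this Caddyfile:

  ```
  localhost {
      gzip {
          min_length 2048
          level 4
      }
      root /home
      pprof
  }
  ```

- Run the benchmark (1500 concurrent workers for 10 seconds):

  ```
  hey -z 10s -c 1500 -q 15000 http://localhost:2015/
  ```

- Take a heap profile with pprof:

  ```
  go tool pprof -inuse_space http://localhost:2015/debug/pprof/heap
  ```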


Nice find.

Think you could open a PR to fix it?


Thanks for your reply, I will do it soon.
