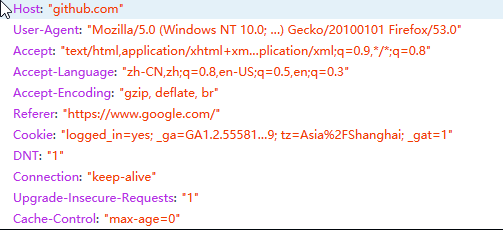

Caddy: proxy: Add header and uri load balancing policies

Can Caddy support more load balancing algorithms, like the ones HAProxy offers?

- round-robin: for short connections, pick each server in turn

- leastconn: for long connections, pick the least recently used of the servers with the lowest connection count

- source: for SSL farms or terminal server farms, the server directly depends on the client's source address

- uri: for HTTP caches, the server directly depends on the HTTP URI

- hdr: the server directly depends on the contents of a specific HTTP header field

- first: for short-lived virtual machines, all connections are packed on the smallest possible subset of servers so that unused ones can be powered down

All 57 comments

Anyone can propose a new policy. The requirement really is adding the implementation here https://github.com/mholt/caddy/blob/master/caddyhttp/proxy/policy.go#L21-L24.

Caddy already supports round robin, least conn, and source (IP hash).

I think implementing first would also satisfy #1103. Anyone is welcome to implement that and submit a pull request, of course!

Hey,

I would like to implement this feature, but I have a question: where do we get the number of "first" machines from?

I think we could configure it statically, or query a URL for it.

Greetings.

@pbeckmann Good question... I'm not sure how haproxy decides that. Anyone know?

@mholt

I looked in the documentation of haproxy and found

first: The first server with available connection slots receives the connection. The servers are chosen from the lowest numeric identifier to the highest (see server parameter "id"), which defaults to the server's position in the farm. Once a server reaches its maxconn value, the next server is used. It does not make sense to use this algorithm without setting maxconn. The purpose of this algorithm is to always use the smallest number of servers so that extra servers can be powered off during non-intensive hours. This algorithm ignores the server weight, and brings more benefit to long session such as RDP or IMAP than HTTP, though it can be useful there too. In order to use this algorithm efficiently, it is recommended that a cloud controller regularly checks server usage to turn them off when unused, and regularly checks backend queue to turn new servers on when the queue inflates. Alternatively, using "http-check send-state" may inform servers on the load.

Source: http://cbonte.github.io/haproxy-dconv/1.8/configuration.html#4.2-balance

So it seems pretty easy. I'll look into the testing now and then send you a pull request :)

Greetings
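The selection rule quoted from the HAProxy documentation can be sketched in Go roughly as follows. This is only an illustration: the `Upstream` type, its fields, and the `selectFirst` function are hypothetical and are not Caddy's actual types, and treating a `MaxConns` of 0 as "unlimited" is an assumption of this sketch.

```go
package main

import "fmt"

// Upstream is a hypothetical backend record used only for illustration;
// it is not Caddy's upstream host type.
type Upstream struct {
	Name     string
	Conns    int // connections currently open
	MaxConns int // connection limit; 0 is treated as unlimited (assumption)
}

// selectFirst returns the first upstream, in configured order, that still
// has a free connection slot, or nil if every upstream is full.
func selectFirst(pool []*Upstream) *Upstream {
	for _, u := range pool {
		if u.MaxConns == 0 || u.Conns < u.MaxConns {
			return u
		}
	}
	return nil
}

func main() {
	pool := []*Upstream{
		{Name: "web1", Conns: 2, MaxConns: 2}, // full, so it is skipped
		{Name: "web2", Conns: 0, MaxConns: 2},
		{Name: "web3", Conns: 0, MaxConns: 2},
	}
	if u := selectFirst(pool); u != nil {
		fmt.Println("selected:", u.Name) // prints "selected: web2"
	}
}
```

Because the pool is always scanned from the start, later servers only receive traffic once the earlier ones are full, which is what lets unused servers be powered off.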

I would love to implement hdr and uri too.

- For hdr we definitely have to change the syntax in the configuration file, as there we have to give the header field as a parameter to the algorithm.

- For uri, I could implement a basic version, where it's not possible to specify the len and depth of the evaluated URI.

Anyway, @mholt should first decide whether this would be useful, or whether we should wait for a separate issue and close this one.

@pbeckmann We can add another argument to policy for the header (don't call it hdr) policy, sure.

Let me give you collaborator status so you can push your changes to a branch instead of a fork, and help review pull requests from others, etc.

I would like to see this implemented in Caddy: http://blog.haproxy.com/2013/04/22/client-ip-persistence-or-source-ip-hash-load-balancing

What exactly do you mean with "this"? The ip hash algorithm already exists in caddy.

It's not clear from this issue exactly how uri and hdr would work, or whether they're even good ideas for Caddy; since we have 4/6 requested policies now, I'm going to close this issue, but am still open to adding uri and hdr policies (although I hate the name "hdr") in the future if anyone would find it a useful feature; please just describe exactly how it will work first. :)

@mholt I use Caddy like this: one Caddy -> two Varnish instances (HTTP cache servers, usually keyed on the URI).

- If I use one of round-robin, leastconn, or source, the cache is duplicated across the two Varnish instances.

- If I use first, the cache is fixed in the first Varnish. Suppose the first Varnish crashes: the second Varnish starts working, but it has no cache, so the whole cache has to be recreated. Too many requests to the back-end lead to poor performance, or even a crash.

Please support the requested uri policy.

@vicanso Sure, but is uri just a case-sensitive hash of the URI then?

@mholt Yes.

Fair enough -- somebody who is new to this project or new to Go can add the uri policy.
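A case-sensitive URI hash, as agreed above, could look something like the sketch below. The function names, the Varnish addresses, and the choice of FNV-1a as the hash are assumptions made for illustration; the sketch also ignores whether a backend is currently healthy.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashString returns a 32-bit FNV-1a hash of s. Using FNV here is an
// assumption of this sketch, not a statement about Caddy's hash function.
func hashString(s string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(s))
	return h.Sum32()
}

// pickByURI maps a request URI to one of the backends. The URI is hashed
// exactly as given, so "/Foo" and "/foo" are treated as different keys.
func pickByURI(uri string, backends []string) string {
	if len(backends) == 0 {
		return ""
	}
	return backends[hashString(uri)%uint32(len(backends))]
}

func main() {
	// Hypothetical backend addresses.
	backends := []string{"varnish1:6081", "varnish2:6081"}
	for _, uri := range []string{"/index.html", "/img/logo.png", "/index.html"} {
		// The same URI always maps to the same backend.
		fmt.Printf("%s -> %s\n", uri, pickByURI(uri, backends))
	}
}
```

With this kind of mapping, each cached object lives on only one Varnish instance, which addresses the duplication concern raised earlier in the thread.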

How exactly does hdr load balancing policy work?

I'll have a look at URI policy. I'm thinking simple version to start with, and then extend with length and depth settings. Sounds OK?

Sounds great!

Don't hesitate to ask if you have any questions.

But maybe you should wait until @mholt finishes https://github.com/mholt/caddy/issues/1639, as he wants to rewrite the proxy middleware.

@pbeckmann @cez81 No, it's fine -- go ahead, these are small and modular components that I can copy over to the new proxy middleware. I'm not too far into it yet.

Cookies are also common for session affinity, but Caddy currently wouldn't be able to support that method without imposing a globally defined (or even hard-coded 😨 ) cookie name. I think the header proposal suffers from a similar shortcoming.

I think an ideal solution would be to slightly change the way the policy directive works, such that the first argument is the policy name and the following arguments are passed into the policyCreateFunc(). This would be backwards (and to a degree, forwards)-compatible, since subsequent arguments are variadic.

Example:

    mysite.com/somepage {
        proxy {
            policy cookie my-cookie-name
        }
    }

The existing function signatures would need to be minimally updated, e.g.

func(args ...string) Policy { return &URIHash{} }

but that would also allow for

func(args ...string) Policy { return &Header{ HeaderName: args[0] } }

and

func(args ...string) Policy { return &Cookie{ CookieName: args[0] } }

The existing hash function can be used for the value of the named header/cookie.
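Put together, the proposal might be sketched as below. The `Policy` interface, the `supportedPolicies` registry, and `newPolicy` are simplified, hypothetical stand-ins rather than Caddy's real definitions. Unlike the one-line examples above, the creators here return an error instead of indexing `args[0]` unconditionally, since that would panic when the argument is missing from the config.

```go
package main

import (
	"errors"
	"fmt"
)

// Policy is a simplified stand-in for the proxy's policy interface.
type Policy interface {
	Describe() string
}

// URIHash needs no arguments.
type URIHash struct{}

func (URIHash) Describe() string { return "uri_hash" }

// Header hashes the value of the named request header.
type Header struct{ HeaderName string }

func (h Header) Describe() string { return "header " + h.HeaderName }

// policyCreateFunc receives whatever followed the policy name in the config.
type policyCreateFunc func(args ...string) (Policy, error)

// supportedPolicies is a hypothetical registry keyed by policy name.
var supportedPolicies = map[string]policyCreateFunc{
	"uri_hash": func(args ...string) (Policy, error) { return URIHash{}, nil },
	"header": func(args ...string) (Policy, error) {
		if len(args) == 0 {
			return nil, errors.New("header policy needs a header name")
		}
		return Header{HeaderName: args[0]}, nil
	},
}

// newPolicy looks up the creator for name and passes the remaining
// arguments through to it.
func newPolicy(name string, args ...string) (Policy, error) {
	create, ok := supportedPolicies[name]
	if !ok {
		return nil, fmt.Errorf("unknown policy %q", name)
	}
	return create(args...)
}

func main() {
	// Roughly what parsing "policy header X-My-Header" would lead to.
	p, err := newPolicy("header", "X-My-Header")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(p.Describe()) // prints "header X-My-Header"
}
```

Existing policies that take no arguments simply ignore `args`, which is why the change stays backwards-compatible.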

@zikes Great idea; Sounds like a good plan to me, although maybe we should limit it to just one string argument passed into the policy maker.

I gave the Header policy a go at #1751. I would also like to implement Cookie, but I ran into some difficulties with that. In the nginx implementation, if the cookie is not present then nginx will generate the cookie and return it on the response. Unfortunately the response is currently not available in the Policy.Select() method, which is where this would need to happen.

A somewhat inconvenient alternative would be to create the cookie outside of the proxy policy, in the Caddyfile, so that it is available before the policy is evaluated (a cookie subdirective, maybe?)

Having the cookie (or at least the cookie's value) generated and available prior to the policy being evaluated is important, otherwise the policy may route to a different host on subsequent requests.

Conveniently, I'm rewriting that part of the proxy middleware right about now. I will take this into consideration! For my reference, what is the value of the cookie set to?

Offhand, I can't find any documentation on what nginx sets the value to. Since it's hashed, any reasonably unique and random value would work, so maybe a UUID?

Hm, yeah, the nginx blog just says the cookie describes which server handled the request "in an encoded fashion", but NGINX Plus is closed source, so I am not sure we can inspect it. We could choose some innocuous value that won't reveal anything secret about the infrastructure.

FWIW, HAProxy also does sticky sessions using cookies for persistence. Relevant post about it: https://www.haproxy.com/blog/load-balancing-affinity-persistence-sticky-sessions-what-you-need-to-know/, maybe it would help you implement it.

Salient bit - they take the cookie and prepend a bit to the value when proxying a response with Set-Cookie to specify the server to use, then on the next request, it grabs the prefix to determine which server to proxy to, and strips it off so the application just sees the cookie without the prefix.
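The prefix approach described above can be sketched in Go as a pair of helpers. The function names and the `~` separator are assumptions of this sketch; it only shows the string handling, not how the proxy would hook into the response to rewrite `Set-Cookie`.

```go
package main

import (
	"fmt"
	"strings"
)

// sep separates the server identifier from the application's cookie value.
// The choice of "~" is an assumption made for this sketch.
const sep = "~"

// addServerPrefix is applied to the cookie value in a proxied Set-Cookie
// response so the client carries the chosen server's identifier.
func addServerPrefix(serverID, value string) string {
	return serverID + sep + value
}

// splitServerPrefix is applied to the cookie value of an incoming request.
// It returns the server identifier and the original value the application
// expects. ok is false when no prefix is present.
func splitServerPrefix(cookieValue string) (serverID, value string, ok bool) {
	i := strings.Index(cookieValue, sep)
	if i < 0 {
		return "", cookieValue, false
	}
	return cookieValue[:i], cookieValue[i+len(sep):], true
}

func main() {
	// Response path: the backend "web2" set the session cookie to "ABC123".
	sent := addServerPrefix("web2", "ABC123")
	fmt.Println(sent) // prints "web2~ABC123"

	// Request path: recover the server and hand the bare value upstream.
	id, value, ok := splitServerPrefix(sent)
	fmt.Println(id, value, ok) // prints "web2 ABC123 true"
}
```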

Are there still any policies left that could be implemented?

As far as I can tell, all the ones in OP have been covered now. I think source in OP is ip_hash

Sticky sessions using cookies is not yet supported, but as @zikes mentioned it would probably need some other changes to the proxy middleware, which @mholt was working on (are you still?).

So I figure this can stay open until a cookie policy is added.

Adding header and cookie load balancing policies is a good idea.

But in fact, the cookie is usually part of the HTTP headers.

I would hope for a configuration like this:

    proxy / http://localhost:6800/jsonrpc {
        policy hdr Upgrade websocket
        websocket
    }

or

    proxy / http://localhost:6800 {
        policy hdr cookie my-cookie-name
    }

@mholt Can you provide any progress updates on this issue? I would also like to use cookies for session affinity.

I have no updates to report -- someone else will have to contribute this I think.

I would like to try this out as well, if no one is against it :)

Go for it, no one else is working on it!

@mholt I looked at the code, and a cookie policy can be implemented similarly to the header policy (by hashing the value). It's a pretty easy task (I've actually already written sample code, it just needs testing). But reading the comments on this issue, I found that you are expecting some additional properties or behaviour from this policy, right?

So how should I do this? With simple hashing (where we expect the upstream server to set the cookie for the user)? Or do we want Caddy to set the cookie for the user? Or some mix of the two?

@SmilingNavern I'm not sure, since I'm not using this feature. Maybe someone else in this thread with an interest in this feature can give you some feedback.

@jonesnc could you please elaborate on the cookie feature? What would you like to see in this feature?

@mholt I was thinking about this issue. Part of the problem is that if we use a hash of the cookie to route to a specific upstream, then we need to somehow deal with the initial cookie-less request that receives the cookie. That initial request should also be sticky to this upstream.

I believe HAProxy and nginx solve this problem by setting a cookie on the client (which is not possible with the current policy implementation). I will look into the HAProxy source code, but maybe you have some thoughts on this.

I would like to see Caddy implement the same behavior that HAProxy offers. In my particular use-case, I proxy a web application with 3 nodes, and when I log in to one of those nodes a cookie gets created (JSESSIONID). So, I want Caddy to load balance based on 1) least connections if JSESSIONID is not set, and 2) if JSESSIONID is set, proxy to the node that created the session (e.g., the node that included the SET-COOKIE HTTP header). Basically a typical cookie-based session persistence setup.

More info on this behavior in haproxy can be found here:

@jonesnc yeah, thank you :) I've already read this article, and I understand what you want. I will try to propose an MVP solution and discuss the implementation with mholt.

Currently the hardest part is setting the cookie value from a Caddy policy.

@SmilingNavern great! I'm glad there's someone smarter than me working on this feature 👍

@SmilingNavern @zikes

It seems that the Header policy cannot evaluate the value of an HTTP request header. Can it only determine whether the header is present or absent?

Is this the case?

The cookie header is usually only used to determine whether to access the site for the first time. It seems that there is no other use, so it seems that there is no problem in generating a standard cookie.

There seems to be an HTML5 project that only uses LocalStorage to store user state.

It seems that no web server can modify LocalStorage.

Maybe in the future, you need to be able to modify the LocalStorage web server.😊

@daiaji yeah, the main problem is making it possible to modify user cookies from within the policy plugin.

I am not sure what the best way to implement this feature is :) I hope @mholt can provide some guidance on this one.

The main problem is that you want cookie-based sticky sessions: a user who reaches one of your backends for the first time should land on the same backend the second time.

Client -> caddy -> backend1

backend1 sets some backend-specific cookies

and you want this client's second request to go to backend1 again.

@SmilingNavern

Part of the problem is that if we use a hash of the cookie to route to a specific upstream, then we need to somehow deal with the initial cookie-less request that receives the cookie. That initial request should also be sticky to this upstream.

Are you sure? Why would the hash change regardless of whether the cookie is set? The source IP will be the same regardless of cookies, no?

@mholt

Okay, maybe I'm missing something, but here is how I see it.

We want new clients without a cookie to go to any backend and then stick to that specific backend. If we just use a hash of the cookie, the following would happen:

- A client with an empty cookie hashes to backend bck1

- Backend bck1 sets a specific cookie for this client

- The client makes a second request; now we proxy it based on that cookie, and the hash differs from the one in step 1, so the request can go to another backend

In HAProxy's case, they write the backend number into the cookie and parse it later, so the client becomes truly sticky to the first chosen backend.

I am not aware of other approaches, or of how to do it differently without loading Caddy too much.

If we want to implement it the HAProxy way, then the policy plugin needs access to write cookies. Or we design something better :)
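The "write the backend number into the cookie and parse it later" idea might be sketched like this. Everything here is hypothetical: `selectSticky`, the use of a plain backend index as the cookie value, and the round-robin fallback are assumptions chosen to keep the example small. A real implementation would probably use a less revealing value, as discussed earlier in the thread.

```go
package main

import (
	"fmt"
	"strconv"
)

// selectSticky returns the index of the backend to use. If the cookie value
// names a valid backend index, that backend is reused. Otherwise the
// fallback policy picks one, and setCookie tells the caller to store the
// chosen index in a cookie on the response.
func selectSticky(cookieValue string, numBackends int, fallback func() int) (idx int, setCookie bool) {
	n, err := strconv.Atoi(cookieValue)
	if err == nil && n >= 0 && n < numBackends {
		return n, false
	}
	return fallback(), true
}

func main() {
	backends := []string{"bck1", "bck2", "bck3"}

	// A tiny round-robin fallback used for clients without a valid cookie.
	next := 0
	roundRobin := func() int {
		i := next % len(backends)
		next++
		return i
	}

	// First request: no cookie yet, so the fallback chooses and we set one.
	idx, set := selectSticky("", len(backends), roundRobin)
	fmt.Println(backends[idx], set) // prints "bck1 true"

	// Second request: the client sends the cookie back and stays on bck1.
	cookie := strconv.Itoa(idx)
	idx, set = selectSticky(cookie, len(backends), roundRobin)
	fmt.Println(backends[idx], set) // prints "bck1 false"
}
```

The `setCookie` result is the part that needs response access, which is why the policy would have to be able to write cookies.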

@SmilingNavern

If we just use hash of cookie

No, I mean use the ip_hash policy. It hashes the client IP address, not the cookies. It _should_ guarantee (as long as a client's IP doesn't change, anyway) that they always hit a certain backend.

However, if the client's IP changing during a session is a concern, and you don't want to re-auth in that case, then there's probably a not-hard way to add the ResponseWriter to the RegisterPolicy function so you can set cookies.

I believe people who want sticky sessions with cookies want them for mobile devices, whose IP addresses can change.

Oh, okay. I'll try adding ResponseWriter to the policy, if that's okay with you.

@SmilingNavern @zikes The Header policy should increase the value of the http header.

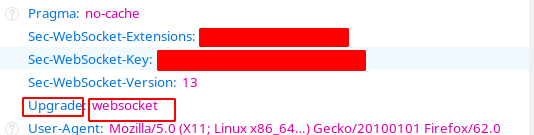

I don't know if the current Header policy can be used to determine if the request is a websocket.

Both nginx and apache are sufficient to determine the value of http Header and then load balance.😃

@daiaji sorry, but I didn't get your last point. What do you propose? What do you mean by "increase the value"?

@SmilingNavern Http Header has value.

And the value is not fixed.

The current Header policy can only determine whether the http header of the request exists, and cannot determine whether the request http header is a certain value.

Almost all http headers have several different values.

Header policy should increase the value of the http header, like this.

    proxy / http://localhost:6800/jsonrpc {
        policy header Upgrade websocket
        websocket
        header_upstream -Origin
    }

Sorry, or should I modify the configuration file like this now?

policy header Upgrade: websocket

Policy is only used for balancing between multiple backends with preconfigured rules. So if you want to modify headers, you should use something else, if I understood you correctly.

This issue is only about balancing policies.

@SmilingNavern I don't need to modify the http header. I just want to determine the content of the http header and load balance.

    proxy / web1.local:80 web2.local:90 web3.local:100 {
        policy header X-My-Header
    }

Can the X-My-Header in the header Policy example be replaced with Upgrade: websocket?

@daiaji what do you expect? How do you want it to work?

The current header policy implementation is based on hashing of header content. So you can use:

policy header Upgrade

and it will be load balanced based on hashed content of this header. Is this what you want?

@SmilingNavern This is the problem, the header policy does not check the specific content of the http header.

I want the header policy to check the specifics of the http header.

Like this

policy header Upgrade websocket

@daiaji If I'm understanding what you're asking, I don't think your case will work here, because policy does not make proxy conditional. i.e. it will not decide to not proxy to a backend based on the policy directive.

If what you want is to only proxy based on some condition, then what you'll want to do is the following: Set a rewrite rule to change the path of a websocket connection to something special (as a placeholder), then proxy on that path, and strip the special string with the without directive:

    rewrite / {
        if {>Connection} is Upgrade
        if {>Upgrade} is websocket
        to /special-websocket-url
    }

    proxy /special-websocket-url web1.local:80 web2.local:90 web3.local:100 {
        without /special-websocket-url
        transparent
        websocket
    }

I posted a question about that in the forums a couple years ago: https://caddy.community/t/websocket-proxy-condition/512

@francislavoie I really hope this is done.

And I think the proxy component should also add the IF statement function like the rewrite component.

This will make the configuration file more concise and increase the availability of the proxy component.

At some point, https://github.com/mholt/caddy/pull/1948 will be completed, so you'll be able to use that. For now, what I wrote is probably your best option. I don't see more conditional logic being added to proxy; it would make things too complex. Currently the path is what makes proxy conditional, and I think that's fine (until we have the if directive).

@SmilingNavern Just wondering, what's your status on the cookie policy? I think it might be okay to close this issue and open a new one for that, because this one is getting pretty long with discussion.

@francislavoie This is great! I'm looking forward to it!

@francislavoie I am at an early stage on this :) Actually, it would be good as a separate issue.

Load balancing policies have been implemented into v2; closing. If new ones are needed, open a new issue or submit a PR!
