Azure-functions-host: Multipart/form-data processing via HttpTrigger doesn't work for Azure Functions (JavaScript)

Investigative information

I'm trying to create a multipart parser for Azure Functions (Node.js).

When I post multipart image data, the binary part seems to be forcibly converted to a string.

Please provide the following:

- Timestamp: Sun, 08 Oct 2017 03:24:40 GMT

- Function App name: carreviewver2

- Function name(s) (as appropriate): HttpTriggerMulti

- Invocation ID:

- Region: Japan East

I also tried the Azure Functions CLI (Core) locally on my Mac, and the result is the same.

Repro steps

Provide the steps required to reproduce the problem:

- Create an Azure Function with Node.js and a function.json

NOTE: this sample doesn't parse the multipart data. However, when I tried to extract the binary part, the result was the same.

_index.js_

module.exports = function (context, req) {
  context.log('JavaScript HTTP trigger function processed a request.');
  context.log('****************** context start');
  context.log(context);
  context.log('****************** start');
  context.bindings.outputBlob = context.bindings.req.body;
  context.log('****************** end');
  context.res = {
    // status: 200, /* Defaults to 200 */
    body: "Hello " + (req.query.name || req.body.name)
  };
  context.done();
};

Also, set "dataType": "binary" in function.json:

_function.json_

{
  "disabled": false,
  "bindings": [
    {
      "authLevel": "function",
      "type": "httpTrigger",
      "direction": "in",
      "name": "req",
      "dataType": "binary"
    },
    {
      "type": "http",
      "direction": "out",
      "name": "res"
    },
    {
      "type": "blob",
      "name": "outputBlob",
      "path": "outcontainer3/some.png",
      "connection": "carreviewstr_STORAGE",
      "direction": "out"
    }
  ]
}

- Send a multipart/form-data request to the function (Node.js, HTTP trigger and blob bindings).

If you like, you can use this.

Expected behavior

When "dataType": "binary" is specified in function.json, the binary data is uploaded intact and I can get it from req.body or context.bindings.req.body.

Actual behavior

The multipart binary data appears to be converted to a string, losing information.

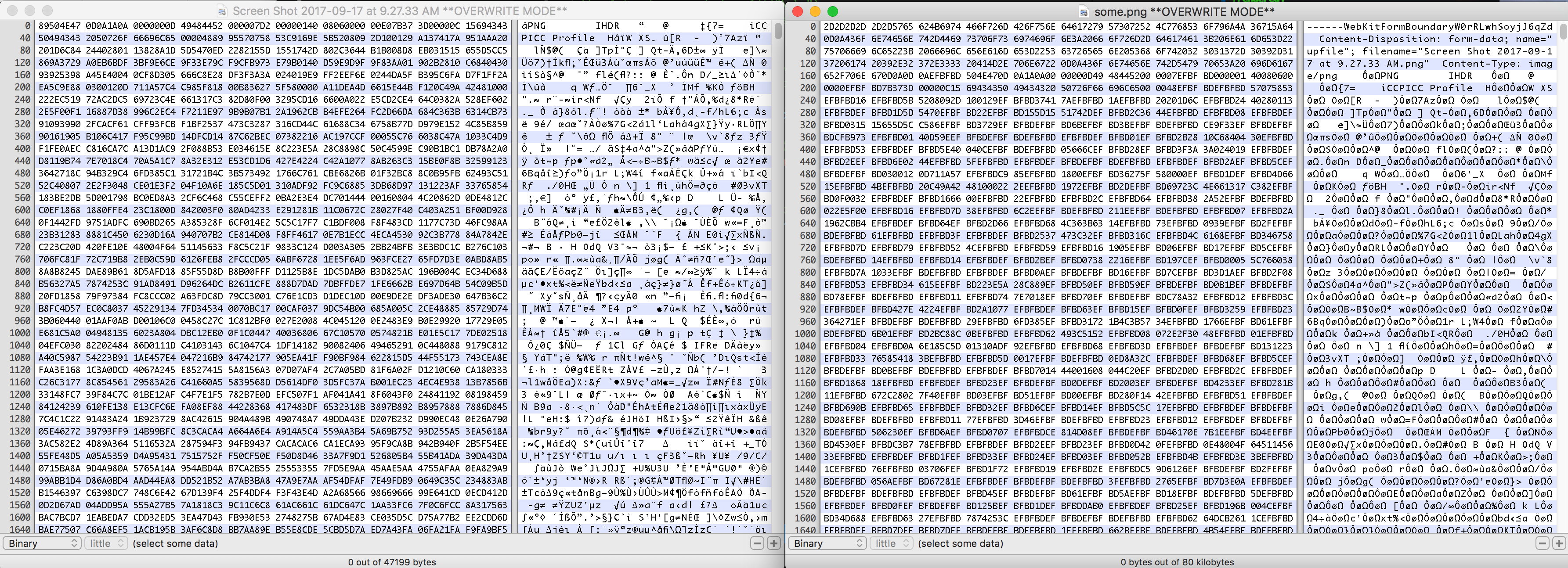

Left: Original

Right: uploaded data (I didn't strip the multipart headers; however, the binary part has been converted)

Known workarounds

I tried on Azure Functions (Azure) 2.0.11276.0 (beta), Runtime version: 1.0.11247.0 (~1), and on the Azure Functions CLI (Core). I also set "dataType": "binary" on the output binding; the result was the same.

I also noticed this code:

if (content is Stream)
{
    // for script language functions (e.g. PowerShell, BAT, etc.) the value
    // will be a Stream which we need to convert to string
    ConvertStreamToValue((Stream)content, DataType.String, ref content);
}
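To see why converting a binary body to a string is lossy for images, here is a minimal Node.js sketch that only simulates the effect; it is not the host's code. It decodes the 8-byte PNG file signature as UTF-8 and encodes it back. Byte 0x89 cannot start a valid UTF-8 sequence, so the decoder substitutes U+FFFD, which re-encodes as three different bytes. The names pngSignature and utf8RoundTrip are illustrative only.

```javascript
// Illustration only: simulates "decode binary as UTF-8 text, then re-encode".
// pngSignature and utf8RoundTrip are hypothetical names for this sketch.

// Every PNG file starts with these 8 bytes.
const pngSignature = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// Decode bytes as UTF-8, then encode the resulting string back to bytes.
function utf8RoundTrip(bytes) {
  const asText = bytes.toString('utf8'); // invalid sequences become U+FFFD
  return Buffer.from(asText, 'utf8');
}

const corrupted = utf8RoundTrip(pngSignature);
console.log(pngSignature.toString('hex'));   // 89504e470d0a1a0a
console.log(corrupted.toString('hex'));      // efbfbd504e470d0a1a0a
console.log(pngSignature.equals(corrupted)); // false

// Base64 is a lossless text encoding, so the same bytes survive a string hop.
const viaBase64 = Buffer.from(pngSignature.toString('base64'), 'base64');
console.log(pngSignature.equals(viaBase64)); // true
```

The last two lines suggest why the Base64 workaround mentioned in the comments avoids the corruption: the image only ever travels as ASCII text.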

Related information

Provide any related information

- Programming language used: JavaScript

- Links to source: included above

- Bindings used

HTTP bindings, Blob bindings.

Comments

@TsuyoshiUshio thanks for the detailed report, this is a known issue. I'll investigate whether I can enable the dataType: binary workaround in the meantime.

Long term, I plan to modify the HTTP binding so that we pass a byte[] or a byte stream to Node and other non-C# languages. That way, 1. we don't lose any binary information, and 2. we give control of content parsing to the language (so you could use body-parser in Node, etc.).

Thank you @mamaso! I'm glad to hear that. :) That clarifies the issue! I struggled with this known issue for a whole day and finally went with Base64, which works: https://blogs.technet.microsoft.com/livedevopsinjapan/2017/10/07/image-uploading-with-azure-functions-node-js-and-angular-4/ I suspect a lot of people have had the same experience. Do you mind if I contribute to the Azure documentation to mention this known issue and how to work around it?
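On the function side, the Base64 workaround described above can be sketched roughly as follows. This is a minimal sketch, not the code from the linked post. It assumes the client sends application/json such as {"data": "<base64 bytes>"} (the data field name is hypothetical), that the Node.js worker has parsed that JSON into req.body, and that outputBlob is the blob output binding from the function.json in the issue. uploadHandler and decodeBase64Payload are illustrative names.

```javascript
// Minimal sketch of the Base64 upload workaround (hypothetical names).
// ASSUMPTION: the request body is JSON like { "data": "<base64 image bytes>" }
// and has already been parsed into an object in req.body.

// Turn the Base64 string back into the original binary bytes.
function decodeBase64Payload(body) {
  if (!body || typeof body.data !== 'string') {
    throw new Error('Expected a JSON body with a base64 "data" field');
  }
  return Buffer.from(body.data, 'base64');
}

// Node.js function entry point in the context/done style used in this issue.
function uploadHandler(context, req) {
  try {
    // ASSUMPTION: "outputBlob" is the blob output binding in function.json.
    context.bindings.outputBlob = decodeBase64Payload(req.body);
    context.res = { status: 200, body: 'Uploaded' };
  } catch (err) {
    context.res = { status: 400, body: err.message };
  }
  context.done();
}

module.exports = uploadHandler;
```

The cost of this approach is roughly a one-third size increase on the wire, in exchange for a body that survives any text conversion.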

Yeah, I think that sounds like a great idea!

I'll do it. :)

Hi all, any update on this issue?

Hello @mamaso

Any updates on this issue?

The issue appears to be that we convert bodies to UTF-8 strings when the content type is multipart: https://github.com/Azure/azure-functions-host/blob/dev/src/WebJobs.Script/Rpc/MessageExtensions/RpcMessageConversionExtensions.cs#L119

My fix will be to pass the body as bytes.

Understand that this does not parse the files, etc. I don't believe it makes sense for Functions to add direct support for multipart/form-data at this time, but we should stop converting multipart bodies to UTF-8. That will be the scope of my fix, and I'm scoping it to V2 only for now.

For Node.js, you'll still need to parse the body with a multipart parser in your Node.js code.

For example, here is my test, which writes the file to disk (do not do this in a real scenario! It's just to validate that the contents are equivalent):

const fs = require('fs');
const path = require('path');
const multer = require('multer');
const streams = require('memory-streams');

const upload = multer({ storage: multer.memoryStorage() });

module.exports = function (context, req) {
  context.log('JavaScript HTTP trigger function processed a request.');
  context.log('****************** context start');
  context.log(context);

  // Wrap the raw body in a readable stream and copy the request's own
  // properties (headers, method, ...) onto it so multer can treat it as a request.
  const stream = new streams.ReadableStream(req.body);
  for (const key in req) {
    if (req.hasOwnProperty(key)) {
      stream[key] = req[key];
    }
  }
  context.stream = stream;
  context.log('****************** start');

  const next = (err) => {
    if (err) {
      context.done(err); // surface parse errors instead of crashing on files[0]
      return;
    }
    // memoryStorage() keeps each uploaded file's bytes in files[i].buffer
    fs.writeFileSync(path.join(__dirname, "../test.png"), context.stream.files[0].buffer);
    context.log('****************** end');
    context.res = {
      // status: 200, /* Defaults to 200 */
      // multer puts text fields on the stream's body; req.body is still the raw bytes
      body: "Hello " + (req.query.name || context.stream.body.name)
    };
    context.done();
  };

  upload.any()(stream, null, next);
};
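If you'd rather avoid multer and memory-streams, the parsing step can also be done by hand once the body arrives as bytes. The following is a minimal sketch, not part of Functions or of any library. It assumes req.body is a Buffer (the behavior the fix above targets; a body already converted to a UTF-8 string would have damaged binary parts), CRLF line endings as in the multipart format, every part having at least one header line, and no nested multipart parts. getBoundary and parseMultipart are hypothetical helper names.

```javascript
// Minimal in-memory multipart/form-data parser (hypothetical helpers).
// ASSUMPTIONS: body is a Buffer, lines end with CRLF, parts are not nested.

// Read the boundary from a header such as
// "multipart/form-data; boundary=----WebKitFormBoundaryabc".
function getBoundary(contentType) {
  const match = /boundary=(?:"([^"]+)"|([^;]+))/i.exec(contentType || '');
  if (!match) {
    throw new Error('No multipart boundary in Content-Type');
  }
  return match[1] || match[2].trim();
}

function parseMultipart(body, contentType) {
  const delimiter = Buffer.from('--' + getBoundary(contentType));
  // A part's content ends at the CRLF that precedes the next delimiter.
  const closing = Buffer.concat([Buffer.from('\r\n'), delimiter]);
  const parts = [];

  let pos = body.indexOf(delimiter);
  if (pos === -1) {
    return parts;
  }

  while (true) {
    pos += delimiter.length;
    // "--" immediately after a delimiter marks the final boundary.
    if (body.subarray(pos, pos + 2).toString('latin1') === '--') {
      break;
    }
    pos += 2; // skip the CRLF ending the delimiter line

    const headerEnd = body.indexOf('\r\n\r\n', pos);
    if (headerEnd === -1) {
      throw new Error('Malformed part: missing blank line after headers');
    }
    // Only the part headers are decoded as text, never the content bytes.
    const headers = {};
    for (const line of body.subarray(pos, headerEnd).toString('utf8').split('\r\n')) {
      const colon = line.indexOf(':');
      if (colon > 0) {
        headers[line.slice(0, colon).trim().toLowerCase()] = line.slice(colon + 1).trim();
      }
    }

    const next = body.indexOf(closing, headerEnd + 4);
    if (next === -1) {
      throw new Error('Malformed part: missing closing boundary');
    }

    const disposition = headers['content-disposition'] || '';
    const name = /\bname="([^"]*)"/i.exec(disposition);
    const filename = /\bfilename="([^"]*)"/i.exec(disposition);
    parts.push({
      name: name ? name[1] : undefined,
      filename: filename ? filename[1] : undefined,
      contentType: headers['content-type'],
      data: body.subarray(headerEnd + 4, next), // raw bytes, untouched
    });

    pos = next + 2; // now at the start of the next delimiter
  }
  return parts;
}
```

In a function you might call parseMultipart(req.body, req.headers['content-type']) (assuming lowercase header keys) and assign a file part's data to a blob output binding. Because this sketch holds the whole body in memory, a streaming parser such as busboy, which multer builds on, is a better fit for large uploads.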

Is this fix in Azure Functions v2 for Node.js? If so, I'm still getting a body that I need to parse as multipart.

This is one solution for uploading an image using _createBlockBlobFromText_:

https://github.com/nemanjamil/azure-functions/blob/master/azure_upload_image.js

I would appreciate it if someone has a solution for uploading the image using createBlockBlobFromStream, without the Express library.

https://github.com/nemanjamil/azure-functions/blob/master/azure_upload_image_strem.js

In this solution, the uploaded image is not parsed as expected: if you open the uploaded image in Notepad, you will see two additional rows before "data:image/jpeg;base64..."
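The two extra rows described above are probably multipart part headers stored together with the data URL text. Assuming that is the cause, one way to recover the image is to locate the data URL in the text and decode only its Base64 payload before uploading. decodeDataUrl is a hypothetical helper, and the sketch assumes the payload is a single unbroken Base64 run, as produced by the browser's FileReader.readAsDataURL.

```javascript
// Hypothetical helper: extract and decode a base64 data URL embedded in text.
// ASSUMPTION: the text may contain extra lines (e.g. multipart part headers)
// before the data URL and a boundary line after it.
function decodeDataUrl(text) {
  const match = /data:([\w.+-]+\/[\w.+-]+);base64,([A-Za-z0-9+\/=]+)/.exec(text);
  if (!match) {
    throw new Error('No base64 data URL found');
  }
  return {
    mimeType: match[1],                     // e.g. "image/jpeg"
    bytes: Buffer.from(match[2], 'base64'), // the actual image bytes
  };
}
```

The returned bytes Buffer, rather than the raw request text, is what would then be written to the blob.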

I am facing this exact same issue in C# (.NET Core 2.0). Can anyone help?
