Azure-docs: Not able to Execute Notebook in a Pipeline in Synapse

I'm trying to follow the guide, but I cannot execute the notebook in a pipeline.

I created a notebook with these statements:

%%spark

import org.apache.spark.sql.DataFrameReader

spark.sql("CREATE DATABASE IF NOT EXISTS nyctaxi")

val df = spark.read.sqlanalytics("devoteamnlwsSQL.dbo.Trip")

df.write.mode("overwrite").saveAsTable("nyctaxi.trip")

The notebook works fine when run interactively, but if I debug or trigger a pipeline whose Synapse Notebook activity calls the notebook, I get the following error:

error: value sqlanalytics is not a member of org.apache.spark.sql.DataFrameReader

Thanks

Document Details

⚠ Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.

- ID: 63469eed-3276-1c40-77be-9c3c5541ef1e

- Version Independent ID: a9d9c43e-e4df-0904-334d-343c431dc5bf

- Content: Tutorial: Get started orchestrate with pipelines - Azure Synapse Analytics

- Content Source: articles/synapse-analytics/get-started-pipelines.md

- Service: synapse-analytics

- Sub-service: pipeline

- GitHub Login: @saveenr

- Microsoft Alias: saveenr

All 4 comments

@zincob - Thanks for posting the issue. We are looking into it and will get back to you shortly.

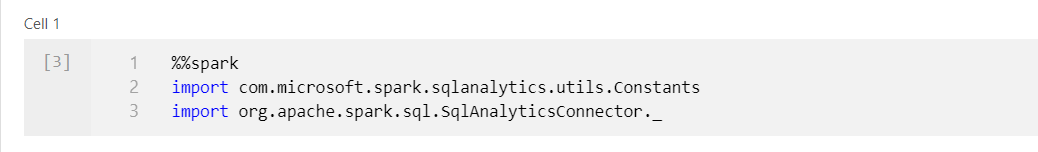

@zincob - According to this document, when the sqlanalytics command is run from a pipeline, the notebook must include the step that imports the required modules, as shown below.
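For readers who cannot see the screenshot, here is a minimal sketch of what the corrected notebook cell might look like. The two import lines are an assumption based on the Azure Synapse Spark-to-SQL connector documentation, not something quoted in this thread, so check them against the document linked above. The rest of the cell is the original poster's code, unchanged.

```scala
%%spark
// Assumed imports (not quoted in this thread): interactive notebook sessions
// appear to pre-load them, while a pipeline run needs them stated explicitly.
import com.microsoft.spark.sqlanalytics.utils.Constants
import org.apache.spark.sql.SqlAnalyticsConnector._

// Original notebook statements from the question, unchanged.
spark.sql("CREATE DATABASE IF NOT EXISTS nyctaxi")

// sqlanalytics becomes available on DataFrameReader once the connector import is in scope.
val df = spark.read.sqlanalytics("devoteamnlwsSQL.dbo.Trip")

df.write.mode("overwrite").saveAsTable("nyctaxi.trip")
```

If the assumption holds, the wildcard import is what adds `sqlanalytics` to `DataFrameReader`, which would explain the "is not a member of" error when it is missing. The `import org.apache.spark.sql.DataFrameReader` line from the original cell is left out because it does not bring the connector into scope.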

Thanks for the feedback, the pipeline is working!

I strongly suggest that the tutorial include or link to the document you shared.

IMHO, the tutorial doesn't contain all the information required to follow it successfully.

@zincob - Thanks for your contribution, I've addressed this issue in PR: MicrosoftDocs/azure-docs-pr#134761. The changes should be live EOD or tomorrow.
