Azure-docs: Recurring issue with aks sample app -- external IP pending after 1 day

After building an AKS cluster, I tried to expose an external IP through a service of type LoadBalancer, but the external IP was still pending after a day:

$ kubectl get services
NAME               TYPE           CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
azure-vote-back    ClusterIP      10.0.138.4   <none>        6379/TCP       1d
azure-vote-front   LoadBalancer   10.0.7.206   <pending>     80:30732/TCP   1d

I've deleted the app from Kubernetes and redeployed it, with similar results:

kubectl get services
NAME               TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
azure-vote-back    ClusterIP      10.0.125.97   <none>        6379/TCP       6m
azure-vote-front   LoadBalancer   10.0.157.13   <pending>     80:31329/TCP   5m

Are there other things I can do to troubleshoot this situation?

I am running in westus2 using k8s v1.10.5.
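One possible starting point, sketched here rather than taken from the docs, is to pull out every service whose EXTERNAL-IP is still `<pending>` and run `kubectl describe service` on it, since the Events section at the bottom of that output is where load balancer provisioning errors tend to show up. The helper names `pending_services` and `describe_pending` are hypothetical, and the parsing assumes the default column order shown in the output above.

```shell
# pending_services: read `kubectl get services` table output on stdin and
# print the NAME of every row whose EXTERNAL-IP column is "<pending>".
# Hypothetical helper; assumes the default column order
# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE.
pending_services() {
    awk '$4 == "<pending>" { print $1 }'
}

# describe_pending: show details and events for each pending service
# in the current namespace.
describe_pending() {
    kubectl get services | pending_services | while read -r name; do
        kubectl describe service "$name"
    done
}
```

Calling `describe_pending` with no arguments prints the description of each pending service in turn.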

Document Details

⚠ Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.

- ID: 6115d555-45a1-6539-4af6-fbc20a99f008

- Version Independent ID: 21f80c83-ba10-9b7e-c54f-51a9c140517c

- Content: Quickstart - Azure Kubernetes cluster for Linux

- Content Source: articles/aks/kubernetes-walkthrough.md

- Service: container-service

- GitHub Login: @iainfoulds

- Microsoft Alias: iainfou

All 13 comments

Thanks for the feedback! We are currently investigating and will update you shortly.

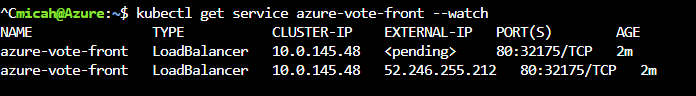

@mrbobfrog have you tried canceling the watch action and starting it again?

kubectl get service azure-vote-front --watch

In previous cases where it seemed to take a long time to allocate a public IP, I pressed Ctrl+C to stop the command, and after running it again I saw that the public IP was there.
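As an alternative to restarting the watch by hand, a small polling loop can ask for the IP field directly. This is only a sketch: the function name `wait_for_external_ip` and its default attempt count and delay are assumptions, not something from the quickstart.

```shell
# wait_for_external_ip SERVICE [ATTEMPTS] [DELAY_SECONDS]
# Polls the service until status.loadBalancer.ingress[0].ip is populated,
# then prints the IP. Returns 1 if it is still empty after ATTEMPTS tries.
# (Hypothetical helper; defaults of 30 attempts and 10 seconds are arbitrary.)
wait_for_external_ip() {
    service=$1
    attempts=${2:-30}
    delay=${3:-10}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # An unset or empty ingress list yields empty output (or an error,
        # which is discarded), so an empty string means "still pending".
        ip=$(kubectl get service "$service" \
            -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
        if [ -n "$ip" ]; then
            echo "$ip"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "external IP for $service still pending after $attempts attempts" >&2
    return 1
}
```

For example, `wait_for_external_ip azure-vote-front 18 10` would give up after roughly three minutes.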

@MicahMcKittrick-MSFT I have run multiple times with and without the --watch option and it's still pending:

$ kubectl get services
NAME               TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
azure-vote-back    ClusterIP      10.0.125.97   <none>        6379/TCP       15h
azure-vote-front   LoadBalancer   10.0.157.13   <pending>     80:31329/TCP   15h

...

Thanks for that. I am working on a repro right now. Will update shortly.

In case it's of help, here is yaml from the service:

kubectl get service azure-vote-front -o yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"azure-vote-front","namespace":"default"},"spec":{"ports":[{"port":80}],"selector":{"app":"azure-vote-front"},"type":"LoadBalancer"}}
  creationTimestamp: 2018-07-27T02:58:16Z
  name: azure-vote-front
  namespace: default
  resourceVersion: "170778"
  selfLink: /api/v1/namespaces/default/services/azure-vote-front
  uid: e8f68c5e-9148-11e8-916a-763b8bf97ac6
spec:
  clusterIP: 10.0.157.13
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 31329
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: azure-vote-front
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer: {}

@mrbobfrog I followed this doc, except that I deployed to West US 2 as you did rather than East US.

Here is the YAML output for my service:

kubectl get service azure-vote-front -o yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"azure-vote-front","namespace":"default"},"spec":{"ports":[{"port":80}],"selector":{"app":"azure-vote-front"},"type":"LoadBalancer"}}
  creationTimestamp: 2018-07-27T19:14:56Z
  name: azure-vote-front
  namespace: default
  resourceVersion: "3610"
  selfLink: /api/v1/namespaces/default/services/azure-vote-front
  uid: 58f7966d-91d1-11e8-a013-126155076dd2
spec:
  clusterIP: 10.0.145.48
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 32175
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: azure-vote-front
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 52.246.255.212

I am assuming something just got hung up with your deployment. Can you try removing your cluster and following the doc again to see if you get the same issue? I have tried this twice in West US 2 and once in East US, and each time the IP was allocated within 2 to 3 minutes.

I'll redeploy and let you know

Same results, though I realized I added some complexity.

kubectl get services
NAME               TYPE           CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
azure-vote-back    ClusterIP      10.0.97.18   <none>        6379/TCP       14m
azure-vote-front   LoadBalancer   10.0.82.8    <pending>     80:30092/TCP   14m

Here is my set of commands:

owner=engineering.devops
environment=dev
color=blue
prefix=omega-$environment
region="westus2"
resource_group=${prefix}-${region}-${color}-group
network_name=${prefix}-${region}-${color}-network
supernet="<rfc-1918-private-ipv4>/16"
subnet="<rfc-1918-private-ipv4>/20"

az group create --name "${resource_group}" --location "${region}"

az network vnet create \
  -g "${resource_group}" -n "${network_name}" \
  --address-prefix "${supernet}" --subnet-name kubernetes \
  --subnet-prefix "${subnet}" -l ${region} \
  --tags owner=$owner project=omega environment=$environment

az ad sp create-for-rbac \
  --role="Contributor" \
  --scopes="/subscriptions/<redacted>/resourceGroups/${resource_group}"

sp_name=<redacted>
sp_password=<redacted>
sp_appid=<redacted>

az aks create \
  --kubernetes-version 1.10.5 \
  --resource-group ${resource_group} \
  --name ${prefix}-${region}-cluster \
  --enable-rbac \
  --network-plugin azure \
  --aad-server-app-id $sp_appid \
  --aad-server-app-secret $sp_password \
  --aad-client-app-id <redacted> \
  --aad-tenant-id <redacted> \
  --node-count 3 \
  --vnet-subnet-id /subscriptions/${subscription_id}/resourceGroups/${resource_group}/providers/Microsoft.Network/virtualNetworks/${network_name}/subnets/kubernetes \
  --service-principal $sp_name \
  --client-secret <redacted> \
  --tags owner=$owner project=omega environment=${environment} application=kubernetes deploy=${color}

I'll try a simpler setup again and see if I get the same results -- I'll try in eastus as well.

@MicahMcKittrick-MSFT, I realize that this issue is likely implementation related and not documentation. If I can isolate which setting triggers the failure to provision a public ip, I'll open another issue in the appropriate area (and reference it here for the next person).

I do, however, believe the documentation can be improved by adding some other commands to help the user gain familiarity with the azure platform. Some examples could be az group list and az aks list once the objects have been created.
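As a rough sketch of what such follow-up commands could look like, the function below lists the resource groups and AKS clusters in table form. The wrapper name `list_aks_resources` is hypothetical; it only combines `az group list` and `az aks list` with the CLI's `--output table` option.

```shell
# list_aks_resources: print the resource groups and AKS clusters visible to
# the subscription the Azure CLI is currently logged in to.
# (Hypothetical wrapper around two read-only az commands.)
list_aks_resources() {
    echo "Resource groups:"
    az group list --output table
    echo "AKS clusters:"
    az aks list --output table
}
```

Both commands are read-only, so they are safe to run at any point after the objects have been created.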

Thanks @mrbobfrog for the update. It seems that it is something with the custom settings you are adding that is causing the issue. Please do let us know once you have a solution. That way we can consider adding even more to the docs.

As per your second comment, I will assign this to the content author to review this doc and see if we can add any additional information or commands to the doc to make the setup easier.

FYI, @iainfoulds is currently on vacation, so it might take a few days to hear back on this.

The issue was a mismatched client_id which I found in https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/aks/kubernetes-service-principal.md under "Additional considerations":

When specifying the service principal Client ID, use the value of the appId.

On our Azure account I could not get the external IP to assign without the AD integration.
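To catch this kind of mismatch before creating the cluster, one option is a quick sanity check on the value passed to `--service-principal`. The sketch below relies on an appId being a GUID, so a display name or URL-style name fails the check. The function names `is_guid` and `check_client_id` are hypothetical.

```shell
# is_guid VALUE: succeed if VALUE has the 8-4-4-4-12 hexadecimal GUID shape
# that an appId has.
is_guid() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}

# check_client_id VALUE: warn if VALUE does not look like an appId before it
# is used as the service principal client ID.
# (Hypothetical helper; it checks the shape only, not whether the ID exists.)
check_client_id() {
    if is_guid "$1"; then
        echo "ok: looks like an appId"
    else
        echo "warning: '$1' does not look like an appId (GUID)" >&2
        return 1
    fi
}
```

In the script posted earlier in this thread, that would mean running `check_client_id "$sp_name"` before `az aks create`. For an existing cluster, `az aks show --resource-group <group> --name <cluster> --query servicePrincipalProfile.clientId --output tsv` should print the client ID the cluster was actually created with.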

I am encountering something very like what is described above, when following https://docs.microsoft.com/en-us/azure/aks/ingress-static-ip. I run:

helm install stable/nginx-ingress --set controller.service.loadBalancerIP="<redacted>" --set controller.replicaCount=2

This is all as specified in the docs, yet, almost an hour later, I still see:

NAME                                  TYPE           CLUSTER-IP   EXTERNAL-IP   PORT(S)                      AGE
dandy-mule-nginx-ingress-controller   LoadBalancer   <redacted>   <pending>     80:31563/TCP,443:32498/TCP   52m

I'm at a loss for how to proceed. So far I have looked at the nginx-ingress pod logs and assigned the AKS service principal Contributor rights on the entire resource group, all to no avail. Any help would be appreciated!