Awx: Job scheduled on unreachable Isolated Node is stuck in running state and doesn't fail

ISSUE TYPE

- Bug Report

COMPONENT NAME

- API

- UI

SUMMARY

When you run a job through an isolated node which is unreachable, it remains in running status indefinitely.

ENVIRONMENT

- AWX version: latest

STEPS TO REPRODUCE

- Install Tower + Isolated node

- On the Isolated node, add the IP address of the Tower node to the "/etc/hosts.deny" file to deny connections, e.g. `sshd: <IP TOWER NODE>`.

- Run a job through the Isolated node.

EXPECTED RESULTS

The job should fail since the isolated node is unreachable.

ACTUAL RESULTS

The job remains in running status indefinitely instead of failing.

ADDITIONAL INFORMATION

Attached:

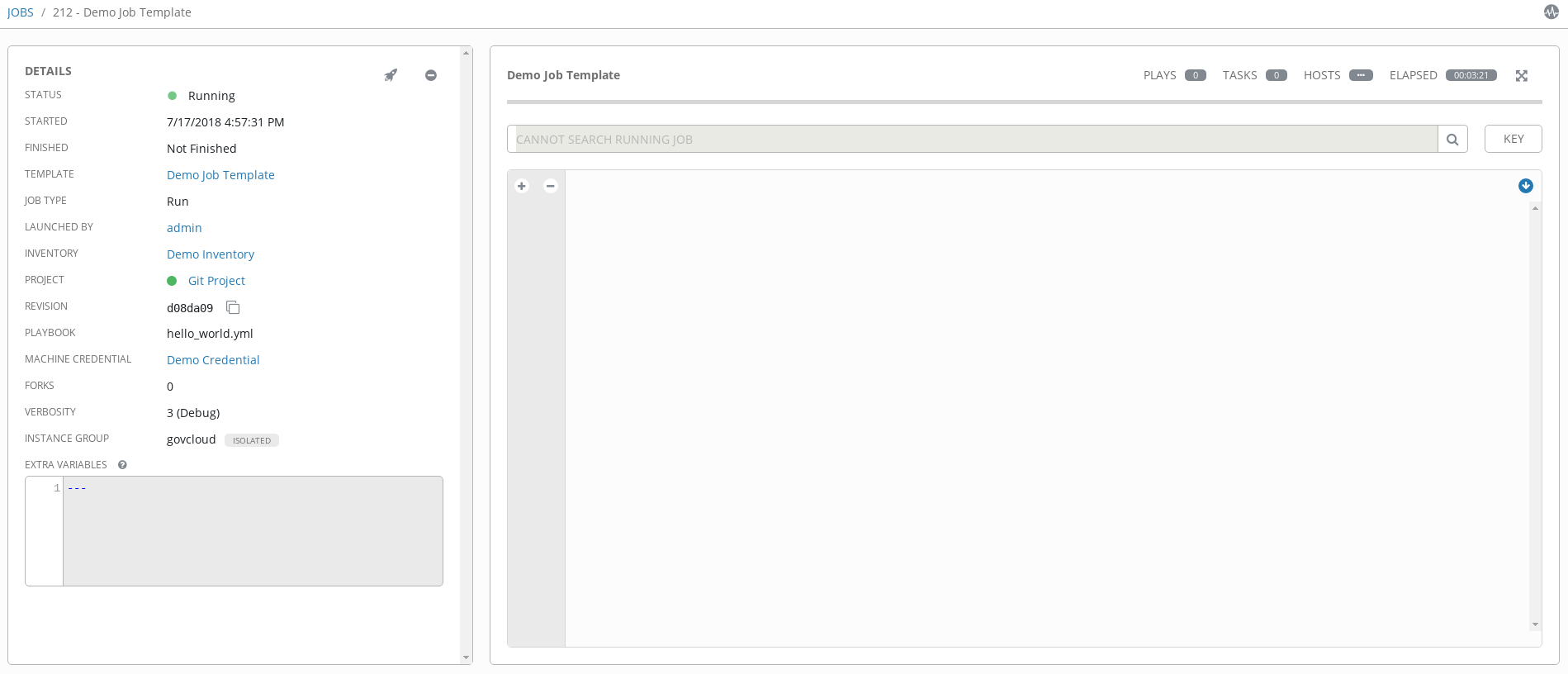

- Screenshot of the job page.

- Log files (management_playbooks.log, tower.log) and Job API output (job_212.txt).

management_playbooks.log

tower.log

job_212.txt


I'd consider this somewhat debatable - one of the intentional design decisions we made with isolated nodes is that they are robust against _very_ unreliable connections and can continue to work if, e.g., you lose the SSH connection for a minute or two. If you set up /etc/hosts.deny and then _restore_ connectivity a minute into the job run, does the playbook finish? If so, I'd argue that this is _normal, intentional_ behavior for this feature.

If you want to configure isolated node execution to _fail_ if the job doesn't start within a certain timeframe (maybe because it's just plain unreachable, and _never_ becomes reachable), there's settings.AWX_ISOLATED_LAUNCH_TIMEOUT (which defaults to 10 minutes):

https://github.com/ansible/awx/blob/devel/awx/main/conf.py#L214
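To make the launch-timeout idea concrete, here is a minimal Python sketch of "give up if the remote run never starts". It is not AWX's code: `wait_for_launch`, `LaunchTimeout`, the `has_started` callback, and the assumption that the timeout is expressed in seconds (600 s for the 10-minute default) are all hypothetical.

```python
import time
from typing import Callable

# Hypothetical stand-in for settings.AWX_ISOLATED_LAUNCH_TIMEOUT,
# assumed to be in seconds (600 s = the 10-minute default).
AWX_ISOLATED_LAUNCH_TIMEOUT = 600


class LaunchTimeout(Exception):
    """Raised when the remote run has not started within the launch timeout."""


def wait_for_launch(
    has_started: Callable[[], bool],
    timeout: float = AWX_ISOLATED_LAUNCH_TIMEOUT,
    poll_interval: float = 5.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Poll has_started() until it returns True and return the seconds waited.

    Raises LaunchTimeout once `timeout` seconds pass without a start.
    `clock` and `sleep` are injectable so the logic can be tested without
    actually waiting.
    """
    start = clock()
    while True:
        if has_started():
            return clock() - start
        if clock() - start >= timeout:
            raise LaunchTimeout(f"remote run did not start within {timeout} s")
        sleep(poll_interval)
```

Injecting the clock keeps the sketch deterministic: a fake clock that advances on each `sleep` call lets you check the 10-minute behavior instantly.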

I think that whether it is an intentional design to keep the connection open or to do otherwise is not the main issue here. Indeed, in the screenshot, you can see that the output on the right side is completely empty although the verbosity level is 3.

At this level, it is legitimate to expect some information about what is going on and why nothing has started yet, right?

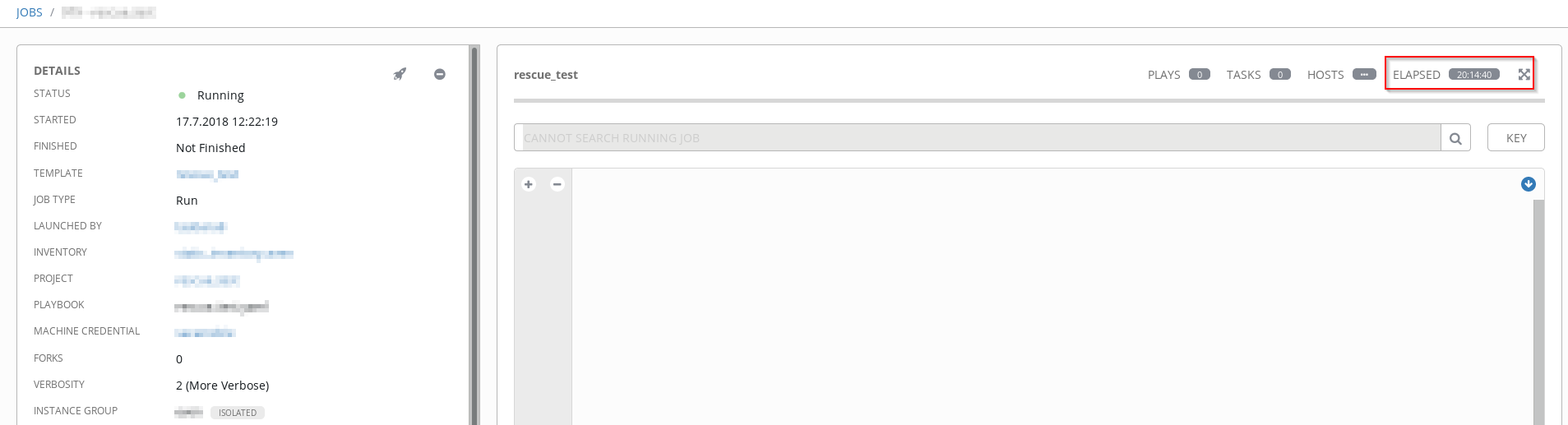

Even if you remove the Tower node from /etc/hosts.deny on the isolated node a few minutes later, it does not retry and it does not run into a timeout:

It has now been running for 20 hours ;-) so if settings.AWX_ISOLATED_LAUNCH_TIMEOUT defaults to 10 minutes, it doesn't work as intended.

@piroux the output in the panel is intended to be what the remote ansible-playbook is printing - since we can't reach the host, there's no recorded output yet. /var/log/tower/ _does_ have a log file (management_playbooks.log) that shows what _awx_ is doing under the hood synchronization-wise, but that's an implementation detail of awx's isolated execution model, and isn't exposed to the user in the UI currently. Maybe there are certain classes of connection issues like this that we could expose in the UI?
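For anyone who wants to check that synchronization output without opening the file by hand, here is a minimal sketch that prints the end of the log. The path comes from the comment above; the `tail` helper and its 50-line default are illustrative assumptions, and your install may log elsewhere.

```python
from collections import deque
from pathlib import Path

# Log location taken from the comment above; adjust if your install differs.
MANAGEMENT_LOG = Path("/var/log/tower/management_playbooks.log")


def tail(path: Path, n: int = 50) -> list[str]:
    """Return the last n lines of a text file without holding it all in memory."""
    with path.open("r", encoding="utf-8", errors="replace") as fh:
        # deque with maxlen keeps only the most recent n lines while iterating.
        return [line.rstrip("\n") for line in deque(fh, maxlen=n)]


if __name__ == "__main__" and MANAGEMENT_LOG.exists():
    for line in tail(MANAGEMENT_LOG):
        print(line)
```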

@unkaputtbar112 okay, that's good to know. I'll have to try this out myself and see if I'm able to reproduce. The isolated sync process _should_ be resilient to periodic unreachable states between the controller and the isolated node, like the one described in this ticket; if that's not true, then it's likely a bug.
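The intended behavior described here can be sketched as a polling loop that tolerates short unreachable periods and picks up again once connectivity returns. This is a hypothetical illustration, not AWX's isolated sync implementation: `sync_until_done`, the `poll` callback with its `"running"`/`"done"`/`"unreachable"` return values, and the consecutive-failure threshold are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SyncResult:
    finished: bool                  # True if the remote run reported completion
    polls: int                      # number of polls made
    max_consecutive_failures: int   # longest unreachable streak observed


def sync_until_done(
    poll: Callable[[], str],
    max_consecutive_unreachable: int = 12,
) -> SyncResult:
    """Poll a (hypothetical) isolated node until it reports "done".

    poll() is assumed to return "running", "done", or "unreachable".
    Transient "unreachable" results are tolerated; the sync only gives up
    after max_consecutive_unreachable failures in a row. A real loop would
    also sleep between polls.
    """
    consecutive = 0
    worst = 0
    polls = 0
    while True:
        polls += 1
        status = poll()
        if status == "unreachable":
            consecutive += 1
            worst = max(worst, consecutive)
            if consecutive >= max_consecutive_unreachable:
                return SyncResult(False, polls, worst)
            continue
        consecutive = 0  # connectivity restored: reset the failure streak
        if status == "done":
            return SyncResult(True, polls, worst)
```

The threshold plays the role of "how long an outage we're willing to ride out". In the scenario reported in this ticket, restoring connectivity would reset the streak and let the run continue, which is the retry behavior the reporter did not observe.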

settings.AWX_ISOLATED_LAUNCH_TIMEOUT is the timeout for launching the job - in other words, how long we'll wait to make initial contact with the remote system and start ansible-playbook. If you want to set a _cumulative_ job timeout for the entire run, you can do so via settings.DEFAULT_JOB_TIMEOUT (as you've seen, jobs will _never_ time out by default).
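A sketch of how the two settings differ in scope, assuming both are in seconds and that a `DEFAULT_JOB_TIMEOUT` of 0 means "no timeout" (consistent with jobs never timing out by default). `timeout_reason` is a hypothetical helper, and measuring the cumulative timeout from launch is a simplification.

```python
from typing import Optional

# Hypothetical stand-ins for the two settings discussed above (seconds).
AWX_ISOLATED_LAUNCH_TIMEOUT = 600  # 10-minute default mentioned in this thread
DEFAULT_JOB_TIMEOUT = 0            # assumed: 0 disables the cumulative timeout


def timeout_reason(
    seconds_since_launch: float,
    started: bool,
    launch_timeout: int = AWX_ISOLATED_LAUNCH_TIMEOUT,
    job_timeout: int = DEFAULT_JOB_TIMEOUT,
) -> Optional[str]:
    """Return which timeout, if any, should fail the job right now."""
    # The launch timeout only applies until ansible-playbook has started remotely.
    if not started and seconds_since_launch >= launch_timeout:
        return "launch_timeout"
    # The cumulative timeout covers the whole run; 0 means it never fires.
    if job_timeout > 0 and seconds_since_launch >= job_timeout:
        return "job_timeout"
    return None
```

Under this sketch, a job that never started (like the 20-hour run reported above) would be failed by the launch timeout, while a job that did start would only be stopped if `DEFAULT_JOB_TIMEOUT` were set.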

@cesarfn4 @piroux @unkaputtbar112 we've just released 1.0.7, which we believe resolves the underlying issue here. You can try it out here: https://github.com/ansible/awx/releases/tag/1.0.7

Let us know if you're still seeing this issue after installing the latest awx - thanks!
