Awx: Updates in Roles are not consistently brought into awx

ISSUE TYPE

- Bug Report

COMPONENT NAME

- API

SUMMARY

Updates to roles are not consistently brought into AWX.

STEPS TO REPRODUCE

Primary repo: a junk repo containing only a playbook that calls one test role. It also contained (2) naming variations of the rolesfile, each declaring (3) roles (the files were identical apart from the name, but didn't have to be).

Roles: (2) random roles, not really used for testing. (1) test role just echoing a variable.

1 - Create project, inventory, job template using the above. Set project to update on launch and delete on update. Cache timeout = 0

2 - Trigger job template, view/record echo statement

3 - Repeat step # 2 for a total of (5) attempts.

4 - Modify the variable in the test role and push the changes.

5 - Repeat steps # 2 and # 3.

6 - Repeat step # 4 (5) times.

In all my tests, this always failed within the (25) job runs. Failure = the output in Ansible Tower console regarding the echoed variable did not match what was pushed to the test role. NOTE - in all cases the project update output showed that the ansible-galaxy command ran and was "changed" for each of the "rolesfile" and appeared to be correct on the filesystem.

One interesting observation: if we remove the "when" statement described under ADDITIONAL INFORMATION (i.e. the condition that triggers the find and ansible-galaxy tasks only on repo change), the failures mentioned above disappear. The project update output still showed the same "changed" for the ansible-galaxy task, since these tasks always triggered anyway due to delete on project update.

EXPECTED RESULTS

Correct roles being pulled into awx.

ACTUAL RESULTS

Roles remain unchanged.

ADDITIONAL INFORMATION

We've done the following already:

- Changed the Ansible stat task "detect requirements.yml" to a find that looks for "rolesfile", "rolesfile.yml" or "requirements.yml" anywhere in the repository.

- Updated the ansible-galaxy command to iterate over all of those paths and extract the roles.

- Both of these tasks are wrapped in a block and only trigger when a change was detected in the primary repo, or if the project was deleted on update.

related #106

All 33 comments

Are any of the tasks running in parallel?

"Enable concurrent jobs" is not checked in their JT.

I am having the problem reported on #1559.

We have a roles/requirements.yml file but roles are not being updated.

Looking at the playbook that handles this update, I see some strange logic when the cluster has only one worker.

Some Project has "Update on Launch" checked and branch configured to master.

We run a JT using that project.

AWX will do:

1. Run an update job on the project, using scm_full_checkout=false and scm_branch=master

1.1. This is going to execute project_update.yml and run the task "update project using git", updating the repo.

1.2. After updating the repo, the last commit will be saved to scm_version (for the example, let's set that value to ABCDE).

1.3. ansible-galaxy will not run because scm_full_checkout=false

1.4. To sum up, this update will just move the repo to the latest commit, but do nothing with the roles defined in roles/requirements.yml

2. Run the job

2.1. As stated here, with the two-stage SCM process, before running the job, the worker will run project_update.yml again, but this time with scm_full_checkout=true and scm_branch=ABCDE

2.2. The task "check repo using git" will get the local latest commit, ABCDE.

2.3. It compares that value with scm_branch and skips the first play, so scm_result will not be defined.

2.4. It goes to the second play and executes ansible-galaxy without the --force parameter, because scm_result is undefined. If we already have some roles previously installed by ansible-galaxy and they need an update, they will never be updated, because --force is not used.

2.5. To sum up, this second execution of project_update.yml will never update the repo (because it has been updated in the previous update job) and will just run ansible-galaxy to download roles it has not seen before.
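The sequence described in this comment can be sketched as a small Python model. This is only an illustration of the reported behavior, not AWX's actual code: the function names (galaxy_args, run_project_update) and the dictionary holding the checkout state are hypothetical, and the rules encoded are just the ones stated in the steps above.

```python
def galaxy_args(scm_full_checkout, repo_changed):
    """Return the ansible-galaxy arguments that would be used, or None if skipped.

    Hypothetical model of the comment above: galaxy only runs on a full
    checkout, and --force is only added when the first play updated the repo.
    """
    if not scm_full_checkout:
        return None
    args = ["ansible-galaxy", "install", "-r", "requirements.yml"]
    if repo_changed:
        args.append("--force")
    return args


def run_project_update(state, scm_branch, scm_full_checkout, remote_head):
    """Simulate one run of project_update.yml against a local checkout.

    state["commit"] is the commit currently checked out locally.
    """
    target = remote_head if scm_branch == "master" else scm_branch
    repo_changed = state["commit"] != target  # the first play is skipped when equal
    state["commit"] = target
    return galaxy_args(scm_full_checkout, repo_changed)


state = {"commit": "OLD"}
# Stage 1: the update job, scm_full_checkout=false and scm_branch=master.
stage1 = run_project_update(state, "master", False, remote_head="ABCDE")
# Stage 2: the pre-job checkout, scm_full_checkout=true and scm_branch=ABCDE.
stage2 = run_project_update(state, "ABCDE", True, remote_head="ABCDE")

print(stage1)  # galaxy is skipped in stage 1
print(stage2)  # galaxy runs in stage 2, but without --force
```

In this model the only run that sees a changed repo is the one that never calls ansible-galaxy, which is why already-installed roles would stay stale.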

Probably related to #1827 and #2077

I'm experiencing the same issue with awx 3.0.0 where no roles are downloaded, even right after the project creation.

If I remove the unnecessary lines 132-136 and the conditional check 151 (ex 156) when: scm_full_checkout|bool and roles_enabled|bool in the playbook project_update.yml directly inside the container with:

docker exec -it docker-compose_task_1 /bin/bash

sed -i '132,136d' /var/lib/awx/venv/awx/lib/python3.6/site-packages/awx/playbooks/project_update.yml

sed -i '151d' /var/lib/awx/venv/awx/lib/python3.6/site-packages/awx/playbooks/project_update.yml

and relaunch the project with a 'Delete on Update' SCM update option to erase the playbook folder and start on a clean slate, the roles are finally downloaded, but, as I've already pointed out in a previous post, the roles folder tree is incorrect: instead of having all the roles downloaded inside the roles folder, I get:

├── roles
│   ├── git-Roles ------------------> name of the parent folder hosting the git roles repository
│   │   ├── git-Roles
│   │   │   ├── role-name-1
│   │   │   ├── role-name-2
│   │   │   ├── ...
│   │   ├── handlers --------------> from one of the roles
│   │   │   └── main.yml
│   │   ├── meta ------------------> from one of the roles
│   │   │   └── main.yml
│   │   ├── README.md -------------> from one of the roles
│   │   ├── tasks -----------------> from one of the roles
│   │   │   └── main.yml
│   │   ├── tests -----------------> from one of the roles
│   │   │   ├── inventory
│   │   │   └── test.yml
│   │   └── vars ------------------> from one of the roles
│   │       └── main.yml
│   └── requirements.yml

when I should get:

├── roles
│   ├── role-name-1
│   ├── role-name-2
│   ├── ...
│   └── requirements.yml

I can also confirm that this issue exists on AWX.

Stdout error without modifying the project_update.yml.

{
    "changed": false,
    "cmd": [
        "ansible-galaxy",
        "install",
        "-r",
        "requirements.yml",
        "-p",
        "/var/lib/awx/projects/_23__PROJECT_NAME/roles/"
    ],
    "delta": "0:00:07.396695",
    "end": "2019-02-12 22:40:19.839704",
    "msg": "non-zero return code",
    "rc": 1,
    "start": "2019-02-12 22:40:12.443009",
    "stderr": "[WARNING]: - ROLE_NAME was NOT installed successfully: the specified role. ROLE_NAME appears to already exist. Use --force to replace it. ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list.",
    "stderr_lines": [
        "[WARNING]: - ROLE_NAME was NOT installed successfully: the specified role",
        "ROLE_NAME appears to already exist. Use --force to replace it.",
        "",
        "ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list."
    ],
    "stdout": "- extracting ROLE_NAME to /var/lib/awx/projects/_23__PROJECT_NAME/roles/ROLE_NAME",
    "stdout_lines": [
        "- extracting ROLE_NAME to /var/lib/awx/projects/_23__PROJECT_NAME/roles/ROLE_NAME"
    ]
}

After adding --force to this command, the Job Template worked without any issues.

This issue however, does not appear within our Ansible Tower setup.

See https://github.com/ansible/awx/pull/3177. You will need to manually update project_update.yml (or just build a new AWX from devel) until a new AWX release is cut.

@wenottingham

The commit "Fix project updates to properly pull in role requirements." (#3177) does not fix this issue:

Running a project sync ("Get latest SVN revision") with "Delete on Update" leads to:

...

TASK [detect requirements.yml] *************************************************

skipping: [localhost]

TASK [fetch galaxy roles from requirements.yml] ********************************

skipping: [localhost]

TASK [fetch galaxy roles from requirements.yml (forced update)] ****************

skipping: [localhost]

The following roles/requirements.yml is present in the playbooks project root:

- src: <user>@<IP on the local host>:<roles repository folder on the local host>
  scm: git

Deleting & recreating the same project does not change the outcome.

TASK [detect requirements.yml] *************************************************

skipping: [localhost]

The only conditional on that block after the change is when: roles_enabled|bool. Check your settings?

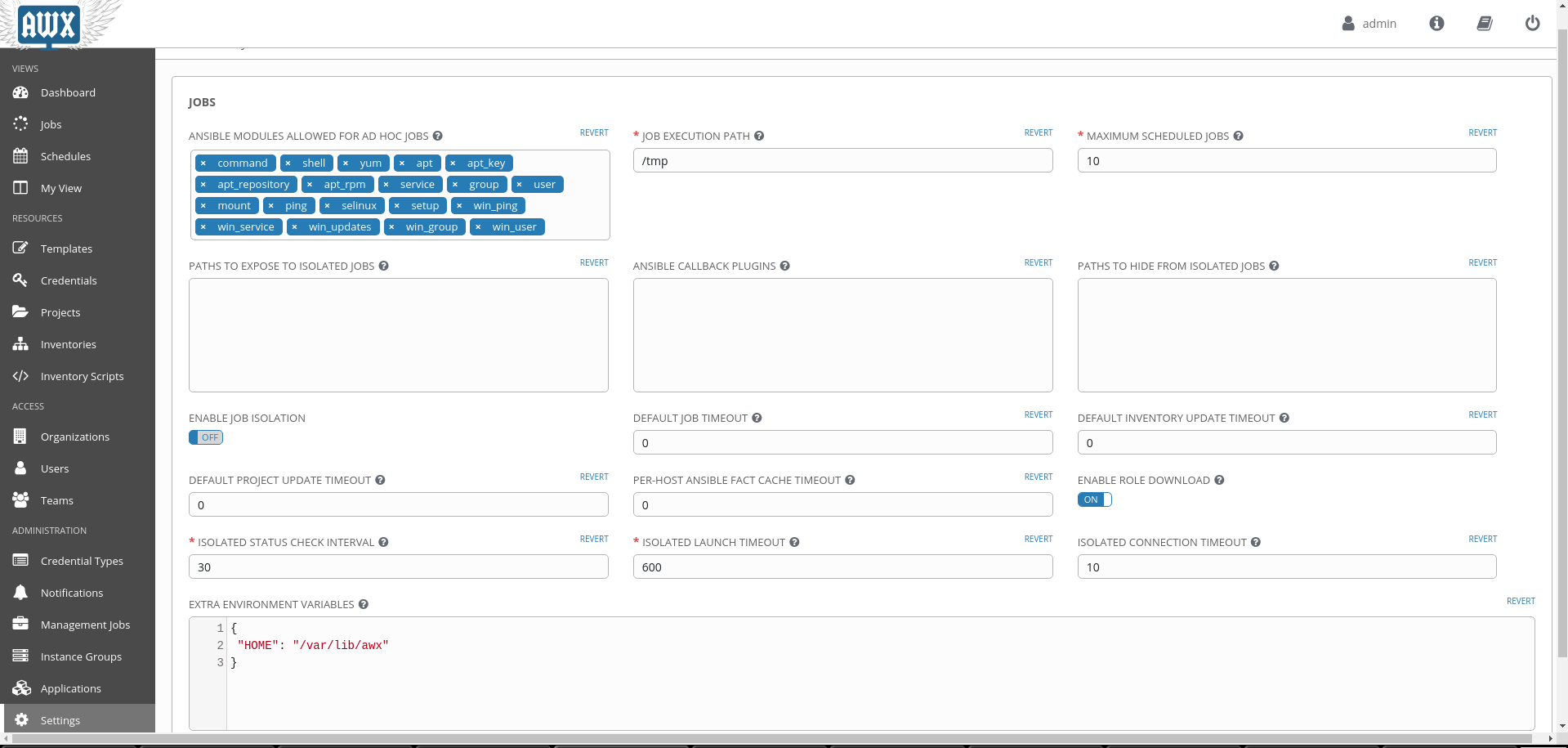

Roles download is enabled:

Hello

Same here: with 3.0.1, the requirements file is never used, even if I delete and recreate the project and roles download is enabled in the admin section.

Should this issue be re-opened?

scm_full_checkout is never set to True, even in task.py:

'scm_full_checkout': True if project_update.job_type == 'run' else False,

Example:

Added:

- name: delete project directory before update
  debug:
    msg: "Debug: scm_full_checkout: {{ scm_full_checkout }}"

to project_update.yml, and it's ALWAYS false (on update, when running a job, etc.)

Is there any update on this issue? I've started seeing similar behavior in Ansible tower since we upgraded to latest a few weeks ago. I believe it could be the same root cause though not 100% sure yet...

+1 - AWX 3.0.1.0

What were you using before upgrading to/using AWX v4.0.0.0 (ansible 2.7.9)?

I am new to AWX and am experiencing the same. What was the previous version that was working?

2019/10/18 AWX 7.0.0 still does not work with roles/requirements.yml :-(

This issue is occurring for us as well, on v7.0.0, ansible v2.8.4.

Project tree pulled looks like:

# ls -l /var/lib/awx/projects/_10__<project>/roles/ |grep req

-rw-r--r-- 1 root root 746 Oct 22 16:22 requirements.yml

Project update (with delete/clean options checked):

TASK [detect requirements.yml] *************************************************

skipping: [localhost,] => {"changed": false, "skip_reason": "Conditional result was False"}

TASK [fetch galaxy roles from requirements.yml] ********************************

skipping: [localhost,] => {"changed": false, "skip_reason": "Conditional result was False"}

TASK [detect collections/requirements.yml] *************************************

skipping: [localhost,] => {"changed": false, "skip_reason": "Conditional result was False"}

TASK [fetch galaxy collections from collections/requirements.yml] **************

skipping: [localhost,] => {"changed": false, "skip_reason": "Conditional result was False"}

project_update.yml section (modified to fix #4750 and attempt at using --force)

- hosts: all
  gather_facts: false
  tasks:
    - block:
        - name: detect requirements.yml
          stat: path={{project_path|quote}}/roles/requirements.yml
          register: doesRequirementsExist

        - name: fetch galaxy roles from requirements.yml
          command: ansible-galaxy install -r requirements.yml --force -p {{roles_destination|default('.')|quote}}
          args:
            chdir: "{{project_path|quote}}/roles"
          register: galaxy_result
          when: doesRequirementsExist.stat.exists
          changed_when: "'was installed successfully' in galaxy_result.stdout"

      when: roles_enabled|bool
      delegate_to: localhost

Manual attempt:

# ansible-galaxy install -r requirements.yml -p .

[WARNING]: -<role_name> was NOT installed successfully: - command /usr/bin/git clone git@<domain>.com:<org>/<project>.git <role_name> failed in directory /home/awx/.ansible/tmp/ansible-local-16288Rpz1Wz/tmp1tqJpc (rc=128)

ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list.

(same result with --force; this works with no problem on our ansible-core node running v2.7.8)

requirements.yml content:

- src: git@<domain>.com:<org>/<role_name>.git
  name: <role_name>
  scm: git

requirements.yml processing/updating has been super buggy since we started using AWX. Can somebody recommend a better method for handling roles? Is one flat repo the only way devs are testing?

Why is this closed? This is apparently not fixed in #3177. I can confirm that that "fix" is applied to our installation.

Edit:

Just for kicks, I modified project_update.yml to remove the conditional and the project update task now fails with:

TASK [detect requirements.yml] *************************************************

fatal: [localhost,]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname localhost,: Name or service not known", "unreachable": true}

That... doesn't make sense to me.

@wenottingham Can you please reopen this and take another look? We also have "roles_enabled" enabled in our settings. #3177 is obviously not fixing this for people.

Fixed it by changing project_update.yml:

FROM:

- hosts: all
  gather_facts: false
  tasks:
    - block:
        - name: detect requirements.yml
          stat: path={{project_path|quote}}/roles/requirements.yml
          register: doesRequirementsExist

        - name: fetch galaxy roles from requirements.yml
          command: ansible-galaxy install -r requirements.yml --force -p {{roles_destination|default('.')|quote}}
          args:
            chdir: "{{project_path|quote}}/roles"
          register: galaxy_result
          when: doesRequirementsExist.stat.exists
          changed_when: "'was installed successfully' in galaxy_result.stdout"

      when: roles_enabled|bool
      delegate_to: localhost

TO:

- hosts: 127.0.0.1
  gather_facts: false
  tasks:
    - name: detect requirements.yml
      stat: path={{project_path|quote}}/roles/requirements.yml
      register: doesRequirementsExist

    - name: fetch galaxy roles from requirements.yml
      command: ansible-galaxy install -r requirements.yml --force -p {{roles_destination|default('.')|quote}}
      args:
        chdir: "{{project_path|quote}}/roles"
      register: galaxy_result
      when: doesRequirementsExist.stat.exists
      changed_when: "'was installed successfully' in galaxy_result.stdout"

This successfully let ansible-galaxy detect and install requirements.yml roles.

I have no idea why this worked.

Project update (with delete/clean options checked):

Roles are no longer checked out when updating a project, only when checking out a project for running against.

Project update (with delete/clean options checked):

Roles are no longer checked out when updating a project, only when checking out a project for running against.

Can you clarify this, please? I don't understand what this means.

With the configuration of my last comment, I went into the UI > Projects >

Well, you also noted that you modified the playbooks and are running on an older release, so I can't be sure about which behavior you're seeing, but this was a change fully implemented in 8.0.

The behavior I'm seeing is identical to the behavior reported in this issue initially and that was also a much older release. This issue has been prevalent for over a year now. The behavior I'm seeing is that requirements.yml is _not_ reliably pulled.

Can you please clarify this statement?

Roles are no longer checked out when updating a project, only when checking out a project for running against.

When are they pulled? Because they are not being pulled on project update. They are not being pulled when a job is run. This is on a brand new, fresh install of 8.0, by the way. The only customizations done for this test were to point to an existing external postgres database.

What do we need to do to update roles in requirements.yml?

In our fresh install of 8.0, to get it working, I just made the exact same change detailed in my prior comment here: https://github.com/ansible/awx/issues/1632#issuecomment-545064169

I also had to re-implement the fix for #4750 to change role destination to: {{roles_destination|default('.')|quote}}

And now roles are updating as expected. I don't know what the intended behavior is, but at least for our workflows, the intended behavior is not functional.

I won't discount user error here, but I feel like we aren't doing anything unusual with our setup. Tree structure is typical. requirements lives in /roles/requirements.txt. AWX install is a fresh install with 8.0. These manual fixes I'm applying should not be necessary. Am I just doing something wrong in configuration?

@Nascentes so I need to edit project_update.yml to get this workaround?

- docker exec -it awx_container bash

- cd /var/lib/awx/venv/awx/lib64/python3.6/site-packages/awx/playbooks

- vim project_update.yml

- hosts: 127.0.0.1
  gather_facts: false
  tasks:
    - name: detect requirements.yml
      stat: path={{project_path|quote}}/roles/requirements.yml
      register: doesRequirementsExist

    - name: fetch galaxy roles from requirements.yml
      command: ansible-galaxy install -r requirements.yml --force -p {{roles_destination|default('.')|quote}}
      args:
        chdir: "{{project_path|quote}}/roles"
      register: galaxy_result
      when: doesRequirementsExist.stat.exists
      changed_when: "'was installed successfully' in galaxy_result.stdout"

Do not have root access in awx container ...

crap ...

@zx1986 That's what worked for me, at least. Odd that you don't have root access, though. Default build for me puts me into a root shell with 'docker exec -it awx_task bash' no problem.

If you can rebuild the containers (by re-running install.yml playbook after removing old containers), you could modify the project_update.yml file in the cloned repo at: ./awx/playbooks/project_update.yml

I have not done it this way, but I would expect it to work the same.

Though if you rebuild containers and are using the default configuration with postgres running in a container, you will lose your data most likely. I could never get the containerized version of postgres working with persistent data. Maybe you'll have better luck, though?

- I was able to get the roles downloaded with 6.1.0 out of the box, with another issue depicted here.

- since 7.0.0, including 8.0.0, it is not possible anymore.

I confirm that it is necessary to hack /var/lib/awx/venv/awx/lib/python3.6/site-packages/awx/playbooks/project_update.yml as root, by only removing the line when: roles_enabled|bool and adding the |default('.') to command: ansible-galaxy install -r requirements.yml -p {{roles_destination... to bring the roles back to life, although I still get the issue depicted here.

@zx1986: you need to type su when you first land into the awx_task container.

@jean-christophe-manciot thanks, I am running AWX with helm chart in Kubernetes.

what I got was:

❯ kubectl exec --namespace awx -it awx-5d5d4b499-qz92g -c web -- /bin/bash

bash-4.2$ whoami

awx

bash-4.2$ su

su: user root does not exist

@Nascentes thank you so much! I am trying to use ConfigMaps to monkey-patch this into my AWX 8.0.0

I'm currently testing AWX 9.0.1. I first hit the roles_enabled issue. Adding a debug statement shows:

TASK [debug] *********************

task path: /var/lib/awx/venv/awx/lib/python3.6/site-packages/awx/playbooks/project_update.yml:125

ok: [localhost,] => {

"msg": "roles_enabled: False"

}

This is the case even when it is enabled in the configuration, so one needs to remove the always-false conditional.

Next comes this error:

'roles_destination' is undefined. Adding |default('.') corrects it.

Using awx 7.x.x and hitting this issue.

Here below is how I changed the project_update.yml file:

BEFORE:

```
- block:
    - name: detect requirements.yml
      stat: path={{project_path|quote}}/roles/requirements.yml
      register: doesRequirementsExist

    - name: fetch galaxy roles from requirements.yml
      command: ansible-galaxy install -r requirements.yml -p {{roles_destination|quote}}{{ ' -' + 'v' * ansible_verbosity if ansible_verbosity else '' }}
      args:
        chdir: "{{project_path|quote}}/roles"
      register: galaxy_result
      when: doesRequirementsExist.stat.exists
      changed_when: "'was installed successfully' in galaxy_result.stdout"
      environment:
        ANSIBLE_FORCE_COLOR: False

  when: roles_enabled|bool
  delegate_to: localhost
```

AFTER:

```
- block:
    - name: find roles directories
      find:
        path: "{{project_path|quote}}"
        patterns: 'roles'
        file_type: directory
        recurse: yes
      register: rolesDirs

    - name: detect requirements.yml
      stat: path="{{rolesDirs.files[0].path|quote}}/requirements.yml"
      register: doesRequirementsExist

    - name: fetch galaxy roles from requirements.yml
      command: ansible-galaxy install -r requirements.yml -p {{roles_destination|default('.')|quote}}{{ ' -' + 'v' * ansible_verbosity if ansible_verbosity else '' }}
      args:
        chdir: "{{rolesDirs.files[0].path|quote}}"
      register: galaxy_result
      when: doesRequirementsExist.stat.exists
      changed_when: "'was installed successfully' in galaxy_result.stdout"
      environment:
        ANSIBLE_FORCE_COLOR: False

  delegate_to: localhost
```

I use a find to locate the various roles directories, and I use the first occurrence found. I have to do that because we are running projects that have roles directories in several nested sub-directories of their root path.
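A rough Python equivalent of this "find, then take the first hit" logic may help show one of its consequences. This is a sketch under assumptions, not part of AWX: the helper names (find_roles_dirs, first_requirements_file) and the demo directory names are hypothetical, and the results are sorted here only to make the example deterministic; the comment above does not say in which order the find task returns its matches.

```python
import os
import tempfile


def find_roles_dirs(project_path):
    """Return every directory named 'roles' under project_path, sorted by path."""
    hits = []
    for dirpath, dirnames, _filenames in os.walk(project_path):
        for name in dirnames:
            if name == "roles":
                hits.append(os.path.join(dirpath, name))
    return sorted(hits)


def first_requirements_file(project_path):
    """Mimic the modified tasks: only the first roles directory is inspected."""
    roles_dirs = find_roles_dirs(project_path)
    if not roles_dirs:
        return None
    candidate = os.path.join(roles_dirs[0], "requirements.yml")
    return candidate if os.path.isfile(candidate) else None


# Demo on a throwaway tree with two nested roles directories (hypothetical names).
with tempfile.TemporaryDirectory() as project:
    os.makedirs(os.path.join(project, "app_a", "roles"))
    os.makedirs(os.path.join(project, "app_b", "deploy", "roles"))
    req = os.path.join(project, "app_b", "deploy", "roles", "requirements.yml")
    with open(req, "w") as handle:
        handle.write("[]\n")

    found_dirs = [os.path.relpath(p, project) for p in find_roles_dirs(project)]
    picked = first_requirements_file(project)

print(found_dirs)
print(picked)  # None: the first roles directory has no requirements.yml
```

With only the first match inspected, a requirements.yml that lives in a later roles directory is never seen, so this approach fits projects where the first roles directory found is the one that carries the file.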

Please note a few points about this issue:

- When you just go to Projects - [Project] - Get latest SCM revision, it actually skips detection of roles/requirements.yml, and as mentioned by @wenottingham this is expected behavior.

- However, when you have a Template that uses this Project, it detects the requirements and downloads them if you run that job (and when Update revision on launch is ticked, it also downloads the roles prior to starting the job each time).

- The above-mentioned error (even with ansible-galaxy -vvv) does not provide many details:

[WARNING]: -<role_name> was NOT installed successfully: - command /usr/bin/git clone git@<domain>.com:<org>/<project>.git <role_name> failed in directory /home/awx/.ansible/tmp/ansible-local-123456abcde/tmp123abc (rc=128)

But keep in mind that this can also happen simply because one or more of the Git repositories specified in requirements.yml cannot be cloned due to permissions, e.g. the correct SSH key is missing. Imagine this scenario:

# Correct SSH key in Tower
- src: git@<host>:org/role1.git
  version: master
  name: role1
  scm: git

# Correct SSH key in Tower
- src: git@<host>:org/role2.git
  version: master
  name: role2
  scm: git

# Incorrect SSH key in Tower
- src: git@<host>:org/role3.git
  version: master
  name: role3
  scm: git

You will get the exact same error. Confirmed on Tower 3.6.4/Ansible 2.9.1.
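One way to narrow down which entry is failing is to check each repository on its own, since the galaxy error does not say much. The sketch below is an assumption-laden illustration rather than anything AWX does: the helper names are hypothetical, the requirements are passed in as an already-parsed list of dictionaries (reading the YAML file would need a third-party parser), the host example.invalid is a placeholder, and the check is only meaningful when run as the same user and with the same SSH keys that the project update uses. It relies on git ls-remote returning a non-zero exit status when the repository cannot be read.

```python
import subprocess
from types import SimpleNamespace


def ls_remote_command(src):
    """Build a git command that only checks whether the repository is readable."""
    return ["git", "ls-remote", "--exit-code", src, "HEAD"]


def unreachable_sources(requirements, runner=subprocess.run):
    """Return the src of every git entry whose repository cannot be listed."""
    failed = []
    for entry in requirements:
        if entry.get("scm") != "git":
            continue
        result = runner(ls_remote_command(entry["src"]), capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(entry["src"])
    return failed


# Demo with a fake runner so the example runs without network access.
# It pretends that only role3 is rejected, as in the scenario above.
def fake_runner(cmd, **kwargs):
    src = cmd[3]
    return SimpleNamespace(returncode=128 if "role3" in src else 0)


requirements = [
    {"src": "git@example.invalid:org/role1.git", "name": "role1", "scm": "git"},
    {"src": "git@example.invalid:org/role2.git", "name": "role2", "scm": "git"},
    {"src": "git@example.invalid:org/role3.git", "name": "role3", "scm": "git"},
]

failed = unreachable_sources(requirements, runner=fake_runner)
print(failed)
```

Dropping the runner argument makes the function call the real git binary through subprocess.run.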

Running AWX 15.0.0 / Ansible 2.9.14 and having the same issue with both roles & collections. The "detect requirements.yml" task gets skipped both on project update and when a template runs (yes, Update revision on launch is ticked). The previously mentioned fix worked, and I similarly removed "when: collections_enabled|bool" from the collections block to fix collections download.

@chazzly I think what you're experiencing is a change we made to AWX's default behavior in AWX 15.0.0:

https://github.com/ansible/awx/issues/8560#issuecomment-724071498

Honestly, we had so many issues with trying to populate roles via requirements.yml that it just wasn't worth it. The logic for importing/populating/updating roles in AWX is obscenely suboptimal for being the "best practice". We ended up flattening our repos by merging all role repositories into the primary project repo. It's gross and monolithic, but it solved ALL of our issues with AWX pulling roles. Took a basic playbook down from a ~45 second run-time (due to forced project updates and slow requirements.yml pulls, despite having them disabled. Apparently this is intended as a FEATURE!), down to ~7 seconds. Crazy. Not worth it to use requirements.yml in my estimation.
