ISSUE TYPE

- Bug Report

COMPONENT NAME

- UI

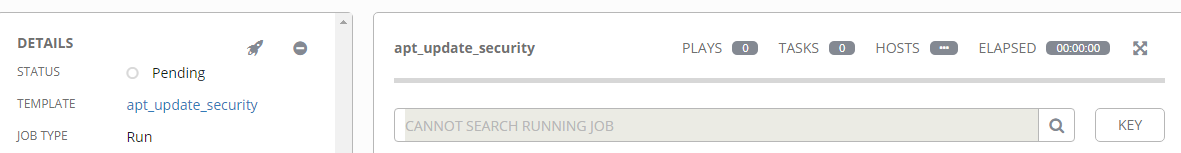

SUMMARY

SCM update keeps pending infinitely

ENVIRONMENT

- AWX version: 1.0.4.64

- AWX install method: docker on linux

- Ansible version: 2.4.3.0

- Operating System: Ubuntu 16.04 Xenial

- Web Browser: Chrome, Firefox, Edge

STEPS TO REPRODUCE

Projects > Get latest SCM version > Stuck in pending mode infinitely

Templates > Job > Launch job > Stuck in pending mode infinitely (probably because the job is triggering the SCM update process)

EXPECTED RESULTS

SCM update succesful

ACTUAL RESULTS

Stays in pending mode, no stdout logs are generated in the UI

ADDITIONAL INFORMATION

All 23 comments

if you refresh the page does it update? are there any logs from the containers specifically the task container?

A refresh doesn't work. Also after recreating the containers the running job comes back in the same pending state. It seems that the pending state of the job is stored in the database.

Could you let me know what specific logs you need?

This is a dump of the awx_task console log, which might show some relevant information. I initated a SCM update and got this back:

64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"

[pid: 28|app: 0|req: 61/318] <client IP> () {48 vars in 2438 bytes} [Fri Mar 9 15:51:24 2018] POST /api/v2/projects/6/update/ => generated 1721 bytes in 177 msecs (HTTP/1.1 202) 9 headers in 280 bytes (1 switches on core 0)

2018/03/09 15:51:25 [error] 26#0: *452 connect() failed (111: Connection refused) while connecting to upstream, client: <client IP>, server: _, request: "GET /api/v2/dashboard/graphs/jobs/?period=month&job_type=all HTTP/1.1", upstream: "uwsgi://[::1]:8050", host: "<,server IP>", referrer: "http://<,server IP>/"

[pid: 30|app: 0|req: 75/319] <client IP> () {42 vars in 2353 bytes} [Fri Mar 9 15:51:25 2018] GET /api/v2/dashboard/graphs/jobs/?period=month&job_type=all => generated 1040 bytes in 42 msecs (HTTP/1.1 200) 8 headers in 227 bytes (1 switches on core 0)

<client IP> - - [09/Mar/2018:15:51:25 +0000] "GET /api/v2/dashboard/graphs/jobs/?period=month&job_type=all HTTP/1.1" 200 1040 "http://<server IP>/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"

postgres log, probably not related to this issue?

LOG: database system was shut down at 2018-03-09 10:16:18 UTC

LOG: MultiXact member wraparound protections are now enabled

LOG: database system is ready to accept connections

LOG: autovacuum launcher started

LOG: incomplete startup packet

ERROR: duplicate key value violates unique constraint "auth_user_username_key"

DETAIL: Key (username)=(<secret>) already exists.

STATEMENT: INSERT INTO "auth_user" ("password", "last_login", "is_superuser", "username", "first_name", "last_name", "email", "is_staff", "is_active", "date_joined") VALUES ('pbkdf2_sha256$<secret>=', NULL, true, 'admin', '', '', '<secret>@<secret>', true, true, '2018-03-09T10:18:28.918537+00:00'::timestamptz) RETURNING "auth_user"."id"

Please take the time to properly format your logs using markdown. Surround them using 3 backticks.

```

Like this

```

fyi, The task container is the one that usually will have details on running jobs. The logs you showed most likely came from the web container logs.

Sorry, my mistake.. I chose the wrong container. Here is the log of the task container:

[2018-03-09 20:46:05,133: INFO/MainProcess] Scaling down 2 processes.

[2018-03-09 20:46:08,423: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:46:08,424: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->10be21fe-2480-4150-a0fd-9cf610080068

[2018-03-09 20:46:08,424: DEBUG/Beat] beat: Waking up in 19.95 seconds.

[2018-03-09 20:46:28,393: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-09 20:46:28,396: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->69f809be-827e-464b-8206-72ac50969fc0

[2018-03-09 20:46:28,398: INFO/Beat] Scheduler: Sending due task cluster_heartbeat (awx.main.tasks.cluster_node_heartbeat)

[2018-03-09 20:46:28,400: DEBUG/Beat] awx.main.tasks.cluster_node_heartbeat sent. id->499b647c-45d0-437d-b348-719c7dfddc3f

[2018-03-09 20:46:28,402: DEBUG/Beat] beat: Waking up now.

[2018-03-09 20:46:28,403: INFO/MainProcess] Received task: awx.main.tasks.cluster_node_heartbeat[499b647c-45d0-437d-b348-719c7dfddc3f] expires:[2018-03-09 20:47:18.399746+00:00]

[2018-03-09 20:46:28,404: INFO/MainProcess] Scaling up 1 processes.

[2018-03-09 20:46:28,423: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:46:28,428: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->c8ba4b03-4b02-4a8f-8cbb-285a04ad2bb2

[2018-03-09 20:46:28,429: DEBUG/Beat] beat: Waking up in 19.99 seconds.

[2018-03-09 20:46:28,548: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x2f35488> (args:(u'awx.main.tasks.cluster_node_heartbeat', u'499b647c-45d0-437d-b348-719c7dfddc3f', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': 0, u'redelivered': False, u'routing_key': u'awx', u'exchange': u'awx'}, u'expires': u'2018-03-09T20:47:18.399746+00:00', u'hostname': 'celery@awx', u'task': u'awx.main.tasks.cluster_node_heartbeat', u'callbacks': None, u'correlation_id': u'499b647c-45d0-437d-b348-719c7dfddc3f', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'919b39da-9079-3162-819a-1ad12ae9720f', u'id': u'499b647c-45d0-437d-b348-719c7dfddc3f', u'headers': {}}) kwargs:{})

[2018-03-09 20:46:28,549: DEBUG/MainProcess] basic.qos: prefetch_count->48

[2018-03-09 20:46:28,740: DEBUG/MainProcess] Task accepted: awx.main.tasks.cluster_node_heartbeat[499b647c-45d0-437d-b348-719c7dfddc3f] pid:226

2018-03-09 20:46:28,756 DEBUG awx.main.tasks Cluster node heartbeat task.

[2018-03-09 20:46:28,789: DEBUG/Worker-12] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@bb4036770c43', u'platform': u'Erlang/OTP 19.2.1', u'version': u'3.6.12'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-09 20:46:28,792: DEBUG/Worker-12] Open OK!

[2018-03-09 20:46:28,793: DEBUG/Worker-12] using channel_id: 1

[2018-03-09 20:46:28,795: DEBUG/Worker-12] Channel open

[2018-03-09 20:46:28,798: INFO/MainProcess] Received task: awx.main.tasks.handle_ha_toplogy_changes[5847563e-2fa9-442d-a1dd-f8767d9b3378]

[2018-03-09 20:46:28,799: INFO/MainProcess] Scaling up 1 processes.

[2018-03-09 20:46:28,802: DEBUG/Worker-12] Closed channel #1

[2018-03-09 20:46:28,978: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x2f35488> (args:(u'awx.main.tasks.handle_ha_toplogy_changes', u'5847563e-2fa9-442d-a1dd-f8767d9b3378', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': None, u'redelivered': False, u'routing_key': u'', u'exchange': u'tower_broadcast_all'}, u'expires': None, u'hostname': 'celery@awx', u'task': u'awx.main.tasks.handle_ha_toplogy_changes', u'callbacks': None, u'correlation_id': u'5847563e-2fa9-442d-a1dd-f8767d9b3378', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'41a51de0-f712-35f2-bfa8-8489298559a4', u'id': u'5847563e-2fa9-442d-a1dd-f8767d9b3378', u'headers': {}}) kwargs:{})

[2018-03-09 20:46:28,980: DEBUG/MainProcess] basic.qos: prefetch_count->52

[2018-03-09 20:46:28,982: INFO/MainProcess] Task awx.main.tasks.cluster_node_heartbeat[499b647c-45d0-437d-b348-719c7dfddc3f] succeeded in 0.242809028998s: None

[2018-03-09 20:46:28,984: DEBUG/MainProcess] Task accepted: awx.main.tasks.handle_ha_toplogy_changes[5847563e-2fa9-442d-a1dd-f8767d9b3378] pid:226

2018-03-09 20:46:28,997 DEBUG awx.main.tasks Reconfigure celeryd queues task on host celery@awx

[2018-03-09 20:46:29,023: DEBUG/Worker-12] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@bb4036770c43', u'platform': u'Erlang/OTP 19.2.1', u'version': u'3.6.12'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-09 20:46:29,024: DEBUG/Worker-12] Open OK!

[2018-03-09 20:46:29,025: DEBUG/Worker-12] using channel_id: 1

[2018-03-09 20:46:29,026: DEBUG/Worker-12] Channel open

[2018-03-09 20:46:29,032: DEBUG/MainProcess] pidbox received method active_queues() [reply_to:{u'routing_key': u'd1016149-d90e-3cf1-a60b-e030d60cffd9', u'exchange': u'reply.celery.pidbox'} ticket:502a1b38-a7a5-492b-a748-4330d0b5d7a2]

[2018-03-09 20:46:29,037: DEBUG/Worker-12] Closed channel #1

2018-03-09 20:46:29,040 INFO awx.main.tasks Workers on tower node 'awx' removed from queues [] and added to queues []

2018-03-09 20:46:29,043 INFO awx.main.tasks Worker on tower node 'awx' updated celery routes {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}} all routes are now {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}}

[2018-03-09 20:46:29,049: INFO/MainProcess] Task awx.main.tasks.handle_ha_toplogy_changes[5847563e-2fa9-442d-a1dd-f8767d9b3378] succeeded in 0.0664528639973s: None

[2018-03-09 20:46:48,440: DEBUG/Beat] beat: Synchronizing schedule...

[2018-03-09 20:46:48,448: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:46:48,450: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->6af500cf-39ed-4394-8d3e-36a4a1143640

[2018-03-09 20:46:48,453: DEBUG/Beat] beat: Waking up in 9.93 seconds.

[2018-03-09 20:46:58,395: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-09 20:46:58,397: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->5aab5ead-5516-405a-9c75-614008bfbfc4

[2018-03-09 20:46:58,398: DEBUG/Beat] beat: Waking up in 10.04 seconds.

RESULT 2

OKREADY

[2018-03-09 20:47:05,137: INFO/MainProcess] Scaling down 2 processes.

[2018-03-09 20:47:08,448: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:47:08,454: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->7d820a6c-f114-4bff-ae25-23ef070ba59d

[2018-03-09 20:47:08,456: DEBUG/Beat] beat: Waking up in 19.93 seconds.

[2018-03-09 20:47:28,401: INFO/Beat] Scheduler: Sending due task cluster_heartbeat (awx.main.tasks.cluster_node_heartbeat)

[2018-03-09 20:47:28,404: DEBUG/Beat] awx.main.tasks.cluster_node_heartbeat sent. id->209629b0-1a51-4f01-b04f-a45c6ddad13e

[2018-03-09 20:47:28,405: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-09 20:47:28,407: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->53bfbc22-2c46-45d0-a14a-bdd3adf3d835

[2018-03-09 20:47:28,408: DEBUG/Beat] beat: Waking up now.

[2018-03-09 20:47:28,411: INFO/MainProcess] Received task: awx.main.tasks.cluster_node_heartbeat[209629b0-1a51-4f01-b04f-a45c6ddad13e] expires:[2018-03-09 20:48:18.402983+00:00]

[2018-03-09 20:47:28,411: INFO/MainProcess] Scaling up 1 processes.

[2018-03-09 20:47:28,449: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:47:28,456: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->19dc8f19-5679-4d43-8c6c-ca80dcb55b8b

[2018-03-09 20:47:28,457: DEBUG/Beat] beat: Waking up in 19.99 seconds.

[2018-03-09 20:47:28,570: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x2f35488> (args:(u'awx.main.tasks.cluster_node_heartbeat', u'209629b0-1a51-4f01-b04f-a45c6ddad13e', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': 0, u'redelivered': False, u'routing_key': u'awx', u'exchange': u'awx'}, u'expires': u'2018-03-09T20:48:18.402983+00:00', u'hostname': 'celery@awx', u'task': u'awx.main.tasks.cluster_node_heartbeat', u'callbacks': None, u'correlation_id': u'209629b0-1a51-4f01-b04f-a45c6ddad13e', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'919b39da-9079-3162-819a-1ad12ae9720f', u'id': u'209629b0-1a51-4f01-b04f-a45c6ddad13e', u'headers': {}}) kwargs:{})

[2018-03-09 20:47:28,571: DEBUG/MainProcess] basic.qos: prefetch_count->56

[2018-03-09 20:47:28,780: DEBUG/MainProcess] Task accepted: awx.main.tasks.cluster_node_heartbeat[209629b0-1a51-4f01-b04f-a45c6ddad13e] pid:228

2018-03-09 20:47:28,794 DEBUG awx.main.tasks Cluster node heartbeat task.

[2018-03-09 20:47:28,828: DEBUG/Worker-14] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@bb4036770c43', u'platform': u'Erlang/OTP 19.2.1', u'version': u'3.6.12'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-09 20:47:28,831: DEBUG/Worker-14] Open OK!

[2018-03-09 20:47:28,833: DEBUG/Worker-14] using channel_id: 1

[2018-03-09 20:47:28,834: DEBUG/Worker-14] Channel open

[2018-03-09 20:47:28,837: INFO/MainProcess] Received task: awx.main.tasks.handle_ha_toplogy_changes[9f6d46de-0555-4c08-9213-26f2cdb243ac]

[2018-03-09 20:47:28,837: INFO/MainProcess] Scaling up 1 processes.

[2018-03-09 20:47:28,839: DEBUG/Worker-14] Closed channel #1

[2018-03-09 20:47:29,015: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x2f35488> (args:(u'awx.main.tasks.handle_ha_toplogy_changes', u'9f6d46de-0555-4c08-9213-26f2cdb243ac', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': None, u'redelivered': False, u'routing_key': u'', u'exchange': u'tower_broadcast_all'}, u'expires': None, u'hostname': 'celery@awx', u'task': u'awx.main.tasks.handle_ha_toplogy_changes', u'callbacks': None, u'correlation_id': u'9f6d46de-0555-4c08-9213-26f2cdb243ac', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'41a51de0-f712-35f2-bfa8-8489298559a4', u'id': u'9f6d46de-0555-4c08-9213-26f2cdb243ac', u'headers': {}}) kwargs:{})

[2018-03-09 20:47:29,017: DEBUG/MainProcess] basic.qos: prefetch_count->60

[2018-03-09 20:47:29,020: INFO/MainProcess] Task awx.main.tasks.cluster_node_heartbeat[209629b0-1a51-4f01-b04f-a45c6ddad13e] succeeded in 0.241227557002s: None

[2018-03-09 20:47:29,022: DEBUG/MainProcess] Task accepted: awx.main.tasks.handle_ha_toplogy_changes[9f6d46de-0555-4c08-9213-26f2cdb243ac] pid:228

2018-03-09 20:47:29,034 DEBUG awx.main.tasks Reconfigure celeryd queues task on host celery@awx

[2018-03-09 20:47:29,054: DEBUG/Worker-14] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@bb4036770c43', u'platform': u'Erlang/OTP 19.2.1', u'version': u'3.6.12'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-09 20:47:29,056: DEBUG/Worker-14] Open OK!

[2018-03-09 20:47:29,057: DEBUG/Worker-14] using channel_id: 1

[2018-03-09 20:47:29,058: DEBUG/Worker-14] Channel open

[2018-03-09 20:47:29,064: DEBUG/MainProcess] pidbox received method active_queues() [reply_to:{u'routing_key': u'234a19e3-ac2b-3ad7-88e6-65f19e7e1dd5', u'exchange': u'reply.celery.pidbox'} ticket:7139c603-578a-4a07-bfb0-a060bd3501e5]

[2018-03-09 20:47:29,069: DEBUG/Worker-14] Closed channel #1

2018-03-09 20:47:29,074 INFO awx.main.tasks Workers on tower node 'awx' removed from queues [] and added to queues []

2018-03-09 20:47:29,078 INFO awx.main.tasks Worker on tower node 'awx' updated celery routes {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}} all routes are now {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}}

[2018-03-09 20:47:29,085: INFO/MainProcess] Task awx.main.tasks.handle_ha_toplogy_changes[9f6d46de-0555-4c08-9213-26f2cdb243ac] succeeded in 0.0638312849987s: None

[2018-03-09 20:47:48,468: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:47:48,472: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->df1e1d01-c377-422f-9b88-a49d327e730d

[2018-03-09 20:47:48,474: DEBUG/Beat] beat: Waking up in 9.92 seconds.

[2018-03-09 20:47:58,407: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-09 20:47:58,409: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->2b718a7e-8f28-449b-96bf-e1f689819e04

[2018-03-09 20:47:58,410: DEBUG/Beat] beat: Waking up in 10.05 seconds.

RESULT 2

OKREADY

[2018-03-09 20:48:05,141: INFO/MainProcess] Scaling down 2 processes.

[2018-03-09 20:48:08,473: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-09 20:48:08,478: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->597ada1d-b44d-42e8-a712-52d6b1d8a67f

[2018-03-09 20:48:08,480: DEBUG/Beat] beat: Waking up in 19.92 seconds.

I think I do have the same error, and can reproduce it. I wasn't abled to get this going again, but I've got a backup of this instance, and so went back to my database backup from 2018-02-24 and tried restoring it onto awx 1.0.2. There, I was abled to run maintenance tasks and project updates, which did not succeed with today's database and latest awx. After I verified this database snapshot to be working with awx 1.0.2, I tried upgrading to latest. This migration did not finish, webinterface kept the "upgrade in progress" screen, awk_task logs continued showing background activity, but the system was mostly idle:

2018-03-12 11:45:46,211 ERROR awx.main.consumers Request user is not authenticated to use websocket.

2018-03-12 11:45:48,320 ERROR awx.main.consumers Request user is not authenticated to use websocket.

2018-03-12 11:45:50,474 ERROR awx.main.consumers Request user is not authenticated to use websocket.

2018-03-12 11:45:52,603 ERROR awx.main.consumers Request user is not authenticated to use websocket.

[2018-03-12 11:45:53,904: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-12 11:45:53,917: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->2de9620f-d8b6-4a32-92fa-4bc0a2ffa458

[2018-03-12 11:45:53,919: DEBUG/Beat] beat: Waking up in 10.43 seconds.

2018-03-12 11:45:54,745 ERROR awx.main.consumers Request user is not authenticated to use websocket.

2018-03-12 11:45:56,878 ERROR awx.main.consumers Request user is not authenticated to use websocket.

While looking at this, I noticed my docker system to only have 4gb ram. After reboot with 8gb, awx let me log in again, but now all jobs are stuck pending again...

@youam Not sure if that is the same problem. Your problem seems to be related to an upgrade that fails.

I have a similar issue, but, all my jobs are stuck in a pending state:

Running in docker, the latest images as todays date.

OKREADY

[2018-03-16 08:04:15,715: INFO/Beat] Scheduler: Sending due task cluster_heartbeat (awx.main.tasks.cluster_node_heartbeat)

[2018-03-16 08:04:15,717: DEBUG/Beat] awx.main.tasks.cluster_node_heartbeat sent. id->017495a3-7c8b-4c40-9c81-42127237652c

[2018-03-16 08:04:15,717: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-16 08:04:15,718: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->7de7abc8-fb2a-487f-8f77-4cbb6f204749

[2018-03-16 08:04:15,718: INFO/MainProcess] Received task: awx.main.tasks.cluster_node_heartbeat[017495a3-7c8b-4c40-9c81-42127237652c] expires:[2018-03-16 08:05:05.716431+00:00]

[2018-03-16 08:04:15,718: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x36af488> (args:(u'awx.main.tasks.cluster_node_heartbeat', u'017495a3-7c8b-4c40-9c81-42127237652c', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': 0, u'redelivered': False, u'routing_key': u'awx', u'exchange': u'awx'}, u'expires': u'2018-03-16T08:05:05.716431+00:00', u'hostname': 'celery@awx', u'task': u'awx.main.tasks.cluster_node_heartbeat', u'callbacks': None, u'correlation_id': u'017495a3-7c8b-4c40-9c81-42127237652c', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'64f295e5-849c-3796-a3bb-350da445c5ce', u'id': u'017495a3-7c8b-4c40-9c81-42127237652c', u'headers': {}}) kwargs:{})

[2018-03-16 08:04:15,718: DEBUG/Beat] beat: Waking up now.

[2018-03-16 08:04:15,719: DEBUG/MainProcess] Task accepted: awx.main.tasks.cluster_node_heartbeat[017495a3-7c8b-4c40-9c81-42127237652c] pid:226

2018-03-16 08:04:15,734 DEBUG awx.main.tasks Cluster node heartbeat task.

[2018-03-16 08:04:15,761: DEBUG/Worker-7] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@74b91b967966', u'platform': u'Erlang/OTP 20.1.7', u'version': u'3.7.2'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-16 08:04:15,762: DEBUG/Worker-7] Open OK!

[2018-03-16 08:04:15,763: DEBUG/Worker-7] using channel_id: 1

[2018-03-16 08:04:15,763: DEBUG/Worker-7] Channel open

[2018-03-16 08:04:15,764: DEBUG/Worker-7] Closed channel #1

[2018-03-16 08:04:15,765: INFO/MainProcess] Received task: awx.main.tasks.handle_ha_toplogy_changes[c1b5b7c9-f56e-409c-a16d-765c15824fc8]

[2018-03-16 08:04:15,765: DEBUG/MainProcess] TaskPool: Apply <function _fast_trace_task at 0x36af488> (args:(u'awx.main.tasks.handle_ha_toplogy_changes', u'c1b5b7c9-f56e-409c-a16d-765c15824fc8', [], {}, {u'utc': True, u'is_eager': False, u'chord': None, u'group': None, u'args': [], u'retries': 0, u'delivery_info': {u'priority': None, u'redelivered': False, u'routing_key': u'', u'exchange': u'tower_broadcast_all'}, u'expires': None, u'hostname': 'celery@awx', u'task': u'awx.main.tasks.handle_ha_toplogy_changes', u'callbacks': None, u'correlation_id': u'c1b5b7c9-f56e-409c-a16d-765c15824fc8', u'errbacks': None, u'timelimit': [None, None], u'taskset': None, u'kwargs': {}, u'eta': None, u'reply_to': u'90ca1c20-9b3a-369b-b202-e1bda9fc027f', u'id': u'c1b5b7c9-f56e-409c-a16d-765c15824fc8', u'headers': {}}) kwargs:{})

[2018-03-16 08:04:15,766: DEBUG/MainProcess] Task accepted: awx.main.tasks.handle_ha_toplogy_changes[c1b5b7c9-f56e-409c-a16d-765c15824fc8] pid:227

[2018-03-16 08:04:15,769: INFO/MainProcess] Task awx.main.tasks.cluster_node_heartbeat[017495a3-7c8b-4c40-9c81-42127237652c] succeeded in 0.0509614500002s: None

2018-03-16 08:04:15,781 DEBUG awx.main.tasks Reconfigure celeryd queues task on host celery@awx

[2018-03-16 08:04:15,784: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-16 08:04:15,785: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->276b404b-e676-4c3f-bee5-3232e9595751

[2018-03-16 08:04:15,785: DEBUG/Beat] beat: Waking up in 19.99 seconds.

[2018-03-16 08:04:15,802: DEBUG/Worker-8] Start from server, version: 0.9, properties: {u'information': u'Licensed under the MPL. See http://www.rabbitmq.com/', u'product': u'RabbitMQ', u'copyright': u'Copyright (C) 2007-2017 Pivotal Software, Inc.', u'capabilities': {u'exchange_exchange_bindings': True, u'connection.blocked': True, u'authentication_failure_close': True, u'direct_reply_to': True, u'basic.nack': True, u'per_consumer_qos': True, u'consumer_priorities': True, u'consumer_cancel_notify': True, u'publisher_confirms': True}, u'cluster_name': u'rabbit@74b91b967966', u'platform': u'Erlang/OTP 20.1.7', u'version': u'3.7.2'}, mechanisms: [u'AMQPLAIN', u'PLAIN'], locales: [u'en_US']

[2018-03-16 08:04:15,802: DEBUG/Worker-8] Open OK!

[2018-03-16 08:04:15,803: DEBUG/Worker-8] using channel_id: 1

[2018-03-16 08:04:15,803: DEBUG/Worker-8] Channel open

[2018-03-16 08:04:15,807: DEBUG/MainProcess] pidbox received method active_queues() [reply_to:{u'routing_key': u'e2178122-93d6-35f2-b460-527d5e1fddf8', u'exchange': u'reply.celery.pidbox'} ticket:4fd1700f-1782-4f88-aa36-bf2df1f34020]

[2018-03-16 08:04:15,810: DEBUG/Worker-8] Closed channel #1

2018-03-16 08:04:15,812 INFO awx.main.tasks Workers on tower node 'awx' removed from queues [] and added to queues []

2018-03-16 08:04:15,820 INFO awx.main.tasks Worker on tower node 'awx' updated celery routes {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}} all routes are now {'awx.main.tasks.purge_old_stdout_files': {'queue': u'awx', 'routing_key': u'awx'}, 'awx.main.tasks.cluster_node_heartbeat': {'queue': u'awx', 'routing_key': u'awx'}}

[2018-03-16 08:04:15,823: INFO/MainProcess] Task awx.main.tasks.handle_ha_toplogy_changes[c1b5b7c9-f56e-409c-a16d-765c15824fc8] succeeded in 0.0574832910002s: None

[2018-03-16 08:04:35,784: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-16 08:04:35,786: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->e8d0a60c-cb89-4579-b68e-ac8acd557028

[2018-03-16 08:04:35,786: DEBUG/Beat] beat: Waking up in 9.92 seconds.

[2018-03-16 08:04:45,717: INFO/Beat] Scheduler: Sending due task tower_scheduler (awx.main.tasks.awx_periodic_scheduler)

[2018-03-16 08:04:45,719: DEBUG/Beat] awx.main.tasks.awx_periodic_scheduler sent. id->3e8b94a9-4210-43b6-a927-b51f12856ea7

[2018-03-16 08:04:45,720: DEBUG/Beat] beat: Waking up in 10.06 seconds.

[2018-03-16 08:04:55,787: INFO/Beat] Scheduler: Sending due task task_manager (awx.main.scheduler.tasks.run_task_manager)

[2018-03-16 08:04:55,788: DEBUG/Beat] awx.main.scheduler.tasks.run_task_manager sent. id->3bd85555-b73b-42dd-9327-7513b1c86295

[2018-03-16 08:04:55,789: DEBUG/Beat] beat: Waking up in 19.92 seconds.

Any news on this ? I am stack on the same problem. SCM Updates are pending for ever. I use docker on mac and i upgrade awx regularly using docker-compose pull && docker-compose up -d --force-recreate.

Please is there any solution for this ? If this is due to a failed migrations process can we force downgrade migrations and re-execute them ? is there any other cli tool to investigate the problem from the container itself ?

Having the same issues running AWX 1.0.5 and all jobs are pending.

Just out of curiosity, do you see this only after an upgrade? During an upgrade of the awx_task container (possibly the web container too, but I'm not sure), I find that the the task container removes itself from the celery queue. I'm guessing this is by design for clustered environments, but it is a bit annoying for simple environments. I get around this with the following:

/bin/cd /var/lib/awx

/bin/docker-compose pull

/bin/docker-compose up -d --force-recreate

/bin/docker exec -it awx_web_1 awx-manage register_queue --queuename tower --hostnames awx

Alternatively, in the GUI try the following:

- Instance Groups >>

tower>> Instances +- Click the checkbox by

awxclick save

This effectively does the same as the last line in the script above by telling the task container to listen to the message queue and pick up pending jobs.

@matburt I think @ericrochow is right. After recreating the tower instance the queue starts working again. This seems to be a bug.

I'm not sure it's a big so much as an undocumented procedure. My guess is the manual step is a design decision to avoid unpredictable behavior in multi-node setups due to differing versions of Ansible, AWX, etc. Since I run a single now setup for now, that doesn't apply to me so I just use the above script and live with it. A documented upgrade procedure and/or a Boolean in the install playbook on whether to rejoin the instance group automatically might be a worthwhile consideration.

Fresh install. 1.0.5

My user is an administrator to everything I can find.

I tried @ericrochow 's recommendation

My task log is full of

task_1 | 2018-05-15 16:57:23,640 ERROR awx.main.consumers Request user is not authenticated to use websocket.

task_1 | 2018-05-15 16:57:24,695 ERROR awx.main.consumers Request user is not authenticated to use websocket.

task_1 | 2018-05-15 16:57:25,747 ERROR awx.main.consumers Request user is not authenticated to use websocket.

task_1 | 2018-05-15 16:57:26,799 ERROR awx.main.consumers Request user is not authenticated to use websocket.

task_1 | 2018-05-15 16:57:27,851 ERROR awx.main.consumers Request user is not authenticated to use websocket.

as soon as I attempt to update my dynamic inventory, I see

task_1 | 2018-05-15 14:48:40,145 WARNING awx.main.tasks inventory_update 7 (error) encountered an error (rc=None), please see task stdout for details.

task_1 | [2018-05-15 14:48:40,147: CRITICAL/MainProcess] Task awx.main.tasks.run_inventory_update[e2a972e0-a717-4baa-abcd-42d6a486b4d2]

INTERNAL ERROR: TypeError('super(type, obj): obj must be an instance or subtype of type',)

task_1 | Traceback (most recent call last):

task_1 | File "/var/lib/awx/venv/awx/lib/python2.7/site-packages/celery/app/trace.py", line 253, in trace_task

task_1 | I, R, state, retval = on_error(task_request, exc, uuid)

task_1 | File "/var/lib/awx/venv/awx/lib/python2.7/site-packages/celery/app/trace.py", line 201, in on_error

task_1 | R = I.handle_error_state(task, eager=eager)

task_1 | File "/var/lib/awx/venv/awx/lib/python2.7/site-packages/celery/app/trace.py", line 85, in handle_error_state

task_1 | }[self.state](task, store_errors=store_errors)

task_1 | File "/var/lib/awx/venv/awx/lib/python2.7/site-packages/celery/app/trace.py", line 120, in handle_failure

task_1 | task.on_failure(exc, req.id, req.args, req.kwargs, einfo)

task_1 | File "/usr/lib/python2.7/site-packages/awx/main/tasks.py", line 94, in on_failure

task_1 | super(LogErrorsTask, self).on_failure(exc, task_id, args, kwargs, einfo)

task_1 | TypeError: super(type, obj): obj must be an instance or subtype of type

in logs

Meanwhile, dynamic inventory update hangs. I see no logs

I setup a project doing an actual checkout from a git repo & it works fine.

If I log out & login as admin user, it works fine. So something is up with permissions. Where I must ask.

@greenscar that error would be https://github.com/ansible/awx/issues/1720

@wenottingham I thought so too so deleted / did a fresh install & had same problem.

Turns out, this is a permission error of some sort.

If I create a new dynamic inventory job as a user with admin privs but not the admin user itself, I cannot execute this dynamic inventory script to populate. I get the above errors. However, if I login as admin then execute the update then logout and log in as the user with admin privs I can then execute.

IOW: Only admin can populate the dynamic inventory the first time.

Hey,

I had a similar issue some month ago. Turns out to be my /var/lib/docker out of space.

Don’t forget this quick check.

Apologies for the delay - is anyone still seeing this with 1.0.6?

Yes I am still seeing the same issue, SCM jobs seems to be hanging forever, even management jobs like cleanup activity details are also hanging in pending state forever.

Started working fine for me after I killed and respawn the docker instances.

No further negative comments, closing.

Most helpful comment

Just out of curiosity, do you see this only after an upgrade? During an upgrade of the awx_task container (possibly the web container too, but I'm not sure), I find that the the task container removes itself from the celery queue. I'm guessing this is by design for clustered environments, but it is a bit annoying for simple environments. I get around this with the following:

Alternatively, in the GUI try the following:

tower>> Instances+awxclick saveThis effectively does the same as the last line in the script above by telling the task container to listen to the message queue and pick up pending jobs.