Aws-cli: Add ability to limit bandwidth for S3 uploads/downloads

Originally from #1078, this is a feature request to add the ability for the aws s3 commands to limit the amount of bandwidth used for uploads and downloads.

In the referenced issue, it was specifically mentioned that some ISPs charge fees if you exceed a specific bandwidth (in Mbps), so users need the ability to limit bandwidth.

I imagine this is something we'd only need to add to the aws s3 commands.

All 67 comments

Hello jamesis,

Could you provide a timeframe for when the bandwidth limit could become available?

Thanks

austinsnow

:+1:

:+1:

:+1:

:+1:


:+1:

:+1:

:+1:

:+1:

On Unix-like systems, trickle comes in handy for ad hoc throttling. trickle hooks the socket APIs using LD_PRELOAD and throttles bandwidth.

You can run commands like these:

$ trickle -s -u {UPLOAD_LIMIT(KB/s)} command

$ trickle -s -u {UPLOAD_LIMIT(KB/s)} -d {DOWNLOAD_LIMIT(KB/s)} command

A built-in feature would be really useful, but given the cross-platform nature of AWS-CLI, it could cost a lot to implement and maintain.

Trickle is specifically mentioned in issue #1078 which is linked to in the first comment here. The two (trickle and AWS-CLI) just don't play nice together in my experience.

:+1:

:+1:

+1

+1

:+1:

+1

+1

:+1:

(Y)

:+1:

:+1: this is much needed!

:+1:

:+1:

+1

+1

:+1:

👍

Any update on this feature?

:+1:

:+1:

👍

👍


Any updates on this?

👍

👍

👍

👍

👍

:+1:

On the one hand: much faster than s3cmd.

On the other hand: my hosting company automatically halted a server for using "suspiciously high amounts of bandwidth".

Someone suggested aws configure set default.s3.max_concurrent_requests $n where $n is less than 10. Not sure if that is enough; will investigate the trickle tool mentioned above.
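The suggestion above can be sketched as a small shell helper. The function name `limit_s3_concurrency` and the value 2 are hypothetical; the thread only says the value should be below 10, and lowering concurrency reduces parallelism rather than enforcing a true bandwidth cap.

```shell
# Hypothetical helper: lower the number of concurrent S3 requests
# for the default profile, as suggested in the comment above.
# Usage: limit_s3_concurrency N   (N must consist of digits only)
limit_s3_concurrency() {
    case "$1" in
        ''|*[!0-9]*)
            echo "usage: limit_s3_concurrency N" >&2
            return 2
            ;;
    esac
    # Same command as quoted in the thread, with $n replaced by the argument.
    aws configure set default.s3.max_concurrent_requests "$1"
}
```

For example, `limit_s3_concurrency 2` would write the setting for the default profile; how much this actually reduces throughput depends on the link and on object sizes.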

👍

Over two years in, and this request is still outstanding. Is there a timeframe by which this could be implemented?

👍

👍

👍

👍🏿

:+1:

Just nuked the internet in a shared office.

This would be a nice feature when you want to be kind to other people

👍

👍

You can use trickle -s -u 100 aws s3 sync . s3://examplebucket

@sofuca does this work correctly though? There are many people that have tried trickle for this but the results were questionable. See #1078.

@ikoniaris

Works perfectly for me.

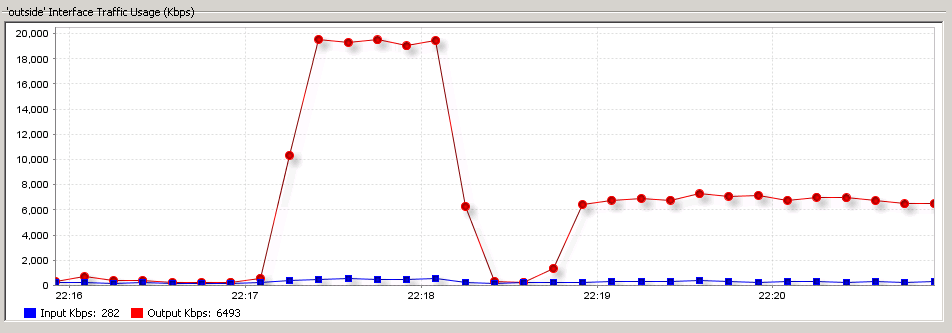

The following command nukes the internet in the office (it's a 20Mb/s connection):

aws s3 cp /foo s3://bar

And the following command uploads at a nice 8Mb/s:

trickle -s -u 1000 aws s3 sync /foo s3://bar

The screenshot shows the outside interface of the firewall I'm using.
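The relationship between the trickle limit and the observed rate is simple unit arithmetic: a limit of 1000 KB/s is about 8 Mbit/s. A minimal sketch of that conversion follows; `to_mbit` is a hypothetical helper, and it assumes decimal units (1 KB = 1000 bytes), so treat the results as approximations.

```shell
# Hypothetical helper: convert a byte-based rate to Mbit/s.
# Usage: to_mbit VALUE UNIT   where UNIT is KB (kilobytes/s) or MB (megabytes/s)
# Assumes decimal units: 1 KB = 1000 bytes, 1 Mbit = 1,000,000 bits.
to_mbit() {
    case "$2" in
        KB) factor=0.008 ;;   # 8 bits per byte / 1000 KB per MB
        MB) factor=8 ;;       # 8 bits per byte
        *)  echo "unit must be KB or MB" >&2; return 2 ;;
    esac
    awk -v v="$1" -v f="$factor" 'BEGIN { printf "%.1f\n", v * f }'
}

to_mbit 1000 KB   # the trickle -u 1000 example above
to_mbit 20 MB     # a 20 MB/s limit
```

Under those assumptions, the two calls print 8.0 and 160.0 respectively.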

👍

Using trickle with large S3 files will cause trickle to crash

(y)

Sorry, to be precise: with large S3 files, trickle crashes when boto3 is using 10 concurrent uploads (the default setting); changing the number of concurrent uploads resolves the issue. I need to raise this on boto3's GitHub, thanks!

👍

So it's been over 2.5 years since this was opened. Is this request just being ignored?

We use pv (https://linux.die.net/man/1/pv) in this manner:

/usr/bin/pv -q -L 20M "$l_filepath" | /usr/local/bin/aws s3 cp --region "us-east-1" - "s3://<s3-bucket>/<path in s3 bucket>"

This solution is not ideal (it requires additional support for filtering and recursion, which we handle inside a bash loop), but it is much better than trickle, which in our case uses 100% of CPU and behaves very unstably.

Here is our full use case for pv (we limit upload speed to 20MB/s == 160Mbit/s):

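for l_filepath in /logs/*.log-*; do
    l_filename=$(basename "$l_filepath")
    # Throttle the read with pv, stream to S3 via stdin ("-"), and delete the local file only if the upload succeeded
    /usr/bin/pv -q -L 20M "$l_filepath" | /usr/local/bin/aws s3 cp --region "us-east-1" - "s3://$S3BUCKET/${HOSTNAME}/$l_filename" && /bin/rm "$l_filepath"
done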

+1

Real-life use case: a very large upload to S3 over DX (AWS Direct Connect), where we do not want to saturate the link and potentially impact production applications using the DX link.

None of throttle, trickle, or pv works for me on Arch Linux with the latest awscli from pip when uploading to a bucket. I have additionally set max_concurrent_connections for s3 in the awscli configuration to 1, which made no difference. This would be a much appreciated addition!

@ischoonover it seems that you don't pass --expected-size to the AWS CLI when using it with pv; it is very useful when you try to upload very big files:

--expected-size (string) This argument specifies the expected size of a stream in terms of bytes. Note that this argument is needed only when a stream is being uploaded to s3 and the size is larger than 5GB. Failure to include this argument under these conditions may result in a failed upload due to too many parts in upload.
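Combining the pv pipeline with --expected-size might look like the sketch below. The helper names `file_size_bytes` and `upload_throttled`, the argument order, and the 20M default rate are assumptions for illustration; only the pv options and the `aws s3 cp - ... --expected-size` form come from the comments above.

```shell
# Print a file's size in bytes (some wc implementations pad the
# output with spaces, so strip whitespace).
file_size_bytes() {
    wc -c < "$1" | tr -d '[:space:]'
}

# Hypothetical helper: upload_throttled SRC DEST [RATE]
# Streams SRC through pv at RATE (default 20M) and tells the AWS CLI
# how many bytes to expect on stdin.
upload_throttled() {
    [ -f "$1" ] || { echo "no such file: $1" >&2; return 1; }
    ut_size=$(file_size_bytes "$1")
    pv -q -L "${3:-20M}" "$1" | aws s3 cp - "$2" --expected-size "$ut_size"
}
```

A call such as `upload_throttled /logs/app.log-1 s3://example-bucket/app.log-1 5M` (path and bucket are placeholders) would then stream the file at the given pv rate.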

@tantra35 Size was 1GB. I ended up using s3cmd, which has rate limiting built in with --limit-rate

Implemented in #2997.
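For readers landing here later: the AWS CLI S3 configuration documents a `max_bandwidth` setting, and the sketch below assumes that is the option this change refers to (the thread itself does not name it). The wrapper `set_s3_max_bandwidth` is a hypothetical convenience; the rate is given as a value such as 1MB/s.

```shell
# Hypothetical wrapper around the documented s3 max_bandwidth setting.
# Assumption: this is the built-in limit referenced above.
# Usage: set_s3_max_bandwidth RATE   (for example 1MB/s or 500KB/s)
set_s3_max_bandwidth() {
    [ -n "$1" ] || { echo "usage: set_s3_max_bandwidth RATE" >&2; return 2; }
    aws configure set default.s3.max_bandwidth "$1"
}
```

With the setting in place, plain `aws s3 cp` and `aws s3 sync` invocations should respect the cap without external tools such as trickle or pv.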
