Aws-cdk: [aws-codepipeline-actions] EcsDeployAction: Can't reuse imported FargateService as service argument

Context: CDK-Pipeline in a shared account, which has a separate stage for ECS Deployments to target account(s).

Minimal code example (added 2020-09-22):

https://github.com/michaelfecher/aws-cdk-pipelines-cross-account-ecs-deployment-issue/tree/cross-account-resources-import-fails

I can't import an existing FargateService and reuse it in the EcsDeploy Action.

I also tried to use the BaseService to match the signature.

However, it doesn't provide an import method.

Alternatively, I tried to use a Stage variable to be able to pass the service to the EcsDeploy, but that failed with:

dependency cannot cross stage boundaries

Additionally, I tried the other method fromFargateServiceAttributes to retrieve the full context for the existing Fargate service.

This approach fails, when calling the VPC fromLookup with

All arguments to Vpc.fromLookup() must be concrete (no Tokens)

Guess it's all related to the fact, that contexts/implicit dependencies can't be resolved?

Reproduction Steps

This is the original issue:

const fargateService = ecs.FargateService.fromFargateServiceArn(

this,

"FargateService",

"ARNofFargateService"

);

cdkPipelineBackendDeployStage.addActions(

new codepipeline_actions.EcsDeployAction({

// Property 'cluster' is missing in type 'IFargateService' but required in type 'IBaseService'.ts(2741)

service: fargateService,

actionName: "DeployActionBackend",

})

);

This is the alternative setup of the Fargate Import with fromFargateServiceAttributes:

const stage="dev";

const backendFargateService = ecs.FargateService.fromFargateServiceAttributes(

this,

`${stage}ImportedBackendService`,

{

cluster: ecs.Cluster.fromClusterAttributes(

this,

`${stage}ImportedCluster`,

{

clusterName: cdk.Fn.importValue("ExistingCluster"),

vpc: ec2.Vpc.fromLookup(this, `${stage}ImportedVpc`, {

// error: All arguments to Vpc.fromLookup() must be concrete (no Tokens)

vpcId: cdk.Fn.importValue("ExistingVpcId"),

// Alternative with name leads to

// error: You can only specify either serviceArn or serviceName

// vpcName: "ShortNameVPC",

}),

securityGroups: [

ec2.SecurityGroup.fromSecurityGroupId(

this,

"importedSecGroup",

cdk.Fn.importValue("ExistingBackendSecurityGroup")

),

],

}

),

}

);

What did you expect to happen?

That the service argument accepts the imported Fargate Service object and can derive all already existing information from it, like the cluster, VPC, etc.

Additionally, the ECS deploy can redeploy the already created Fargate Service.

What actually happened?

Property 'cluster' is missing in type 'IFargateService' but required in type 'IBaseService'.ts(2741)

base-service.d.ts(180, 14): 'cluster' is declared here.

deploy-action.d.ts(37, 14): The expected type comes from property 'service' which is declared here on type 'EcsDeployActionProps'

Environment

- *CLI Version : *

- Framework Version: v.1.63.0 (CDK)

- Node.js Version: v12.18.2

- OS : Ubuntu

- Language (Version): TS 4.0.2

Other

This is :bug: Bug Report

All 21 comments

Today was a day full of investigations and new findings.. ;)

In the second attempt from above (see fromFargateServiceAttributes) I refactored all nested arguments like in the following to trace the error:

const importedVpc = Vpc.fromLookup(this, `ImportedVpc`, {

tags: {

name: "TargetAccountVpcShortName",

},

});

const importedCluster = Cluster.fromClusterAttributes(

this,

`ImportedCluster`,

{

clusterName: Fn.importValue("TargetAccountCluster"),

vpc: importedVpc,

securityGroups: [],

}

);

const backendFargateService = FargateService.fromFargateServiceAttributes(

this,

`ImportedBackendService`,

{

cluster: importedCluster,

serviceArn: Fn.importValue("TargetAccountBackendServiceArnOutput"),

}

);

const fargateServiceAction = new EcsDeployAction({

service: backendFargateService,

actionName: `DeployActionBackend`,

input: props.containerArtifact,

});

Result: All resources used the environment of the shared-account, where the pipeline resides (of course!).

This is not desired, because all resources are living in the target account.

So I created an additional stack and put everything from above inside it:

export interface EcsDeploymentProps extends StackProps {

containerArtifact: Artifact;

}

export class TargetAccountEcsDeploymentActionStack extends Stack {

readonly fargateServiceAction: codepipeline_actions.EcsDeployAction;

constructor(scope: cdk.Construct, id: string, props: EcsDeploymentProps) {

super(scope, id, props);

const importedVpc = Vpc.fromLookup(this, `ImportedVpc`, {

tags: {

name: "TargetAccountVpcShortName",

},

});

const importedCluster = Cluster.fromClusterAttributes(

this,

`ImportedCluster`,

{

clusterName: Fn.importValue("TargetAccountCluster"),

vpc: importedVpc,

securityGroups: [],

}

);

const backendFargateService = FargateService.fromFargateServiceAttributes(

this,

`ImportedBackendService`,

{

cluster: importedCluster,

serviceArn: Fn.importValue("TargetAccountBackendServiceArnOutput"),

}

);

this.fargateServiceAction = new codepipeline_actions.EcsDeployAction({

service: backendFargateService,

actionName: `DeployActionBackend`,

input: props.containerArtifact,

});

}

}

// instance creation in the cdk-pipeline stack (which has the env of shared-account)

const targetAccountDeploymentAction = new TargetAccountEcsDeploymentActionStack(

this,

`${stage}ImportedTargetAccountResourcesStack`,

{

containerArtifact: ecrBackendOutputArtifact,

env: {

account: targetAccountId,

region: this.region,

},

}

);

cdkBackendDeployStage.addActions(

targetAccountDeploymentAction.fargateServiceAction

);

result: Need to perform AWS calls for account targetAccount, but the current credentials are for sharedAccount.

The cross-account mechanism with cdk-pipelines works though.

Now or tomorrow I'll try a plugin recommended by support, which might solve these cross-account synth issues.

Again, I'm very open for discussions and other mechanisms in terms of detecting a container version trigger and deploy them to other accounts!

Looking forward to any response. ;)

Hello @michaelfecher ,

you're using the Vpc.fromLookup function, which will require credentials to access your targetAccount. They'll be needed only once, though, and then the results will be cached in cdk.context.json (which you should commit alongside your code), and then the credentials won't be needed anymore.

Thanks,

Adam

Thanks, @skinny85 for your reply.

However, the cdk.context.json is already within the codebase.

The actual CDK deploy on the target accounts is also working.

The EcsCodeDeploy should (re-)deploy ECR based containers from the shared-account to the target account.

And this piece isn't working.

Below is an excerpt of the cdk.context.json:

"availability-zones:account=targetAccountNumber:region=eu-west-1": [

"eu-west-1a",

"eu-west-1b",

"eu-west-1c"

],

"availability-zones:account=sharedAccount:region=eu-west-1": [

"eu-west-1a",

"eu-west-1b",

"eu-west-1c"

],

This is the output from the underlying CodeBuild project, which synthesizes the CF template within the CDK-Pipeline:

[Container] 2020/09/17 14:21:04 Running command npx cdk synth

DEBUG: before CdkPipelineMultiSourceActions={account:sharedAccount,region:eu-west-1}

[Error at /CdkPipelineMultiSourceActions/integrationImportedTargetAccountResourcesStack] Need to perform AWS calls for account targetAccount, but the current credentials are for sharedAccount

Well, cdk.context.json contains the Availability Zones, but not the VPC, which is what the Vpc.fromLookup() uses. 🙂
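A quick way to see which lookups are cached for an account is to scan the keys of cdk.context.json. This is a minimal sketch, assuming the key layout seen in the excerpt above (`<provider>:account=<id>:...`). The helper name `cachedProvidersFor` and the sample object are made up for illustration and are not part of CDK:

```typescript
// Context as it would look after parsing cdk.context.json
// (sample data copied from the excerpt in this thread).
type CdkContext = Record<string, unknown>;

// Hypothetical helper: returns the provider names (the part before the
// first ":") that have a cached entry for the given account.
function cachedProvidersFor(context: CdkContext, account: string): string[] {
  const providers: string[] = [];
  for (const key of Object.keys(context)) {
    const parts = key.split(":");
    const provider = parts[0];
    const matchesAccount = parts.indexOf("account=" + account) !== -1;
    if (matchesAccount && providers.indexOf(provider) === -1) {
      providers.push(provider);
    }
  }
  return providers.sort();
}

const sampleContext: CdkContext = {
  "availability-zones:account=targetAccountNumber:region=eu-west-1": [
    "eu-west-1a",
    "eu-west-1b",
    "eu-west-1c",
  ],
  "availability-zones:account=sharedAccount:region=eu-west-1": [
    "eu-west-1a",
    "eu-west-1b",
    "eu-west-1c",
  ],
};

// Only "availability-zones" is cached for the target account; no "vpc-provider" entry.
console.log(cachedProvidersFor(sampleContext, "targetAccountNumber"));
```

If the result for the target account has no vpc-provider entry, Vpc.fromLookup() still has to call into the target account during synth, which is where the credentials error comes from.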

Basically you're right that this information is missing in the cdk.context.json.

However, it doesn't work for a use-case, where the account infrastructure (incl. VPC) is being created each time from scratch like a test environment.

Why? The JSON is static and only rendered/filtered once during build/synth time.

Anyhow, I'll try that out to verify if it's working for the 80% solution. :)

In terms of reproducibility (and finding an easier way hopefully), I created a git repo to follow along with the approaches:

Initial attempt with Stage access:

https://github.com/michaelfecher/aws-cdk-pipelines-cross-account-ecs-deployment-issue/tree/stage-property-access-fail

Ok, I tried your suggested approach and it isn't failing during synth.

However, when the CDK-pipeline is trying to deploy to the target account, I'm getting the following:

Error: Need to perform AWS calls for account TARGET_ACCOUNT, but the current credentials are for PIPELINE_ACCOUNT.

Here's the part, I added to the cdk.context.json:

"vpc-provider:account=TARGET_ACCOUNT:filter.tag:name=TAGGED_VPC_NAME:region=eu-west-1:returnAsymmetricSubnets=true": {

"comment": "the existing vpcId needs to be added in this JSON manually and the VPC mustn't be deleted! Used for vpc.fromLookup method.",

"vpcId": "EXISTING_VPC_ID",

"stage": "integration",

"vpcCidrBlock": "10.0.0.0/16",

"availabilityZones": ["eu-west-1a", "eu-west-1b", "eu-west-1c"]

}

The call fromLookup (in an extra stack, which isn't part of the ApplicationStage, but has the environment from the TARGET_ACCOUNT) looks like the following:

const importedVpc = ec2.Vpc.fromLookup(this, `${stage}ImportedVpc`, {

tags: {

name: TAGGED_VPC_NAME,

},

});

I also changed the fromLookup arguments to vpcId, but that also didn't help.

Alternatively to the tagged variant, I used this in the cdk.context.json:

"vpc-provider:account=TARGET_ACCOUNT:filter.vpc-id:EXISTING_VPC_ID:region=eu-west-1": {

"comment": "the existing vpcId needs to be added in this JSON manually and the VPC mustn't be deleted! Used for vpc.fromLookup method.",

"vpcId": "EXISTING_VPC_ID",

"stage": "integration",

"vpcCidrBlock": "10.0.0.0/16",

"availabilityZones": [

"eu-west-1a",

"eu-west-1b",

"eu-west-1c"

]

}

When calling this.node.tryGetContext("vpcId") within the ImportingClusterResourcesTargetAccountStack, it returned undefined.
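The undefined is consistent with context being looked up by its top-level key: the entry is stored under the long vpc-provider:... key, and vpcId is only a field inside that entry's value. A minimal sketch that models this with a plain object (an illustration only, not CDK's implementation; `getContextValue` is a hypothetical stand-in for tryGetContext):

```typescript
type ContextMap = Record<string, unknown>;

// Key and value taken from the hand-written cdk.context.json entry above.
const providerKey =
  "vpc-provider:account=TARGET_ACCOUNT:filter.vpc-id:EXISTING_VPC_ID:region=eu-west-1";

const contextMap: ContextMap = {};
contextMap[providerKey] = {
  vpcId: "EXISTING_VPC_ID",
  vpcCidrBlock: "10.0.0.0/16",
};

// Hypothetical stand-in for tryGetContext: a lookup by top-level key only.
function getContextValue(context: ContextMap, key: string): unknown {
  return Object.prototype.hasOwnProperty.call(context, key)
    ? context[key]
    : undefined;
}

// "vpcId" is not a top-level key, so this lookup yields undefined.
console.log(getContextValue(contextMap, "vpcId"));

// The nested field is only reachable through the full provider key.
const entry = getContextValue(contextMap, providerKey) as { vpcId: string };
console.log(entry.vpcId);
```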

@michaelfecher silly question, but did you actually git push all of your changes that you mentioned (including cdk.context.json)?

There are no silly questions... :)

Yes, it's within the master branch, where the CDK resides

Ok, during our Slack conversations, we found out, that I created all the context by myself, which isn't OK. ;)

I did that manual editing because the synth itself wasn't able to figure it out (see original post) and was failing already.

Consequently, the cdk.context.json wasn't created by CDK itself during synth.

In general, the question is on how to reuse cross-account resources in a CDK-pipeline like the VPC.

Find the minimal example with the importing resources from the target account approach here:

https://github.com/michaelfecher/aws-cdk-pipelines-cross-account-ecs-deployment-issue/tree/cross-account-resources-import-fails

To sum up:

- Do not fill the cdk.context.json file manually.

- When you get the error "Need to perform AWS calls for account target_account, but the current credentials are for shared_account", set the credentials locally for target_account, and execute cdk synth again locally. That will populate the cdk.context.json file.

- Make sure to Git commit and push that file to your repository.

Talked offline on Slack.

Hey guys, so I am trying to do exactly the same. I followed your discussion and implemented the same approach, and now I indeed also get the same error while trying to cdk deploy:

Need to perform AWS calls for account TARGET_ACCOUNT, but the current credentials are for PIPELINE_ACCOUNT.

Now I'm trying to figure out the solution that you discussed in Slack 😃

My cdk.context.json is already generated and contains exactly the values for vpc in the target account.

So... what's missing now? Running cdk synth --profile target_account doesn't change anything.

@innokenty it means you're still missing some values in your cdk.context.json file for the target account. Make sure the correct cdk.context.json file is git pushed.

Yeah, this I understood, but how can I add the values there? I already have some values there for the target account vpc. As I understood synth command should create them somehow, but it doesn't change anything.

P.S. this has nothing to do with the push, as I'm trying to cdk deploy the pipeline itself first. This is the step that fails, we're not talking about running the pipeline yet.

Can you show the exact command that you run that is failing?

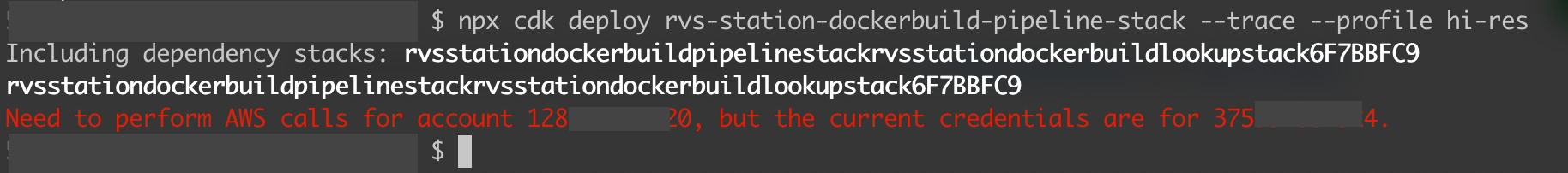

npx cdk deploy my-pipeline-stack --profile pipeline-account

gives

Including dependency stacks: <id_of_my_auxillary_lookup_stack>

<id_of_my_auxillary_lookup_stack>

Need to perform AWS calls for account <target_account>, but the current credentials are for <pipeline-account>

Uhm. What's the "auxillary lookup stack"?

Also, try the -e switch. But I think you're doing something peculiar here.

I mean the TargetAccountEcsDeploymentActionStack from the example above, of course. To sum up, there is:

- The pipeline called my-pipeline, that must be deployed in pipeline-account.

- Pipeline gets sources from a codecommit repo, builds a docker image, pushes it to an ecr bucket, all in the same pipeline-account.

- Then pipeline performs an EcsDeployAction, but it needs to deploy the image to target-account.

- Therefore it creates the stack that I called auxiliary (TargetAccountEcsDeploymentActionStack in the example from @michaelfecher), passes env: targetAccountEnv in there. That stack performs lookup of the VPC, the cluster and the service in the target account.

- That looked up information we use to pass the service parameter to EcsDeployAction.

Now, the cdk code is written. My cdk.context.json contains contents like in the snippets above from Michael. I am doing cdk synth --profile pipeline_account or cdk synth --profile target_account – they both work, contents of cdk.context.json don't change.

Then I'm trying to deploy the pipeline stack itself – in order to try to run it in codepipeline after. I am doing:

cdk deploy my-pipeline-stack --profile pipeline_account

and here is when it breaks, during the cdk deploy command, I get this same error:

Including dependency stacks: <id_of_my_auxillary_lookup_stack>

<id_of_my_auxillary_lookup_stack>

Need to perform AWS calls for account <target_account>, but the current credentials are for <pipeline-account>

Am I doing something wrong? Looks exactly the same as in example from Michael to me. I am not sure what the solution is though, the one you found and discussed privately 😺

Hm, I will try with -e, maybe it's actually the problem, that it's trying to deploy the "lookup stack" as well, which we don't want.

Deploying with -e gives other error output during deploy, I suppose I need to _check my privileges_ or something 😄

So tl;dr: the solution seems to be to deploy the pipeline with the -e flag, so that it doesn't try to deploy the auxiliary stack that we're using for vpc/cluster/service lookup only!

Yeah, so one more summary for those trying to do the same. If you're getting this error while trying to deploy your pipeline with -e flag:

Policy contains a statement with one or more invalid principals. (Service: Kms, Status Code: 400...)

This is happening because you first need to deploy your "auxiliary lookup stack" in your target account. The one that's called TargetAccountEcsDeploymentActionStack in the example above. Check the output of your cdk synth, it should have a new stack that would be called integrationImportedTargetAccountResourcesStack in the example above.

Do this first:

cdk deploy integrationImportedTargetAccountResourcesStack --profile target_profile

And then deploy your pipeline:

cdk deploy my-pipeline-stack -e --profile pipeline_account
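The two-step order can also be captured in a small helper. This is a sketch only: it just builds the two command strings from this thread and does not run anything. The stack and profile names are the placeholders used above, and `deployOrder` and `DeployPlan` are made-up names:

```typescript
interface DeployPlan {
  lookupStack: string;     // stack that imports VPC/cluster/service from the target account
  targetProfile: string;   // CLI profile with target-account credentials
  pipelineStack: string;   // stack that contains the pipeline
  pipelineProfile: string; // CLI profile with pipeline-account credentials
}

// Returns the commands in the order they have to be run:
// first the lookup stack in the target account, then the pipeline
// with -e so its dependency stacks are not deployed along with it.
function deployOrder(plan: DeployPlan): string[] {
  return [
    "cdk deploy " + plan.lookupStack + " --profile " + plan.targetProfile,
    "cdk deploy " + plan.pipelineStack + " -e --profile " + plan.pipelineProfile,
  ];
}

const commands = deployOrder({
  lookupStack: "integrationImportedTargetAccountResourcesStack",
  targetProfile: "target_profile",
  pipelineStack: "my-pipeline-stack",
  pipelineProfile: "pipeline_account",
});

for (const command of commands) {
  console.log(command);
}
```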

Hope this helps someone 🙏

God Bless! 🇺🇸

@innokenty

The summary of Adam's and my conversation was, that I had a wrong understanding of CDK Pipelines and the ECS CodeDeploy.

I assumed, that I could utilize ECR in combination with CodeDeploy in a CDK Pipeline, which isn't the use case.

Instead, I switched completely to a (Docker)Asset based approach, which works as I expect.

However, thanks for posting the solution for your issue. :)

Take care ;)