Aws-cdk: Deployment crashing while zipping assets

I'm submitting a ...

- [x] :beetle: bug report

What is the current behavior?

After upgrading to CDK 0.36.0, deployment fails without an error message while creating an asset zip file for my lambda. Running deploy in verbose mode, i can see the last debug message printed:

Preparing zip asset from directory: cdk.out/asset.357a0d25d1...Please tell us about your environment:

- CDK CLI Version: 0.36.0

- Module Version: 0.36.0

- OS: Antergos (Arch Linux)

- Language: Typescript

Other information

After looking through the corresponding source code (here) it seems like the process is crashing completely, as thefinallystatement (removing the staging directory in/tmp) is'nt run, the directory still exists.

I've made sure there is enough disk space in/tmpand it is writable.

All 53 comments

Can you give more information about the content of the folder you are zipping?

It is a gatsby application with some dependent subprojects, so there are:

- package.json

- node_modules

- src

- main.js

- gatsby-config.js

- gatsby-node.js

- gatsby-browser.js

- components (subproject containing package.json and src folder)

- feature-toggles (subproject containing package.json and src folder)

- lambda (folder with the lambda handler and some additional source files)

- yarn.lock

What about the size and number of files? Is it failing with for a specific Lambda function or all functions?

25823 files

341 links

5077 directories

Total size of the directory is 239M, with 236M being node_modules

This is a major issue.

I remember @rix0rrr had a comment about this in #2931, and I can't see if it was addressed or not. If it wasn't, I rather we revert this change and rethink this.

@eladb agree

@PygmalionPolymorph it would be nice if you could share your code (at least what's generating the folder content) so that we can reproduce. Can you also confirm that the issue is specific to this Lambda function's asset?

I have another lambda@edge inside the same stack which is being deployed fine, so yes, it is specific to this asset. I don't know whether this occurs on other lambdas as well though, as i only have this stack..

And unfortunately i can't share the code as it is a closed source client project

Can you at least share the dependencies of your package.json so that I can generate an equivalent node_modules folder?

Sure, here they are:

"dependencies": {

"@clientname/components": "*",

"@contentful/rich-text-react-renderer": "^13.2.0",

"@contentful/rich-text-types": "^13.1.0",

"babel-plugin-styled-components": "^1.10.2",

"dotenv": "^8.0.0",

"gatsby": "2.9.4",

"gatsby-cli": "^2.6.0",

"gatsby-image": "^2.1.0",

"gatsby-plugin-google-gtag": "^1.0.17",

"gatsby-plugin-manifest": "^2.1.1",

"gatsby-plugin-offline": "^2.1.1",

"gatsby-plugin-react-helmet": "^3.0.12",

"gatsby-plugin-sharp": "^2.0.37",

"gatsby-plugin-styled-components": "^3.1.0",

"gatsby-source-contentful": "^2.0.62",

"gatsby-source-filesystem": "^2.0.37",

"gatsby-transformer-sharp": "^2.1.19",

"prop-types": "^15.7.2",

"react": "^16.8.6",

"react-dom": "^16.8.6",

"react-helmet": "^5.2.1",

"styled-components": "^4.3.2"

}

@clientname/components has these:

"dependencies": {

"@clientname/feature-toggles": "*",

"react": "^16.8.6",

"styled-components": "^4.3.2"

}

And @clientname/feature-toggles doesn't have any

I'm able to zip the node_modules after installing all the dependencies:

import { zipDirectory } from '../archive';

(async () => {

await zipDirectory('./node_modules', 'test.zip');

})();

Around 1.5GB of RAM is used by node during this process

I am not 100% comfortable that we need to load the entire directory into memory...

I am not 100% comfortable that we need to load the entire directory into memory...

Not sure it's the case, zipDirectory uses streams.

I've had a colleague try the same deployment, and it worked on his machine. I'm not quite sure how to continue debugging this.

@PygmalionPolymorph can you add a console.log(file) statement in the forEach?

(before L#26 in https://unpkg.com/[email protected]/lib/archive.js)

Is it then crashing after all files have been appended?

Yeah, i'll investigate, where it crashes exactly...

Tried this:

console.log('Appending files...');

files.forEach(file => {

console.log(file);

archive.append(fs.createReadStream(path.join(directory, file)), {

name: file,

date: new Date('1980-01-01T00:00:00.000Z'),

});

});

console.log('Done appending');

console.log('Finalizing...');

archive.finalize();

console.log('Done Finalizing');

// archive has been finalized and the output file descriptor has closed, resolve promise

output.once('close', () => {

console.log('Output closing...');

ok();

});

And i can see Done finalizing, but not Output closing.... Seems like it has nothing to do with the zipping itself

The zipping is async and happens after the sync call to archive.finalize().

Can you console log in the archive.on('warning', ...) and archive.on('error', ...)

Strangely, if i change the ok() to fail(), i can see both the log Output closing... and the error resulting from the failing promise

The zipping is async and happens after the sync call to

archive.finalize().

Oh, right..

Can you console log in the

archive.on('warning', ...)andarchive.on('error', ...)

I did, but none of those two were called.

archive.on('warning', (err) => {

console.log('WARNING');

console.log(err);

fail(err);

});

archive.on('error', (err) => {

console.log('ERROR');

console.log(err);

fail(err);

});

Do you see this debug message?

https://github.com/awslabs/aws-cdk/blob/aa28db7179f367558cfe82658c25d1d3a916bea2/packages/aws-cdk/lib/assets.ts#L86

Do you see this debug message?

No, the process ends before that

Can you add this in zipDirectory?

output.on('error', (err) => console.log(err));

output.on('warning', (err) => console.log(err));

Can you add this in

zipDirectory?output.on('error', (err) => console.log(err)); output.on('warning', (err) => console.log(err));

Both don't get called

Node version?

v10.16.0

What are the differences with your colleague's environnement/machine?

v10.16.0

I just tried with Node 12, but i get the same error

What are the differences with your colleague's environnement/machine?

He is also running Arch Linux, and i suppose the same node version. I'll ask him for his machine specs tomorrow. It also runs fine on our Gitlab CI server running alpine, as well as on the MacOS machines of two other colleagues.

Seems like it's something personal :smile:

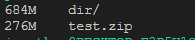

Made some more tests and I was able to successfully zip a directory containing 3 times the mentioned dependencies (98,193 files and 14,952 folders):

(note: this is way beyond the 50MB zip file limit for AWS Lambda...)

I notice mention of the presence of symlinks. A normal node install wouldn't create those, so is it the presence of symlinks that is causing the issue?

I put an intermediate step into our deployment which removes all symlinks prior to zipping the lambda, but that didnt' seem to solve the problem unfortunately.

I just confirmed however, that the zipping is the problem by feeding a path to a previously built zip file into the lambda construct. That went through and the deployment worked fine.

As no one else seems to have this problem, i think i will just zip the lambda myself beforehand.

I got the same issue recently. I tried to debug it and it seems that the issue related to the archiver module used in zipDirectory.

What i found out:

When appending files to the archiver they are added to the async.queue. On queue.drain event the finalization happens. Normally everything works fine, but in my case for one of the asset folders the queue.drain event is not coming. No errors or something present. I will try to investigate more for this issue

Update:

I have no idea why it is happening, just at some point callbacks deep inside ZipArchiveOutputStream are not fired. Maybe some issues with archiver implementation

I wonder if there is an issue where node runs out of memory while trying to drain the queue, and the process dies... Could you try to run again while setting NODE_OPTIONS=--max-old-space-size=8192?

@artyom-melnikov can you provide code to reproduce the issue?

@jogold I can provide you with the content of the folder on which I always can reproduce this issue (this is node_modules for my project, I also verified that issue remains after I unzip content).

https://drive.google.com/open?id=1LPF5cJX9jZAEPscgp4vJlytJTq0dmZVo

As for the code - I used this one: (based on aws-cdk/lib/archive.js:36)

zipDirectory('/path/to/layer', '/tmp/1.zip')

.then(() => console.log('Done'));

The 'Done' is never printed for that folder, works fine with other

Some more observations:

- I tried to remove some folder and it seems that when there are about 7200 files the process works fine (originally there are 10500 files)

- I'm using

Ubuntu 18.04.1 LTS, I have a feeling that it can be a platform-related issue

@artyom-melnikov cannot reproduce with your zip on WSL using Ubuntu...

@jogold seems like a platform-related issue then... I can try to debug it on my machine if you want, but for that, I need some pointers, I'm not a nodejs expert

@artyom-melnikov can you try playing with nodir and follow here?

https://github.com/aws/aws-cdk/blob/cd0e9bd53e37f4b5824d64a0234e28c3a86b1ed9/packages/aws-cdk/lib/archive.ts#L12-L17

@jogold I tried to change those but it didn't result in anything good. I also tried to pin archiver to the versions used to work for me and it doesn't work either, so the problem with the new code in the zipDirectory function.

I have a bold guess:

files.forEach(file => { // Append files serially to ensure file order

archive.append(fs.createReadStream(path.join(directory, file)), {

name: file,

date: new Date('1980-01-01T00:00:00.000Z'), // reset dates to get the same hash for the same content

});

});

Shouldn't such code result in all files being open simultaneously? I feel like it can be related to the EMFILE, too many open files and the OS file limit. Will try to check this version

@jogold I created a very crude solution that sends files in batch as soon as the previous batch is processed

function zipDirectory(directory, outputFile) {

return new Promise((ok, fail) => {

// The below options are needed to support following symlinks when building zip files:

// - nodir: This will prevent symlinks themselves from being copied into the zip.

// - follow: This will follow symlinks and copy the files within.

const globOptions = {

dot: true,

nodir: true,

follow: true,

cwd: directory,

};

const files = glob.sync('**', globOptions); // The output here is already sorted

const output = fs.createWriteStream(outputFile);

const archive = archiver('zip');

archive.on('warning', fail);

archive.on('error', fail);

let i = 0;

archive.on('progress', (progress) => {

if (progress.entries.total === progress.entries.processed) {

// Previous batch has been processed, add next batch

if (i < files.length) {

let j = i;

for (j; j < Math.min(files.length, i + 1000); j++) {

appendFile(archive, directory, files[j]);

}

i = j;

} else {

// Everything done, finalize

archive.finalize();

}

}

});

archive.pipe(output);

if (files.length > 0) {

// Send first file to start processing

appendFile(archive, directory, files[i++]);

} else {

archive.finalize();

}

output.once('close', () => ok());

});

}

function appendFile(archive, directory, file) {

archive.append(fs.createReadStream(path.join(directory, file)), {

name: file,

date: new Date('1980-01-01T00:00:00.000Z')

});

}

This solution works for me, processing everything and returning proper callback. Please consider using similar approach

Can you try with:

files.forEach(file => { // Append files serially to ensure file order

archive.file(path.join(directory, file), {

name: file,

date: new Date('1980-01-01T00:00:00.000Z'), // reset dates to get the same hash for the same content

});

});

archive.file uses lazystream to solve this issue (EMFILE), but then I'm not sure that file order is preserved across zips...

@jogold yes, this solution also works

@aereal @artyom-melnikov can you try this https://github.com/jogold/aws-cdk/commit/d0464b2869cf572c3057e532a15781ce57eb0a84

@jogold that one didn't work for me

@jogold that one didn't work for me

The last one? https://github.com/aws/aws-cdk/compare/master...jogold:fix-zip

@jogold https://github.com/aws/aws-cdk/compare/master...jogold:fix-zip works for me!

@PygmalionPolymorph can you give it a try?

@artyom-melnikov and this one https://github.com/aws/aws-cdk/compare/master...jogold:fix-zip-stream?

@jogold no, https://github.com/aws/aws-cdk/compare/master...jogold:fix-zip-stream is not working

@artyom-melnikov the one from this commit https://github.com/jogold/aws-cdk/commit/151303919036a7d8c913e008cc94328d32f9946d?

@jogold yes, the one which use graceful-fs

@artyom-melnikov ok reverting to something much simpler, can you try this last one https://github.com/jogold/aws-cdk/commit/d34e6060302dc1ee29cca3011ae716b834a20a31? Will then open a PR

@jogold the last solution https://github.com/jogold/aws-cdk/commit/d34e6060302dc1ee29cca3011ae716b834a20a31 works for me!