Aspnetcore: "The client reset the stream" stress errors?

ASP.NET Core 3.0.0-preview7.19365.7

I:

- downloaded the latest SDK from https://dotnetcli.blob.core.windows.net/dotnet/Sdk/master/dotnet-sdk-latest-win-x64.exe

- sync'd corefx to master

- cd d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress

- dotnet run -c Release

That runs HttpClient with HTTP/2 against Kestrel, using whatever SDK is installed.

When I do that, I get frequent failures like:

Error from iteration 1839 (GET Parameters) in task 5 with 19797 successes / 11 fails:

System.Net.Http.HttpRequestException: An error occurred while sending the request.

---> System.IO.IOException: The request was aborted.

---> System.Net.Http.Http2StreamException: The HTTP/2 server reset the stream. HTTP/2 error code 'PROTOCOL_ERROR' (0x1).

--- End of inner exception stack trace ---

at System.Net.Http.Http2Connection.Http2Stream.CheckResponseBodyState() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 627

at System.Net.Http.Http2Connection.Http2Stream.TryEnsureHeaders() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 655

at System.Net.Http.Http2Connection.Http2Stream.ReadResponseHeadersAsync(CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 700

at System.Net.Http.Http2Connection.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Connection.cs:line 1698

--- End of inner exception stack trace ---

at System.Net.Http.Http2Connection.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Connection.cs:line 1698

at System.Net.Http.HttpConnectionPool.SendWithRetryAsync(HttpRequestMessage request, Boolean doRequestAuth, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\HttpConnectionPool.cs:line 541

at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\RedirectHandler.cs:line 33

at System.Net.Http.HttpClient.FinishSendAsyncBuffered(Task`1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\HttpClient.cs:line 546

at HttpStress.RequestContext.SendAsync(HttpRequestMessage request, HttpCompletionOption httpCompletion, Nullable`1 token) in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\ClientOperations.cs:line 76

at HttpStress.ClientOperations.<>c.<<get_Operations>b__1_3>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\ClientOperations.cs:line 164

--- End of stack trace from previous location where exception was thrown ---

at HttpStress.StressClient.<>c__DisplayClass2_2.<<-ctor>g__RunWorker|4>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\StressClient.cs:line 147

and

Error from iteration 896 (POST Duplex Slow) in task 4 with 9115 successes / 10 fails:

System.Net.Http.HttpRequestException: Error while copying content to a stream.

---> System.IO.IOException: The request was aborted.

---> System.IO.IOException: Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host..

---> System.Net.Sockets.SocketException (10054): An existing connection was forcibly closed by the remote host.

--- End of inner exception stack trace ---

at System.Net.Security.SslStream.<WriteSingleChunk>g__CompleteAsync|210_1[TWriteAdapter](ValueTask writeTask, Byte[] bufferToReturn)

at System.Net.Security.SslStream.WriteAsyncInternal[TWriteAdapter](TWriteAdapter writeAdapter, ReadOnlyMemory`1 buffer)

at System.Net.Http.Http2Connection.FlushOutgoingBytesAsync() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Connection.cs:line 186

--- End of inner exception stack trace ---

at System.Net.Http.Http2Connection.Http2Stream.CheckResponseBodyState() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 627

at System.Net.Http.Http2Connection.Http2Stream.TryReadFromBuffer(Span`1 buffer) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 754

at System.Net.Http.Http2Connection.Http2Stream.ReadDataAsync(Memory`1 buffer, HttpResponseMessage responseMessage, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 813

at System.IO.Stream.CopyToAsyncInternal(Stream destination, Int32 bufferSize, CancellationToken cancellationToken)

at System.Net.Http.HttpConnectionResponseContent.SerializeToStreamAsync(Stream stream, TransportContext context, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\HttpConnectionResponseContent.cs:line 47

at System.Net.Http.HttpContent.LoadIntoBufferAsyncCore(Task serializeToStreamTask, MemoryStream tempBuffer) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\HttpContent.cs:line 436

--- End of inner exception stack trace ---

at System.Net.Http.HttpContent.LoadIntoBufferAsyncCore(Task serializeToStreamTask, MemoryStream tempBuffer) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\HttpContent.cs:line 443

at System.Net.Http.HttpContent.WaitAndReturnAsync[TState,TResult](Task waitTask, TState state, Func`2 returnFunc) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\HttpContent.cs:line 716

at HttpStress.ClientOperations.<>c.<<get_Operations>b__1_8>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\ClientOperations.cs:line 268

--- End of stack trace from previous location where exception was thrown ---

at HttpStress.StressClient.<>c__DisplayClass2_2.<<-ctor>g__RunWorker|4>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\StressClient.cs:line 147

and if I instead run with dotnet run -c Release -aspnetlog in order to see server failures, I see errors like:

fail: Microsoft.AspNetCore.Server.Kestrel[13]

Connection id "0HLOHSA333TBP", Request id "0HLOHSA333TBP:000045E3": An unhandled exception was thrown by the application.

System.IO.IOException: The request stream was aborted.

---> Microsoft.AspNetCore.Connections.ConnectionAbortedException: The HTTP/2 connection faulted.

---> System.IO.IOException: The read operation failed, see inner exception.

---> System.InvalidOperationException: Reading is already in progress.

at System.IO.Pipelines.ThrowHelper.ThrowInvalidOperationException_AlreadyReading()

at System.IO.Pipelines.Pipe.GetReadResult(ReadResult& result)

at System.IO.Pipelines.Pipe.ReadAsync(CancellationToken token)

at System.IO.Pipelines.Pipe.DefaultPipeReader.ReadAsync(CancellationToken cancellationToken)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.DuplexPipeStream.ReadAsyncInternal(Memory`1 destination, CancellationToken cancellationToken)

at System.Net.Security.SslStream.<FillBufferAsync>g__InternalFillBufferAsync|215_0[TReadAdapter](TReadAdapter adap, ValueTask`1 task, Int32 min, Int32 initial)

at System.Net.Security.SslStream.ReadAsyncInternal[TReadAdapter](TReadAdapter adapter, Memory`1 buffer)

--- End of inner exception stack trace ---

at System.Net.Security.SslStream.ReadAsyncInternal[TReadAdapter](TReadAdapter adapter, Memory`1 buffer)

at System.IO.Pipelines.StreamPipeReader.ReadAsync(CancellationToken cancellationToken)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http2.Http2Connection.ProcessRequestsAsync[TContext](IHttpApplication`1 application)

--- End of inner exception stack trace ---

--- End of inner exception stack trace ---

at System.IO.Pipelines.PipeCompletion.ThrowLatchedException()

at System.IO.Pipelines.Pipe.GetReadResult(ReadResult& result)

at System.IO.Pipelines.Pipe.GetReadAsyncResult()

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http2.Http2MessageBody.ReadAsync(CancellationToken cancellationToken)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.HttpRequestStream.CopyToAsyncInternal(Stream destination, CancellationToken cancellationToken)

at HttpStress.StressServer.<>c.<<MapRoutes>b__10_5>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\StressServer.cs:line 172

--- End of stack trace from previous location where exception was thrown ---

at Microsoft.AspNetCore.Routing.EndpointMiddleware.<Invoke>g__AwaitRequestTask|6_0(Endpoint endpoint, Task requestTask, ILogger logger)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.HttpProtocol.ProcessRequests[TContext](IHttpApplication`1 application)

in addition to ones (presumably expected due to cancellation) about the client resetting the stream.

If I instead run with dotnet run -c Release -httpSys, which uses UseHttpSys instead of UseKestrel, the stress app runs happily with no client errors until I eventually terminate it.

cc: @davidfowl, @halter73, @geoffkizer, @eiriktsarpalis

All 28 comments

Turns out we didn't fix it @jkotalik

Just noticed this is running preview7, its fixed in preview8

Thanks, @davidfowl. I thought that was strange, but figured it was just one of our many versioning oddities. Turns out even though I uninstalled all previous .NET Core versions, I still had a stale preview7 folder sitting in C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App, and apparently dotnet was preferring that over the brand new preview 8 folder.

Deleted the old folder, and now the "in progress" reading errors went away, or at least I haven't seen any yet.

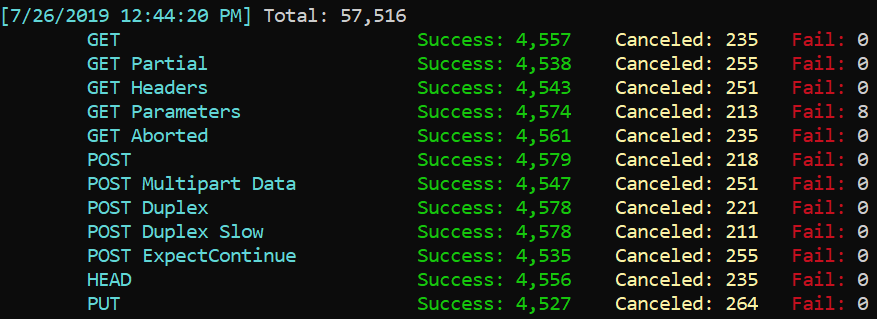

I do still however see sporadic failures, e.g. I just spent 20s or so running:

d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress>dotnet run -c Release -aspnetlog

and out of the 50K successes, I saw 8 sporadic failures:

On the client I see these failures as:

Error from iteration 4887 (GET Parameters) in task 10 with 51449 successes / 8 fails:

System.Net.Http.HttpRequestException: An error occurred while sending the request.

---> System.IO.IOException: The request was aborted.

---> System.Net.Http.Http2StreamException: The HTTP/2 server reset the stream. HTTP/2 error code 'PROTOCOL_ERROR' (0x1).

--- End of inner exception stack trace ---

at System.Net.Http.Http2Connection.Http2Stream.CheckResponseBodyState() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 627

at System.Net.Http.Http2Connection.Http2Stream.TryEnsureHeaders() in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 655

at System.Net.Http.Http2Connection.Http2Stream.ReadResponseHeadersAsync(CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Stream.cs:line 700

at System.Net.Http.Http2Connection.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Connection.cs:line 1698

--- End of inner exception stack trace ---

at System.Net.Http.Http2Connection.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\Http2Connection.cs:line 1698

at System.Net.Http.HttpConnectionPool.SendWithRetryAsync(HttpRequestMessage request, Boolean doRequestAuth, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\HttpConnectionPool.cs:line 541

at System.Net.Http.RedirectHandler.SendAsync(HttpRequestMessage request, CancellationToken cancellationToken) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\SocketsHttpHandler\RedirectHandler.cs:line 33

at System.Net.Http.HttpClient.FinishSendAsyncBuffered(Task`1 sendTask, HttpRequestMessage request, CancellationTokenSource cts, Boolean disposeCts) in D:\repos\corefx\src\System.Net.Http\src\System\Net\Http\HttpClient.cs:line 546

at HttpStress.RequestContext.SendAsync(HttpRequestMessage request, HttpCompletionOption httpCompletion, Nullable`1 token) in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\ClientOperations.cs:line 76

at HttpStress.ClientOperations.<>c.<<get_Operations>b__1_3>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\ClientOperations.cs:line 169

--- End of stack trace from previous location where exception was thrown ---

at HttpStress.StressClient.<>c__DisplayClass2_2.<<-ctor>g__RunWorker|4>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\StressClient.cs:line 147

and on the server log I see an appx equal number of:

fail: Microsoft.AspNetCore.Server.Kestrel[13]

Connection id "0HLOHT1QIJDED", Request id "0HLOHT1QIJDED:000143A9": An unhandled exception was thrown by the application.

System.IO.IOException: The client reset the request stream.

at System.IO.Pipelines.PipeCompletion.ThrowLatchedException()

at System.IO.Pipelines.Pipe.GetReadResult(ReadResult& result)

at System.IO.Pipelines.Pipe.GetReadAsyncResult()

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http2.Http2MessageBody.ReadAsync(CancellationToken cancellationToken)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.HttpRequestStream.ReadAsyncInternal(Memory`1 buffer, CancellationToken cancellationToken)

at HttpStress.StressServer.<>c.<<MapRoutes>b__10_7>d.MoveNext() in d:\repos\corefx\src\System.Net.Http\tests\StressTests\HttpStress\StressServer.cs:line 184

--- End of stack trace from previous location where exception was thrown ---

at Microsoft.AspNetCore.Routing.EndpointMiddleware.<Invoke>g__AwaitRequestTask|6_0(Endpoint endpoint, Task requestTask, ILogger logger)

at Microsoft.AspNetCore.Routing.EndpointRoutingMiddleware.SetRoutingAndContinue(HttpContext httpContext)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.HttpProtocol.ProcessRequests[TContext](IHttpApplication`1 application)

Seems like no one wants to take the blame ;-) "You canceled me. No, you canceled me. No, you canceled me."

I assume those failures occur when GET Parameters is canceled, correct? And the cancellation is done on the client-side?

I think what Kestrel is reporting is correct; initially seems like a client issue to me.

@jkotalik, that's certainly possible, but I don't see any client failures when running with UseHttpSys. What would explain the difference?

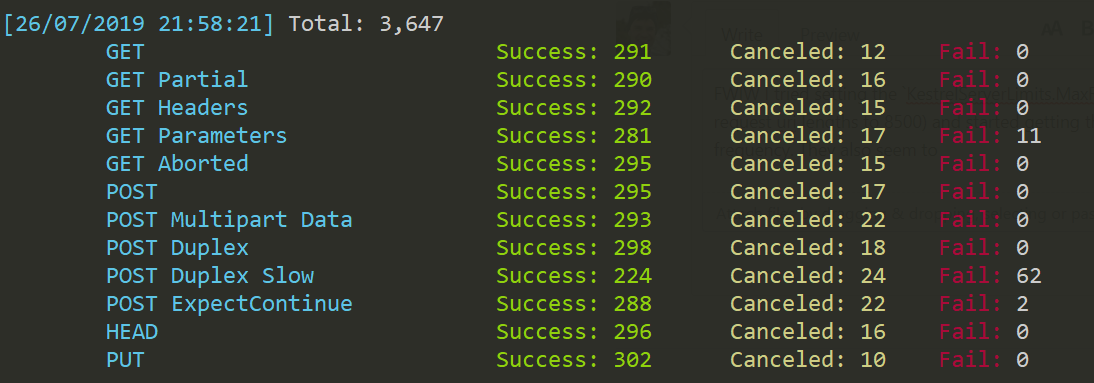

Also, I still see such issues even when the client doesn't issue cancellation at all, e.g.

dotnet run -c Release -cancelRate 0 -ops 0 2 3 5 6 7 8 9 10 11

Interesting, let me check the scenario and try to repro.

It is interesting though that the failures are always in the "GET Parameters" operation:

[7/26/2019 1:01:29 PM] Total: 320,330

GET Success: 32,034 Canceled: 0 Fail: 0

GET Headers Success: 32,035 Canceled: 0 Fail: 0

GET Parameters Success: 32,004 Canceled: 0 Fail: 32

POST Success: 32,037 Canceled: 0 Fail: 0

POST Multipart Data Success: 32,038 Canceled: 0 Fail: 0

POST Duplex Success: 32,039 Canceled: 0 Fail: 0

POST Duplex Slow Success: 32,029 Canceled: 0 Fail: 0

POST ExpectContinue Success: 32,032 Canceled: 0 Fail: 0

HEAD Success: 32,033 Canceled: 0 Fail: 0

PUT Success: 32,034 Canceled: 0 Fail: 0

Maybe we're hitting up against the kestrel line length limits again, and Http.Sys uses different limits that we're not hitting, or something like that.

I think Kestrel would throw a different exception if it did hit one of those limits though.

It could be that these logs aren't directly correlated, e.g. maybe we made a request exceeding a limit, kestrel balked and aborted the request, causing the client to abort some other request using the same connection (I'm waving my hands a bit), and then kestrel said "the client canceled me".

Trying to narrow it down now...

Definitely line length. If we send a URL that's 8170 characters long, everything runs smoothly. If we send a URL that's 8171 characters long, we get back "The HTTP/2 server reset the stream. HTTP/2 error code 'PROTOCOL_ERROR' (0x1).".

I believe it's the same issue as https://github.com/aspnet/aspnetcore/issues/12213.

@jkotalik, how should I think about the 8192 line limit length? i.e. how long should a URL should I expect to be able to GET before I fail because of that limit?

I'm still trying to rationalize it to myself. So the MaxRequestHeaderFieldSize applies only to Http2, but there is also the MaxRequestLineSize which is a general kestrel limit that defaults to 8KB as well. My guess is that even if we increase the MaxRequestHeaderFieldSize to 32 Kb like the issue said, we would hit the MaxRequestLineSize limit in Kestrel. However, it would return a different status code to the client rather than just saying there was a protocol error when we were doing HPack decoding.

I'll chat will @halter73 and @Tratcher to figure out how to make these limits work well. The main issue I see is if when the client receives the settings frame from the server, it has no knowledge of the MaxRequestHeaderFieldSize. I also think the MaxRequestHeaderFieldSize should at least be the size of the max window.

I believe the issue is with the stress suite which treats the max request line size as max request uri size. Adding this hack made all errors go away.

which treats the max request line size as max request uri size

Yup. That's why I was asking about how I should think about the max line length in regards to what URIs can be sent.

I think it's here

Hmm. The entirely of the URL I was using was 8171 characters, from the beginning of the scheme to the end of the query variables. If that's the only equation, that means _methodText for a GET was 21 characters?

Yeah that's interesting. I throw a breakpoint there and see why it's adding to over 8192.

So an exception I hit had the total requestLineLength as 8202.

The method text was GET (3 characters), scheme was 5 characters (https), host was 14 (localhost:5001), and the path was 8180 characters.

@jkotalik, yes, the current HttpStress app will create urls up to 8192, which is obviously wrong by this limit. But what I was saying is I changed the app to explicitly control the length of the urls, and it was failing with a url of length 8171, which is what's confusing me.

Are you sure the URL length is 8171 rather than the path? It seems like if the path is 8171 characters long, with the extra 22 characters it would exceed the limit.

And @Tratcher and I discussed fixing this error on the server to respond to the client with an error that wasn't a protocol violation. It may be too late/too risky to land in 3.0.

@stephentoub I presume the url length is increased when HttpClient.BaseAddress is taken into account?

I presume the url length is increased when HttpClient.BaseAddress is taken into account?

Oh! You changed to use BaseAddress? That would explain it :(

Closing this as the issue is understood and the server issue around limits is tracked elsewhere. Thanks.

FWIW I tried setting the KestrelServerLimits.MaxRequestLineSize property to 9216 (constraining request uri lengths to 8500) and started getting the same errors again, albeit at a much larger frequency. The errors also seem to leak to other operations this time:

The errors go away when I constraint the uris to 8200 characters.

That makes sense. It's hitting that limit as you randomly create a request with different path lengths.

Most helpful comment

Oh! You changed to use BaseAddress? That would explain it :(