Aspnetcore: [Blazor] Breaking big batches into smaller ones

We should consider breaking big batches to reduce payload size and improve UI update smoothness.

Imagine I have a component that renders A TON of elements. That implies we send a HUGE payload down through SignalR, which can hit size limits and takes time to compute.

The idea here is that while we are processing a batch, instead of producing a single huge batch, we can produce many smaller batches that end up becoming UI updates.

For example, if we want to maintain a frame rate of 30 frames per second, we can start processing renders from the render queue and, after 33ms, stop, produce a batch, and send it to the client.

This might require us to change the way we process the queue: instead of processing elements one by one in the order they arrive, we should process them in subtrees to guarantee a meaningful/correct render. In return, it will smooth updates when rendering large numbers of elements.
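A minimal sketch of the time-budget idea, written in TypeScript purely for illustration (the real renderer is .NET code). `RenderWork`, `Batch`, `drainWithBudget`, and the injectable `now` clock are hypothetical names, not Blazor APIs. It processes items in arrival order and does not attempt the subtree grouping mentioned above.

```typescript
// Hypothetical types: one RenderWork stands in for a single queued component render.
type RenderWork = { componentId: number; render: () => string };
type Batch = { updates: string[] };

// Drains the queue, closing the current batch whenever the time budget is spent.
// `now` is injectable so the behaviour can be exercised without real timers.
function drainWithBudget(
  queue: RenderWork[],
  budgetMs: number,
  send: (batch: Batch) => void,
  now: () => number = Date.now,
): void {
  let updates: string[] = [];
  let started = now();
  while (queue.length > 0) {
    const work = queue.shift()!;
    updates.push(work.render());
    if (now() - started >= budgetMs) {
      send({ updates }); // budget exhausted: ship what we have so far
      updates = [];
      started = now();
    }
  }
  if (updates.length > 0) {
    send({ updates }); // flush the final, possibly smaller, batch
  }
}
```

With a 33ms budget, a queue that would otherwise produce one large batch is delivered as several smaller ones, each containing only complete renders.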


@anurse @BrennanConroy @halter73 - is the size of message sent from SERVER to CLIENT a practical concern? Is this something we should investigate or is this a red herring?

_There is no limit on the message size when sending from SERVER to CLIENT_.

We have a maximum for CLIENT to SERVER messages. When sending from the server, using WebSockets, we will automatically chunk messages when they are larger than a single buffer in the Pipelines memory pool (4KB). Using Long Polling we load the whole shebang into a single ping response but we do so in a way that forces the server to use chunked encoding and send it in fragments (no more than 4KB each).

This is all to say I don't think there's a transport reason why the size of the message should be a concern. Sending 10 x 100MB vs sending 1GB should be about the same when it comes to getting bits on to the wire.

The JavaScript client is probably where I would have the biggest concern. If a Very Large Message™ comes in, the browser is forced to allocate a single buffer to hold it because ArrayBuffer guarantees contiguous memory. You'd have to profile the JavaScript code to know more about the effect that has.

If it's possible for you to create _logical_ chunks, where each chunk can actually be processed and disposed of separately, then I think that makes sense because it keeps the memory burden on the browser lower. If you are just taking a big message, splitting it at a byte boundary and stitching it back together on the client before processing then I don't think you'll see a benefit.
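To illustrate the difference between logical chunks and a byte-boundary split, here is a small TypeScript sketch. The `Update` shape and `chunkLogically` are hypothetical, and string length is used as a rough stand-in for payload size. Each chunk holds only whole updates, so a receiver could apply and discard it on its own.

```typescript
// Hypothetical shape of one self-contained UI update.
type Update = { target: string; html: string };

// Groups whole updates into chunks of roughly `maxSize` characters.
// An update is never split; an oversized update gets a chunk to itself.
function chunkLogically(updates: Update[], maxSize: number): Update[][] {
  const chunks: Update[][] = [];
  let current: Update[] = [];
  let size = 0;
  for (const u of updates) {
    const cost = u.html.length;
    if (current.length > 0 && size + cost > maxSize) {
      chunks.push(current);
      current = [];
      size = 0;
    }
    current.push(u);
    size += cost;
  }
  if (current.length > 0) {
    chunks.push(current);
  }
  return chunks;
}
```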

You can break big render batches into smaller ones by processing at most a fixed number of renders at a time. It's the equivalent of your graphics card not being able to keep up with the update rate and the screen only partially updating (but in the component case it's done logically).

This has an effect on the server side too, as it avoids allocating very large buffers, and in normal circumstances the effect on the client should be negligible. It's only when the server sends big payloads to the client that this has a stabilizing effect: the server doesn't spend a lot of time allocating and filling, let's say, a 1GB array with all the pending renders, and instead generates multiple smaller batches that can be delivered sooner and don't require much memory to compute.

So it sounds like you are able to chunk "logically", which means it would provide some benefit. The server-side memory allocation benefit is there, but less of a concern to me since we already use chains of pooled 4KB buffers there (though you may still have to allocate large buffers yourself to manage the data). You'd likely see an improvement in client-side allocations by doing this.

@anurse That's the point. It doesn't matter if you efficiently send 1GB of data in chunks if the app on the server still needs to allocate a 1GB buffer. By breaking batches into a given size, we can better leverage Array pooling and reduce memory usage.
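As a rough illustration of why a fixed batch size helps pooling, here is a toy buffer pool in TypeScript. This is not .NET's array pool and not how Blazor allocates; `BufferPool`, `rent`, and `giveBack` are hypothetical names. The point is only that same-sized buffers can be reused across batches instead of allocating one huge array.

```typescript
// Toy pool of equally sized buffers (hypothetical; for illustration only).
class BufferPool {
  private free: Uint8Array[] = [];
  private size: number;
  allocations = 0; // counts how many buffers were actually allocated

  constructor(size: number) {
    this.size = size;
  }

  // Reuses a returned buffer when one is available, otherwise allocates.
  rent(): Uint8Array {
    const reused = this.free.pop();
    if (reused !== undefined) {
      return reused;
    }
    this.allocations++;
    return new Uint8Array(this.size);
  }

  // Makes the buffer available for the next batch.
  giveBack(buffer: Uint8Array): void {
    this.free.push(buffer);
  }
}
```

If each batch rents a buffer, fills it, sends it, and gives it back, many batches can share one allocation, whereas a single 1GB batch needs a 1GB buffer up front.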

Does the chunking work automatically in server-side Blazor or do we have to configure something here?

@anurse

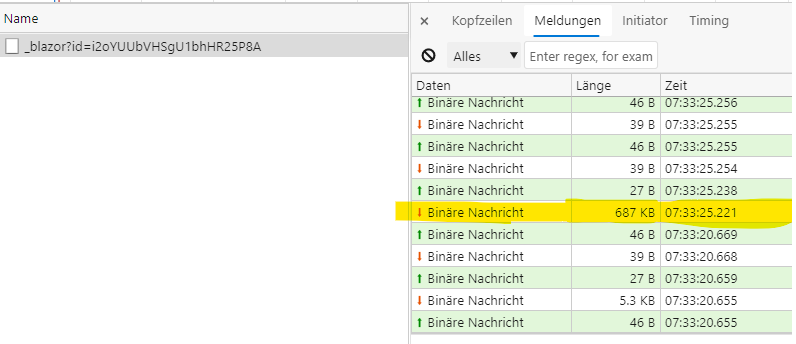

You've said "we will automatically chunk messages" . However, we currently see a huge message that is transferred from server to client and causes a high latency in the app:

So that seems to me there is no splitting of larger messages.

What can we do here?

I'm actually no longer on the ASP.NET team, @BrennanConroy or @halter73 could probably answer this question more accurately. So this is mostly the speculation of someone who used to be clued in to what was going on 😝.

I believe the data you're seeing in the browser dev tools isn't completely representative of what goes on the wire. WebSocket messages can be split into chunks, but each chunk is marked as a "continuation" message, so that the original message can be reassembled on the client. SignalR's JavaScript client depends on this behavior. So if you send a 16KB message in 4KB chunks, you get these WebSocket messages:

#1 [FIN: false] [Size: 4KB]

#2 [FIN: false] [Size: 4KB]

#3 [FIN: false] [Size: 4KB]

#4 [FIN: true ] [Size: 4KB]

(Where FIN is the FIN flag defined in the WebSocket header that indicates the full message is complete).
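The fragmentation above can be modelled with a short TypeScript sketch. `Frame` and `fragment` are hypothetical names and the model is heavily simplified: real WebSocket frames carry opcodes and other header fields, and the actual splitting happens inside the server's transport, not in application code.

```typescript
// Simplified model of a WebSocket data frame (illustrative only).
type Frame = { fin: boolean; payload: Uint8Array };

// Splits one logical message into frames of at most `frameSize` bytes.
// Only the last frame has FIN set, which tells the receiver the message is complete.
function fragment(message: Uint8Array, frameSize: number): Frame[] {
  if (message.length === 0) {
    return [{ fin: true, payload: message }];
  }
  const frames: Frame[] = [];
  for (let offset = 0; offset < message.length; offset += frameSize) {
    const end = Math.min(offset + frameSize, message.length);
    frames.push({
      fin: end === message.length,
      payload: message.subarray(offset, end),
    });
  }
  return frames;
}
```

Calling `fragment(new Uint8Array(16 * 1024), 4096)` yields four 4KB frames, with FIN set only on the fourth, matching the listing above.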

So along the wire it is being sent in chunks, and I believe if you used a network tracing tool like Wireshark, you'd see that.

However, going back to something I said above about the JS client:

The JavaScript client is probably where I would have the biggest concern. If a Very Large Message™ comes in, the browser is forced to allocate a single buffer to hold it because ArrayBuffer guarantees contiguous memory.

The browser-provided APIs for WebSockets don't expose partial messages. The browser waits for all the chunks to arrive and then allocates a single ArrayBuffer for the whole message. The browser dev tools are also showing complete messages and not the intermediate chunks that they make up.
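Conceptually, the reassembly step looks something like the TypeScript sketch below. The browser does this internally; `reassemble` is a hypothetical helper used only to show that the full message ends up in one contiguous allocation regardless of how many fragments arrived.

```typescript
// Joins fragment payloads into one contiguous ArrayBuffer (illustrative only).
function reassemble(fragments: Uint8Array[]): ArrayBuffer {
  const total = fragments.reduce((sum, f) => sum + f.length, 0);
  const buffer = new ArrayBuffer(total); // a single allocation sized for the whole message
  const view = new Uint8Array(buffer);
  let offset = 0;
  for (const f of fragments) {
    view.set(f, offset);
    offset += f.length;
  }
  return buffer;
}
```

The allocation size depends on the total message size, not on the fragment size, which is why transport-level chunking does not reduce the memory burden on the client.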

I'm also not sure that network chunking is really going to help with latency. Chunking helps with latency if the client can do something with the chunks as they arrive. However, in this case the message being sent is a single Blazor rendering batch that can't really be "partially processed". The Blazor code on the client must, by definition, wait for the entire batch to arrive before it can process it. Unless chunking occurs at the Blazor level (i.e. breaking a large batch down into smaller complete messages where possible), I don't think any SignalR-implemented chunking would actually help latency. This is also generally true for any protocol running over SignalR. If we do chunking, we're splitting messages at completely arbitrary boundaries. Only when the message is reconstructed could the application actually process it. If the application (Blazor in this case) makes its messages smaller, it can process them as they arrive.

This issue is about us potentially breaking server-side Blazor (SSB) render batches into smaller chunks to provide more "continuous", "faster" render cycles, but we haven't heard feedback that this is necessary, so it's very unlikely we'll do anything here.

@anurse

Thanks for clarification. Now it's clear.

However, I've analysed our issues and it's not a problem with Blazor.
