Argo-cd: Prometheus-operator CRDs out-of-sync due to `helm.sh/hook: crd-install` annotation missing

Describe the bug

I'm currently using the latest available image in a test cluster:

Image: argoproj/argocd:latest

Image ID: docker-pullable://argoproj/argocd@sha256:f3fcd9a9866d541e1bbdfc20749b57d37eceacc857572c1e59470e289220a1ff

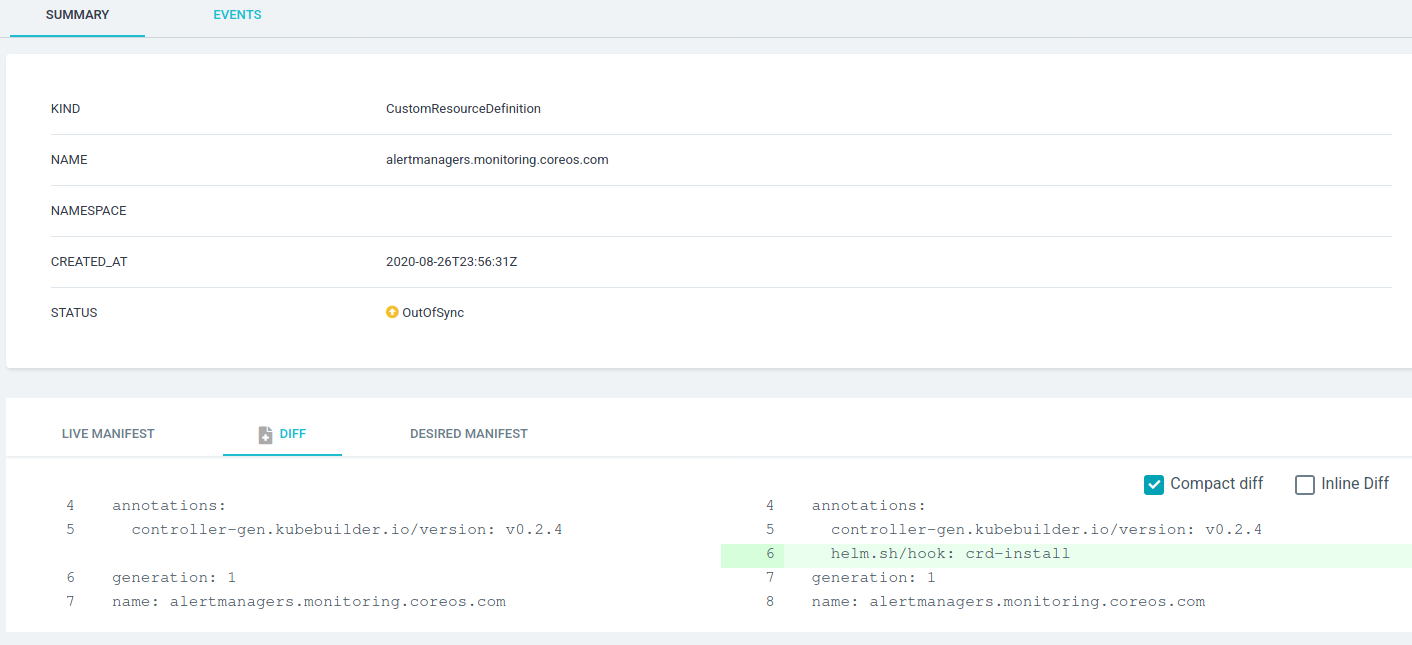

The prometheus-operator CRDs stay out-of-sync due to the missing `helm.sh/hook: crd-install` annotation.

I did some digging and found this commit https://github.com/argoproj/argo-cd/commit/275b9e194d3f091f1400f509c0786f8b443f58e4

So it seems like this was fixed at some point, but I'm not sure which part of the code handles it now.

To Reproduce

Argo CD Helm chart (2.6.0) with an image override:

```yaml
global:
  image:
    tag: latest
    imagePullPolicy: Always
```

prometheus-operator Helm chart (9.3.1): https://github.com/helm/charts/tree/master/stable/prometheus-operator

Expected behavior

CRDs are in sync.


I don't think we unset/remove that annotation. Are you saying that even after the sync, the annotation is still missing?

Yes, exactly. I checked the object in Kubernetes and it's missing there as well so it's not just a display bug.

```
% kubectl get crd alertmanagers.monitoring.coreos.com -o yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  annotations:
    controller-gen.kubebuilder.io/version: v0.2.4
  creationTimestamp: "2020-08-26T23:56:31Z"
  generation: 1
  name: alertmanagers.monitoring.coreos.com
  resourceVersion: "12158"
  selfLink: /apis/apiextensions.k8s.io/v1beta1/customresourcedefinitions/alertmanagers.monitoring.coreos.com
  uid: aed2d09a-37d2-4d38-9046-4f1a9dc1803b
spec:
  ...
```

I forgot to open firewall port 8443 for GKE as described here, which might have caused the above issues.

After a complete reinstall it seems to sync correctly now.

I'll close this for now but I'll try to reproduce sometime this weekend with a new cluster to verify.

I get the same issue with CRDs from the Istio Operator.

Argo CD version v1.7.3+b4c79cc

Might be too early to close this bug.

I have the same with the Prometheus operator, also with the latest ArgoCD version. After manually syncing it seems good until something changes in the manifest and an autosync happens.

Still seeing the issue with:

- ArgoCD v1.7.7

- kube-prometheus-stack 9.4.5

The admission webhook is disabled with `prometheusOperator.admissionWebhooks.enabled=false`, so the port 8443 firewall rule is most likely not the cause here.

I have been experiencing this issue for some time. I've just upgraded to 1.7.6 and the bug is still present.

A temporary workaround is to sync with "Apply Only" after the first regular sync.

I tried reinstalling Prometheus with ArgoCD (previously installed with Helm) on a non-private GKE cluster and had the same issue happening.

So my assumption about a firewall issue was wrong as others above pointed out.

Hey all, it turns out this is not an Argo CD issue, but rather due to the behaviour of the prometheus-operator itself.

As mentioned, when you look at the difference between what Argo CD expects (desired state), and what it finds (live state), you will see the only difference is this:

```yaml
annotations:
  helm.sh/hook: crd-install # <---- this field is missing from 'annotations' in live state
```

Argo CD expects to find the above field in annotations, but the CRD itself does not contain it. Why is that?

Well, Argo CD _is_ applying the correct version of the CRD manifest containing this field:

- If you examine the Argo CD logs, you can see that the k8s CRD resource that is being applied to k8s _does_ correctly contain this field

- Likewise, if you set up a breakpoint in Argo CD, you can see that the correct version of the CRD is applied to the cluster (but then overwritten by a subsequent sync operation)

So who is doing the overwriting of the "good" desired version of the CRD, with the "bad" live version of the CRD?

The prometheus-operator deployment itself! Before version v0.39.0 of the prometheus-operator, the operator Helm chart starts the operator with the following parameter: `--manage-crds` (source).

This parameter, `--manage-crds`, tells the operator to replace the existing CRDs (the 'good' version) with an operator-managed version (source), which causes the CRD k8s resource to diverge from what Argo CD expects.
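One way to check whether your operator Deployment is actually started with `--manage-crds` is to inspect its container arguments. Below is a minimal Python sketch, assuming you have saved the Deployment as JSON (for example with `kubectl get deployment <name> -n <namespace> -o json`). The helper `uses_manage_crds` is hypothetical, and it only looks at `args`, not `command`.

```python
def uses_manage_crds(deployment: dict) -> bool:
    """Return True if any container passes an enabled --manage-crds flag.

    Sketch only (hypothetical helper): it inspects
    spec.template.spec.containers[*].args and ignores `command`.
    """
    pod_spec = deployment.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        for arg in container.get("args", []):
            if not arg.startswith("-"):
                continue  # positional argument, not a flag
            name, sep, value = arg.lstrip("-").partition("=")
            if name != "manage-crds":
                continue
            # A bare boolean flag means true; otherwise accept the common
            # truthy spellings that Go's strconv.ParseBool understands.
            if not sep or value.lower() in ("1", "t", "true"):
                return True
    return False
```

For example, `uses_manage_crds(json.load(open("deploy.json")))` on the saved Deployment.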

To confirm whether this is the issue you are seeing, you can run `kubectl logs deployment/prometheus-kube-promet-operator -n (namespace) kube-prometheus-stack` against the operator deployment, and you will see the following in the logs:

```
level=info ts=2020-10-20T04:41:50.240287866Z caller=operator.go:294 component=thanosoperator msg="connection established" cluster-version=v1.18.6+k3s1
level=info ts=2020-10-20T04:41:50.620988059Z caller=operator.go:701 component=thanosoperator msg="CRD updated" crd=ThanosRuler
level=info ts=2020-10-20T04:41:50.659110663Z caller=operator.go:1918 component=prometheusoperator msg="CRD updated" crd=Prometheus
level=info ts=2020-10-20T04:41:50.682323905Z caller=operator.go:1918 component=prometheusoperator msg="CRD updated" crd=ServiceMonitor
level=info ts=2020-10-20T04:41:50.699229523Z caller=operator.go:1918 component=prometheusoperator msg="CRD updated" crd=PodMonitor
level=info ts=2020-10-20T04:41:50.707645936Z caller=operator.go:1918 component=prometheusoperator msg="CRD updated" crd=PrometheusRule
level=info ts=2020-10-20T04:41:51.031850543Z caller=operator.go:655 component=alertmanageroperator msg="CRD updated" crd=Alertmanager
```

(notice the 'CRD updated' message, as it updates the CRDs one by one)
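If the operator logs are long, a small filter can list which CRDs the operator rewrote. This is a minimal Python sketch, assuming the logfmt-style format shown above (`key=value` pairs, with values containing spaces wrapped in double quotes); the function name `updated_crds` is hypothetical.

```python
import re

# Matches logfmt-style pairs such as msg="CRD updated" or crd=Prometheus.
# Group 1 is the key, group 2 the raw value, group 3 the unquoted value
# (only set when the value was double-quoted).
_PAIR = re.compile(r'([\w.-]+)=("([^"]*)"|\S+)')


def updated_crds(log_text: str) -> list:
    """Return the CRD kinds reported as 'CRD updated', in log order."""
    kinds = []
    for line in log_text.splitlines():
        fields = {}
        for key, raw, quoted in _PAIR.findall(line):
            fields[key] = quoted if raw.startswith('"') else raw
        if fields.get("msg") == "CRD updated" and "crd" in fields:
            kinds.append(fields["crd"])
    return kinds
```

For example, save the output of the `kubectl logs` command above to `operator.log` and call `updated_crds(open("operator.log").read())`.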

Fortunately, it appears that this parameter is no longer used in newer versions of the prometheus-operator, so you may have better luck with those versions.

In any case, this appears not to be an Argo CD issue, and mechanisms exist in Argo CD to ignore differences like this.

(comment from https://github.com/argoproj/argo-cd/issues/3663#issuecomment-712616591 where I investigated this issue)

Thanks @jgwest for your detailed answer!

> In any case, this appears not to be an Argo CD issue, and mechanisms exist in Argo CD to ignore differences like this.

I tried to add this, but still have a diff:

```yaml
ignoreDifferences:
- group: apiextensions.k8s.io
  kind: CustomResourceDefinition
  jsonPointers:
  - /metadata/annotations/
```

Any idea?

Hi @nicolas-geniteau, this worked for me:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: jgw-prometheus
spec:
  destination:
    namespace: jgw
    server: https://kubernetes.default.svc
  project: default
  source:
    path: helm-prometheus-operator
    repoURL: https://github.com/jgwest/argocd-example-apps.git
  ignoreDifferences:
  - group: apiextensions.k8s.io
    kind: CustomResourceDefinition
    jsonPointers:
    - /metadata/annotations
```

I think the main difference is that I used `/metadata/annotations` rather than `/metadata/annotations/`.
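The trailing slash plausibly matters because `jsonPointers` entries are JSON Pointers (RFC 6901): in `/metadata/annotations/` the final empty segment refers to an annotation whose key is the empty string, not to the `annotations` map itself. The Python sketch below is a deliberately simplified model of "drop the ignored fields from both sides, then compare", not Argo CD's actual diffing code; `remove_pointer`, `differs`, and the trimmed `desired`/`live` manifests are illustrative assumptions.

```python
import copy


def remove_pointer(obj: dict, pointer: str) -> dict:
    """Return a copy of obj with the field at a JSON Pointer removed.

    Simplified: only walks nested dicts and silently ignores missing paths.
    """
    result = copy.deepcopy(obj)
    # RFC 6901: split on "/", drop the leading empty token, unescape ~1 and ~0.
    tokens = [t.replace("~1", "/").replace("~0", "~") for t in pointer.split("/")[1:]]
    parent = result
    for token in tokens[:-1]:
        parent = parent.get(token)
        if not isinstance(parent, dict):
            return result  # path does not exist, nothing to remove
    if tokens:
        parent.pop(tokens[-1], None)
    return result


def differs(desired: dict, live: dict, ignore: list) -> bool:
    """Compare two manifests after dropping every ignored pointer from both."""
    for pointer in ignore:
        desired = remove_pointer(desired, pointer)
        live = remove_pointer(live, pointer)
    return desired != live


# Trimmed, illustrative manifests: live state lacks the Helm hook annotation.
desired = {"kind": "CustomResourceDefinition",
           "metadata": {"name": "alertmanagers.monitoring.coreos.com",
                        "annotations": {"helm.sh/hook": "crd-install"}}}
live = {"kind": "CustomResourceDefinition",
        "metadata": {"name": "alertmanagers.monitoring.coreos.com",
                     "annotations": {}}}

print(differs(desired, live, ["/metadata/annotations"]))   # False
print(differs(desired, live, ["/metadata/annotations/"]))  # True: only key "" is ignored
```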

Hi @wrdls, are you happy with the explanation and can close this issue?

@jgwest Sorry for my very late response.

I will try this! Thanks!

Hi @wrdls, are you happy with the explanation and can close this issue?

Yeah sure. Thanks for investigating.
