Argo-cd: Application stays OutOfSync but k8s objects are created

Checklist:

- [X] I've searched in the docs and FAQ for my answer: http://bit.ly/argocd-faq.

- [ ] I've included steps to reproduce the bug.

- [X] I've pasted the output of `argocd version`.

Describe the bug

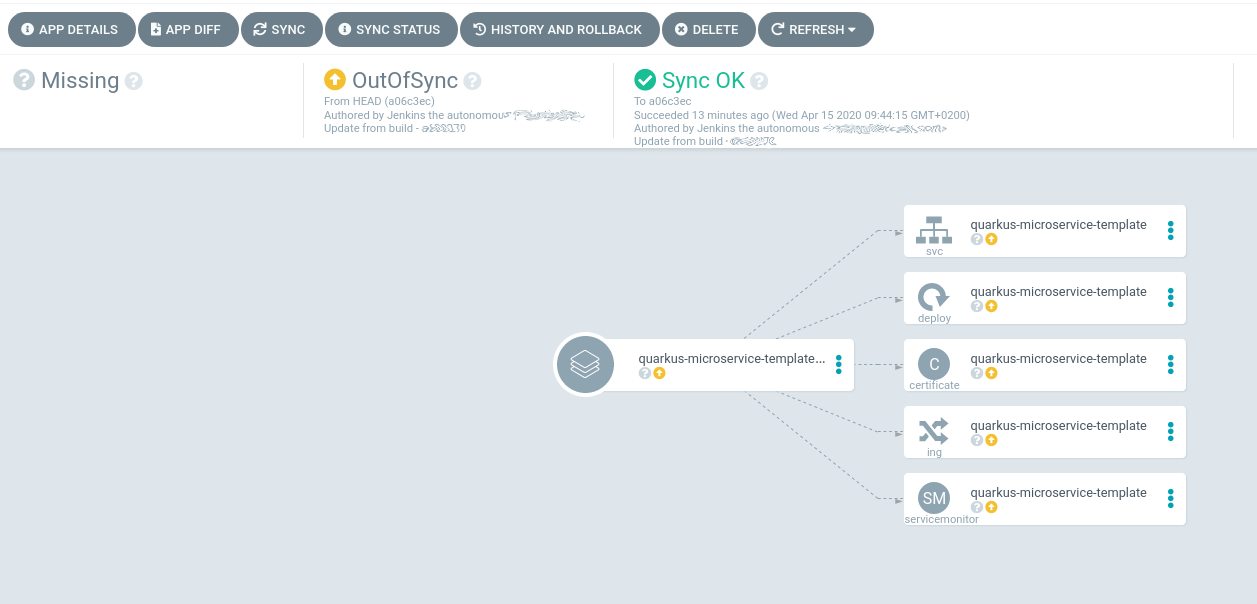

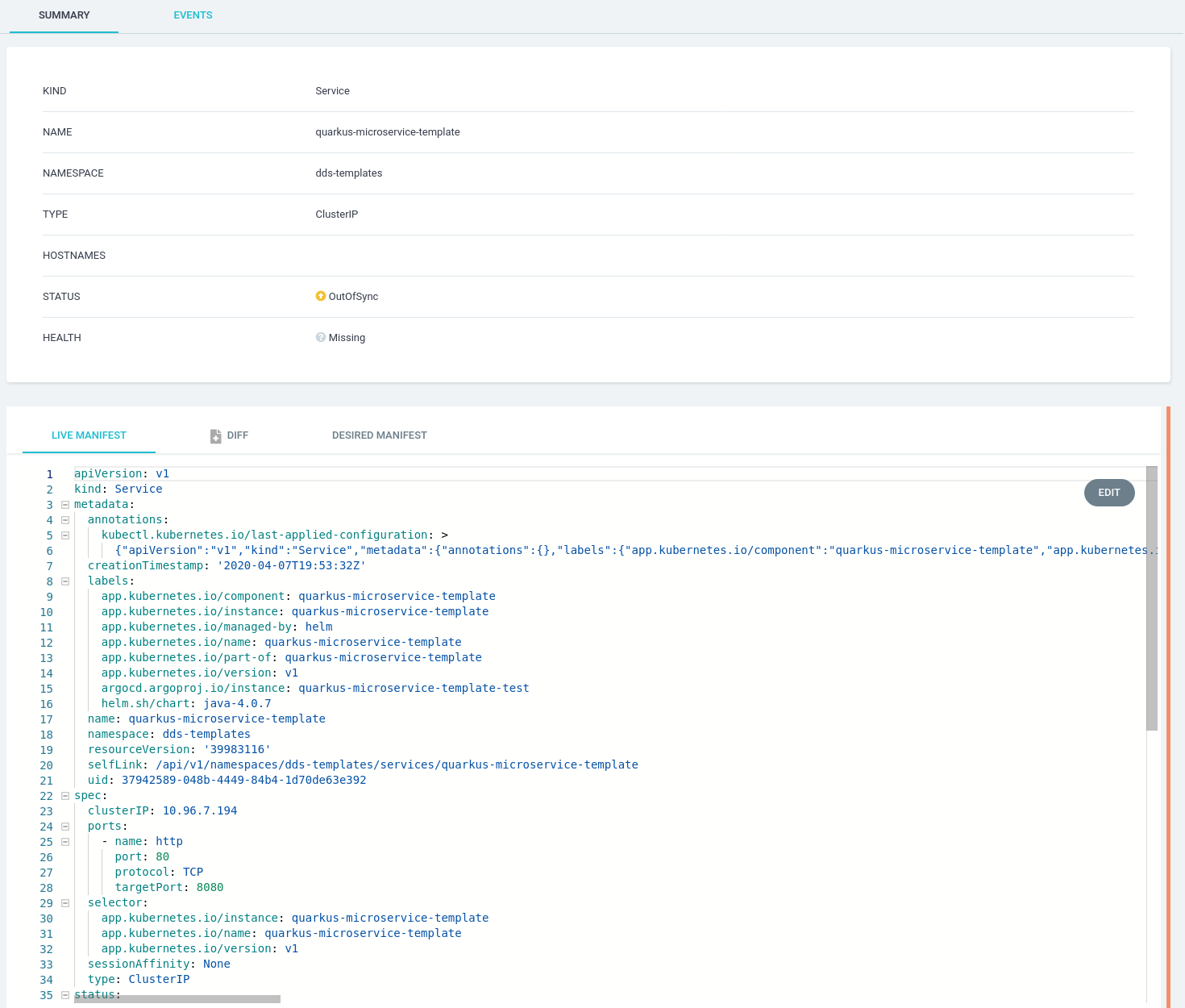

The Application stays OutOfSync, but the k8s objects are created and labeled correctly.

To Reproduce

N/A

Expected behavior

The Argo CD Application should either report an error or become Synced.

Screenshots

Version

    argocd: v1.4.0+2d02948
      BuildDate: 2020-01-18T05:53:00Z
      GitCommit: 2d029488aba6e5ad48b2a756bfcf43d5cb7abcee
      GitTreeState: clean
      GoVersion: go1.12.6
      Compiler: gc
      Platform: linux/amd64
    argocd-server: v1.5.1+8a3b36b
      BuildDate: 2020-04-06T15:58:54Z
      GitCommit: 8a3b36bd28fe278f7407b9a8797c79ad859d1acd
      GitTreeState: clean
      GoVersion: go1.14
      Compiler: gc
      Platform: linux/amd64
      Ksonnet Version: v0.13.1
      Kustomize Version: Version: {Version:kustomize/v3.2.1 GitCommit:d89b448c745937f0cf1936162f26a5aac688f840 BuildDate:2019-09-27T00:10:52Z GoOs:linux GoArch:amd64}
      Helm Version: version.BuildInfo{Version:"v3.1.1", GitCommit:"afe70585407b420d0097d07b21c47dc511525ac8", GitTreeState:"clean", GoVersion:"go1.13.8"}
      Kubectl Version: v1.14.0

Logs

    time="2020-04-16T06:14:26Z" level=info msg="Refreshing app status (comparison expired. reconciledAt: 2020-04-16 06:11:25 +0000 UTC, expiry: 3m0s), level (2)" application=argocd
    time="2020-04-16T06:14:26Z" level=info msg="Comparing app state (cluster: https://kubernetes.default.svc, namespace: argocd)" application=argocd
    time="2020-04-16T06:14:26Z" level=info msg="getRepoObjs stats" application=quarkus-microservice-template-test build_options_ms=0 helm_ms=0 manifests_ms=1432 plugins_ms=0 repo_ms=0 time_ms=1432 unmarshal_ms=0 version_ms=0
    time="2020-04-16T06:14:26Z" level=info msg="Skipping auto-sync: most recent sync already to a06c3ec5b79556db5731f9cef98738a4bae90994" application=quarkus-microservice-template-test
    time="2020-04-16T06:14:26Z" level=info msg="Update successful" application=quarkus-microservice-template-test
    time="2020-04-16T06:14:26Z" level=info msg="Reconciliation completed" application=quarkus-microservice-template-test dedup_ms=0 dest-namespace=dds-templates dest-server="https://eae-test-e6b9193c.hcp.westeurope.azmk8s.io:443" diff_ms=1 fields.level=2 git_ms=1432 health_ms=0 live_ms=0 settings_ms=0 sync_ms=0 time_ms=1463

Comments

Hello @ekarlso, is the target application cluster connected using namespace isolation mode? v1.4.0 had a bug related to namespace isolation mode that caused similar symptoms. It was fixed in v1.4.2.

Indeed it was! :(

    https://xhcp.westeurope.azmk8s.io:443 (1 namespaces) aks-x-test 1.15 Successful

Maybe you should surface an error or some kind of warning for that? ;)

I believe I'm seeing this issue as well, or something similar, in v1.5.3.

I'm isolating to a specific list of namespaces:

    apiVersion: v1
    kind: Secret
    metadata:
      name: in-cluster
      labels:
        argocd.argoproj.io/secret-type: cluster
    stringData:
      name: in-cluster
      server: https://kubernetes.default.svc
      namespaces: "argocd,namespace-1,namespace-2,namespace-3"
      config: "{}"
    type: Opaque

I have selfHeal and prune enabled for each application (one app per namespace):

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: namespace-1
    spec:
      destination:
        namespace: namespace-1
        server: https://kubernetes.default.svc
      project: default
      source:
        path: cluster/namespace-1
        repoURL: [email protected]:example/kubernetes.git
        targetRevision: HEAD
      syncPolicy:
        automated:
          selfHeal: true
          prune: true

The changes I make are applied, but the UI often shows the updated objects as OutOfSync even when I can see that they have been applied. At first I thought this had to do with Kustomize-generated resources, but it also happens with the following generator options, and on resources that aren't generated at all:

    generatorOptions:
      annotations:
        argocd.argoproj.io/compare-options: IgnoreExtraneous
        argocd.argoproj.io/sync-options: Prune=false
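
For context, Kustomize copies `generatorOptions.annotations` onto every ConfigMap and Secret produced by its generators, so with the options above a generated ConfigMap would be emitted roughly as shown here. The name, hash suffix, and data entry are hypothetical placeholders; resources that are not generated do not pick up these annotations.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  # Hypothetical name; Kustomize appends a content hash suffix by default.
  name: app-config-7g2k5f8m9t
  annotations:
    # Copied verbatim from generatorOptions.annotations by Kustomize.
    argocd.argoproj.io/compare-options: IgnoreExtraneous
    argocd.argoproj.io/sync-options: Prune=false
data:
  # Hypothetical key/value, for illustration only.
  LOG_LEVEL: info
```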

Restarting the argocd-application-controller Deployment causes the status to change to Synced.

The Argo CD service accounts are not bound to any cluster roles, but they have full permissions in each of the namespaces Argo CD manages. If I understand namespace scoping correctly, this should be fine, but I thought I'd mention it.
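
One way to grant namespace-scoped permissions like these without any cluster role binding is a Role plus a RoleBinding in each managed namespace. This is a sketch under assumptions: the `argocd-full-access` name is hypothetical, the wildcard rules are an interpretation of "full permissions", and `argocd-application-controller` is the controller's service account name in the standard install manifests. The same pair would be repeated per namespace, and for any other Argo CD service account (for example `argocd-server`) that needs access.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: argocd-full-access   # hypothetical name
  namespace: namespace-1
rules:
  # Assumption: "full permissions" means every verb on every resource in this namespace.
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: argocd-full-access   # hypothetical name
  namespace: namespace-1
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: argocd-full-access
subjects:
  # Service account of the Argo CD application controller, living in the argocd namespace.
  - kind: ServiceAccount
    name: argocd-application-controller
    namespace: argocd
```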

This seems related to the number of different resource types in the cluster and the number of applications/namespaces.

The issue is resolved by:

- Reducing the number of resource types in the cluster by deleting CRDs

- Defining a smaller list of `resource.inclusions` in `argocd-cm`. In my testing, including 20 namespaced types out of a possible 50 seems to have resolved the issue for me.
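
A minimal sketch of what a narrowed `resource.inclusions` setting in `argocd-cm` might look like. The API groups and kinds listed are illustrative assumptions, not the 20 types from the comment above. The entry fields (`apiGroups`, `kinds`, `clusters`) follow the format Argo CD documents for resource inclusions/exclusions, and the cluster URL is the in-cluster server used elsewhere in this thread.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-cm
  namespace: argocd
  labels:
    app.kubernetes.io/name: argocd-cm
    app.kubernetes.io/part-of: argocd
data:
  # Only resources matching these entries are watched; all other types are ignored.
  resource.inclusions: |
    - apiGroups:
      - "apps"
      kinds:
      - Deployment
      - StatefulSet
      clusters:
      - https://kubernetes.default.svc
    - apiGroups:
      - ""
      kinds:
      - ConfigMap
      - Secret
      - Service
      clusters:
      - https://kubernetes.default.svc
```

Fewer watched types means fewer watches and less cached state per cluster, which is consistent with the observation that trimming the list made the stale OutOfSync status go away.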

Here is a testcase that reproduces the issue:

https://github.com/ribbybibby/argocd-testcase

We were able to reproduce this in v1.5, but not in v1.6+. We believe this to be fixed.
