Checklist:

- [X] I've searched in the docs and FAQ for my answer: http://bit.ly/argocd-faq.

- [X] I've included steps to reproduce the bug.

- [X] I've pasted the output of `argocd version`.

Describe the bug

I tried to deploy the nginx chart using the app of apps pattern, specifying a path to the chart and to values.yaml. The chart is deployed correctly and the values.yaml is detected correctly (I can see the values in the UI), but they have no effect on the deployed manifests. When running `helm install stable/nginx-ingress --debug --dry-run -f values.yaml`, the manifests are generated correctly.

To Reproduce

This is my app definition:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: nginx
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  destination:
    namespace: default
    server: {{ .Values.spec.destination.server }}
  project: default
  source:
    path: nginx
    repoURL: <>
    targetRevision: HEAD
    helm:
      valueFiles:
        - values.yaml

This is the values.yaml:

controller:

service:

annotations:

service.beta.kubernetes.io/aws-load-balancer-internal: "0.0.0.0/0"

service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags: "<>"

externalTrafficPolicy: "Cluster"

replicaCount: 2

resources:

requests:

cpu: 100m

memory: "512Mi"

limits:

cpu: 200m

memory: "1024Mi"

livenessProbe:

initialDelaySeconds: 30

timeoutSeconds: 10

readinessProbe:

initialDelaySeconds: 30

timeoutSeconds: 10

image:

repository: quay.io/kubernetes-ingress-controller/nginx-ingress-controller

tag: "0.24.1"

pullPolicy: IfNotPresent

replicaCount: 3

extraArgs:

default-ssl-certificate: default/mysoluto.com

stats:

enabled: true

resources:

requests:

cpu: 600m

memory: "1.5G"

limits:

cpu: "1"

memory: "2G"

config:

http2-max-field-size: "8k"

large-client-header-buffers: "4 12k"

proxy-buffer-size: "32k"

vts-status-zone-size: "20m"

disable-access-log: "false"

ssl-ciphers: "ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS;"

hide-headers: "Server,X-Powered-By,X-AspNet-Version,X-AspNet-Mvc-Version,x-envoy-upstream-service-time,x-envoy-decorator-operation"

server-tokens: "False"

ssl-protocols: "TLSv1.3 TLSv1.2"

log-format-escape-json: "true"

log-format-upstream: '{ "type": "access_logs", "ssl_protocl": "$ssl_protocol", "time": "$time_iso8601", "remote_addr": "$proxy_protocol_addr","x-forward-for": "$proxy_add_x_forwarded_for", "request_id": "$req_id", "remote_user":"$remote_user", "bytes_sent": $bytes_sent, "request_time": $request_time, "status":"$status", "vhost": "$host", "request_proto": "$server_protocol", "path": "$uri","request_query": "$args", "request_length": $request_length, "duration": $request_time,"method": "$request_method", "http_referrer": "$http_referer", "http_user_agent":"$http_user_agent", "upstream": "$upstream_addr", "upstream_status": "$upstream_status", "upstream_latency": "$upstream_response_time", "ingress": "$ingress_name", "namespace": "$namespace" }'

http-snippet: |

more_set_headers "Server: "; map $geoip_city_continent_code $is_eu_visit { default 0; EU 1; }

limit-conn-status-code: "429"

worker-shutdown-timeout: 240s

enable-opentracing: "true"

datadog-collector-host: $HOST_IP

extraEnvs:

- name: HOST_IP

valueFrom:

fieldRef:

fieldPath: status.hostIP

metrics:

enabled: true

service:

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

autoscaling:

enabled: true

minReplicas: 2

maxReplicas: 15

targetCPUUtilizationPercentage: 80

targetMemoryUtilizationPercentage: 80

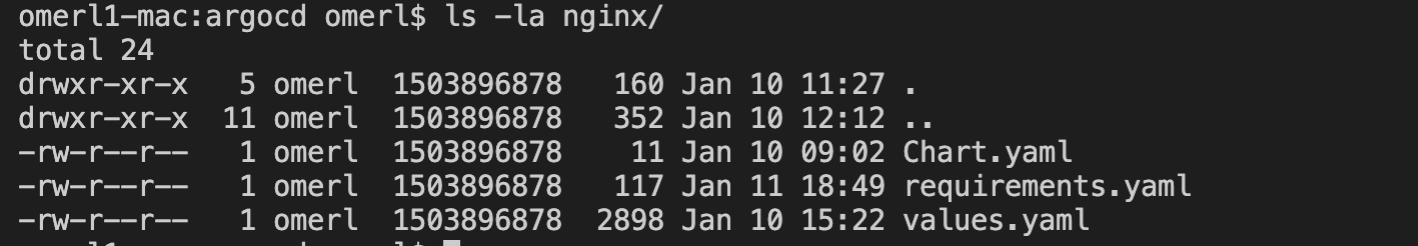

nginx folder structure:

argocd app get nginx:

Name: nginx

Project: default

Server: https://kubernetes.default.svc

Namespace: default

URL: http://localhost:8080/applications/nginx

Repo: <>

Target: HEAD

Path: nginx

Helm Values: values.yaml

SyncWindow: Sync Allowed

Sync Policy:

Sync Status: Synced to HEAD (d584844)

Health Status: Healthy

Operation: Sync

Sync Revision: d584844cea146d82fcee552e7bedde638ae80a8b

Phase: Succeeded

Start: 2020-01-11 19:01:19 +0200 IST

Finished: 2020-01-11 19:01:21 +0200 IST

Duration: 2s

Message: successfully synced (all tasks run)

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

ServiceAccount default nginx-nginx-ingress-backend Synced serviceaccount/nginx-nginx-ingress-backend unchanged

ServiceAccount default nginx-nginx-ingress Synced serviceaccount/nginx-nginx-ingress unchanged

rbac.authorization.k8s.io ClusterRole default nginx-nginx-ingress Running Synced clusterrole.rbac.authorization.k8s.io/nginx-nginx-ingress reconciled. clusterrole.rbac.authorization.k8s.io/nginx-nginx-ingress unchanged

rbac.authorization.k8s.io ClusterRoleBinding default nginx-nginx-ingress Running Synced clusterrolebinding.rbac.authorization.k8s.io/nginx-nginx-ingress reconciled. clusterrolebinding.rbac.authorization.k8s.io/nginx-nginx-ingress unchanged

rbac.authorization.k8s.io Role default nginx-nginx-ingress Synced role.rbac.authorization.k8s.io/nginx-nginx-ingress reconciled. role.rbac.authorization.k8s.io/nginx-nginx-ingress unchanged

rbac.authorization.k8s.io RoleBinding default nginx-nginx-ingress Synced rolebinding.rbac.authorization.k8s.io/nginx-nginx-ingress reconciled. rolebinding.rbac.authorization.k8s.io/nginx-nginx-ingress unchanged

Service default nginx-nginx-ingress-controller Synced Healthy service/nginx-nginx-ingress-controller unchanged

Service default nginx-nginx-ingress-default-backend Synced Healthy service/nginx-nginx-ingress-default-backend unchanged

apps Deployment default nginx-nginx-ingress-default-backend Synced Healthy deployment.apps/nginx-nginx-ingress-default-backend configured

apps Deployment default nginx-nginx-ingress-controller Synced Healthy deployment.apps/nginx-nginx-ingress-controller configured

rbac.authorization.k8s.io ClusterRole nginx-nginx-ingress Synced

rbac.authorization.k8s.io ClusterRoleBinding nginx-nginx-ingress Synced

Notice that no ConfigMap is created, although one should be. Also, when inspecting the Service, it doesn't have the annotations specified in the values.yaml.

Expected behavior

Nginx deployed as specified in the values.yaml

Screenshots

Version

argocd: v1.3.6+89be1c9

BuildDate: 2019-12-10T22:48:19Z

GitCommit: 89be1c9ce6db0f727c81277c1cfdfb1e385bf248

GitTreeState: clean

GoVersion: go1.12.6

Compiler: gc

Platform: darwin/amd64

argocd-server: v1.3.6+89be1c9

BuildDate: 2019-12-10T22:47:48Z

GitCommit: 89be1c9ce6db0f727c81277c1cfdfb1e385bf248

GitTreeState: clean

GoVersion: go1.12.6

Compiler: gc

Platform: linux/amd64

Ksonnet Version: v0.13.1

Kustomize Version: Version: {Version:kustomize/v3.2.1 GitCommit:d89b448c745937f0cf1936162f26a5aac688f840 BuildDate:2019-09-27T00:10:52Z GoOs:linux GoArch:amd64}

Helm Version: v2.15.2

Kubectl Version: v1.14.0


All 2 comments

Are you using the helm-dependency approach where you have a requirements.yaml with something like:

dependencies:
  - name: nginx-ingress
    version: 1.14.0
    repository: https://kubernetes-charts.storage.googleapis.com

If so, then you need to move your values beneath nginx-ingress so they get used for the correct dependency:

nginx-ingress:
  controller:
    kind: DaemonSet
    resources:
      requests:
        memory: 500Mi
        cpu: 100m
      limits:
        memory: 500Mi
        cpu: 100m

Example here: https://github.com/argoproj/argocd-example-apps/tree/master/helm-dependency
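Applied to the values.yaml from the question, the change would look roughly like the sketch below. It assumes the chart in the nginx path declares the dependency under the name nginx-ingress (or an alias with that name), and that the service annotations and externalTrafficPolicy sit under controller.service as in the stable/nginx-ingress chart. Only a few of the original keys are shown; the rest would move the same way.

```yaml
# values.yaml of the wrapper chart in the nginx/ path (partial sketch)
nginx-ingress:   # assumed to match the dependency name (or alias) in requirements.yaml
  controller:
    service:
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-internal: "0.0.0.0/0"
      externalTrafficPolicy: "Cluster"
    config:
      proxy-buffer-size: "32k"
      ssl-protocols: "TLSv1.3 TLSv1.2"
```

This would also explain why the local helm install stable/nginx-ingress -f values.yaml rendered correctly: there the values go straight to the nginx-ingress chart. When the chart is pulled in as a dependency, top-level keys are read by the parent chart, and the subchart only receives what is nested under its own name.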

Yes, this solves my issue- thanks!
