Apollo: Possibilities of VLP-16 on the roof

Hi Apollo team and developers

The VLP-16 is a low-cost Lidar for ADAS. Our team is currently exploring the possibility of mounting a VLP-16 on top of the car.

Our car is a two-seat EV with the same shape as the Mercedes-Benz Smart Fortwo. With this type of shape, is it possible to use a single Lidar on top, the way Apollo uses the HDL-64 on an MKZ?

Have any other teams tried this solution or thought about it? Is it doable? Kindly comment below with your opinion of this idea or, if you have already done it, how well it performed.

Best regards,

John

All 4 comments

@JonathanJones92 we have not experimented with using the VLP-16 LiDAR on top of our MKZ. It would be great if you were able to mount the LiDAR and test it and let us know if it worked for you. It is definitely doable. What are the other sensors you are planning to use on this vehicle?

If you have any additional questions, please let us know.

@natashadsouza Hi Natasha,

We have tried the "third party perception" on our model A002 car. Using the third-party perception architecture, we added four corner radars and one front radar, which achieved acceptable performance.

Now we want to explore the possibilities of Lidar, but we currently have only one VLP-16 on hand. However, considering the size of our car, the VLP-16 may work well on our model A002.

Before we start rolling out, please allow me to ask a few questions of an experienced Apollo developer like you:

Is there any installation specification or suggestion for the VLP-16, or for 16-channel Lidars in general, when mounted on top of a car? What height should the Lidar be at, and should it be tilted at an angle or mounted horizontally?

How can I verify that there is enough point cloud data for the Apollo Lidar algorithm?

How do I calibrate the Lidar?

If I choose a radar other than the Conti_Radar or B01HC, which are recommended by the Apollo platform, how can I calibrate it? I can't get enough info from the docs.

Thank you for your help.

Best regards,

John

@JonathanJones92 We tried out the VLP-16 at QNX. I recommend mounting it horizontally on your car, driving around while recording with the pcl recorder, and then using the offline lidar visualization tool to examine the data. Earlier this year I captured data while driving for 20 minutes or so through a dense area with lots of moving cars, parked cars, pedestrians, etc. Even without a model trained for the VLP-16 (the standard model that comes with Apollo is for the HDL-64), it was pretty good at identifying cars. Of course, at longer distances and for smaller objects the point cloud is much sparser, so detection accuracy should be lower, as you suspect.
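To get a rough feel for how sparse the returns become with distance, and how mounting height and tilt affect that, here is a minimal geometric sketch. It relies only on the VLP-16's published beam layout (16 lasers spaced 2° apart, from -15° to +15°). Everything else is an assumption made for illustration: flat ground, a target modelled as a vertical wall of a given height, and the hypothetical function and parameter names. It is not part of Apollo.

```python
import math

# VLP-16 beam layout: 16 lasers, 2 degrees apart, from -15 to +15 degrees.
BEAM_ELEVATIONS_DEG = [-15 + 2 * i for i in range(16)]


def beams_on_target(mount_height_m, distance_m, target_height_m, pitch_deg=0.0):
    """Count beams that hit a vertical target standing on flat ground.

    Assumptions (illustrative only): the ground is flat, the target is a
    wall from 0 to target_height_m, and pitch_deg tilts every beam by the
    same amount (positive values tilt the beams upward).
    """
    hits = 0
    for elevation in BEAM_ELEVATIONS_DEG:
        angle = math.radians(elevation + pitch_deg)
        # Height at which this beam crosses the target's plane.
        z = mount_height_m + distance_m * math.tan(angle)
        if 0.0 <= z <= target_height_m:
            hits += 1
    return hits


def vertical_gap_m(distance_m):
    """Approximate vertical spacing between adjacent beams near the horizon."""
    return distance_m * math.tan(math.radians(2.0))
```

With an assumed mounting height of 1.6 m and a 1.5 m tall target, `beams_on_target(1.6, 10, 1.5)` counts 5 beams on the target at 10 m but only 1 at 40 m, which matches the intuition that small or distant objects get very few returns. Varying `mount_height_m` and `pitch_deg` gives a quick way to compare mounting options before drilling holes.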

To capture PCL data, you need to run the extrinsics transform broadcaster, launch `export_pcd_online_16.launch` with `roslaunch`, then generate the pose file, then run the offline visualizer tool.

I generally followed these instructions:

https://github.com/ApolloAuto/apollo/blob/master/docs/howto/how_to_run_offline_perception_visualizer.md

I had to modify a few bits of code and scripts that were hardcoded for the HDL-64 to use the VLP-16, but not much. Doing this gave a really good idea of what the VLP-16 sees, through the offline visualizer tool.
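One quick sanity check on a capture, before opening the visualizer, is to count the points in each exported PCD file. The sketch below reads only the `POINTS` field from the standard PCD header, so it works for both ASCII and binary files. It assumes the export step wrote one `.pcd` file per Lidar frame into a single directory; the function names are hypothetical, and what counts as "enough" points for Apollo's algorithm is not something this check can decide.

```python
from pathlib import Path


def pcd_point_count(path):
    """Return the POINTS value from a PCD file header."""
    with open(path, "rb") as f:
        for raw in f:
            line = raw.decode("ascii", errors="ignore").strip()
            if line.startswith("POINTS"):
                return int(line.split()[1])
            if line.startswith("DATA"):
                break  # header ended without a POINTS field
    raise ValueError(f"no POINTS field found in {path}")


def summarize_capture(pcd_dir):
    """Summarize per-frame point counts for all .pcd files in a directory.

    Assumes one .pcd file per frame (an assumption about the export step).
    Returns None if the directory contains no .pcd files.
    """
    counts = [pcd_point_count(p) for p in sorted(Path(pcd_dir).glob("*.pcd"))]
    if not counts:
        return None
    return {
        "frames": len(counts),
        "min": min(counts),
        "max": max(counts),
        "mean": sum(counts) / len(counts),
    }
```

As a rough reference, the VLP-16 is specified at about 300,000 points per second in single-return mode, so a full rotation at 10 Hz holds on the order of 30,000 points. Frames far below that may point to a driver, network, or export problem rather than a sparse scene.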

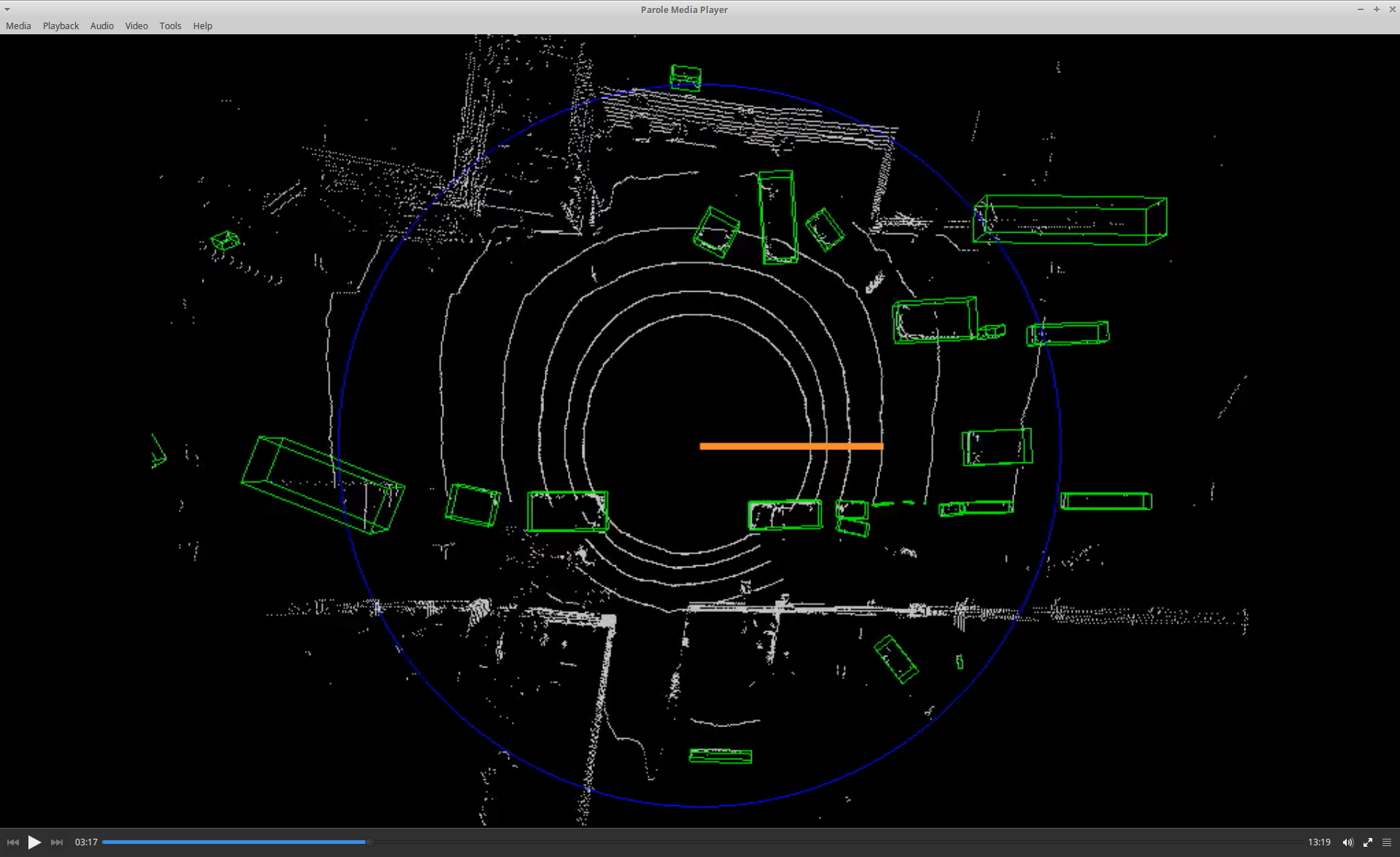

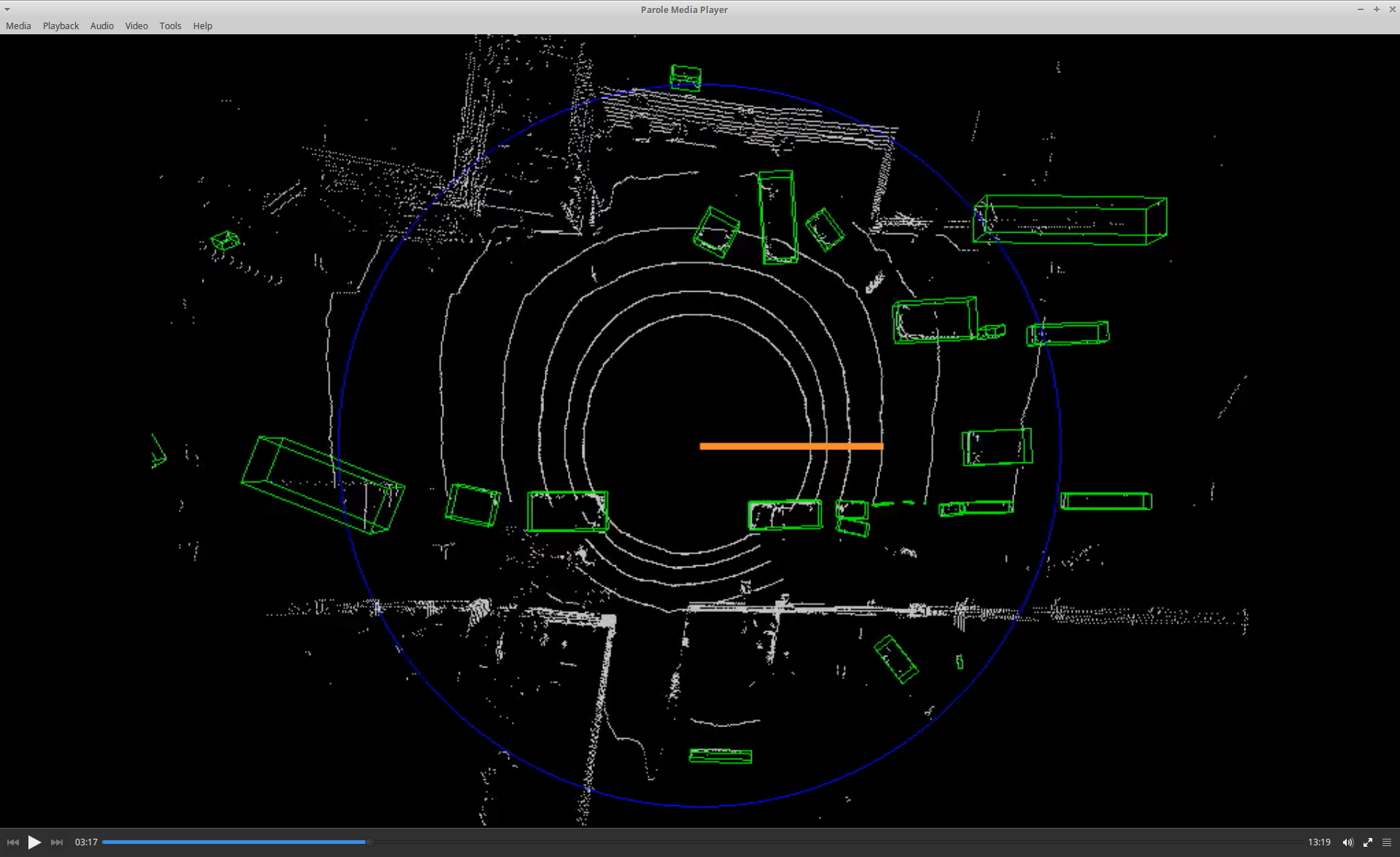

For example, see below screenshot while running the offline visualizer... you can see the vehicle ahead was identified, as well as most parked cars on the right side, and on the left as well.

Hope this helps to give you some more ideas.

Cheers,

David van Geyn (QNX)

@dvangeyn Thank you so much for your help. I will definitely try the offline_vis with the VLP-16.
