Angular.js: Small Memory Leak in $rootScope.$on

I'm submitting a ...

- [x] bug report

- [ ] feature request

- [ ] other (Please do not submit support requests here (see above))

Current behavior:

Registering a new handler with $rootScope.$on pushes the handler to the end of a list. Removing the handler only nulls out the array index that contains the handler. The code never resizes the array to remove the null entries, leading to an array that grows without bounds.

Given:

- An event

'e'such that there is always 1 registered listener on the event (preventing the handler list from being removed).

- Note that this is easily achievable even if all handlers are regularly removed. All it takes is another call to

$on('e', handler)to run before all handlers are de-registered.

- Note that this is easily achievable even if all handlers are regularly removed. All it takes is another call to

- An application that regularly calls

$rootScope.$on('e', handler)` and responsibly de-registers the handler over the course of a session

...then the handler list for 'e' will grow by 1 element every time $rootScope.$on('e', handler) is called. The end result is an array that looks like this: [null, null, null, ... handler, handler].

I'm observing this happen in an app with the $translateChangeSuccess event. The array grows to about 40 null entries over 8 navigations to different views/pages.

The leak is small, but avoidable.

Expected / new behavior:

The handler list does not grow unboundedly over the course of a session. Handler positions are either re-used (as in a free list-based memory allocator), or handlers are registered in an object where handler positions are delete-ed once they are de-registered.

Minimal reproduction of the problem with instructions:

See above description.

Angular version: Observed in 1.4.1, but code still present in 1.6.

Browser:

All

Anything else:

See above for suggestions on how to fix.

Note that I am not heavily experienced with AngularJS, but I am heavily experienced in JavaScript. Hence, I am not able to provide you with a succinct reproduction of the issue, but the description and a quick glance of the code should confirm the problem.

All 22 comments

The array does get resized the next time the event occurs. Is that not happening in your case?

No, it is not. The event $translateChangeSuccess occurs when the user changes their language, which does not happen in most sessions.

(Sorry for brevity -- I am on a phone right now. I found this happening in the open source Loomio application.)

I see. With angular-translate the events are added to the $rootScope often, removed often (on page-change?), but the actual event does not fire often. That does seem like a potential issue...

The reason (I assume) that the listener-removal only sets them to null is because that array might be getting looped over at the same time, so modifying it will cause the loop to potentially skip entries. Will have to look into potential solutions and see how easy it is...

Note that scope watchers solve this by storing the loop index on watchers.$$digestWatchIndex, and watcher deregistration may modify the index.

However I think this only works because you can not have nested calls to $digest. Where events could potentially fire more events and clobber that shared index.

I wonder if deleting the array entry is any better then setting it to null?

I wonder if deleting the array entry is any better then setting it to null?

I was wondering the same. @jvilk, have you seen a meassurable impact on memory allocation due to the nulls?

An (possibly easy) way out could be defragmanting the array in the post-digest phase, when there should be no emitting/broadcasting taking place.

Yeah I think doing it post-digest would work and be fairly easy. But then removing a listener would cause a post-digest event, and causing a post-digest event might mean causing a digest. Is that ok? Would it be worth it?

I don't think $$postDigest() invokes another digest.

That's true, it just might not get run until the next digest which should be fine...

@gkalpak After about 5 navigations to different views (Dashboard -> Group Page -> Thread Page -> Group Page -> Thread Page), the array grows to 215 entries. All but 18 are null.

Assuming a constant rate of growth, that's 43 fresh array entries per navigation. Assuming 32-bit entries in the array for object references / null entries, that's 172 bytes per navigation. If the JavaScript engine doesn't do that optimization and allocates a full 64 bits for every entry (since any could plausibly hold a 64-bit double), that's 344 bytes per navigation.

It's not an urgent memory leak, but it seemed warranted to bring up to all of you and confirm that it's not due to an incorrect usage of an API.

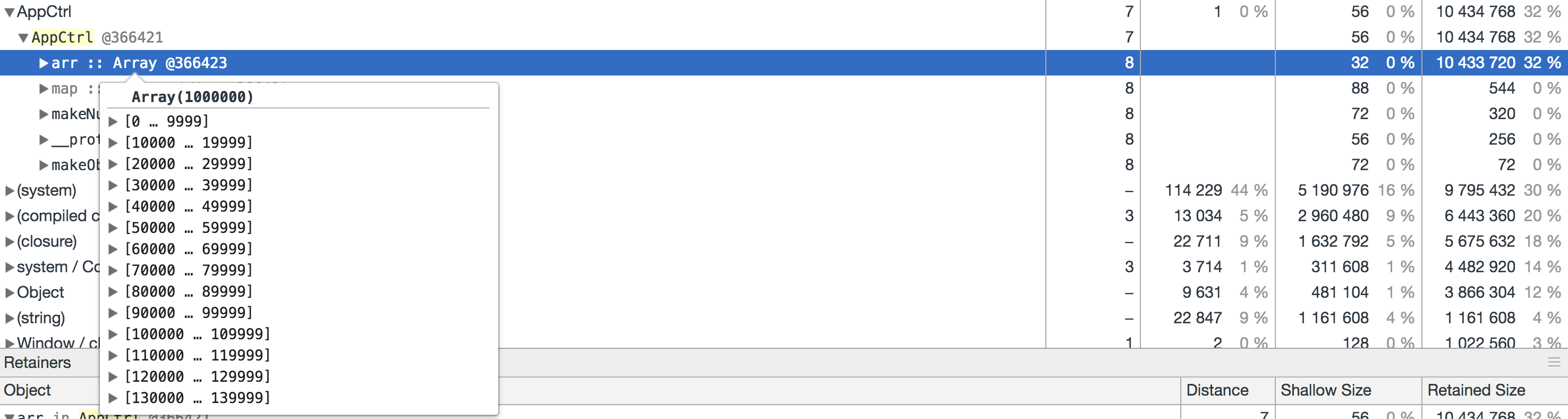

I would expect VMs to optimize storing null values. After a quick test here, it seems that 1000000 nulls only take 10KB (and that might even be without GC), so the memory leak is really a non-issue in practice.

But I do agree that we should fix this if the fix is simple enough. One idea is using $$postDigest() (when it is guaranteed that listeners are not being looped over). Another idea is to set a flag when $emiting/$broadcasting (since that is the only occasion when listeners are looped over) and skip removing the listener when the flag is set (potentially scheduling the defragmentation for later).

If anyone wants to take a stub at it, please do :smiley:

After a quck test here, it seems that 1000000 nulls only take 10KB

I think you mean 10MB? JITs don't typically optimize the storage of individual array entries AFAIK. It's either an optimization on the entire thing (e.g. 32 bits per element because it only stores integers), or nothing. Of course, I'm happy to be proven wrong!

10MB would be consistent with 8 bytes (64 bits) per NULL, plus extra room for the size class. (Since arrays in JS are resizeable, the JS engine likely increases the size of the array in powers of 2 to anticipate further elements.)

(1000000 nulls * 8 bytes per null) / 1024 bytes per kilobyte / 1024 kilobytes per megabyte = ~7.62 MB

@jvilk if you have a test up and running... what if you delete half of those array entries? I'm curious if deleting array entries is any different then assigning null...

@jbedard Okay, I did a bit of research.

- If you create an array of 1000000

nulls, thendelete arr[i]500000 entries, then the size appears to not change -- even after a forced GC. - If you use an object as a hash map, add 1000000

nullsas numeric properties, and delete entries withdelete obj[i];, the size also does not appear to change -- even after a forced GC.

- It makes sense to me that this matches the behavior of the array; once you start deleting random entries from an array, V8's JIT should treat it more like an object used as a hash map. But it is surprising to me that neither resize / shrink after the deletions.

- Best guess: V8's JIT performs a special allocation for huge objects like this one, and never reduces its size. (Unless maybe it falls below the threshold for the special allocation -- maybe I should try deleting more elements?)

- If you add

nulls to an array and delete random array elements to keep the array at or below 5 in size, the array will still be very small even if you go through 1000000 distinct indices (< 1KB).

- This would likely reflect the scenario seen in this bug. The application would perform a mix of deletions and additions to keep the array at a small size.

Here's the modified CodePen. Note that it takes a long time for the deletions to complete. Pull up devtools to see the console messages illustrating progress. (The in-browser "console" that CodePen provides won't update, since we're doing all of this synchronously.)

tl;dr: Deleting array properties seems like it would work quite well. (As would using an object instead -- while not tested in my CodePen, it's likely the size of an object used in the same manner would be equivalent to the size of an array.)

So just switching from namedListeners[indexOfListener] = null to delete namedListeners[indexOfListener] should fix the memory issue? The namedListeners.length will continue increasing until we actually loop over it and splice it, but if memory doesn't increase that should be fine and fix the problem. WDYT?

I think you mean 10MB?

@jvilk, oops. I didn't realize the numbers I was looking at where localized; misinterpreted the thousand separator for a comma (I said it was a quick test :grin:)

So just switching from

namedListeners[indexOfListener] = nulltodelete namedListeners[indexOfListener]should fix the memory issue?

@jbedard, that will totally come back and bite as at some point. I would stay away from such hacks for dealing with a _minor_ memory leak (especially when there are simple alternatives - as discussed above).

Yeah? I thought that was a reasonable solution, and a super simple!

This seems to explain it fairly well: https://stackoverflow.com/questions/614126/why-is-array-push-sometimes-faster-than-arrayn-value/614255#614255

As discussed "offline", delete should be fine (according to the spec). So, I am happy to go with that :smiley:

I've tried to profile the delete solution (what #16161 currently does). Basically profiling $rootScope.$on('foo', function(){})() (adding/removing listeners, normally in a loop N times).

= null grows like @jvilk said (~8 bytes per entry)

delete keeps the $$listeners['foo'] array at 32 bytes

Both also get slower as the array length increases, however... the delete version slows at about 3x the rate. This seems consistent in both Chrome and FF.

If an event gets $broadcasted after each iteration then speed+memory are pretty even between the two, although the delete version causes a bit more GC (I assume because the browser switches the sparse array to a hash/map implementation and that switch causes more GC? or hash/map entries use more then 8 bytes?).

This makes me question this fix. It basically changes event add/remove (with no firing of the event) from a small/slow memory leak to a small but fast speed leak. WDYT?

Naturally, delete will be slower -- you're doing more work to fix the problem, and you're changing the array into a 'hole-y' array that operates like a dictionary internally. Is the slowdown even noticeable, though? How often is $rootScope.$on called? I would think that it's far from a hot function -- with and without the fix -- so the slowdown isn't measurable from a real application.

FYI @jvilk I think everyone agreed with you and the PR should be merged soon

FYI this is now fixed in 1.7 and 1.6 and should be in the next release of both.

In 1.6 we replaced the null assignment with a delete like discussed here.

In 1.7 we replaced the null assignment with a splice but this required limiting the event-listener loop to not be executed recursively (on the same scope with the same event name). This is enforced with a new inevt error. This solution is much simpler, but adds this restriction, we'll have to see how it works out.

Thanks @jvilk for all the help

Most helpful comment

As discussed "offline",

deleteshould be fine (according to the spec). So, I am happy to go with that :smiley: