Amplify-js: Caching files from Storage.get()

Right now, all links returned by Storage.get() are signed links with AWSAccessKeyId, Expires, Signature, etc. Each call to Storage.get() for the same object key returns a different, unique link to the same object.

Since we get a signed link even for level: 'public' objects, this behavior prevents any content from being cached by the web browser, because the browser uses the whole URL, including the query string, as the cache key.

Is this intended behavior? Or is there a workaround that makes it possible to cache resources requested from S3?


Hi @AlpacaGoesCrazy

This is the expected behavior. When using the Storage module, credentials are assumed to be available (from either the authenticated or the unauthenticated role configured in a Cognito Identity Pool).

Using level: 'public' means that the resources can be accessed by both authenticated and unauthenticated roles, but credentials are still needed. You can think of it as "public for the users of your app, not for the whole world".

I haven't tested this, but you might be able to pass an expires option to Storage.get() and get some client-side caching by using a fixed time in the future.

Let us know how it goes

Right now, not passing expires to Storage.get() returns a link that expires after 15 minutes by default. Passing a custom expiration time does nothing for browser caching: every time a link is generated it still gets a different Signature, which results in a cache miss.
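The lifetime is visible in the URL itself. As a hedged sketch (`presignedUrlExpiryMs` is a hypothetical helper, not part of Amplify), the query string can be parsed to see when a given link stops working. It handles the SigV2 parameter mentioned at the top of this issue (`Expires`, an absolute Unix time) as well as the SigV4 pair (`X-Amz-Date` plus `X-Amz-Expires`). It only reflects the requested lifetime, not when the credentials that signed the URL expire.

```javascript
// Hypothetical helper: when does a presigned S3 URL stop working?
// Reads only the query string. Two query-auth styles are handled:
//   SigV2: ?AWSAccessKeyId=...&Expires=<Unix seconds>&Signature=...
//   SigV4: ?X-Amz-Date=YYYYMMDDTHHMMSSZ&X-Amz-Expires=<seconds>&...
// Returns a timestamp in milliseconds, or null if neither style is found.
function presignedUrlExpiryMs(signedUrl) {
  const params = new URL(signedUrl).searchParams;

  // SigV2: "Expires" is already an absolute time, in seconds.
  const v2Expires = params.get('Expires');
  if (v2Expires !== null) {
    return Number(v2Expires) * 1000;
  }

  // SigV4: signing time plus a relative lifetime in seconds.
  const amzDate = params.get('X-Amz-Date');
  const amzExpires = params.get('X-Amz-Expires');
  if (amzDate !== null && amzExpires !== null) {
    const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
    if (!m) return null;
    const signedAt = Date.UTC(+m[1], +m[2] - 1, +m[3], +m[4], +m[5], +m[6]);
    return signedAt + Number(amzExpires) * 1000;
  }

  return null; // not a recognizable presigned URL
}
```

For example, a previously generated link could be reused only while `presignedUrlExpiryMs(url) > Date.now()`.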

Or am I missing something, and I am supposed to store the signed URL once I have generated it?

@AlpacaGoesCrazy I think you are supposed to store the signed URL once you have generated it.
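One way to do that is to memoize the URL per key for slightly less than its lifetime. Below is a minimal in-memory sketch; `createSignedUrlCache` and its options are hypothetical names, and the signing function is passed in rather than calling Amplify directly (in an app it might be `key => Storage.get(key, { expires: ttlSeconds })`, an assumption). It only helps within a single page load, and the URL may stop working earlier if the signing credentials expire first.

```javascript
// Sketch: hand out the same signed URL per key until it nears expiry, so the
// browser sees an identical URL (and can reuse its HTTP cache) in that window.
// `getSignedUrl` is injected; this helper is hypothetical, not an Amplify API.
function createSignedUrlCache(getSignedUrl, ttlSeconds, { marginSeconds = 60, now = Date.now } = {}) {
  const entries = new Map(); // key -> { url, expiresAt (ms) }

  return async function getUrl(key) {
    const hit = entries.get(key);
    if (hit && hit.expiresAt - marginSeconds * 1000 > now()) {
      return hit.url; // still comfortably valid: reuse the exact same string
    }
    // Measure from before the request, so the recorded expiry errs early.
    const requestedAt = now();
    const url = await getSignedUrl(key);
    entries.set(key, { url, expiresAt: requestedAt + ttlSeconds * 1000 });
    return url;
  };
}
```

The `marginSeconds` window keeps the cache from handing out a URL that will expire while the browser is still fetching it.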

@manueliglesias It seems that expires can't be greater than the Auth.currentSession().accessToken.payload.exp time. When I pass expires: 604800 (1 week, the maximum allowed value) to Storage.put, all the signed URLs expire at Auth.currentSession().accessToken.payload.exp.

If there is no way to extend the expiration time, Storage.get should set the value accordingly.
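A sketch of what "set the value accordingly" could mean, assuming the caller already knows when the signing credentials expire. The comment above reads `accessToken.payload.exp`, which is in Unix seconds, so it would need multiplying by 1000 before being passed in. `effectiveExpiresSeconds` is a hypothetical helper, not part of Amplify.

```javascript
// 604800 s (7 days) is the longest lifetime S3 accepts for a SigV4 presigned URL.
const MAX_PRESIGN_SECONDS = 604800;

// Hypothetical helper: clamp a requested URL lifetime (seconds) so it never
// outlives the credentials that sign it. Returns 0 if they already expired.
function effectiveExpiresSeconds(requestedSeconds, credentialsExpireAtMs, nowMs = Date.now()) {
  const credentialSeconds = Math.floor((credentialsExpireAtMs - nowMs) / 1000);
  return Math.max(0, Math.min(requestedSeconds, MAX_PRESIGN_SECONDS, credentialSeconds));
}
```

Usage might look like `Storage.get(key, { expires: effectiveExpiresSeconds(604800, exp * 1000) })`, where `exp` is assumed to come from the token payload.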

Another way to cache the images is to download them (@AlpacaGoesCrazy).

See the comment below for another example of caching images.
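A minimal in-memory sketch of the download-and-cache idea. `createDownloadCache` is a hypothetical name, and the `download` function is injected; in an Amplify app it might wrap `Storage.get(key, { download: true })`, which is an assumption about the wiring. Caching the in-flight Promise means concurrent requests for the same key share a single download.

```javascript
// Sketch: keep downloaded object bodies in memory, keyed by object key.
// Storing the pending Promise (not the resolved body) deduplicates
// concurrent requests for the same key.
function createDownloadCache(download) {
  const bodies = new Map(); // key -> Promise<body>

  return function get(key) {
    if (!bodies.has(key)) {
      const pending = download(key).catch(err => {
        bodies.delete(key); // don't cache failures; allow a retry later
        throw err;
      });
      bodies.set(key, pending);
    }
    return bodies.get(key);
  };
}
```

Nothing is ever evicted here, so memory grows with the number of distinct keys; with many images, a bounded or on-disk cache is more appropriate.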

@MainAero Thank you for this solution. While there is not much sense in storing images in the Amplify cache if you have lots of them (its max size is capped at 5 MB), storing links to images works just fine!

Here is my implementation with link caching:

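```javascript
import { Cache, Storage } from 'aws-amplify'

// Return a signed URL for img/<key>, reusing a previously cached URL if present
const getImgLinkCached = async key => {
  const cachedImage = Cache.getItem(key)
  console.log(cachedImage)

  if (cachedImage) {
    console.log('Cache hit: ', key)
    return cachedImage
  }

  console.log('Cache miss: ', key)
  // expires is in seconds; 604800 s = 1 week
  const url = await Storage.get(`img/${key}`, { expires: 604800 })
  Cache.setItem(key, url)
  return url
}
```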

It works fine; however, when I try to add an expiry to my cache entry, it no longer seems to be able to retrieve the record:

```javascript
const expires = (new Date).getTime() + 604800000; // one week from now, in ms
Cache.setItem(key, url, { expires })
```

After this, Cache.getItem(key) returns null for such a record, and the logger doesn't say anything useful.

By the way, setting a default TTL in Cache.configure() works fine.

@AlpacaGoesCrazy Yes, you are right. AsyncStorage is limited to 6 MB and the Amplify Cache to 5 MB. Furthermore, AsyncStorage is not intended for caching blob data like images. I missed that in my first attempt at caching.

For me, caching the signed URLs is not satisfying, because the signed URLs mostly expire within 1 h (see my comment above).

I wrote a cache util that stores the images in the storage's cache directory, using the MD5 hash of the image as the cache key. On Storage.put I also write the image to the cache directory. If the image is not in my storage cache, I download it via Storage.get(key, { download: true }) and store it in the storage cache.
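A hypothetical Node.js sketch of such a write-through cache, using `fs/promises` and `crypto` in place of a React Native filesystem library (an assumption; on a device you would swap in whatever file API your app uses). One deliberate difference: it hashes the object key rather than the image bytes, so the filename is known before anything is downloaded. `cacheFileFor`, `rememberUpload` and `getCachedFile` are illustrative names.

```javascript
const crypto = require('crypto');
const fs = require('fs/promises');
const path = require('path');

// Filename for a cached object: MD5 of the object key, so arbitrary keys
// (slashes, spaces, ...) map to safe, fixed-length names.
function cacheFileFor(cacheDir, key) {
  const hash = crypto.createHash('md5').update(key).digest('hex');
  return path.join(cacheDir, hash);
}

// Write-through on upload: keep a local copy of what was just stored.
async function rememberUpload(cacheDir, key, bytes) {
  await fs.mkdir(cacheDir, { recursive: true });
  await fs.writeFile(cacheFileFor(cacheDir, key), bytes);
}

// Serve from the cache directory; on a miss, download once and store it.
// `download` is injected (assumption), e.g. a wrapper around
// Storage.get(key, { download: true }) that resolves to a Buffer.
async function getCachedFile(cacheDir, key, download) {
  const file = cacheFileFor(cacheDir, key);
  try {
    return await fs.readFile(file);
  } catch (err) {
    if (err.code !== 'ENOENT') throw err; // only "not cached yet" is a miss
  }
  const bytes = await download(key);
  await rememberUpload(cacheDir, key, bytes);
  return bytes;
}
```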

By the way, the expires option on Cache takes an absolute UTC time in milliseconds, e.g. (new Date()).getTime() + 604800000.
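Note the unit mismatch: Storage.get's `expires` is a relative lifetime in seconds, while Cache's `expires` is an absolute timestamp in milliseconds. A small hypothetical helper can keep the two aligned, expiring the cache entry a little before the URL does so that Cache never returns a link that is about to stop working.

```javascript
// Hypothetical helper: the Cache `expires` value (absolute UTC ms) for a
// signed URL that lives `urlLifetimeSeconds` (the unit Storage.get uses).
// Subtracting `marginSeconds` retires the cache entry before the URL dies.
function cacheExpiresFor(urlLifetimeSeconds, marginSeconds = 0, nowMs = Date.now()) {
  return nowMs + (urlLifetimeSeconds - marginSeconds) * 1000;
}

// e.g. Cache.setItem(key, url, { expires: cacheExpiresFor(3600, 60) })
```

With a one-week lifetime and no margin, this yields the same `now + 604800000` value as the example above.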

@MainAero You are right. I just realized that no matter what expiration time you set when retrieving a signed URL, the Cognito token that was used to sign that URL will expire much earlier and make the link inaccessible. This rules out storing signed URLs for longer periods of time.

However, I am not sure how to implement caching in this case. Can you give me a hint on how best to implement it?

> `Storage.get(key, { download: true })`

Can someone post some example code using this to access the actual data that is downloaded?

@ScubaDrew Something like this:

```javascript
return Storage.get(key, { download: true })
  .then(s3Obj => s3Obj["Body"].toString())
```
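`toString()` works when `Body` is a Node-style Buffer, but what `Body` actually is depends on the AWS SDK version and runtime (in browsers it may be a Blob). A hedged sketch that handles the common cases; `bodyToText` is a hypothetical helper, and streaming bodies are not handled.

```javascript
// Sketch: turn the `Body` from Storage.get(key, { download: true }) into text.
// Assumes Body is a string, a Blob, or a Uint8Array (Node's Buffer is one).
async function bodyToText(body) {
  if (typeof body === 'string') return body;
  if (typeof Blob !== 'undefined' && body instanceof Blob) {
    return body.text(); // Blob#text() exists in browsers and Node 18+
  }
  if (body instanceof Uint8Array) {
    return new TextDecoder('utf-8').decode(body);
  }
  throw new TypeError('Unsupported body type');
}
```

For images in a browser, you would more likely skip text decoding and pass a Blob to `URL.createObjectURL(blob)` to get a URL usable as an `<img>` `src`.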

You can access a level: 'public' or level: 'protected' picture without a signature: just remove the signature before adding the URL to the DOM.

Something like this:

```javascript
photoUrl.substring(0, photoUrl.indexOf('?'))
```
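Two caveats. First, `substring(0, indexOf('?'))` returns an empty string when the URL has no query at all, because `indexOf` returns -1 and `substring` treats a negative end as 0; the `URL` class avoids that. Second, the unsigned URL only loads if the object is readable anonymously (for example via a bucket policy allowing public `s3:GetObject`); otherwise S3 answers 403. `stripQuery` is a hypothetical helper.

```javascript
// Hypothetical helper: drop the signature (the whole query string) and any
// #fragment from a URL. Unlike substring(0, indexOf('?')), it returns the
// URL unchanged when there is no '?'.
function stripQuery(signedUrl) {
  const u = new URL(signedUrl);
  u.search = '';
  u.hash = '';
  return u.toString();
}
```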

It seems the question has been answered, so I'm going to close this now. Please reopen if you have any related questions or concerns.

@jordanranz This issue has not been solved yet.

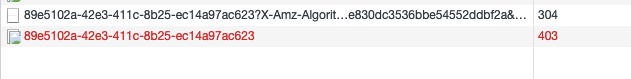

I have tried the method from @destpat, and I still get a 403.

I can access the public files only with Storage.get(), not via the URL without a signature.

Are there any other ideas for getting a permanent link to public files with Storage.get()?

The following is the public file with and without the signature:

Me neither. Can someone post some example code using this to access the actual data that is downloaded?