Amplify-cli: Lambda Layer versions CLI - Console


Describe the bug

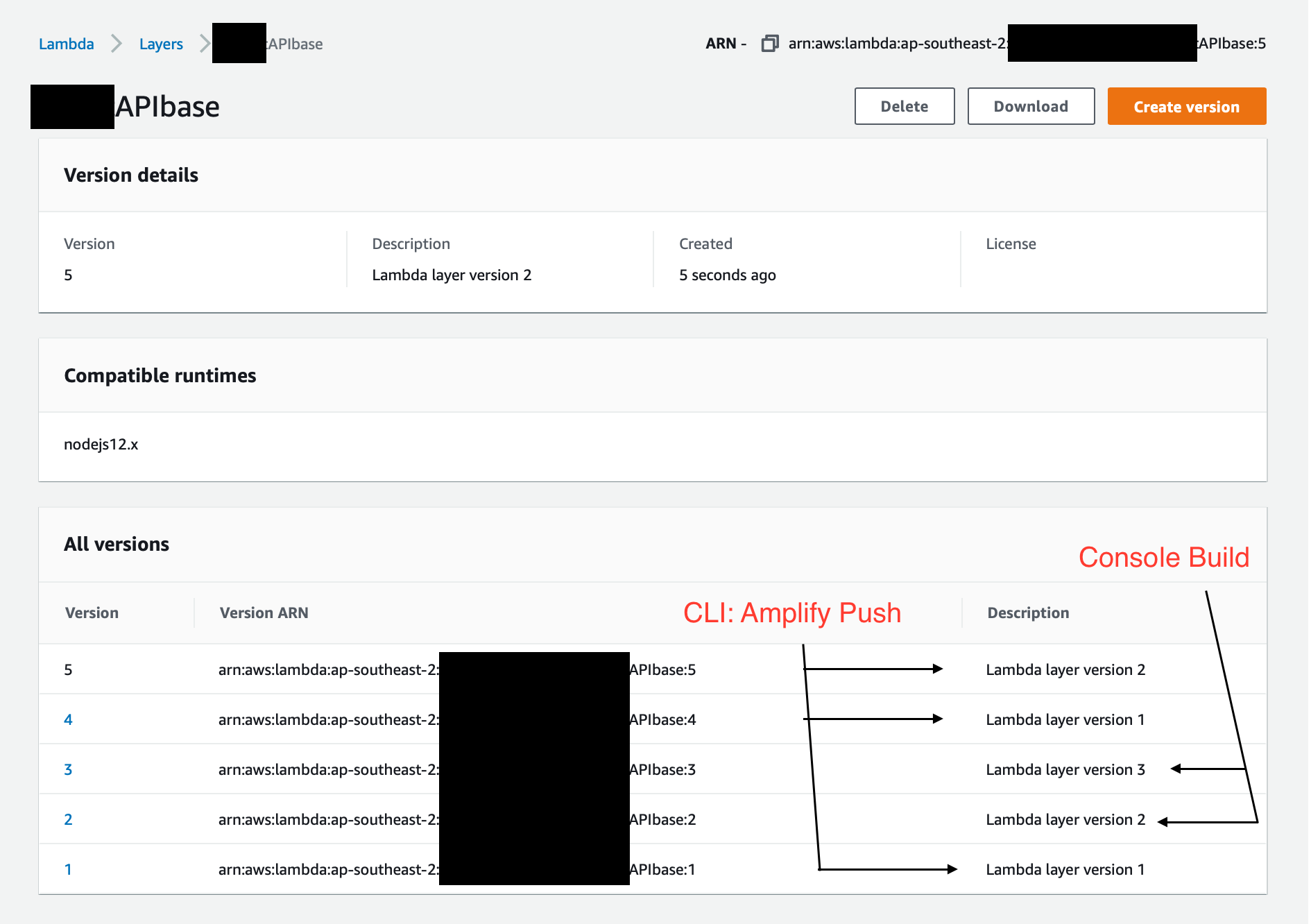

The Lambda layer versions are not being kept up to date between console deployments and local deployments.

When I use amplify push from the CLI a new version of the Layer is created and the local state is updated and I can select that version for use with my other functions.

When the repository is pushed and triggers a CI build/deploy, AND a new lambda layer is created, this new layer version is not available to the CLI.

If I now push a 3rd layer version, the local CLI "names" it layer 2, but it is actually layer 3.

Amplify CLI Version

4.27.1

To Reproduce

CLI:

Add Layer function. (Creates layer function)

Amplify Push (deploys lambda layer 1)

Modify Layer Function

GIT Commit & Push

[Console CI Build triggered] (deploy lambda layer 2)

Amplify Push (deploys lambda layer 3, but thinks it's 2)

Expected behavior

Amplify push should retrieve actual lambda layer versions from cloud and not rely on local state, as it can be out of date
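For anyone who wants to check the drift themselves, here is a minimal sketch of what "retrieve the actual versions from the cloud" could look like. It is not how the Amplify CLI works internally. It assumes a boto3 Lambda client (whose `list_layer_versions` call returns `LayerVersions` and an optional `NextMarker`); the helper names are hypothetical.

```python
from typing import Any, List


def list_cloud_layer_versions(lambda_client: Any, layer_name: str) -> List[int]:
    """Return every published version number of a layer, in ascending order.

    `lambda_client` is assumed to be a boto3 Lambda client, e.g.
    boto3.client("lambda"); it is passed in so the function can be tested
    without AWS credentials.
    """
    versions: List[int] = []
    kwargs = {"LayerName": layer_name}
    while True:
        page = lambda_client.list_layer_versions(**kwargs)
        versions.extend(item["Version"] for item in page.get("LayerVersions", []))
        marker = page.get("NextMarker")
        if not marker:
            break
        # Ask for the next page of results.
        kwargs["Marker"] = marker
    return sorted(versions)


def local_state_is_stale(local_latest: int, cloud_versions: List[int]) -> bool:
    """True when the cloud already holds a version newer than the local state knows about."""
    return bool(cloud_versions) and max(cloud_versions) > local_latest
```

With real credentials this would be called as `list_cloud_layer_versions(boto3.client("lambda"), "<lambda-layer-name>")` and compared against the highest version the local CLI offers.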

Screenshots

Desktop (please complete the following information):

- OS: Mac

- Node Version: v12.18.0

Additional context

Compounding my issue here is the fact that the CI build is incorrectly adding new lambda layers, as the amplifyPush is not running an npm install on the lambda layers, and was creating empty layers.

I understand __now__ that the layer expectation is that the repo is complete and self contained, but it has really complicated my workflow.

The problem of the versions remains however, that I can not configure my functions with the correct version as the local CLI state isn't correct.

All 24 comments

+1, I have the same issue. It's making my deployments very brittle, as there is no really effective workaround that I know of besides manually setting the layer version in the console.

+1 same issue

+1, also the same issue. My workaround is to run amplify function remove on all my lambdas, then remove the layer, then add them all back again. The versions go haywire again shortly after.

+1

Any update on this @jhockett? Our team is interested in offloading the build to the CI console, but so far the Amplify CI console does not appear to pick up changes to our layer code?

+1 to this comment by OP:

Amplify push should retrieve actual lambda layer versions from cloud and not rely on local state, as it can be out of date

Seems like the best workaround is to avoid updating layers, and to create a new layer for every version if you really have to. :X

+1

+1

+1

Same here, I have to manually change the layer in the console. The description will say 'layer version 5' but the actual layer version in the console is 10. But the CLI only shows up to 5.

@kennandavison I tried this, but now I'm unable to delete the layer, as Amplify thinks it's still in use by the function. (It isn't; I've moved the function to the new layer.) Now I have a dangling layer I can't remove, and all my layers are out of sync in Amplify, so I end up making changes manually through the console, which isn't great for the CI/CD pipeline.

@6foot3foot - yeah, I had that as well. I ended up grepping/deleting references in the cloudformation files.

For me it's a lot like a box of chocolates: you never know what you're going to get (version-wise).

@micduffy same feeling here. Decided to refactor the code to have less dependencies of my lambda layer. Now I only use it to pack all the heavy node_modules together and it's used by a single function.

Just one extra issue I had (on top of versioning). When changing amplify environments, sometimes, somehow, a new version of the layer was created (which we already know) + the node_modules folder was empty. That was making all the lambdas depending on the layer break.

This is the issue I'm seeing. Amplify is going by the description, not the actual version. So version 8 doesn't have the right code; it's version 12 I need, but Amplify will only show up to 8. (As a note, all my uploads of this function have only been through the Amplify CLI, so I'm not sure why the version stayed at 1 for a while, jumped to 2, then went back to 1.)

+1, so many things broken here

+1

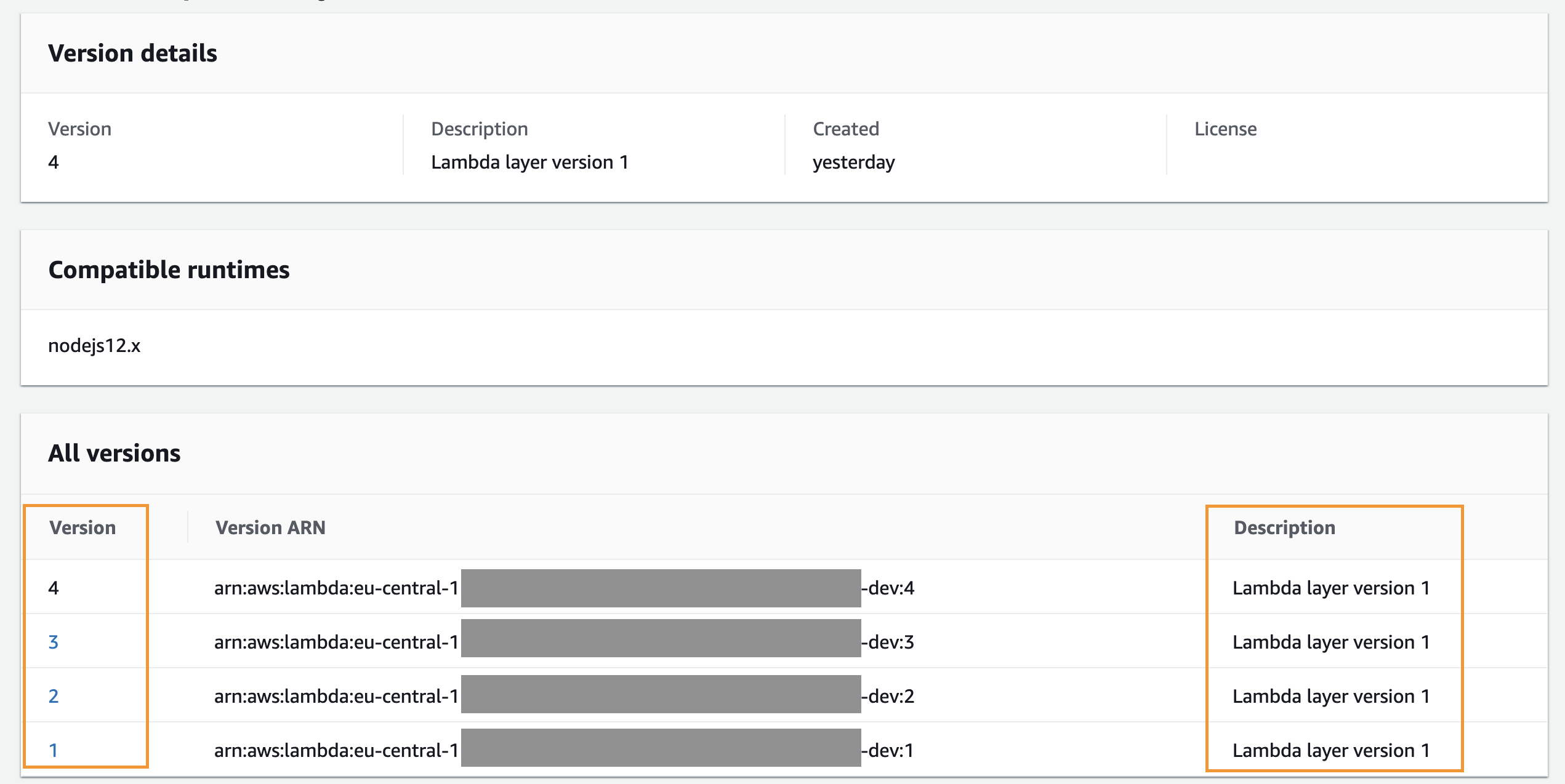

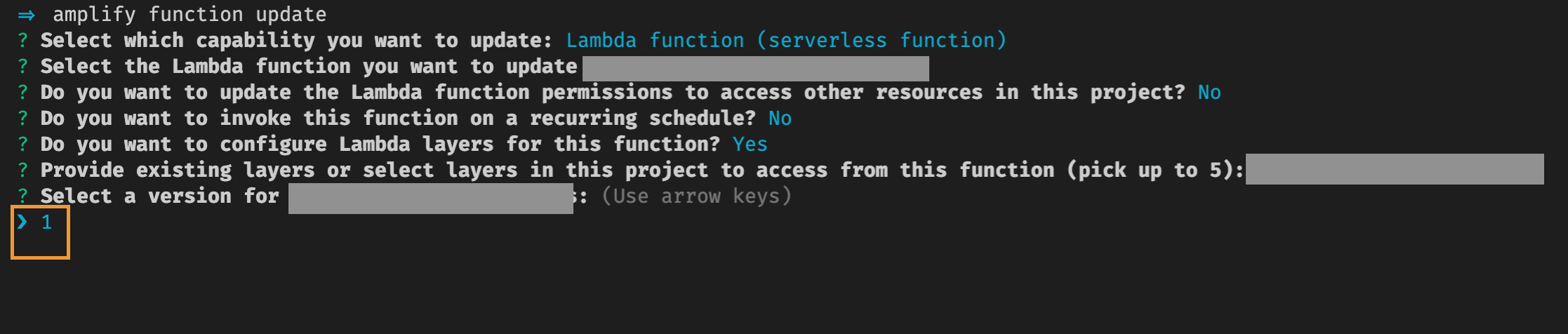

I'm facing the same issue. My latest version for dev environment is 4, however when I'm updating function using amplify-cli, I'm only able to select version 1:

Any update on this?

Hi all, we are actively looking into reworking how layer versions work in the CLI so that this version de-synchronization doesn't happen.

That's great to hear. In our application, we always use the latest version of our shared layer. We have a temporary workaround in place, which is to associate functions with the latest version and then remove all the older versions.

This seems to work okay as an interim measure, but there are still manual steps when we move between environments.

Hope this helps

Workaround Here :-)

So, I'm working on a Gatsby project where I use console re-deployment any time I need to update the contents.

Since Lambda layers became available in Amplify, I thought I'd tidy up a bit and implemented one for starters (and reached version 3 on the same day). Today I needed to update it and the lambda using it kept giving errors; then I discovered that Amplify and I thought we were at layer version 4, but in reality we were at layer version 30 :-D

(so, beside fixing the misalignment could you please also work on avoiding un-needed re-deployments?)

Anyway, after playing for a while I found a fix: I manually changed the last version number (from 4 to 30) in the relevant part of the team-provider-info.json file:

    "nonCFNdata": {
      "function": {
        "<lambda-layer-name>": {
          "layerVersionMap": {
            "1": {...},
            "2": {...},
            "3": {...},
            "30": {...}
          }
        }
      }
    }

Then, when I re-updated the consuming lambda, Amplify offered me layers 1, 2, 3 and 30. Selecting 30 simply worked.

After this I needed to do a few more changes and amplify automatically created/used layer version 31 without further intervention ;-)
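In case it helps anyone, the manual edit described above could be scripted roughly like this. It is only a sketch: it operates on the value stored under the "nonCFNdata" key, assumes nothing about where that key sits inside team-provider-info.json, and renames the key only (it does not touch whatever is inside the version entry). The function name is hypothetical.

```python
import copy
from typing import Any, Dict


def relabel_latest_layer_version(
    non_cfn_data: Dict[str, Any], layer_name: str, actual_version: int
) -> Dict[str, Any]:
    """Return a copy in which the highest local key of layerVersionMap
    is renamed to the version number that actually exists in the cloud."""
    updated = copy.deepcopy(non_cfn_data)
    version_map = updated["function"][layer_name]["layerVersionMap"]
    # Keys are strings, so compare them numerically ("10" > "9").
    latest_local = max(version_map, key=int)
    if int(latest_local) > actual_version:
        raise ValueError("local state is ahead of the cloud; refusing to relabel")
    if int(latest_local) != actual_version:
        version_map[str(actual_version)] = version_map.pop(latest_local)
    return updated
```

Back up the file before writing the result, and check every environment separately, since each one may be out of sync by a different amount.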

That is good, but try it with a multi-environment deployment. In different environments you can very possibly get burned.

I have it :-/ but luckily I'm still not in production so for now I'm burying my head in the sand and waiting for the fix before merging branches

We have a script we run after deployments that causes all of our lambdas to use the latest version of our shared layer.

Great idea! If I have more problems I'll create something similar. Do you simply change the layer version in every lambda's CloudFormation template JSON, or do you do something else?

PS: do you know if there is a repository of Amplify-lifesaving scripts? I've also created a useful bunch of those and it would be nice to share them.

We use Python to update the CloudFormation template JSON.
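The actual script isn't posted in this thread, so the following is only a guess at the general shape, not the commenter's code. It assumes the function templates reference the layer through an ARN string ending in `:layer:<layer name>:<version>` (plain, or inside something like `Fn::Sub`) and rewrites that trailing number wherever it appears in the parsed template. `pin_layer_version` is a hypothetical name.

```python
import re
from typing import Any


def pin_layer_version(node: Any, layer_name: str, version: int) -> Any:
    """Return a copy of a parsed template in which every string ending in
    ':layer:<layer_name>:<n>' has <n> replaced by `version`."""
    pattern = re.compile(r"(:layer:" + re.escape(layer_name) + r":)\d+$")
    if isinstance(node, str):
        # A callable replacement avoids ambiguity between the group
        # reference and the digits of the new version number.
        return pattern.sub(lambda m: m.group(1) + str(version), node)
    if isinstance(node, list):
        return [pin_layer_version(item, layer_name, version) for item in node]
    if isinstance(node, dict):
        return {key: pin_layer_version(value, layer_name, version) for key, value in node.items()}
    # Numbers, booleans and None pass through unchanged.
    return node
```

The template would be read with `json.load`, passed through `pin_layer_version`, and written back with `json.dump`. References to other layers are left alone because the layer name is part of the pattern.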

Closest thing to a repo of amplify-lifesaving scripts would be this I reckon : https://github.com/dabit3/awesome-aws-amplify
