Amplify CLI: Generated boilerplate for Lambda triggers has a long startup time

Describe the bug

The code generated from the Lambda trigger boilerplates has a long startup time (4 seconds).

Amplify CLI Version

4.12.0

To Reproduce

Generate any Cognito trigger boilerplate as described in: https://aws-amplify.github.io/docs/cli-toolchain/cognito-triggers

It generates an index.js like this:

```javascript
exports.handler = (event, context, callback) => {
  const modules = process.env.MODULES.split(',');
  for (let i = 0; i < modules.length; i += 1) {
    const { handler } = require(`./${modules[i]}`);
    handler(event, context, callback);
  }
};
```

Expected behavior

The Lambda should finish executing without timing out.

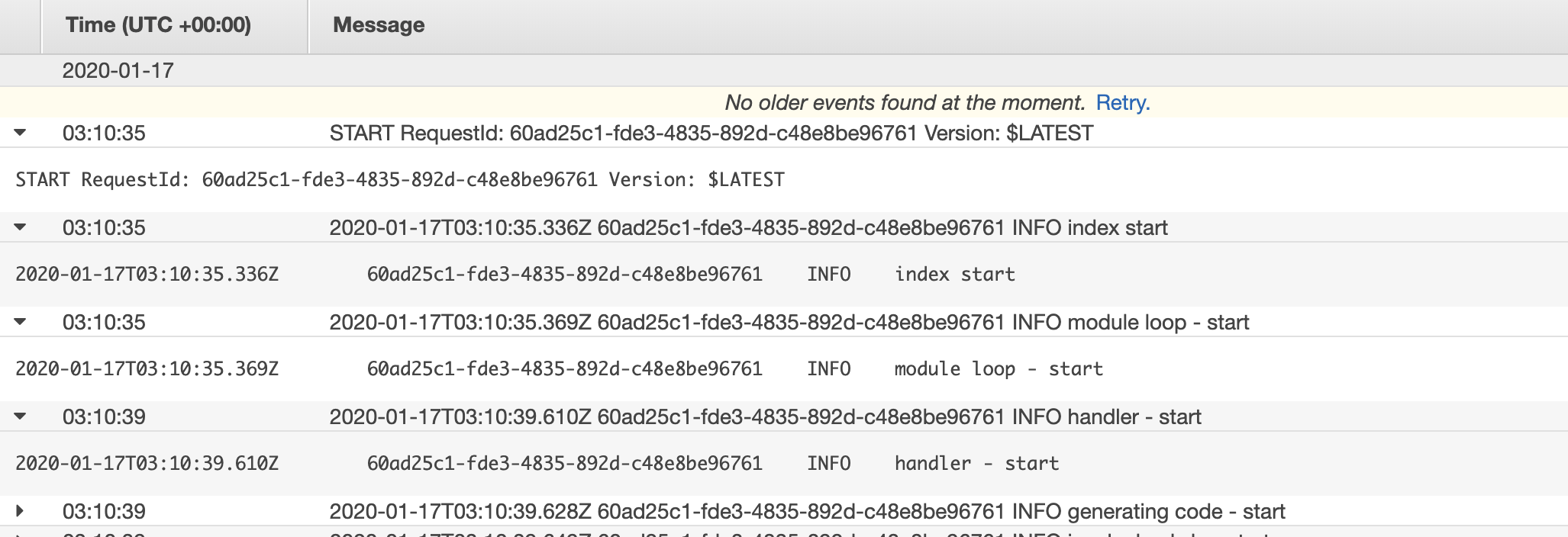

Screenshots

The second call to the function is faster and doesn't time out.

Desktop:

- OS: Mac

- Node Version: AWS Lambda Node.js 10.x runtime

- Region: us-east-2

Additional context

If you overwrite the contents of index.js with the code of the generated boilerplate (for example, boilerplate-create-challenge.js for the createAuthChallenge trigger), execution time is back to normal (250 to 500 ms), even on a cold start (first execution).


It looks like your code takes more than 3 seconds to execute, and 3 seconds is the default Lambda execution timeout. If latency isn't a big deal for your Lambda triggers, you could try raising that limit from the AWS CLI or the AWS console (as far as I know, there is currently no way to specify the Lambda timeout from the Amplify CLI).

It would be cool if Amplify could parse CloudWatch events for the maximum execution time over a period of time, add a user-specified offset to that maximum, set the timeout to that maximum + offset, and alert an SNS topic if the timeout is exceeded (that is, the Lambda fails to execute). It would also be nice to be able to configure a dead-letter queue (DLQ) and a generic DLQ Lambda handler for a given function.
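The "maximum + offset" rule above is plain arithmetic. Here is a minimal sketch of just that calculation, assuming the observed durations (in milliseconds) have already been pulled from CloudWatch; `suggestTimeoutSeconds` is a hypothetical helper, not an Amplify or AWS API, and fetching the data or applying the timeout is not shown. Lambda timeouts are whole seconds between 1 and 900, so the result is rounded up and clamped.

```javascript
// Hypothetical helper (not an Amplify or AWS API): suggest a Lambda timeout
// from observed durations plus a user-specified safety offset.
function suggestTimeoutSeconds(durationsMs, offsetMs) {
  if (durationsMs.length === 0) {
    throw new Error('need at least one observed duration');
  }
  // Largest observed execution time, in milliseconds.
  const maxMs = durationsMs.reduce((a, b) => Math.max(a, b), -Infinity);
  // Lambda timeouts are whole seconds, so round up...
  const seconds = Math.ceil((maxMs + offsetMs) / 1000);
  // ...and clamp to the allowed range of 1 to 900 seconds.
  return Math.min(900, Math.max(1, seconds));
}

// Example: durations observed for a trigger, plus a 500 ms safety margin.
console.log(suggestTimeoutSeconds([250, 480, 4100], 500)); // 5
```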

That being said, I would assume that calling the callback parameter is only semantically meaningful the first time it is called (signaling either success or termination). It seems to me it would behave similarly to `return await x(); return await y(); return await z();`, where obviously only x() would ever be executed; and if y or z did _begin_ executing, there is no reason to believe they would return successfully (why continue executing unreachable code?). In other words, the generated code doesn't seem to respect the Cognito trigger lifecycle.

Hello and thank you for your response.

Actually, Cognito triggers time out after 5 seconds, and this cannot be changed; it is an AWS constraint (see: https://docs.aws.amazon.com/cognito/latest/developerguide/cognito-user-identity-pools-working-with-aws-lambda-triggers.html#important-lambda-considerations).

As for the code, it behaves like this even with the default autogenerated code.

The strange thing is really the behavior of ``const { handler } = require(`./${modules[i]}`);``.

Before that line, everything is fast (it takes only a few ms to reach it, even on a cold start), but executing that line takes 4 seconds (see my screenshot).
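One way to confirm where the time goes is to log how long the require call itself takes. A minimal sketch, assuming Node.js 10.7 or later (for `process.hrtime.bigint()`); `timedRequire` is a hypothetical diagnostic helper, meant to be defined in the same index.js so relative paths resolve the same way as the original `require` call.

```javascript
// Hypothetical diagnostic helper: require a module and log how long it took.
// Relative ids resolve against this file, just like a plain require() here.
function timedRequire(id) {
  const start = process.hrtime.bigint();
  const mod = require(id);
  const elapsedMs = Number(process.hrtime.bigint() - start) / 1e6;
  console.log(`require(${id}) took ${elapsedMs.toFixed(1)} ms`);
  return { mod, elapsedMs };
}

// In the generated handler, this could stand in for the require() call, e.g.:
// const { handler } = timedRequire(`./${modules[i]}`).mod;
```

Only the first require of a given module pays the load cost; later calls for the same module are served from Node's module cache.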

My workaround is to not rely on that default Amplify mechanism (i.e., providing a list of "modules" through an environment variable to load a list of .js files). Without it, everything loads in under 500 ms.

@guioum I wasn't aware of the 5-second upper bound. It seems arbitrary; I'd check whether it is actually a soft limit.

I think the issue is that calling `require` from inside the `exports.handler` function loads those modules at invocation time rather than at cold start. Do you still get a 4+ second cold start?
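The difference between the two placements can be observed locally. This minimal sketch writes two stand-in trigger modules (hypothetical files `eager.js` and `lazy.js`) to a temporary directory; each counts how many times its top-level code runs. The module required at top level is loaded as soon as the handler file loads, which in Lambda happens during the cold-start init phase; the module required inside the handler is loaded on the first invocation and served from Node's require cache afterwards.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Write a hypothetical stand-in trigger module whose top-level code
// counts how many times it has been loaded.
function writeTriggerModule(dir, name) {
  const file = path.join(dir, `${name}.js`);
  const key = JSON.stringify(name);
  fs.writeFileSync(
    file,
    'global.__triggerLoads = global.__triggerLoads || {};\n' +
      `global.__triggerLoads[${key}] = (global.__triggerLoads[${key}] || 0) + 1;\n` +
      'exports.handler = (event, context, callback) => callback(null, event);\n'
  );
  return file;
}
const loadCount = (name) => (global.__triggerLoads && global.__triggerLoads[name]) || 0;

const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'trigger-sketch-'));
const eagerFile = writeTriggerModule(dir, 'eager');
const lazyFile = writeTriggerModule(dir, 'lazy');

// Workaround: require at top level, i.e. during the Lambda init (cold start) phase.
const { handler: eagerHandler } = require(eagerFile);

// Generated boilerplate: require inside the handler, so the first invocation
// pays the load cost and later invocations hit Node's require cache.
function lazyHandler(event, context, callback) {
  const { handler } = require(lazyFile);
  handler(event, context, callback);
}

console.log('before any invocation:', loadCount('eager'), loadCount('lazy')); // 1 0
eagerHandler({}, {}, () => {});
lazyHandler({}, {}, () => {});
lazyHandler({}, {}, () => {});
console.log('after invocations:', loadCount('eager'), loadCount('lazy')); // 1 1
```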

No, my cold start is fixed (I removed the `require` from inside the handler function).

@guioum Curious to know your workaround after removing the require inside the handler function. How did you manage to interact with other modules?


@kaustavghosh06 The boilerplate lists only one module in the parameters.json file. If he's using it out of the box, what other modules would be missing?

I used plain old 'static' require statements in my function:

```javascript
const apiHostname = process.env.API_HOSTNAME;
const apiUserKey = process.env.API_USER_KEY;
const apiSiteKey = process.env.API_SITE_KEY;
const awsSdk = require('aws-sdk');
const axios = require('axios');
...
```

+1. Also, there's zero documentation on this modules boilerplate. For example, and pardon my ignorance, is it correct to call `handler(event, context, callback);` multiple times? If one of the handlers has already called the callback, that would cut out the rest of the handlers, correct?
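One way to avoid calling the callback once per module is to chain the modules and call the Lambda callback a single time. This is a minimal sketch, not what the Amplify CLI generates; it assumes each module is callback-style and calls its callback exactly once (a module that never calls it would stall the chain). `runHandler`, `makeSequentialHandler`, and the two sample modules are hypothetical.

```javascript
// Adapt a callback-style trigger handler to a Promise.
// Assumption: the handler calls its callback exactly once.
function runHandler(handler, event, context) {
  return new Promise((resolve, reject) => {
    handler(event, context, (err, result) => (err ? reject(err) : resolve(result)));
  });
}

// Build one Lambda handler that runs `handlers` in order, passing each the
// event returned by the previous one, and calls the Lambda callback exactly
// once: with the first error, or with the final event.
function makeSequentialHandler(handlers) {
  return (event, context, callback) => {
    handlers
      .reduce(
        (chain, h) => chain.then((current) => runHandler(h, current, context)),
        Promise.resolve(event)
      )
      .then((finalEvent) => callback(null, finalEvent), (err) => callback(err));
  };
}

// Two hypothetical trigger modules that just record that they ran.
const order = [];
const first = (event, context, cb) => { order.push('first'); cb(null, event); };
const second = (event, context, cb) => { order.push('second'); cb(null, event); };

const handler = makeSequentialHandler([first, second]);
```

With this design, if a module reports an error the remaining modules are skipped and the error goes straight to the callback; whether that is the right policy for a given trigger is a judgment call.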

Also, why is boilerplate code generated at all? Why not just a standard Lambda and let us write the code? What's with all this "modules" business? It seems unnecessary. And is it OK to manually modify the modules and add our own custom modules (e.g. user-migration)?

Also, isn't it recommended to have one Lambda that handles all Cognito triggers, to avoid cold start issues? Why does the Amplify CLI generate one Lambda per trigger instead of one Lambda that handles all possible triggers? I remember that for GraphQL Lambda resolvers, for example, @mikeparisstuff recommended using one Lambda with a switch statement to implement all resolvers. That makes sense to me.

For me, not using the module pattern and importing like so, `const addToGroup = require("./add-to-group").handler;`, works (no more timeouts). Thanks @guioum!

Hi,

I would like to propose a PR using this code:

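```javascript
// Load every module listed in the MODULES env var once, when the file is
// loaded (cold start), instead of inside the handler.
const moduleNames = process.env.MODULES.split(',');
const modules = [];
for (let i = 0; i < moduleNames.length; i += 1) {
  modules.push(require(`./${moduleNames[i]}`));
}

exports.handler = (event, context, callback) => {
  // Invoke each preloaded module's handler for this event.
  for (let i = 0; i < modules.length; i += 1) {
    const { handler } = modules[i];
    handler(event, context, callback);
  }
};
```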

Before opening it, I would like to make sure this code goes in the right direction. It's based on the comments posted here, and it keeps the approach of using the MODULES environment variable to define the modules.

Using it reduced my cold start duration by 5x and I don't hit the timeout anymore.

Hi @thibaultdalban, thank you for sharing this solution. I can confirm my Post Confirmation Lambda is no longer getting called multiple times after implementing your fix. Its duration went from more than 5 seconds to 1.5 seconds.

This has been released in Amplify CLI v4.32.0.
