Amplify-cli: Error: Only one resolver is allowed per field

The following GraphQL schema leads to the error "Only one resolver is allowed per field" when pushed to the AWS cloud.

```graphql
type TypeA @model {
  id: ID!
  typeB: [TypeB!]! @connection(name: "myCon")
}

type TypeB @model {
  id: ID!
  typeA: TypeA! @connection(name: "myCon")
}
```

The generated resolvers and CloudFormation template look good to me. The resolvers appear to be unique per type and field.

We had to rename the non-collection property to something else so the resolver could be deployed:

```graphql
type TypeA @model {
  id: ID!
  typeB: [TypeB!]! @connection(name: "myCon")
}

type TypeB @model {
  id: ID!
  typeBTypeA: TypeA! @connection(name: "myCon")
}
```

I don't know whether this is a CloudFormation or an Amplify CLI bug. There is a forum post (https://forums.aws.amazon.com/thread.jspa?messageID=884492) which I replied to, because this could be that CloudFormation bug. But since I use the Amplify CLI, I wanted to bring it up here, even if only for documentation.


Same thing here :-(

Same thing here.

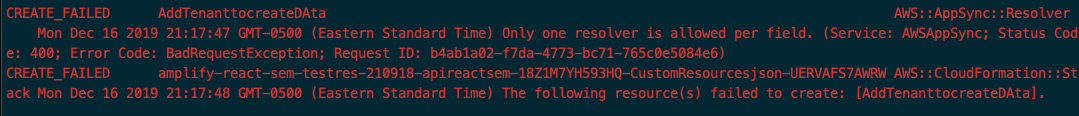

I tried adding a keyField to a connection (https://github.com/aws-amplify/amplify-cli/issues/300#issuecomment-433480985) and I get the following error:

Cannot update GSI's properties other than Provisioned Throughput. You can create a new GSI with a different name.

So I deleted a table and all its connections, and then tried to re-add it, which gave me the following error:

Only one resolver is allowed per field. (Service: AWSAppSync; Status Code: 400; Error Code: BadRequestException; Request ID: b21a3364-2a37-11e9-bce1-cfaf75c1d372)

The resolver no longer seems to appear in the AppSync console, so the only way forward I see is to delete the stack (as suggested in the forum post above), which is really not an option for me.

It seems there is some path that leaves APIs in a state where a resolver is left dangling even after the field is removed from the schema. When this happens, you can get around the issue by going to the AppSync console, adding a field to the schema with the same name as the supposed conflict, and then deleting the resolver record: select it from the right half of the schema page and click "Delete Resolver" on the resolver page.

I will try to reproduce and identify the underlying issue but it appears that it is not within the Amplify CLI itself.

Got the same error today: I renamed my connection and got the error "Only one resolver is allowed per field".

The only way I could fix it was to remove the two models that had the connection (after first backing up the data in DynamoDB), push so that everything was removed, then push the models without the connection so the schema, resolvers and tables were recreated, and finally re-add the connection.

@mikeparisstuff's trick worked for me, but it's really annoying. I hope it will be fixed soon!

I had the same issues.

Carefully read the log to see which resolver creation fails.

In the AppSync console, open the schema, look up the resolver in question on the right-hand side, and delete it.

AppSync is not always perfectly in sync, I guess :P
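Finding the failing resolver in the log can also be done programmatically. The sketch below is an illustration, not part of the Amplify CLI: it assumes the events are dicts shaped like the `StackEvents` entries returned by boto3's CloudFormation `describe_stack_events` (keys `LogicalResourceId`, `ResourceType`, `ResourceStatus`, `ResourceStatusReason`). Amplify deploys the API as a nested stack, so you would probably need the nested API stack's events rather than the root stack's. The helper name `failed_resolver_creations` and the sample events are hypothetical.

```python
from typing import Iterable, List, Mapping

CLASH_MESSAGE = "Only one resolver is allowed per field"


def failed_resolver_creations(events: Iterable[Mapping[str, str]]) -> List[str]:
    """Return the logical IDs of AppSync resolvers whose creation failed with
    the one-resolver-per-field error, in the order the events were given."""
    failed = []
    for event in events:
        if (
            event.get("ResourceType") == "AWS::AppSync::Resolver"
            and event.get("ResourceStatus") == "CREATE_FAILED"
            and CLASH_MESSAGE in event.get("ResourceStatusReason", "")
        ):
            failed.append(event["LogicalResourceId"])
    return failed


if __name__ == "__main__":
    # Hypothetical events shaped like CloudFormation stack events.
    sample = [
        {
            "LogicalResourceId": "GetFactoryResolver",
            "ResourceType": "AWS::AppSync::Resolver",
            "ResourceStatus": "CREATE_FAILED",
            "ResourceStatusReason": "Only one resolver is allowed per field. "
                                    "(Service: AWSAppSync; Status Code: 400)",
        },
        {
            "LogicalResourceId": "FactoryTable",
            "ResourceType": "AWS::DynamoDB::Table",
            "ResourceStatus": "CREATE_COMPLETE",
        },
    ]
    print(failed_resolver_creations(sample))  # ['GetFactoryResolver']
```

With boto3 installed and credentials configured, passing `boto3.client("cloudformation").describe_stack_events(StackName="<nested API stack name>")["StackEvents"]` should list the resolvers to look up in the AppSync console.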

Same problem here as well, it happened to me when I tried to rename a Resolver. It seems like the renamed resolver is created by CF before the old resolver is removed and therefore raises this exception.

@sprucify's solution works, but this needs to be fixed, as it's not viable in the long run.

@pierremarieB Was the resolver that you added a custom resolver?

@kaustavghosh06 It was a regular resolver. Not sure what you mean by custom resolver.

I have had this problem when I create models with keys defined and connections within them.

If you leave the connections out on the first deploy (amplify push) and then, once that's done, add your connections, it works.

I faced the problem too. I was trying to overwrite a resolver that got generated by Amplify for a @connection directive. Furthermore, I couldn't fix it using Mike's fix.

Update: Fixed it. I created an entry in CustomResources.json, which is only needed when you create new resolvers, and not for overwriting them.


We've run into the same thing on more than one occasion when renaming a resolver. Deploying both the old and the newly named resolvers, with the old resolver pointed at a blackhole field name, also did not work; CF appears to process creations before deletes and updates. Our current CI/CD approach is to deploy the deletion first, and then deploy the creation of the resolver under the new name.
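That delete-then-create approach can be expressed as a split of one template change into two deployments. This is a minimal sketch under stated assumptions, not a CloudFormation or Amplify feature: templates are plain dicts with resolvers under `Resources`, each resolver's `TypeName`/`FieldName` sits under `Properties`, and `split_rename_deploy` plus the logical IDs in the test are hypothetical. It does not rewrite `DependsOn`, `Ref`, or `Fn::GetAtt` references to the dropped resolvers.

```python
import copy

RESOLVER_TYPE = "AWS::AppSync::Resolver"


def resolver_fields(template):
    """Map each resolver's logical ID to its (TypeName, FieldName) pair."""
    fields = {}
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") == RESOLVER_TYPE:
            props = resource.get("Properties", {})
            fields[logical_id] = (props.get("TypeName"), props.get("FieldName"))
    return fields


def split_rename_deploy(old, new):
    """Return (phase1, phase2) templates for a delete-then-create rollout.

    phase1 is a copy of the old template minus every resolver whose field is
    claimed by a *different* logical ID in the new template; phase2 is the
    new template unchanged.
    """
    new_owner = {field: lid for lid, field in resolver_fields(new).items()}
    phase1 = copy.deepcopy(old)
    for lid, field in resolver_fields(old).items():
        if field in new_owner and new_owner[field] != lid:
            # The old resolver must be gone before the renamed one is created.
            del phase1["Resources"][lid]
    return phase1, new
```

Deploy `phase1` first and wait for it to complete, then deploy `phase2`; the field is briefly without a resolver in between, which is the trade-off of this approach.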

Hit this as well :/ Unfortunate that CF cannot process this as an override.

This happened to me in two different scenarios:

- When I was trying to create a many-to-many relationship. I simply followed the docs.
- When I updated schema.graphql:
  - I needed to add a new column to a table, and the column is required.
  - The table was already populated with test data inserted by a script I wrote.
  - I commented out the schema.graphql and ran `amplify push`, which I expected to remove the data sources and the resolvers.
  - I added the new column to the table and ran `amplify push`; at this point I expected the API not to contain anything, i.e. it should have started from "nothing".

The weird part is that when I went to the AppSync dashboard > schema and looked for the resolver, the resolver doesn't exist there.

I ran into this issue yesterday. After 2 hours I just gave up and deleted the API; I couldn't resolve the issue. This was for testing only, so not really a problem, but for production-ready APIs I'm a little more concerned.

Since I've tried every trick in this issue without result, can anyone point me to the file(s) which causes the issue? Or do I have to dig into CloudFormation? Thanks.

I ran into a similar "Only one resolver is allowed per field" situation. Maybe this will help other developers out there. It seems AppSync has a chicken-and-egg problem, and this should be reported to the AWS AppSync development team.

We had a GraphQL type named ChangeInCondition, which had resolvers wired up. Somehow CloudFormation rolled back a deploy and removed the GraphQL type ChangeInCondition from AppSync, yet kept its resolver data somehow.

So upon the next deploy, it would throw the error Only one resolver is allowed per field, due to the fact that it thought ChangeInCondition had a few resolvers bound and CF was trying to re-create them.

Oddly, I wasn't able to remove the resolvers through the aws appsync CLI since the type didn't exist...

```
aws appsync delete-resolver --api-id XXXXX --type-name ChangeInCondition --field-name status

An error occurred (NotFoundException) when calling the DeleteResolver operation: Type ChangeInCondition not found
```

So I decided to try creating the missing type ChangeInCondition and see if I could then remove the resolver. Yep, that did the trick. Funny that creating the "missing type" worked; I thought it would throw an error at the API level. Here it is in Python using boto3:

```python
import boto3


def main():
    api_id = 'XXXX'
    client = boto3.client('appsync')

    # most fields removed for brevity
    definition = """
    type ChangeInCondition {
        id: ID!
        status: String!
    }
    """

    # Re-create the type that the rollback removed, so AppSync accepts the delete
    client.create_type(apiId=api_id, definition=definition, format='SDL')

    # Remove the dangling resolver that blocks the next deploy
    client.delete_resolver(apiId=api_id,
                           typeName='ChangeInCondition',
                           fieldName='status')


if __name__ == "__main__":
    main()
```
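When several fields on the same missing type have dangling resolvers, the script can be generalized. A sketch under stated assumptions: `placeholder_sdl` and `delete_dangling_resolvers` are hypothetical helper names, the client is passed in so it can be a real `boto3.client("appsync")` or a stub, and it relies only on the two AppSync calls the script above already uses (`create_type` and `delete_resolver`). Like the script, it assumes that re-declaring the missing type is enough for AppSync to accept the deletes; deleting a resolver from a field that has none would raise an error, so list only the fields that actually have one.

```python
def placeholder_sdl(type_name, fields):
    """Build an SDL type definition from a {field_name: graphql_type} mapping."""
    body = "\n".join(f"  {name}: {gql_type}" for name, gql_type in fields.items())
    return f"type {type_name} {{\n{body}\n}}"


def delete_dangling_resolvers(client, api_id, type_name, fields, resolver_fields):
    """Re-create `type_name` in the API, then delete the resolvers still
    attached to `resolver_fields` on that type.

    `client` must expose the boto3 AppSync methods create_type and
    delete_resolver; `fields` is only used to declare the placeholder type.
    """
    client.create_type(apiId=api_id,
                       definition=placeholder_sdl(type_name, fields),
                       format="SDL")
    for field_name in resolver_fields:
        client.delete_resolver(apiId=api_id, typeName=type_name,
                               fieldName=field_name)


# Example call against a real API (hypothetical API id, needs AWS credentials):
# import boto3
# delete_dangling_resolvers(boto3.client("appsync"), "XXXX", "ChangeInCondition",
#                           {"id": "ID!", "status": "String!"}, ["status"])
```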

Same issue!

That's the second time I've run into this issue (7 months later).

A lot of time lost trying to find the correct resolver to delete (by pushing and waiting for rollbacks).

@mikeparisstuff I guess this should probably be handled directly by the AppSync team, but could we expect some workaround in Amplify (like cleaning dangling resolvers before running amplify push)? Or any ETA for the AppSync fix?

In addition to the time it takes to figure out the right thing to do, this is really scary when thinking about CD and if this happens during a deployment in production...

This is definitely the kind of issue that keeps me from recommending Amplify to people who are not CloudFormation "experts".

Experienced this issue when attempting to replace a resolver. The delete of the old resolver is not performed before the CLI attempts to add the replacement.

@adamup928 Can you provide more details on what you did to cause this? When replacing resolvers in CFN, it is generally recommended to create new fields & resolvers before removing old ones. This falls in line with GraphQL API evolution practices and prevents older clients from breaking (e.g. you might have native apps that users don't update as often) while allowing newer clients to target the new fields.

As an example, one way to cause this issue would be if I were trying to move from a stack with a resolver like this:

```
{
  "Resources": {
    "ResolverLogicalIdA": {
      "TypeName": "Query",
      "FieldName": "getPost"
      // ...
    },
    // ...
  }
}
```

to a stack with the same resolver but a different logical resource id:

{

"Resources": {

"ResolverLogicalIdB": {

"TypeName": "Query",

"FieldName": "getPost"

// ...

},

// ...

}

Since the logical ids of the resource blocks changed, CFN considers these distinct resources, and it is not guaranteed that the stack will delete the resource with logical id "ResolverLogicalIdA" before creating the resource with "ResolverLogicalIdB". Normally you could use a CFN DependsOn to specify that one resource depends on another, but since you are removing "ResolverLogicalIdA", there is no way to depend on it. If the stack tries to create "ResolverLogicalIdB" before deleting "ResolverLogicalIdA", you will see the error, because this clashes with AppSync's guarantee that a field has at most one resolver.

If you were instead to create a resource with a new logical id on a new field, you would not have this issue. For example, adding a resolver like this would not result in a clash:

```
{
  "Resources": {
    "ResolverLogicalIdB": {
      "TypeName": "Query",
      "FieldName": "getTopRatedPosts"
      // ...
    },
    // ...
  }
}
```

You would also not see this clash if the resource logical id were not being updated.

```
{
  "Resources": {
    "ResolverLogicalIdA": {
      "TypeName": "Query",
      "FieldName": "getPost"
      // ... e.g. update the mapping template.
    },
    // ...
  }
}
```

Since the logical resource id is the same, CFN will perform an UpdateResolver operation and the operation will succeed.
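The risky case above (same `TypeName`/`FieldName`, different logical id) can be detected before deploying by diffing the old and new templates. A minimal sketch, assuming templates are parsed into dicts and that `TypeName`/`FieldName` sit under each resolver's `Properties` as in a real `AWS::AppSync::Resolver` resource (the snippets above abbreviate this). `rename_clashes` and `resolver` are hypothetical helpers; the logical ids reuse the examples above.

```python
RESOLVER_TYPE = "AWS::AppSync::Resolver"


def resolver_fields(template):
    """Map each resolver's logical ID to its (TypeName, FieldName) pair."""
    fields = {}
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") == RESOLVER_TYPE:
            props = resource.get("Properties", {})
            fields[logical_id] = (props.get("TypeName"), props.get("FieldName"))
    return fields


def rename_clashes(old, new):
    """List (field, old_logical_id, new_logical_id) for every field that the
    new template assigns to a different logical ID than the old one did.
    CFN treats these as a delete plus a create with no guaranteed order."""
    old_owner = {field: lid for lid, field in resolver_fields(old).items()}
    return sorted(
        (field, old_owner[field], new_id)
        for new_id, field in resolver_fields(new).items()
        if field in old_owner and old_owner[field] != new_id
    )


def resolver(type_name, field_name):
    """Build a minimal resolver resource for the examples."""
    return {"Type": RESOLVER_TYPE,
            "Properties": {"TypeName": type_name, "FieldName": field_name}}


if __name__ == "__main__":
    old = {"Resources": {"ResolverLogicalIdA": resolver("Query", "getPost")}}
    renamed = {"Resources": {"ResolverLogicalIdB": resolver("Query", "getPost")}}
    added = {"Resources": {"ResolverLogicalIdA": resolver("Query", "getPost"),
                           "ResolverLogicalIdB": resolver("Query", "getTopRatedPosts")}}
    print(rename_clashes(old, renamed))
    # [(('Query', 'getPost'), 'ResolverLogicalIdA', 'ResolverLogicalIdB')]
    print(rename_clashes(old, added))  # []
    print(rename_clashes(old, old))    # []
```

The three calls mirror the three cases discussed above: a changed logical id on the same field clashes, while a new field or an in-place update does not.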

I encountered the same problem. I was trying to replace autogenerated resolvers with pipeline resolvers.

For example, my schema generates the queries below and attaches autogenerated resolvers to them:

```graphql
type Query {
  getFactory(pk: ID!, sk: ID!): Factory
  listFactorys(
    pk: ID,
    sk: ModelIDKeyConditionInput,
    filter: ModelFactoryFilterInput,
    limit: Int,
    nextToken: String,
    sortDirection: ModelSortDirection
  ): ModelFactoryConnection
}
```

Instead of having normal autogenerated resolvers I wanted to convert them into pipeline resolvers by adding a new function to each query.

MyNewFunction resolver --> autogenerated resolver --> result

What I tried: I changed the CustomResources.json file, added my function to it, and then redefined the autogenerated resolver as a pipeline resolver.

(I copied `GetFactoryResolver` from build/stacks and converted it into a pipeline resolver.)

```json
"GetBusinessPermissions": {
  "Type": "AWS::AppSync::FunctionConfiguration",
  "Properties": {
    "ApiId": {
      "Ref": "AppSyncApiId"
    },
    "Name": "getBusinessPermissions",
    "DataSourceName": "FactoryTable",
    "FunctionVersion": "2018-05-29",
    "RequestMappingTemplateS3Location": {
      "Fn::Sub": [
        "s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/Query.getBusinessPermissions.req.vtl",
        {
          "S3DeploymentBucket": { "Ref": "S3DeploymentBucket" },
          "S3DeploymentRootKey": { "Ref": "S3DeploymentRootKey" }
        }
      ]
    },
    "ResponseMappingTemplateS3Location": {
      "Fn::Sub": [
        "s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/Query.getBusinessPermissions.res.vtl",
        {
          "S3DeploymentBucket": { "Ref": "S3DeploymentBucket" },
          "S3DeploymentRootKey": { "Ref": "S3DeploymentRootKey" }
        }
      ]
    }
  }
},
"GetFactoryResolver": {
  "Type": "AWS::AppSync::Resolver",
  "Properties": {
    "ApiId": {
      "Ref": "AppSyncApiId"
    },
    "Kind": "PIPELINE",
    "FieldName": "getFactory",
    "TypeName": "Query",
    "PipelineConfig": {
      "Functions": [
        { "Fn::GetAtt": ["GetBusinessPermissions", "FunctionId"] }
      ]
    },
    "RequestMappingTemplateS3Location": {
      "Fn::Sub": [
        "s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",
        {
          "S3DeploymentBucket": { "Ref": "S3DeploymentBucket" },
          "S3DeploymentRootKey": { "Ref": "S3DeploymentRootKey" },
          "ResolverFileName": {
            "Fn::Join": [".", ["Query", "getFactory", "req", "vtl"]]
          }
        }
      ]
    },
    "ResponseMappingTemplateS3Location": {
      "Fn::Sub": [
        "s3://${S3DeploymentBucket}/${S3DeploymentRootKey}/resolvers/${ResolverFileName}",
        {
          "S3DeploymentBucket": { "Ref": "S3DeploymentBucket" },
          "S3DeploymentRootKey": { "Ref": "S3DeploymentRootKey" },
          "ResolverFileName": {
            "Fn::Join": [".", ["Query", "getFactory", "res", "vtl"]]
          }
        }
      ]
    }
  }
}
```

I get the error:

CREATE_FAILED GetFactoryResolver AWS::AppSync::Resolver Fri Nov 15 2019 16:20:20 GMT+0100 (Central European Standard Time) Only one resolver is allowed per field. (Service: AWSAppSync; Status Code: 400; Error Code: BadRequestException;)

Is there any way to achieve this? I am trying to protect autogenerated queries and mutations with some access permission rules before they get invoked.

@mikeparisstuff Is there any way to convert autogenerated resolvers into pipeline resolvers while deploying the GraphQL schema? It would be a really nice feature to be able to convert, or force my deployment to use, pipeline resolvers rather than unit resolvers.

@dahersoftware Did you find a resolution to your problem? I have the exact same issue. Thanks.

@joebri I had to create a different query type to make it work. In my case that is not the ideal solution I am aiming for, but it's okay for now.

For resolvers, the CloudFormation update behavior (for properties such as TypeName and FieldName) is Replacement.

It creates the new resolver first and then deletes the old one, but it cannot create the new resolver because a resolver with the exact same FieldName already exists on that type.
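The ordering problem can be reproduced with a tiny in-memory model. This is a toy for illustration only, not AppSync or CloudFormation code: `ToyAppSync` enforces nothing but the one-resolver-per-(type, field) rule, and the names `replace_resolver`, `try_order`, `ResolverOld` and `ResolverNew` are hypothetical.

```python
class FieldAlreadyResolved(Exception):
    """Raised when a second resolver is attached to the same field."""


class ToyAppSync:
    """In-memory stand-in that only enforces one resolver per field."""

    def __init__(self):
        self.resolvers = {}  # (type_name, field_name) -> resolver id

    def create_resolver(self, resolver_id, type_name, field_name):
        key = (type_name, field_name)
        if key in self.resolvers:
            raise FieldAlreadyResolved("Only one resolver is allowed per field.")
        self.resolvers[key] = resolver_id

    def delete_resolver(self, resolver_id):
        self.resolvers = {k: v for k, v in self.resolvers.items() if v != resolver_id}


def replace_resolver(api, old_id, new_id, type_name, field_name, create_first):
    """Swap the resolver on a field, either create-then-delete (the
    Replacement order described above) or delete-then-create."""
    if create_first:
        api.create_resolver(new_id, type_name, field_name)
        api.delete_resolver(old_id)
    else:
        api.delete_resolver(old_id)
        api.create_resolver(new_id, type_name, field_name)


def try_order(create_first):
    """Run one replacement on a fresh toy API and report the outcome."""
    api = ToyAppSync()
    api.create_resolver("ResolverOld", "Query", "getLocations")
    try:
        replace_resolver(api, "ResolverOld", "ResolverNew",
                         "Query", "getLocations", create_first)
        return "ok"
    except FieldAlreadyResolved as err:
        return str(err)


if __name__ == "__main__":
    print(try_order(create_first=True))   # Only one resolver is allowed per field.
    print(try_order(create_first=False))  # ok
```

In this model, the workaround below (moving the field to a new type such as QueryV2) also succeeds, because the key `("QueryV2", "getLocations")` differs from the old `("QueryV1", "getLocations")`.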

The workaround is to create a new query type and make sure the resolver depends on the GraphQLSchema resource. For example, change:

```yaml
GraphQLSchema:
  Type: AWS::AppSync::GraphQLSchema
  Properties:
    ApiId: !GetAtt GraphQLApi.ApiId
    Definition: |
      type Location {
        name: String!
      }

      type QueryV1 {
        getLocations: [Location]
      }

      schema {
        query: QueryV1
      }

OldGetLocationsResolver:
  Type: AWS::AppSync::Resolver
  DependsOn: GraphQLSchema
  Properties:
    ApiId: !GetAtt GraphQLApi.ApiId
    TypeName: QueryV1
    FieldName: getLocations
    RequestMappingTemplate: ....
    ResponseMappingTemplate: ....
```

to be:

```yaml
GraphQLSchema:
  Type: AWS::AppSync::GraphQLSchema
  Properties:
    ApiId: !GetAtt GraphQLApi.ApiId
    Definition: |
      type Location {
        name: String!
      }

      type QueryV2 {
        getLocations: [Location]
      }

      schema {
        query: QueryV2
      }

NewGetLocationsResolver:
  Type: AWS::AppSync::Resolver
  DependsOn: GraphQLSchema
  Properties:
    ApiId: !GetAtt GraphQLApi.ApiId
    TypeName: QueryV2
    FieldName: getLocations
    RequestMappingTemplate: ....
    ResponseMappingTemplate: ....
```

I am facing the same issue and am not sure how any of these solutions apply to me.

I am not trying to replace resolvers; I am trying to override the autogenerated resolver.

How in heaven do I keep getting this error?

Issue #3002

I hope @mikeparisstuff's answer does not apply here, as the basic definition of 'overriding' autogenerated resolvers is just that: 'override'!

I have the same error as @c0dingarchit3ct :(

This is not the first time, and usually the best way to get around the problem was to build another API.

Anyway, my project is too big now, and I would like to know whether it is possible to solve this error once and for all!

This time, I encountered this error while experimenting with the @key directive in _schema.graphql_. Specifically, I was trying to change the primary key... but oops! I didn't think it was so risky ^^'' (I think the CLI really needs to raise an error on push, before any operations start.)

I've just tried to push a clean version of the GraphQL schema, but it doesn't solve the problem. I can't find anything clear in the documentation, and here on GitHub there are so many partial solutions that I don't know which is best (if one exists).

EDIT:

I solved it this way: I pushed all tables without connections and relations, then pushed the connections one by one. Now it's OK, but if a better solution exists... well, that would be great.

@mikeparisstuff I tried going to the AppSync console, but the specific resolver doesn't even exist there, which makes me think it is held in some kind of cache. I also can't find a pattern, since a different resolver name appears every time.

In my case, I deleted old models that were no longer needed in our codebase, but then found out that we need them after all, until we release a new version of our services after running some tests. We started running into this kind of problem when we reverted our models and pushed again.


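@mikeparisstuff Is your earlier advice (add a field with the same name in the AppSync console, then delete the resolver) still up to date? I went to the AppSync console and I cannot delete any resolver; that option is not available. Perhaps this is due to the new UI? Help needed here.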

Deleting the resolver from the connection stack in the build folder always solves the issue, but I have to do it every time. Is there some kind of cache in the CloudFormation node_module function that gets S3BackendZipFileName during a push?

If you are facing issues about "Only one resolver is allowed per field..." when pushing an update relating to a connection in your schema, then maybe try the following:

- In your schema, keep the model, but change the offending resolver key from a connection type to any primitive type, e.g. String, then run amplify push.

- Once pushed, delete that key from your schema and push again (this is so as to wipe it out from the cloud).

- Finally, add the key back again as the intended connection type and push.

Worked for me after that. Good luck!

I opted for a more brute-force approach to solve the same "Only one resolver is allowed per field" problem:

```
amplify remove api
amplify push
amplify add api
amplify push
```

Works 100% of the time. Don't forget to copy the schema first.


ABSOLUTELY DO NOT DO THIS IF YOU HAVE DATA IN YOUR DYNAMODB DATABASE.

This will delete all tables and remove all data. This can only work if your project is in a very early stage and does not have data already.


Yes, you are right. I should have mentioned this in my reply. You will lose everything. I am at an early stage, so this works for me.

I found a solution for this error without losing the data.

Do the following for the affected types:

- Set the generated operations to null inside @model, then run `amplify push`:

  ```graphql
  type Name @model(queries: null, mutations: null, subscriptions: null) {...}
  ```

- Remove the null operations from @model again, then run `amplify push`:

  ```graphql
  type Name @model {...}
  ```

Good luck!
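The two schema edits above can be scripted instead of done by hand. A minimal sketch, assuming the type is declared as `type Name @model` with a bare @model (no existing arguments) somewhere in schema.graphql; `with_null_operations` and `without_null_operations` are hypothetical names, and you still run amplify push after each step.

```python
import re

NULL_OPS = "@model(queries: null, mutations: null, subscriptions: null)"


def with_null_operations(schema, type_name):
    """Step 1: turn the bare @model on `type_name` into @model with all
    generated operations set to null. Other types are left untouched."""
    pattern = re.compile(rf"(\btype\s+{re.escape(type_name)}\s+)@model\b(?!\s*\()")
    return pattern.sub(lambda m: m.group(1) + NULL_OPS, schema, count=1)


def without_null_operations(schema, type_name):
    """Step 2: restore the bare @model on `type_name`."""
    pattern = re.compile(rf"(\btype\s+{re.escape(type_name)}\s+){re.escape(NULL_OPS)}")
    return pattern.sub(lambda m: m.group(1) + "@model", schema, count=1)


if __name__ == "__main__":
    schema = "type Name @model {\n  id: ID!\n}"
    step1 = with_null_operations(schema, "Name")
    print(step1)  # type Name @model(queries: null, mutations: null, subscriptions: null) { ...
    print(without_null_operations(step1, "Name") == schema)  # True
```

Using a function as the replacement avoids having to escape the parentheses in the directive text; `\s+` also matches newlines, so multi-line declarations such as `type WfItem` followed by `@model` on the next line are covered.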

I also experienced this issue.


Working perfectly.

You saved me a lot of hours.

I had lots of errors that persisted even after losing the data. Try either running the app in incognito mode (this can be annoying) or clearing all site data for localhost in your browser settings from time to time, especially if you are getting lots of errors.

I created a new environment and ran into this issue. I commented out the @searchable transform in all models and it worked. I then uncommented it and re-deployed with @searchable.


@yonatanganot I tried this solution, but the following error appeared:


Yep, I get the same here. Since you removed the operations (queries, mutations) on the model, you run into this error.

- I also tried @clodal's approach, but it results in the same "Only one resolver is allowed per field" error.
- You also run into problems if you have a custom resolver set up.
- I also tried removing the schema models and adding a test table -> push -> adding the schema and custom resolver back -> push: fail.
- The only way that has worked for me so far is the dump-everything approach described here: https://github.com/aws-amplify/amplify-cli/issues/682#issuecomment-598624485

(which really shouldn't be the current solution at all. Dumping is OK while developing, but you waste so much time with it...)

I think this issue will avoid most of the problems here (hopefully): https://github.com/aws-amplify/amplify-cli/issues/2384

@iqaldebaran @regenrek

What is your schema structure?


@yonatanganot, my schema is as follows:

When I comment the "WfItem" and its connection in "WfNode", the schema works without problems, but when I add the "WfItem", it throws the error.

```graphql
type TKRProject
  @model
  @auth(rules: [{ allow: owner }, { allow: groups, groups: ["Admin"] }]) {
  id: ID!
  projectName: String!
  description: String
  studyCoordinator: String
  facility: String
  company: String
  plant: String
  location: String
  lat: Float
  lon: Float
  createdAt: String
  updatedAt: String
  whatIf: WhatIf @connection(name: "WhatIfModel")
}

type WhatIf
  @model
  @auth(rules: [{ allow: owner }, { allow: groups, groups: ["Admin"] }]) {
  id: ID!
  name: String
  project: TKRProject! @connection(name: "WhatIfModel")
  nodesWhatIf: [WfNode] @connection(name: "NodesWhatIf")
}

type WfNode
  @model
  @auth(rules: [{ allow: owner }, { allow: groups, groups: ["Admin"] }]) {
  id: ID!
  description: String
  intention: String
  boundary: String
  designConditions: String
  operatingConditions: String
  hazardMaterials: String
  drawing: String
  comments: String
  whatIf: WhatIf! @connection(name: "NodesWhatIf")
  itemsWf: [WfItem] @connection(name: "ItemsNode")
}

type WfItem
  @model
  @auth(rules: [{ allow: owner }, { allow: groups, groups: ["Admin"] }]) {
  id: ID!
  whatIfQuestion: String
  node: WfNode! @connection(name: "ItemsNode")
}
```

@yonatanganot @regenrek

My solution to my problem, without deleting data, was:

- In schema.graphql, rename the fields involved in the connection:
  - Change "itemsWf" to "nodeItemsWf" and "node" to "itemWfNode" -> amplify push -> OK! All green!

Another, better solution:

1. Comment out all connections or relationships with "#".
2. Run amplify push api.
3. Uncomment everything commented out above.
4. Run amplify push api.
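Steps 1 and 3 of that list (and the earlier advice in this thread to push first without connections) are plain text edits, so they can be scripted. A sketch, assuming each @connection field sits on a single line of schema.graphql; `comment_out_connections` and `uncomment_connections` are hypothetical names. Note that step 3 would also revive any @connection line that was already commented out before step 1.

```python
def comment_out_connections(schema):
    """Step 1: prefix every uncommented line containing @connection with '# ',
    keeping its indentation so step 3 can restore it exactly."""
    lines = []
    for line in schema.splitlines():
        stripped = line.lstrip()
        if "@connection" in stripped and not stripped.startswith("#"):
            indent = line[: len(line) - len(stripped)]
            lines.append(f"{indent}# {stripped}")
        else:
            lines.append(line)
    return "\n".join(lines)


def uncomment_connections(schema):
    """Step 3: remove the '# ' prefix from commented @connection lines."""
    lines = []
    for line in schema.splitlines():
        stripped = line.lstrip()
        if stripped.startswith("# ") and "@connection" in stripped:
            indent = line[: len(line) - len(stripped)]
            lines.append(indent + stripped[2:])
        else:
            lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    schema = (
        "type WfItem @model {\n"
        "  id: ID!\n"
        '  node: WfNode! @connection(name: "ItemsNode")\n'
        "}"
    )
    stage1 = comment_out_connections(schema)
    print(stage1)  # the node field is now a GraphQL comment
    print(uncomment_connections(stage1) == schema)  # True
```

Between the two calls you would write `stage1` to schema.graphql and run amplify push, then write the restored schema back and push again.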
